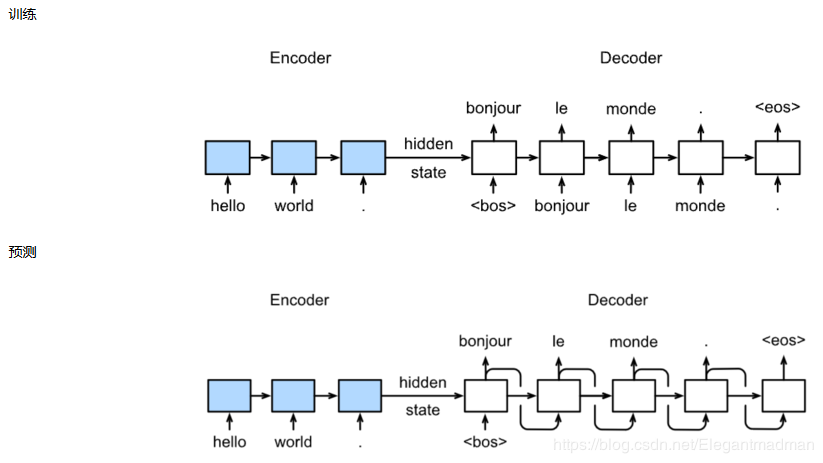

Machine Translation and Its Technical Implementation

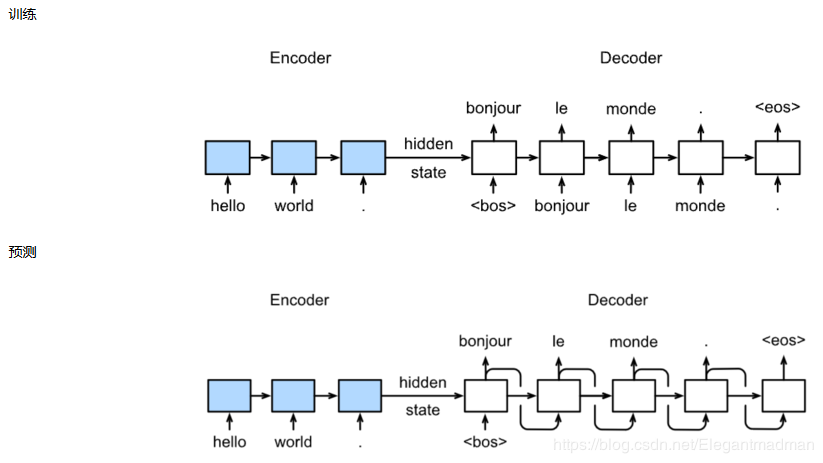

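Machine translation (MT) is the automatic translation of a piece of text from one language into another. Solving this problem with neural networks is usually called neural machine translation (NMT). Its main characteristics: the output is a sequence of words rather than a single word, and the length of the output sequence may differ from the length of the source sequence.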

Data preprocessing

Removing special characters

Author: 借此一生

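    def preprocess_raw(text):
        # Replace non-breaking spaces (U+202F, U+00A0) with ordinary spaces.
        text = text.replace('\u202f', ' ').replace('\xa0', ' ')
        out = ''
        for i, char in enumerate(text.lower()):
            # Insert a space before punctuation that directly follows a word.
            if char in (',', '!', '.') and i > 0 and text[i-1] != ' ':
                out += ' '
            out += char
        return out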
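As a quick check of the cleaning step, the sketch below runs the same logic on a two-line sample. The sample string and the name `clean` are made up for illustration and are not taken from the original dataset.

```python
def clean(text):
    """Same logic as preprocess_raw, repeated so this example runs on its own."""
    text = text.replace('\u202f', ' ').replace('\xa0', ' ')
    out = ''
    for i, char in enumerate(text.lower()):
        # Add a space before punctuation that is glued to the previous word.
        if char in (',', '!', '.') and i > 0 and text[i - 1] != ' ':
            out += ' '
        out += char
    return out

# Hypothetical sample: English and French separated by a tab,
# with a narrow no-break space (U+202F) before the French "!".
sample = "Go.\tVa\u202f!\nHi.\tSalut\u202f!"
cleaned = clean(sample)
print(repr(cleaned))
```

The text is lowercased, the no-break spaces become ordinary spaces, and punctuation ends up as its own space-separated token.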

Tokenization

    num_examples = 50000
    source, target = [], []
    for i, line in enumerate(text.split('\n')):
        if i > num_examples:
            break
        # Each line holds a source sentence and its translation, separated by a tab.
        parts = line.split('\t')
        if len(parts) >= 2:
            source.append(parts[0].split(' '))
            target.append(parts[1].split(' '))
    source[0:3], target[0:3]
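To make the result of this step concrete, here is a small sketch that tokenizes a made-up two-line string in the same way. The variable names `raw`, `src_tokens` and `tgt_tokens` are illustrative only.

```python
# Hypothetical cleaned text: one "source<TAB>target" pair per line.
raw = "go .\tva !\nhi .\tsalut !"

src_tokens, tgt_tokens = [], []
for line in raw.split('\n'):
    parts = line.split('\t')
    if len(parts) >= 2:
        # Split each sentence on single spaces to get word-level tokens.
        src_tokens.append(parts[0].split(' '))
        tgt_tokens.append(parts[1].split(' '))

print(src_tokens)
print(tgt_tokens)
```

Each sentence becomes a list of tokens, and the two lists stay aligned index by index.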

Building the vocabulary

    def build_vocab(tokens):
        # Flatten the list of token lists into a single list of tokens.
        tokens = [token for line in tokens for token in line]
        return d2l.data.base.Vocab(tokens, min_freq=3, use_special_tokens=True)
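The post does not show the `Vocab` class itself. The sketch below is a hypothetical stand-in, not d2l's actual code, that illustrates what such a class plausibly does. It assumes that `min_freq` drops rare tokens, that `use_special_tokens=True` reserves indices for `<pad>`, `<bos>`, `<eos>` and `<unk>`, and that indexing with a list returns a list of indices.

```python
import collections

class SimpleVocab:
    """Hypothetical stand-in for a vocabulary class; not the d2l implementation."""

    def __init__(self, tokens, min_freq=0, use_special_tokens=False):
        counter = collections.Counter(tokens)
        # Sort by frequency (descending), then alphabetically for determinism.
        token_freqs = sorted(counter.items(), key=lambda kv: (-kv[1], kv[0]))
        if use_special_tokens:
            # Assumed index layout for the special tokens.
            self.pad, self.bos, self.eos, self.unk = 0, 1, 2, 3
            self.idx_to_token = ['<pad>', '<bos>', '<eos>', '<unk>']
        else:
            self.unk = 0
            self.idx_to_token = ['<unk>']
        # Keep only tokens that occur at least min_freq times.
        self.idx_to_token += [t for t, f in token_freqs if f >= min_freq]
        self.token_to_idx = {t: i for i, t in enumerate(self.idx_to_token)}

    def __len__(self):
        return len(self.idx_to_token)

    def __getitem__(self, tokens):
        # A list of tokens maps to a list of indices; unknown tokens map to <unk>.
        if isinstance(tokens, (list, tuple)):
            return [self[t] for t in tokens]
        return self.token_to_idx.get(tokens, self.unk)

vocab = SimpleVocab(['a', 'a', 'a', 'b', 'b', 'b', 'c'],
                    min_freq=3, use_special_tokens=True)
print(len(vocab), vocab[['a', 'c']])
```

Here `'c'` appears only once, so with `min_freq=3` it falls back to the `<unk>` index.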

Loading the dataset

    import torch
    from torch.utils import data

    def pad(line, max_len, padding_token):
        # Truncate long sentences and right-pad short ones to exactly max_len tokens.
        if len(line) > max_len:
            return line[:max_len]
        return line + [padding_token] * (max_len - len(line))

    def build_array(lines, vocab, max_len, is_source):
        lines = [vocab[line] for line in lines]
        if not is_source:
            # Target sentences are wrapped in <bos> ... <eos>.
            lines = [[vocab.bos] + line + [vocab.eos] for line in lines]
        array = torch.tensor([pad(line, max_len, vocab.pad) for line in lines])
        valid_len = (array != vocab.pad).sum(1)  # count non-padding tokens along dimension 1
        return array, valid_len

    def load_data_nmt(batch_size, max_len):  # This function is saved in d2l.
        src_vocab, tgt_vocab = build_vocab(source), build_vocab(target)
        src_array, src_valid_len = build_array(source, src_vocab, max_len, True)
        tgt_array, tgt_valid_len = build_array(target, tgt_vocab, max_len, False)
        train_data = data.TensorDataset(src_array, src_valid_len, tgt_array, tgt_valid_len)
        train_iter = data.DataLoader(train_data, batch_size, shuffle=True)
        return src_vocab, tgt_vocab, train_iter
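The padding and valid-length logic is easier to see on a tiny input. The sketch below uses made-up token indices and assumes a padding index of 0. It repeats `pad` so that it runs on its own.

```python
import torch

PAD = 0  # assumed padding index for this example

def pad(line, max_len, padding_token):
    # Truncate to max_len, or right-pad with padding_token up to max_len.
    if len(line) > max_len:
        return line[:max_len]
    return line + [padding_token] * (max_len - len(line))

# Two hypothetical index sequences: one shorter and one longer than max_len=4.
lines = [[5, 6, 7], [8, 9, 10, 11, 12, 13]]
array = torch.tensor([pad(line, 4, PAD) for line in lines])
valid_len = (array != PAD).sum(1)  # number of real tokens per row

print(array)
print(valid_len)
```

The short sentence is padded to length 4 and reports 3 valid tokens. The long one is truncated to 4 tokens and reports 4.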

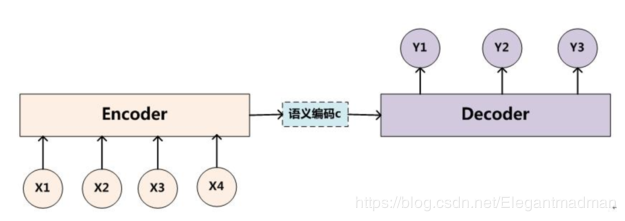

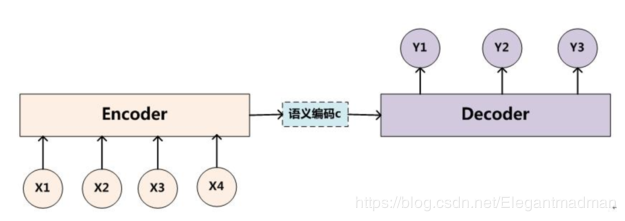

Implementation mechanism, illustrated

Encoder

    from torch import nn

    class Seq2SeqEncoder(d2l.Encoder):
        def __init__(self, vocab_size, embed_size, num_hiddens, num_layers,
                     dropout=0, **kwargs):
            super(Seq2SeqEncoder, self).__init__(**kwargs)
            self.num_hiddens = num_hiddens
            self.num_layers = num_layers
            self.embedding = nn.Embedding(vocab_size, embed_size)
            self.rnn = nn.LSTM(embed_size, num_hiddens, num_layers, dropout=dropout)

        def begin_state(self, batch_size, device):
            # Zero-initialized hidden state and memory cell.
            return [torch.zeros(size=(self.num_layers, batch_size, self.num_hiddens), device=device),
                    torch.zeros(size=(self.num_layers, batch_size, self.num_hiddens), device=device)]

        def forward(self, X, *args):
            X = self.embedding(X)  # X shape: (batch_size, seq_len, embed_size)
            X = X.transpose(0, 1)  # the RNN expects time as the first axis
            # state = self.begin_state(X.shape[1], device=X.device)
            out, state = self.rnn(X)  # with no state passed, nn.LSTM starts from zeros
            # The shape of out is (seq_len, batch_size, num_hiddens).
            # state contains the hidden state and the memory cell
            # of the last time step, each of shape (num_layers, batch_size, num_hiddens)
            return out, state

    encoder = Seq2SeqEncoder(vocab_size=10, embed_size=8, num_hiddens=16, num_layers=2)
    X = torch.zeros((4, 7), dtype=torch.long)
    output, state = encoder(X)
    output.shape, len(state), state[0].shape, state[1].shape
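The shapes the encoder returns follow directly from `nn.Embedding` and `nn.LSTM`. The sketch below reproduces them without the d2l base class, using the same sizes as the call above (batch of 4, sequence length 7, 16 hidden units, 2 layers).

```python
import torch
from torch import nn

embedding = nn.Embedding(10, 8)          # vocab_size=10, embed_size=8
rnn = nn.LSTM(8, 16, num_layers=2)       # num_hiddens=16

X = torch.zeros((4, 7), dtype=torch.long)    # (batch_size, seq_len)
emb = embedding(X).transpose(0, 1)           # (seq_len, batch_size, embed_size)
out, (h, c) = rnn(emb)

# out: one vector per time step from the top layer.
# h, c: last-step hidden state and memory cell for every layer.
print(out.shape, h.shape, c.shape)
```

So `output` is `(7, 4, 16)`, and the state is a pair of tensors, each `(2, 4, 16)`.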

Loss function

    def SequenceMask(X, X_len, value=0):
        maxlen = X.size(1)
        # mask[i, j] is True while position j lies inside the valid length of row i.
        mask = torch.arange(maxlen)[None, :].to(X_len.device) < X_len[:, None]
        X[~mask] = value  # overwrite the padded positions in place
        return X

    X = torch.tensor([[1, 2, 3], [4, 5, 6]])
    SequenceMask(X, torch.tensor([1, 2]))

    class MaskedSoftmaxCELoss(nn.CrossEntropyLoss):
        # pred shape: (batch_size, seq_len, vocab_size)
        # label shape: (batch_size, seq_len)
        # valid_length shape: (batch_size, )
        def forward(self, pred, label, valid_length):
            # the sample weights shape should be (batch_size, seq_len)
            weights = torch.ones_like(label)
            weights = SequenceMask(weights, valid_length).float()
            self.reduction = 'none'
            # CrossEntropyLoss expects the class dimension second: (batch_size, vocab_size, seq_len)
            output = super(MaskedSoftmaxCELoss, self).forward(pred.transpose(1, 2), label)
            return (output * weights).mean(dim=1)
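The effect of the mask on the loss can be checked with uniform predictions. The sketch below is a functional restatement of the same idea (the name `masked_ce` is illustrative), not the class above. With 10 equally likely classes, every unmasked token contributes ln(10) ≈ 2.3026.

```python
import torch
import torch.nn.functional as F

def masked_ce(pred, label, valid_length):
    # pred: (batch, seq_len, vocab); label: (batch, seq_len); valid_length: (batch,)
    seq_len = label.size(1)
    mask = torch.arange(seq_len, device=label.device)[None, :] < valid_length[:, None]
    # cross_entropy wants the class dimension second: (batch, vocab, seq_len).
    per_token = F.cross_entropy(pred.transpose(1, 2), label, reduction='none')
    # Zero out padded positions, then average over the sequence axis.
    return (per_token * mask.float()).mean(dim=1)

loss = masked_ce(torch.ones(3, 4, 10),
                 torch.ones(3, 4, dtype=torch.long),
                 torch.tensor([4, 3, 0]))
print(loss)
```

The three rows have 4, 3 and 0 valid tokens, so the losses are ln(10), 0.75 · ln(10) and 0.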

Training

    import time
    from torch import optim

    def train_ch7(model, data_iter, lr, num_epochs, device):  # Saved in d2l
        model.to(device)
        optimizer = optim.Adam(model.parameters(), lr=lr)
        loss = MaskedSoftmaxCELoss()
        tic = time.time()
        for epoch in range(1, num_epochs+1):
            l_sum, num_tokens_sum = 0.0, 0.0
            for batch in data_iter:
                optimizer.zero_grad()
                X, X_vlen, Y, Y_vlen = [x.to(device) for x in batch]
                # Teacher forcing: feed Y without its last token, predict Y shifted by one.
                Y_input, Y_label, Y_vlen = Y[:,:-1], Y[:,1:], Y_vlen-1
                Y_hat, _ = model(X, Y_input, X_vlen, Y_vlen)
                l = loss(Y_hat, Y_label, Y_vlen).sum()
                l.backward()
                with torch.no_grad():
                    d2l.grad_clipping_nn(model, 5, device)
                num_tokens = Y_vlen.sum().item()
                optimizer.step()
                l_sum += l.sum().item()
                num_tokens_sum += num_tokens
            if epoch % 50 == 0:
                print("epoch {0:4d},loss {1:.3f}, time {2:.1f} sec".format(
                      epoch, (l_sum/num_tokens_sum), time.time()-tic))
                tic = time.time()
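The line `Y_input, Y_label, Y_vlen = Y[:,:-1], Y[:,1:], Y_vlen-1` in the training loop is the teacher-forcing shift. A minimal sketch on a made-up target batch follows. The special-token indices are assumptions for this example.

```python
import torch

BOS, EOS, PAD = 1, 2, 0  # assumed special-token indices

# Two hypothetical target sequences, already wrapped in <bos>/<eos> and padded.
Y = torch.tensor([[BOS, 7, 8, EOS, PAD],
                  [BOS, 9, EOS, PAD, PAD]])
Y_vlen = torch.tensor([4, 3])

# The decoder input drops the last position, the label drops <bos>,
# and each valid length shrinks by one to match the shifted label.
Y_input, Y_label, Y_vlen = Y[:, :-1], Y[:, 1:], Y_vlen - 1

print(Y_input)
print(Y_label)
print(Y_vlen)
```

At every step the decoder sees the ground-truth token up to position t and is scored on the token at position t+1.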

    embed_size, num_hiddens, num_layers, dropout = 32, 32, 2, 0.0
    batch_size, num_examples, max_len = 64, 1e3, 10
    lr, num_epochs, ctx = 0.005, 300, d2l.try_gpu()
    src_vocab, tgt_vocab, train_iter = d2l.load_data_nmt(
        batch_size, max_len, num_examples)
    encoder = Seq2SeqEncoder(
        len(src_vocab), embed_size, num_hiddens, num_layers, dropout)
    decoder = Seq2SeqDecoder(
        len(tgt_vocab), embed_size, num_hiddens, num_layers, dropout)
    model = d2l.EncoderDecoder(encoder, decoder)
    train_ch7(model, train_iter, lr, num_epochs, ctx)
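The training script uses `Seq2SeqDecoder`, which this post does not list. The sketch below shows what a decoder without attention could look like, assuming it mirrors the encoder (embedding, LSTM, then a linear layer over the vocabulary) and starts from the encoder's final state. The class name `Seq2SeqDecoderSketch` is hypothetical. It subclasses `nn.Module` rather than `d2l.Decoder` so that it runs on its own.

```python
import torch
from torch import nn

class Seq2SeqDecoderSketch(nn.Module):
    """Illustrative decoder; an assumption, not the author's omitted code."""

    def __init__(self, vocab_size, embed_size, num_hiddens, num_layers, dropout=0.0):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, embed_size)
        self.rnn = nn.LSTM(embed_size, num_hiddens, num_layers, dropout=dropout)
        self.dense = nn.Linear(num_hiddens, vocab_size)

    def init_state(self, enc_outputs, *args):
        # enc_outputs is (out, state); reuse the encoder's final (h, c).
        return enc_outputs[1]

    def forward(self, X, state):
        X = self.embedding(X).transpose(0, 1)    # (seq_len, batch, embed_size)
        out, state = self.rnn(X, state)
        out = self.dense(out).transpose(0, 1)    # (batch, seq_len, vocab_size)
        return out, state

decoder = Seq2SeqDecoderSketch(vocab_size=10, embed_size=8, num_hiddens=16, num_layers=2)
state = (torch.zeros(2, 4, 16), torch.zeros(2, 4, 16))  # stand-in for the encoder state
Y_hat, new_state = decoder(torch.zeros((4, 7), dtype=torch.long), state)
print(Y_hat.shape, new_state[0].shape)
```

The output has one score per vocabulary entry at each target position, which is the `(batch_size, seq_len, vocab_size)` shape the masked loss expects.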

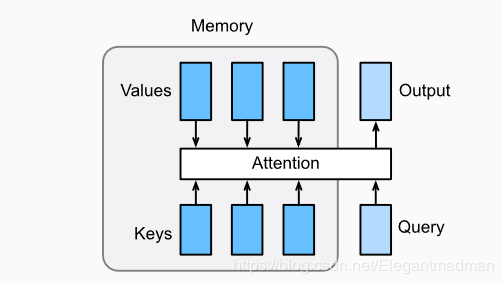

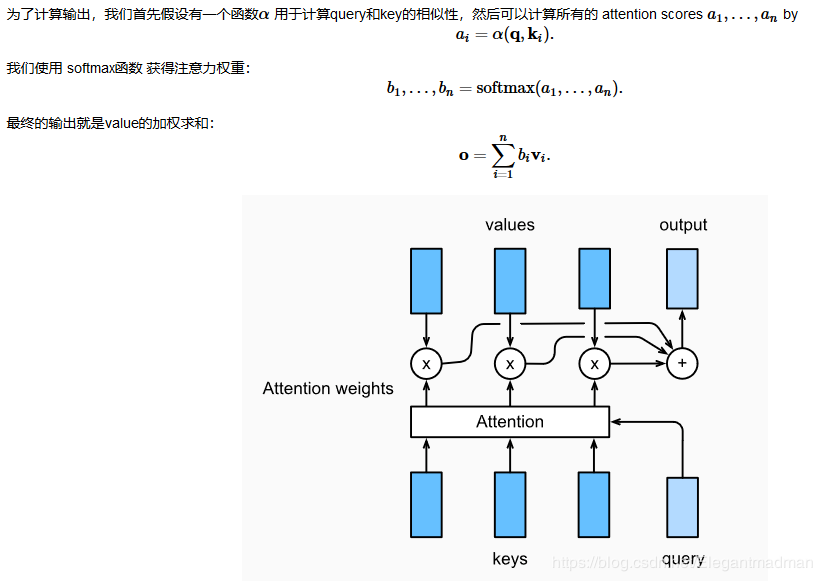

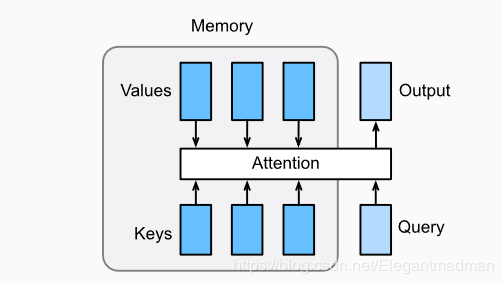

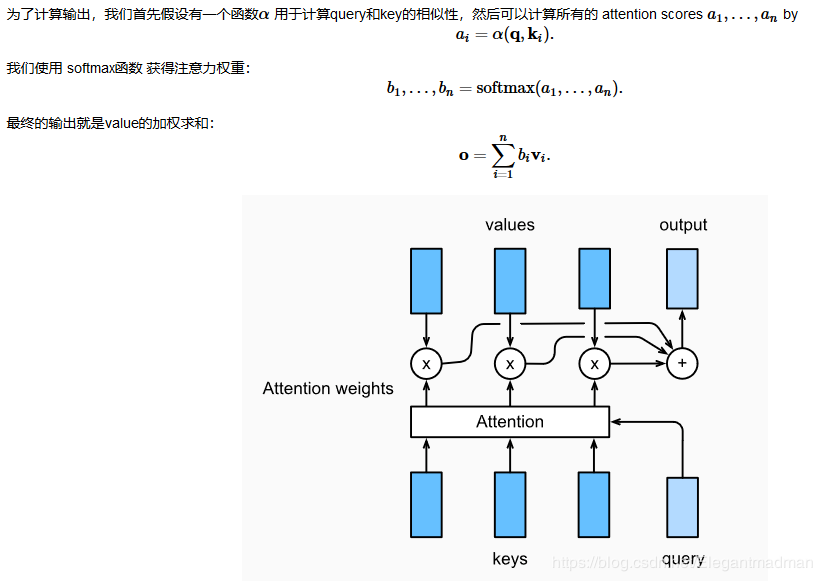

Adding the attention mechanism

Attention mechanism, illustrated

Code implementation

Masked softmax

    def SequenceMask(X, X_len, value=-1e6):
        maxlen = X.size(1)
        # mask is True for positions at or beyond each row's valid length;
        # it is built on X's device so the code also works on a GPU.
        mask = torch.arange(maxlen, dtype=torch.float, device=X.device)[None, :] >= X_len[:, None].to(X.device)
        X[mask] = value
        return X

    def masked_softmax(X, valid_length):
        # X: 3-D tensor, valid_length: 1-D or 2-D tensor
        softmax = nn.Softmax(dim=-1)
        if valid_length is None:
            return softmax(X)
        else:
            shape = X.shape
            if valid_length.dim() == 1:
                # Repeat each length once per query, e.g. [2, 3] -> [2, 2, 3, 3].
                valid_length = torch.repeat_interleave(valid_length.float(), shape[1], dim=0)
            else:
                valid_length = valid_length.reshape((-1,))
            # fill masked elements with a large negative, whose exp is 0
            X = SequenceMask(X.reshape((-1, shape[-1])), valid_length)
            return softmax(X).reshape(shape)
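A compact way to see what masked softmax produces is to feed it all-zero scores. The sketch below is a functional variant written for illustration (the name `masked_softmax_sketch` is not from the post). It uses `masked_fill` instead of in-place assignment and assumes a 1-D `valid_length`.

```python
import torch

def masked_softmax_sketch(scores, valid_length):
    # scores: (batch, num_queries, num_keys); valid_length: (batch,)
    num_keys = scores.size(-1)
    positions = torch.arange(num_keys, device=scores.device)
    keep = positions[None, None, :] < valid_length[:, None, None]
    # Masked positions get a large negative score, so their exp is 0.
    return torch.softmax(scores.masked_fill(~keep, -1e6), dim=-1)

weights = masked_softmax_sketch(torch.zeros(2, 2, 4), torch.tensor([2, 3]))
print(weights)
```

With equal scores, the first example spreads its weight evenly over 2 keys and the second over 3. The masked keys receive zero weight.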

Dot-product attention implementation

    import math

    # Save to the d2l package.
    class DotProductAttention(nn.Module):
        def __init__(self, dropout, **kwargs):
            super(DotProductAttention, self).__init__(**kwargs)
            self.dropout = nn.Dropout(dropout)

        # query: (batch_size, #queries, d)
        # key: (batch_size, #kv_pairs, d)
        # value: (batch_size, #kv_pairs, dim_v)
        # valid_length: either (batch_size, ) or (batch_size, xx)
        def forward(self, query, key, value, valid_length=None):
            d = query.shape[-1]
            # Swap the last two dimensions of key, then scale the scores by sqrt(d).
            scores = torch.bmm(query, key.transpose(1, 2)) / math.sqrt(d)
            attention_weights = self.dropout(masked_softmax(scores, valid_length))
            # print("attention_weight\n", attention_weights)  # debug output
            return torch.bmm(attention_weights, value)
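Scaled dot-product attention computes softmax(QKᵀ/√d)·V with padded keys masked out. The sketch below is a function-style restatement for illustration (no dropout, 1-D `valid_length` assumed). Because all keys are identical, the output is simply the mean of the first `valid_length` value rows.

```python
import math
import torch

def dot_product_attention(query, key, value, valid_length):
    # query: (batch, q, d); key: (batch, kv, d); value: (batch, kv, dim_v)
    d = query.shape[-1]
    scores = torch.bmm(query, key.transpose(1, 2)) / math.sqrt(d)
    keep = torch.arange(key.size(1))[None, None, :] < valid_length[:, None, None]
    weights = torch.softmax(scores.masked_fill(~keep, -1e6), dim=-1)
    return torch.bmm(weights, value)

query = torch.ones(2, 1, 2)
key = torch.ones(2, 10, 2)
# Value row i is [4i, 4i+1, 4i+2, 4i+3], the same for both batch entries.
value = torch.arange(40, dtype=torch.float).view(1, 10, 4).repeat(2, 1, 1)
out = dot_product_attention(query, key, value, torch.tensor([2, 6]))
print(out)
```

The first batch entry averages value rows 0 and 1, giving `[2, 3, 4, 5]`. The second averages rows 0 through 5, giving `[10, 11, 12, 13]`.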

MLP attention

    # Save to the d2l package.
    class MLPAttention(nn.Module):
        def __init__(self, units, ipt_dim, dropout, **kwargs):
            super(MLPAttention, self).__init__(**kwargs)
            # nn.Linear acts on the last dimension, so query and key keep their 3-D shapes.
            self.W_k = nn.Linear(ipt_dim, units, bias=False)
            self.W_q = nn.Linear(ipt_dim, units, bias=False)
            self.v = nn.Linear(units, 1, bias=False)
            self.dropout = nn.Dropout(dropout)

        def forward(self, query, key, value, valid_length):
            # Project the query with W_q and the key with W_k.
            query, key = self.W_q(query), self.W_k(key)
            # expand query to (batch_size, #queries, 1, units), and key to
            # (batch_size, 1, #kv_pairs, units), then add them with broadcasting.
            # tanh is the nonlinearity of standard additive attention; without it
            # the query term is constant across keys and cancels in the softmax.
            features = torch.tanh(query.unsqueeze(2) + key.unsqueeze(1))
            scores = self.v(features).squeeze(-1)
            attention_weights = self.dropout(masked_softmax(scores, valid_length))
            return torch.bmm(attention_weights, value)
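For reference, the standard formulation of additive (MLP) attention can be written as follows, using the layer names from the class above, with $\mathbf{q}$ a query, $\mathbf{k}_i$ and $\mathbf{v}_i$ the $i$-th key and value, and $\mathbf{v}$ the weight vector of the final linear layer. This is the textbook form and is not quoted from the post.

$$
\begin{aligned}
e_i &= \mathbf{v}^\top \tanh\left(\mathbf{W}_q \mathbf{q} + \mathbf{W}_k \mathbf{k}_i\right), \\
\alpha_i &= \frac{\exp(e_i)}{\sum_{j} \exp(e_j)}, \\
\mathrm{context} &= \sum_i \alpha_i \mathbf{v}_i .
\end{aligned}
$$

The sum over $j$ runs only over the valid (unmasked) keys. The $\tanh$ matters: if the score were purely linear, $\mathbf{v}^\top \mathbf{W}_q \mathbf{q}$ would add the same constant to every $e_i$, and the softmax would ignore the query altogether.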

Code changes after adding attention

    class Seq2SeqAttentionDecoder(d2l.Decoder):
        def __init__(self, vocab_size, embed_size, num_hiddens, num_layers,
                     dropout=0, **kwargs):
            super(Seq2SeqAttentionDecoder, self).__init__(**kwargs)
            self.attention_cell = MLPAttention(num_hiddens, num_hiddens, dropout)
            self.embedding = nn.Embedding(vocab_size, embed_size)
            # The RNN input is the token embedding concatenated with the attention context.
            self.rnn = nn.LSTM(embed_size + num_hiddens, num_hiddens, num_layers, dropout=dropout)
            self.dense = nn.Linear(num_hiddens, vocab_size)

        def init_state(self, enc_outputs, enc_valid_len, *args):
            outputs, hidden_state = enc_outputs
            # Transpose outputs to (batch_size, seq_len, hidden_size)
            return (outputs.permute(1, 0, 2), hidden_state, enc_valid_len)

        def forward(self, X, state):
            enc_outputs, hidden_state, enc_valid_len = state
            X = self.embedding(X).transpose(0, 1)
            outputs = []
            for l, x in enumerate(X):
                # query shape: (batch_size, 1, hidden_size)
                # select hidden state of the last rnn layer as query
                query = hidden_state[0][-1].unsqueeze(1)
                # context has same shape as query
                context = self.attention_cell(query, enc_outputs, enc_outputs, enc_valid_len)
                # Concatenate on the feature dimension
                x = torch.cat((context, x.unsqueeze(1)), dim=-1)
                # Reshape x to (1, batch_size, embed_size+hidden_size)
                out, hidden_state = self.rnn(x.transpose(0, 1), hidden_state)
                outputs.append(out)
            outputs = self.dense(torch.cat(outputs, dim=0))
            return outputs.transpose(0, 1), [enc_outputs, hidden_state,
                                             enc_valid_len]
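The tensor shapes inside one decoding step are the part that is easiest to get wrong. The sketch below traces them with plain tensors. The sizes are arbitrary, and the uniform `weights` tensor is a placeholder standing in for the real attention weights.

```python
import torch

batch_size, src_len, embed_size, num_hiddens, num_layers = 4, 7, 8, 16, 2

enc_outputs = torch.randn(batch_size, src_len, num_hiddens)   # after the permute in init_state
h = torch.zeros(num_layers, batch_size, num_hiddens)          # hidden_state[0]
x = torch.randn(batch_size, embed_size)                       # embedding of one target token

# The query is the hidden state of the last RNN layer.
query = h[-1].unsqueeze(1)                                    # (batch, 1, num_hiddens)

# Placeholder attention weights: uniform over the source positions.
weights = torch.full((batch_size, 1, src_len), 1.0 / src_len)
context = torch.bmm(weights, enc_outputs)                     # (batch, 1, num_hiddens)

# Concatenate context and embedding, then put time first for the LSTM.
rnn_input = torch.cat((context, x.unsqueeze(1)), dim=-1).transpose(0, 1)

print(query.shape, context.shape, rnn_input.shape)
```

The LSTM therefore receives inputs of size `embed_size + num_hiddens`, which is why the decoder's `nn.LSTM` is built with that input size.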
