Dive into Deep Learning, Part 2: Machine Translation (Check-in 2.1)

1. Machine translation and the dataset

1.1 Machine translation

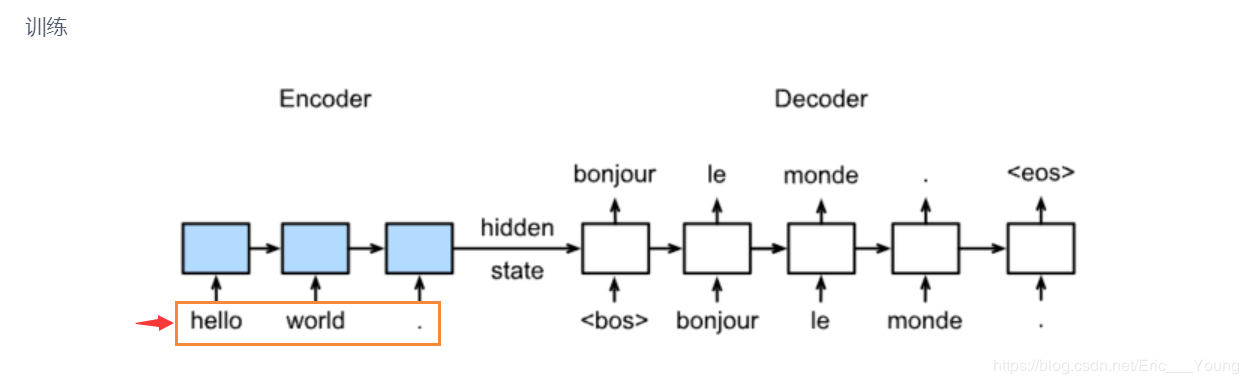

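Definition: machine translation automatically translates a piece of text from one language into another. Solving this problem with a neural network is usually called neural machine translation (NMT).

Main characteristics: the output is a sequence of words rather than a single word, and the length of the output sequence may differ from the length of the source sequence.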

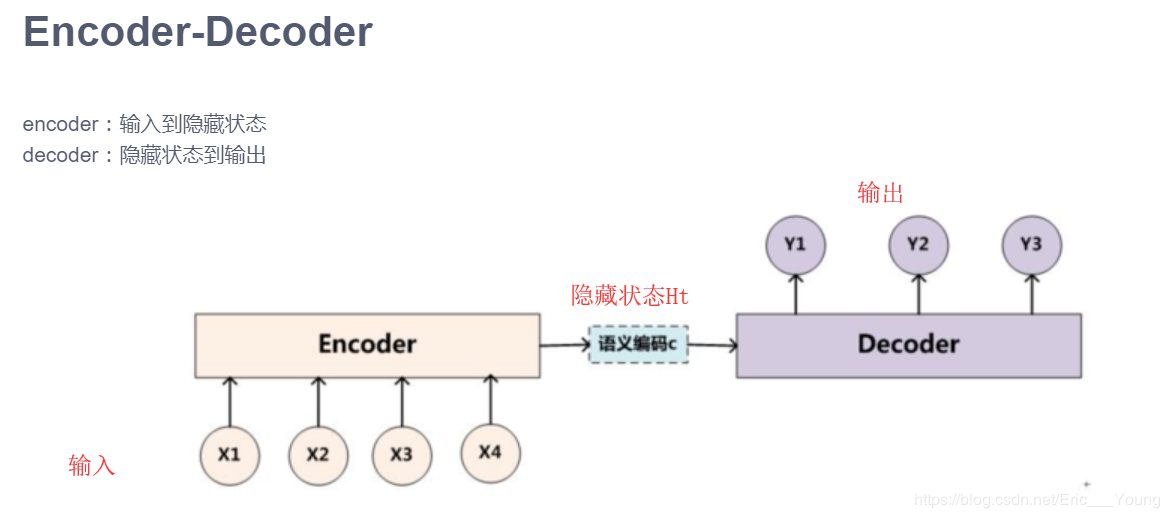

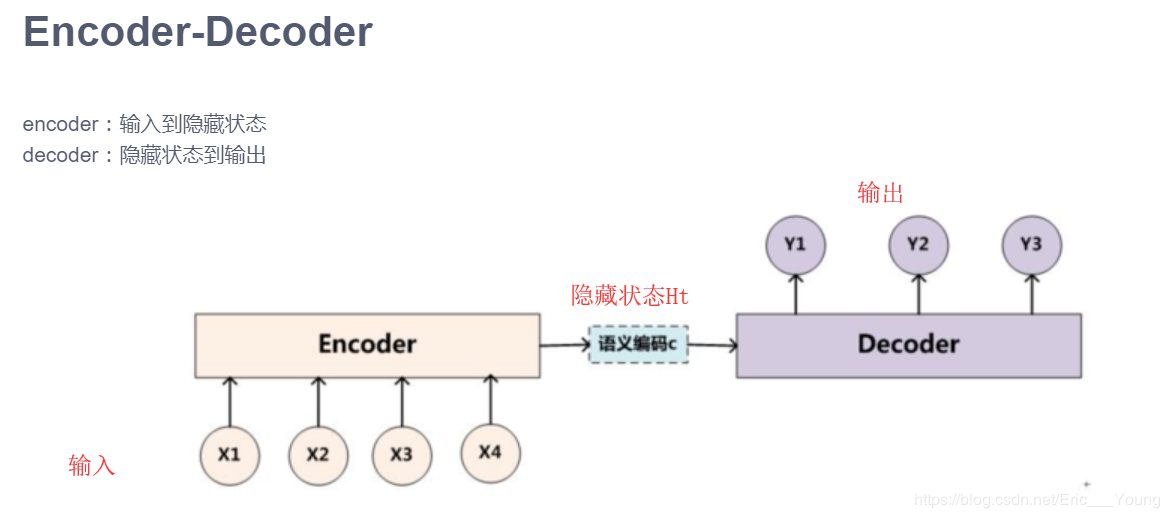

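Basic structure: Encoder-Decoder

encoder: maps the input to a hidden state

decoder: maps the hidden state to the output. This structure is commonly used in dialogue systems and generative tasks.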

1.2 Model logic

Author: 氟西汀重度患者

    import sys
    sys.path.append('/home/kesci/input/d2l9528/')
    import collections
    import d2l
    import zipfile
    from d2l.data.base import Vocab
    import time
    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    from torch.utils import data
    from torch import optim

Data preprocessing

    with open('/home/kesci/input/fraeng6506/fra.txt', 'r', encoding='utf-8') as f:
        raw_text = f.read()
    print(raw_text[0:1000])

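- 1) Clean the dataset and convert it into minibatches that can be fed to the neural network
- 1. Data cleaning: remove garbled characters and replace the spaces that come from different encodings with one uniform space
- Characters are stored in a computer as encoded values. The space we normally use is \x20, which lies in the standard ASCII printable range 0x20~0x7e.
- \xa0, by contrast, belongs to the extended character set of latin1 (ISO/IEC 8859-1) and stands for the non-breaking space, nbsp. It is outside the range of the gbk encoding, so it is a special character that has to be replaced with a regular space. This is why cleaning is the first step of data preprocessing.
- 2. Convert everything to lowercase
- 3. Insert a space before punctuation marks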

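    def preprocess_raw(text):
        # Replace the narrow no-break space (\u202f) and the non-breaking space (\xa0)
        # with a regular space, then lowercase the whole text
        text = text.replace('\u202f', ' ').replace('\xa0', ' ').lower()
        out = ''
        for i, char in enumerate(text):
            # Insert a space before punctuation that directly follows a non-space character
            if char in (',', '!', '.') and i > 0 and text[i-1] != ' ':
                out += ' '
            out += char
        return out

    text = preprocess_raw(raw_text)
    print(text[0:1000])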
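To see what the three cleaning steps do, here is a small self-contained sketch that applies the same logic to a made-up two-line sample instead of the real file. The function name `add_space_before_punct` and the sample string are assumptions for illustration only.

```python
def add_space_before_punct(text, marks=(',', '!', '.')):
    """Normalize special spaces, lowercase, and separate punctuation from words."""
    # Step 1: replace narrow no-break space and non-breaking space with a plain space
    # Step 2: lowercase
    text = text.replace('\u202f', ' ').replace('\xa0', ' ').lower()
    pieces = []
    for i, char in enumerate(text):
        # Step 3: add a space before punctuation that directly follows a non-space character
        if char in marks and i > 0 and text[i - 1] != ' ':
            pieces.append(' ')
        pieces.append(char)
    return ''.join(pieces)


# Made-up sample in the same "english<TAB>french" layout as the dataset
sample = "Go.\tVa\u202f!\nHi, there.\tSalut\xa0!"
cleaned = add_space_before_punct(sample)
print(repr(cleaned))  # 'go .\tva !\nhi , there .\tsalut !'
```

Punctuation that already follows a space (here the French `!`, once the special space has been normalized) is left alone, so no double spaces are introduced.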

- 2) Tokenization: string to list of words

    num_examples = 50000
    source, target = [], []
    for i, line in enumerate(text.split('\n')):
        if i > num_examples:
            break
        # Each line holds a source sentence and its translation, separated by a tab
        parts = line.split('\t')
        if len(parts) >= 2:
            source.append(parts[0].split(' '))
            target.append(parts[1].split(' '))

    source[0:3], target[0:3]

    # Histogram of sentence lengths (in tokens) for source and target
    d2l.set_figsize()
    d2l.plt.hist([[len(l) for l in source], [len(l) for l in target]], label=['source', 'target'])
    d2l.plt.legend(loc='upper right');
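The tokenization step can be tried on a tiny in-memory sample. The sketch below wraps the same splitting logic in a hypothetical helper, `tokenize_pairs`, and runs it on made-up text that has already been cleaned; lines without a tab are skipped.

```python
def tokenize_pairs(text, num_examples=None):
    """Split tab-separated sentence pairs into two lists of token lists."""
    source, target = [], []
    for i, line in enumerate(text.split('\n')):
        if num_examples is not None and i >= num_examples:
            break
        parts = line.split('\t')
        if len(parts) >= 2:  # ignore lines that do not contain a sentence pair
            source.append(parts[0].split(' '))
            target.append(parts[1].split(' '))
    return source, target


sample = "go .\tva !\nhi .\tsalut !\nmalformed line without a tab"
src, tgt = tokenize_pairs(sample)
print(src)  # [['go', '.'], ['hi', '.']]
print(tgt)  # [['va', '!'], ['salut', '!']]
```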

- 3) Build the vocabulary: list of words to list of word ids

    def build_vocab(tokens):
        # Flatten the list of sentences into a single list of tokens
        tokens = [token for line in tokens for token in line]
        return d2l.data.base.Vocab(tokens, min_freq=3, use_special_tokens=True)

    src_vocab = build_vocab(source)
    len(src_vocab)
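The `Vocab` class itself lives in the `d2l` package and is not shown in these notes. As a rough idea of what such a class does, here is a minimal stand-in. `SimpleVocab`, its special-token ids, and its ordering rule are assumptions for illustration and may differ from the real `d2l` implementation.

```python
import collections


class SimpleVocab:
    """Hypothetical stand-in for a vocabulary class, for illustration only."""

    def __init__(self, tokens, min_freq=0, use_special_tokens=False):
        counter = collections.Counter(tokens)
        # Sort by frequency (descending), then alphabetically, for a deterministic order
        token_freqs = sorted(counter.items(), key=lambda kv: (-kv[1], kv[0]))
        if use_special_tokens:
            self.pad, self.bos, self.eos, self.unk = 0, 1, 2, 3
            self.idx_to_token = ['<pad>', '<bos>', '<eos>', '<unk>']
        else:
            self.unk = 0
            self.idx_to_token = ['<unk>']
        # Tokens rarer than min_freq are dropped and will map to <unk>
        self.idx_to_token += [tok for tok, freq in token_freqs if freq >= min_freq]
        self.token_to_idx = {tok: idx for idx, tok in enumerate(self.idx_to_token)}

    def __len__(self):
        return len(self.idx_to_token)

    def __getitem__(self, tokens):
        if isinstance(tokens, (list, tuple)):
            return [self[tok] for tok in tokens]
        return self.token_to_idx.get(tokens, self.unk)

    def to_tokens(self, indices):
        if isinstance(indices, (list, tuple)):
            return [self.idx_to_token[i] for i in indices]
        return self.idx_to_token[indices]


sentences = [['go', '.'], ['go', 'away', '.'], ['go', 'now', '.']]
vocab = SimpleVocab([tok for line in sentences for tok in line],
                    min_freq=2, use_special_tokens=True)
print(len(vocab))                    # 6: four special tokens plus '.' and 'go'
print(vocab[['go', 'away', '.']])    # [5, 3, 4]; 'away' is rare, so it maps to <unk>
```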

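- 4) Load the dataset
- 1. pad: padding

    def pad(line, max_len, padding_token):
        # Truncate sequences that are longer than max_len
        if len(line) > max_len:
            return line[:max_len]
        # Pad shorter sequences on the right up to max_len
        return line + [padding_token] * (max_len - len(line))

    pad(src_vocab[source[0]], 10, src_vocab.pad)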
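A quick check of both branches of `pad`, using made-up token ids and an assumed padding id of 0:

```python
def pad(line, max_len, padding_token):
    """Truncate or right-pad a list of token ids to exactly max_len items."""
    if len(line) > max_len:
        return line[:max_len]
    return line + [padding_token] * (max_len - len(line))


PAD = 0  # assumed padding id for this example
short = pad([47, 4], 5, PAD)
long = pad([9, 8, 7, 6, 5, 4, 3], 5, PAD)
print(short)  # [47, 4, 0, 0, 0]
print(long)   # [9, 8, 7, 6, 5]
```

Every sequence comes out with the same length, which is what allows a batch of sentences to be stacked into one tensor.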

- 2. build_array: build the arrays and convert them to tensors, together with the valid lengths

    def build_array(lines, vocab, max_len, is_source):
        lines = [vocab[line] for line in lines]
        if not is_source:
            # Target sentences are wrapped in <bos> ... <eos>
            lines = [[vocab.bos] + line + [vocab.eos] for line in lines]
        array = torch.tensor([pad(line, max_len, vocab.pad) for line in lines])
        valid_len = (array != vocab.pad).sum(1)  # count non-pad tokens along dimension 1 (the sequence axis)
        return array, valid_len
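The valid length is simply the number of non-pad positions in each row. The sketch below reproduces the target-side path on two made-up sentences, with assumed special-token ids. It also shows a side effect of truncation: a sentence longer than `max_len - 2` loses its `<eos>` token.

```python
import torch

PAD, BOS, EOS = 0, 1, 2  # assumed special-token ids for this example
max_len = 6
lines = [[5, 6], [7, 8, 9, 10, 11, 12]]  # made-up token ids

# Target side: wrap each sentence in <bos> ... <eos> before padding
lines = [[BOS] + line + [EOS] for line in lines]
padded = [line[:max_len] + [PAD] * max(0, max_len - len(line)) for line in lines]

array = torch.tensor(padded)
valid_len = (array != PAD).sum(dim=1)  # non-pad tokens per row
print(array)      # rows: [1, 5, 6, 2, 0, 0] and [1, 7, 8, 9, 10, 11]
print(valid_len)  # tensor([4, 6])
```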

- 3. load_data_nmt: get the data iterator

    def load_data_nmt(batch_size, max_len):  # This function is saved in d2l.
        src_vocab, tgt_vocab = build_vocab(source), build_vocab(target)
        src_array, src_valid_len = build_array(source, src_vocab, max_len, True)
        tgt_array, tgt_valid_len = build_array(target, tgt_vocab, max_len, False)
        train_data = data.TensorDataset(src_array, src_valid_len, tgt_array, tgt_valid_len)
        train_iter = data.DataLoader(train_data, batch_size, shuffle=True)
        return src_vocab, tgt_vocab, train_iter

    src_vocab, tgt_vocab, train_iter = load_data_nmt(batch_size=2, max_len=8)
    for X, X_valid_len, Y, Y_valid_len in train_iter:
        print('X =', X.type(torch.int32), '\nValid lengths for X =', X_valid_len,
              '\nY =', Y.type(torch.int32), '\nValid lengths for Y =', Y_valid_len)
        break

Defining the Sequence to Sequence model

    class Encoder(nn.Module):
        def __init__(self, **kwargs):
            super(Encoder, self).__init__(**kwargs)

        def forward(self, X, *args):
            raise NotImplementedError

    class EncoderDecoder(nn.Module):
        def __init__(self, encoder, decoder, **kwargs):
            super(EncoderDecoder, self).__init__(**kwargs)
            self.encoder = encoder
            self.decoder = decoder

        def forward(self, enc_X, dec_X, *args):
            enc_outputs = self.encoder(enc_X, *args)
            # The decoder's initial state is derived from the encoder outputs
            dec_state = self.decoder.init_state(enc_outputs, *args)
            return self.decoder(dec_X, dec_state)

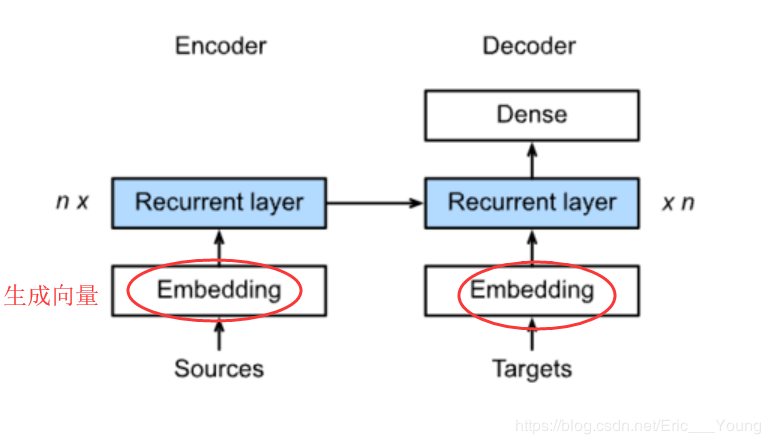

Seq2SeqEncoder:

    class Seq2SeqEncoder(d2l.Encoder):
        def __init__(self, vocab_size, embed_size, num_hiddens, num_layers,
                     dropout=0, **kwargs):
            super(Seq2SeqEncoder, self).__init__(**kwargs)
            self.num_hiddens = num_hiddens
            self.num_layers = num_layers
            self.embedding = nn.Embedding(vocab_size, embed_size)
            self.rnn = nn.LSTM(embed_size, num_hiddens, num_layers, dropout=dropout)

        def begin_state(self, batch_size, device):
            return [torch.zeros(size=(self.num_layers, batch_size, self.num_hiddens), device=device),
                    torch.zeros(size=(self.num_layers, batch_size, self.num_hiddens), device=device)]

        def forward(self, X, *args):
            X = self.embedding(X)  # X shape: (batch_size, seq_len, embed_size)
            X = X.transpose(0, 1)  # the RNN expects time as the first axis
            # state = self.begin_state(X.shape[1], device=X.device)
            out, state = self.rnn(X)
            # The shape of out is (seq_len, batch_size, num_hiddens).
            # state contains the hidden state and the memory cell
            # of the last time step; each has shape (num_layers, batch_size, num_hiddens)
            return out, state

    # Test
    encoder = Seq2SeqEncoder(vocab_size=10, embed_size=8, num_hiddens=16, num_layers=2)
    X = torch.zeros((4, 7), dtype=torch.long)
    output, state = encoder(X)
    output.shape, len(state), state[0].shape, state[1].shape
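The shapes printed by the test follow directly from how `nn.LSTM` treats time-major input. The following sketch checks them with a bare LSTM, using the same sizes as the test (batch of 4, 7 time steps, 8-dimensional embeddings, 16 hidden units, 2 layers); the embedding layer is skipped and replaced by a zero tensor.

```python
import torch
import torch.nn as nn

batch_size, seq_len, embed_size, num_hiddens, num_layers = 4, 7, 8, 16, 2
rnn = nn.LSTM(embed_size, num_hiddens, num_layers)

# Time-major input, as produced by X.transpose(0, 1) in the encoder
X = torch.zeros(seq_len, batch_size, embed_size)
out, (h, c) = rnn(X)

print(out.shape)  # torch.Size([7, 4, 16]): top-layer output at every time step
print(h.shape)    # torch.Size([2, 4, 16]): final hidden state of each layer
print(c.shape)    # torch.Size([2, 4, 16]): final memory cell of each layer
```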

Seq2SeqDecoder:

    class Seq2SeqDecoder(d2l.Decoder):
        def __init__(self, vocab_size, embed_size, num_hiddens, num_layers,
                     dropout=0, **kwargs):
            super(Seq2SeqDecoder, self).__init__(**kwargs)
            self.embedding = nn.Embedding(vocab_size, embed_size)
            self.rnn = nn.LSTM(embed_size, num_hiddens, num_layers, dropout=dropout)
            self.dense = nn.Linear(num_hiddens, vocab_size)

        def init_state(self, enc_outputs, *args):
            # Use the encoder's final (hidden state, memory cell) as the initial decoder state
            return enc_outputs[1]

        def forward(self, X, state):
            X = self.embedding(X).transpose(0, 1)
            out, state = self.rnn(X, state)
            # Make the batch the first dimension to simplify loss computation.
            out = self.dense(out).transpose(0, 1)
            return out, state

    # Test
    decoder = Seq2SeqDecoder(vocab_size=10, embed_size=8, num_hiddens=16, num_layers=2)
    state = decoder.init_state(encoder(X))
    out, state = decoder(X, state)
    out.shape, len(state), state[0].shape, state[1].shape

- Overall structure
- Training
- Prediction

    def SequenceMask(X, X_len, value=0):
        # Note: X is modified in place
        maxlen = X.size(1)
        # mask[i, j] is True while position j is within the valid length of row i
        mask = torch.arange(maxlen)[None, :].to(X_len.device) < X_len[:, None]
        X[~mask] = value
        return X

    # Test
    X = torch.tensor([[1, 2, 3], [4, 5, 6]])
    SequenceMask(X, torch.tensor([1, 2]))

    X = torch.ones((2, 3, 4))
    SequenceMask(X, torch.tensor([1, 2]), value=-1)
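The mask is built by broadcasting a row of position indices against a column of valid lengths. The sketch below shows the intermediate mask and the result for the first test case. Unlike the function above, this hypothetical `sequence_mask` variant works on a copy, so the input tensor is left unchanged.

```python
import torch


def sequence_mask(X, valid_len, value=0):
    """Return a copy of X in which positions beyond each row's valid length are set to value."""
    maxlen = X.size(1)
    # Shape (1, maxlen) compared with shape (batch, 1) broadcasts to (batch, maxlen)
    mask = torch.arange(maxlen, device=X.device)[None, :] < valid_len[:, None]
    X = X.clone()
    X[~mask] = value
    return X


X = torch.tensor([[1, 2, 3], [4, 5, 6]])
masked = sequence_mask(X, torch.tensor([1, 2]))
print(masked)  # tensor([[1, 0, 0], [4, 5, 0]])
print(X)       # unchanged: tensor([[1, 2, 3], [4, 5, 6]])
```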

MaskedSoftmaxCELoss: computes the cross-entropy loss averaged over time steps, with padded positions masked out

    class MaskedSoftmaxCELoss(nn.CrossEntropyLoss):
        # pred shape: (batch_size, seq_len, vocab_size)
        # label shape: (batch_size, seq_len)
        # valid_length shape: (batch_size, )
        def forward(self, pred, label, valid_length):
            # the sample weights shape should be (batch_size, seq_len)
            weights = torch.ones_like(label)
            weights = SequenceMask(weights, valid_length).float()
            self.reduction = 'none'
            # CrossEntropyLoss expects the class dimension second: (batch_size, vocab_size, seq_len)
            output = super(MaskedSoftmaxCELoss, self).forward(pred.transpose(1, 2), label)
            return (output * weights).mean(dim=1)

    # Test
    loss = MaskedSoftmaxCELoss()
    loss(torch.ones((3, 4, 10)), torch.ones((3, 4), dtype=torch.long), torch.tensor([4, 3, 0]))
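The test values can be worked out by hand. With all logits equal, the softmax over 10 classes is uniform, so every position has a cross-entropy of ln 10 ≈ 2.3026. Averaging over 4 time steps with 4, 3, and 0 valid positions gives ln 10, 0.75 · ln 10, and 0. The sketch below checks this with a functional version of the same computation; `masked_ce` is a hypothetical name, not part of `d2l`.

```python
import math

import torch
import torch.nn.functional as F


def masked_ce(pred, label, valid_len):
    """Per-sequence cross-entropy, averaged over time steps, ignoring padded positions."""
    # pred: (batch, seq_len, vocab); cross_entropy wants the class dimension second
    per_token = F.cross_entropy(pred.transpose(1, 2), label, reduction='none')
    mask = torch.arange(label.size(1), device=label.device)[None, :] < valid_len[:, None]
    return (per_token * mask.float()).mean(dim=1)


out = masked_ce(torch.ones(3, 4, 10),
                torch.ones(3, 4, dtype=torch.long),
                torch.tensor([4, 3, 0]))
expected = torch.tensor([math.log(10), 0.75 * math.log(10), 0.0])
print(out)
print(torch.allclose(out, expected, atol=1e-5))
```

Because the mean is taken over all `seq_len` positions, masked positions still count in the denominator; they only contribute zero to the numerator.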

Training

    def train_ch7(model, data_iter, lr, num_epochs, device):  # Saved in d2l
        model.to(device)
        optimizer = optim.Adam(model.parameters(), lr=lr)
        loss = MaskedSoftmaxCELoss()
        tic = time.time()
        for epoch in range(1, num_epochs+1):
            l_sum, num_tokens_sum = 0.0, 0.0
            for batch in data_iter:
                optimizer.zero_grad()
                X, X_vlen, Y, Y_vlen = [x.to(device) for x in batch]
                # Decoder input drops the last token; the label drops the first one (<bos>)
                Y_input, Y_label, Y_vlen = Y[:,:-1], Y[:,1:], Y_vlen-1
                Y_hat, _ = model(X, Y_input, X_vlen, Y_vlen)
                l = loss(Y_hat, Y_label, Y_vlen).sum()
                l.backward()
                with torch.no_grad():
                    d2l.grad_clipping_nn(model, 5, device)
                num_tokens = Y_vlen.sum().item()
                optimizer.step()
                l_sum += l.sum().item()
                num_tokens_sum += num_tokens
            if epoch % 50 == 0:
                print("epoch {0:4d},loss {1:.3f}, time {2:.1f} sec".format(
                    epoch, (l_sum/num_tokens_sum), time.time()-tic))
                tic = time.time()

    embed_size, num_hiddens, num_layers, dropout = 32, 32, 2, 0.0
    batch_size, num_examples, max_len = 64, 1e3, 10
    lr, num_epochs, ctx = 0.005, 300, d2l.try_gpu()
    src_vocab, tgt_vocab, train_iter = d2l.load_data_nmt(
        batch_size, max_len,num_examples)
    encoder = Seq2SeqEncoder(
        len(src_vocab), embed_size, num_hiddens, num_layers, dropout)
    decoder = Seq2SeqDecoder(
        len(tgt_vocab), embed_size, num_hiddens, num_layers, dropout)
    model = d2l.EncoderDecoder(encoder, decoder)
    train_ch7(model, train_iter, lr, num_epochs, ctx)
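The line `Y_input, Y_label, Y_vlen = Y[:,:-1], Y[:,1:], Y_vlen-1` in the training loop sets up teacher forcing: at each step the decoder is fed the ground-truth previous token and is trained to predict the next one. A small sketch on a made-up target batch, with assumed special-token ids, makes the one-position shift visible.

```python
import torch

PAD, BOS, EOS = 0, 1, 2  # assumed special-token ids for this example
Y = torch.tensor([[BOS, 5, 6, EOS, PAD, PAD],
                  [BOS, 7, 8, 9, 10, EOS]])
Y_vlen = torch.tensor([4, 6])

# Decoder input: everything except the last position.
# Label: everything except the leading <bos>, so label[t] is the token after input[t].
Y_input, Y_label, Y_vlen = Y[:, :-1], Y[:, 1:], Y_vlen - 1

print(Y_input)  # rows: [1, 5, 6, 2, 0] and [1, 7, 8, 9, 10]
print(Y_label)  # rows: [5, 6, 2, 0, 0] and [7, 8, 9, 10, 2]
print(Y_vlen)   # tensor([3, 5])
```

The valid length shrinks by one because each label sequence is one token shorter than the original target.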

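Testing

    def translate_ch7(model, src_sentence, src_vocab, tgt_vocab, max_len, device):
        src_tokens = src_vocab[src_sentence.lower().split(' ')]
        src_len = len(src_tokens)
        if src_len ...
        # [The rest of this function and the beginning of the loop that prints the
        # translations are missing from the source.]
        ... ' + translate_ch7(
            model, sentence, src_vocab, tgt_vocab, max_len, ctx))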
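One common way to generate a translation at prediction time is greedy decoding: start from `<bos>`, repeatedly pick the highest-scoring next token, and stop at `<eos>` or after `max_len` steps. The sketch below illustrates the idea on a toy lookup table instead of a trained model. The `step_fn` interface and the table are assumptions for illustration, not the `d2l` implementation.

```python
def greedy_decode(step_fn, bos, eos, max_len):
    """step_fn(prefix) returns a dict mapping each candidate next token to a score."""
    prefix = [bos]
    for _ in range(max_len):
        scores = step_fn(prefix)
        next_tok = max(scores, key=scores.get)  # highest-scoring token
        if next_tok == eos:
            break
        prefix.append(next_tok)
    return prefix[1:]  # drop <bos>


# Toy "model": next-token scores depend only on the last token
TABLE = {
    '<bos>': {'je': 0.7, 'il': 0.3},
    'je': {'gagne': 0.6, '<eos>': 0.4},
    'gagne': {'<eos>': 0.9, 'je': 0.1},
    'il': {'<eos>': 1.0},
}
result = greedy_decode(lambda prefix: TABLE[prefix[-1]], '<bos>', '<eos>', max_len=5)
print(result)  # ['je', 'gagne']
```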

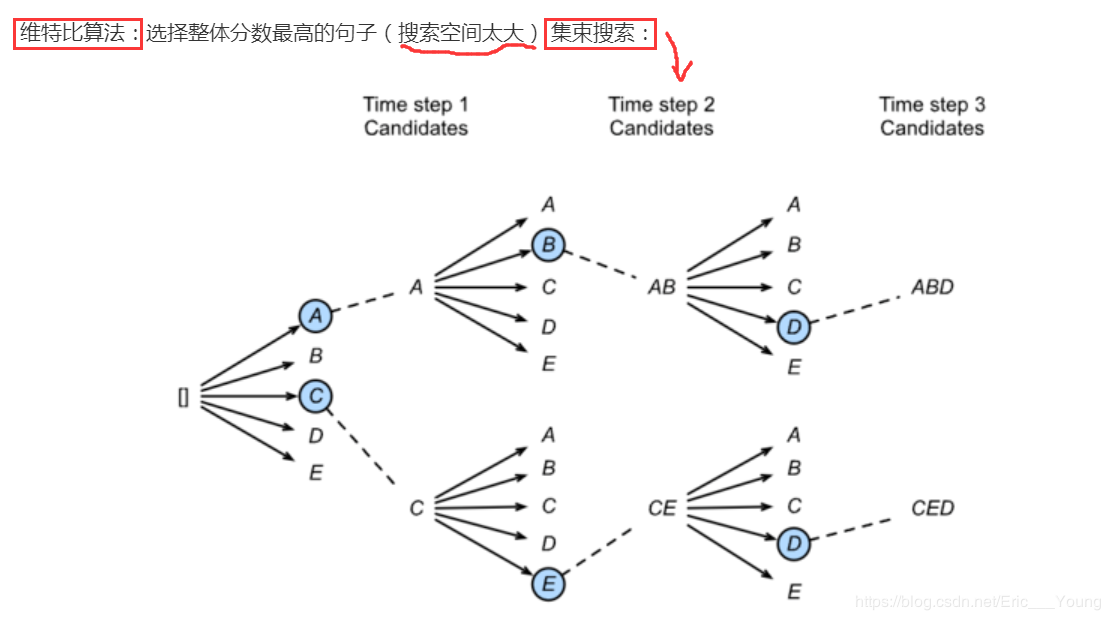

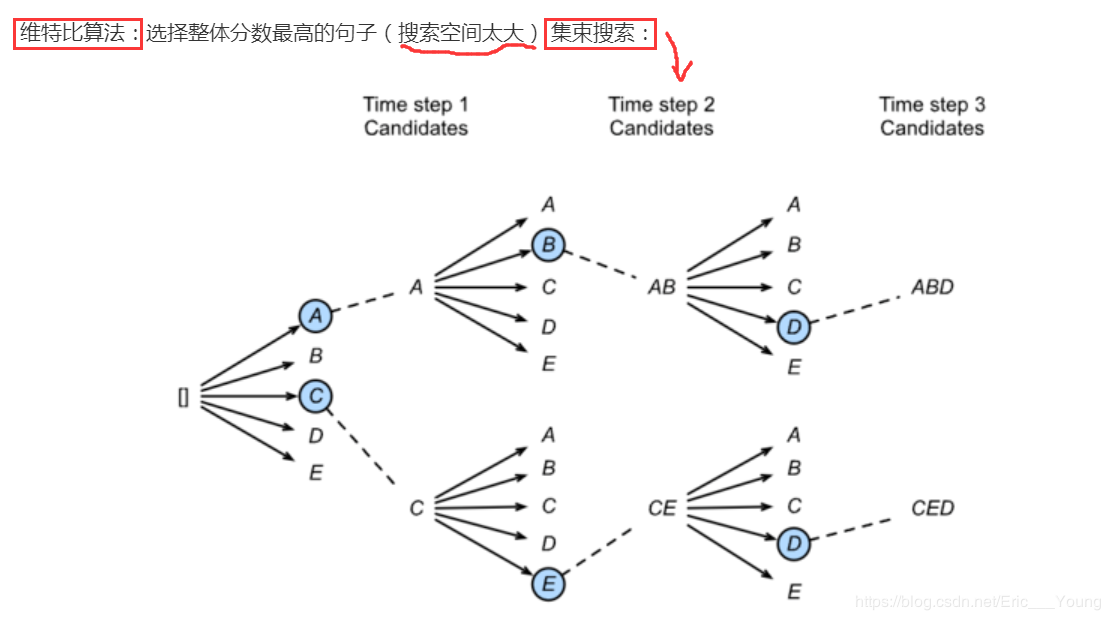

Supplement: Beam Search
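Beam search keeps the `beam_size` best partial translations at every step instead of only the single best one, which lets it recover from a locally attractive but globally worse first choice. The following is a minimal sketch on a toy probability table. The `step_fn` interface and the table are hypothetical, scores are plain summed log-probabilities, and no length normalization is applied.

```python
import math


def beam_search(step_fn, bos, eos, beam_size, max_len):
    """step_fn(prefix) returns a dict of next-token probabilities."""
    beams = [([bos], 0.0)]  # (prefix, cumulative log-probability)
    finished = []
    for _ in range(max_len):
        candidates = []
        for prefix, logp in beams:
            for tok, p in step_fn(prefix).items():
                if p > 0:
                    candidates.append((prefix + [tok], logp + math.log(p)))
        # Keep only the beam_size best hypotheses
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for prefix, logp in candidates[:beam_size]:
            if prefix[-1] == eos:
                finished.append((prefix, logp))
            else:
                beams.append((prefix, logp))
        if not beams:
            break
    finished.extend(beams)  # hypotheses that were cut off by max_len
    best_prefix, best_logp = max(finished, key=lambda c: c[1])
    return [t for t in best_prefix[1:] if t != eos], best_logp


# Toy table in which the greedy first choice ('a') leads to a worse full sequence
TABLE = {
    '<bos>': {'a': 0.6, 'b': 0.4},
    'a': {'x': 0.5, 'y': 0.5},
    'b': {'z': 1.0},
    'x': {'<eos>': 1.0},
    'y': {'<eos>': 1.0},
    'z': {'<eos>': 1.0},
}
step = lambda prefix: TABLE[prefix[-1]]
tokens, logp = beam_search(step, '<bos>', '<eos>', beam_size=2, max_len=5)
greedy_tokens, greedy_logp = beam_search(step, '<bos>', '<eos>', beam_size=1, max_len=5)
print(tokens, math.exp(logp))                # ['b', 'z'] with probability about 0.4
print(greedy_tokens, math.exp(greedy_logp))  # ['a', 'x'] with probability about 0.3
```

With `beam_size=1` the procedure reduces to greedy search, which commits to `a` and ends with a less probable sequence than the one found with a beam of 2.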
