Datawhale Group Learning Check-in Camp, Task 10: Machine Translation and Related Techniques

目录

机器翻译和数据集

数据预处理

分词

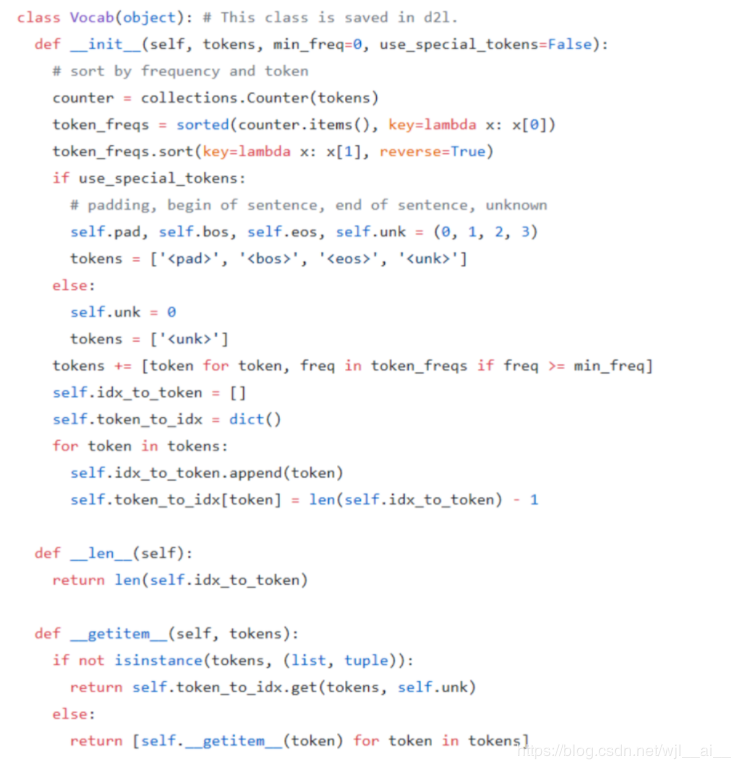

建立词典

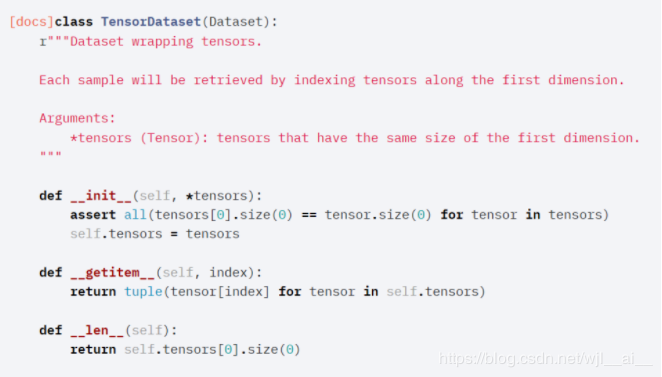

载入数据集

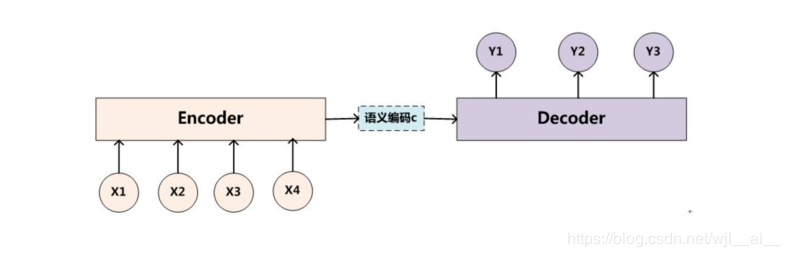

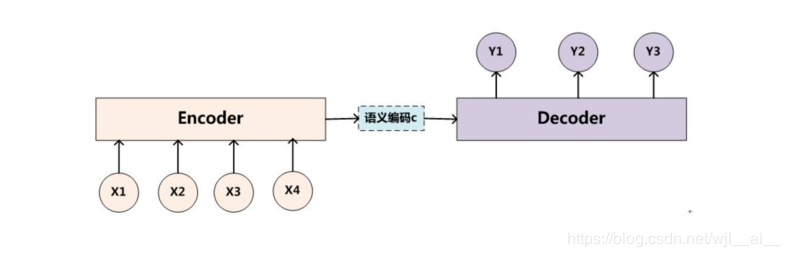

Encoder-Decoder

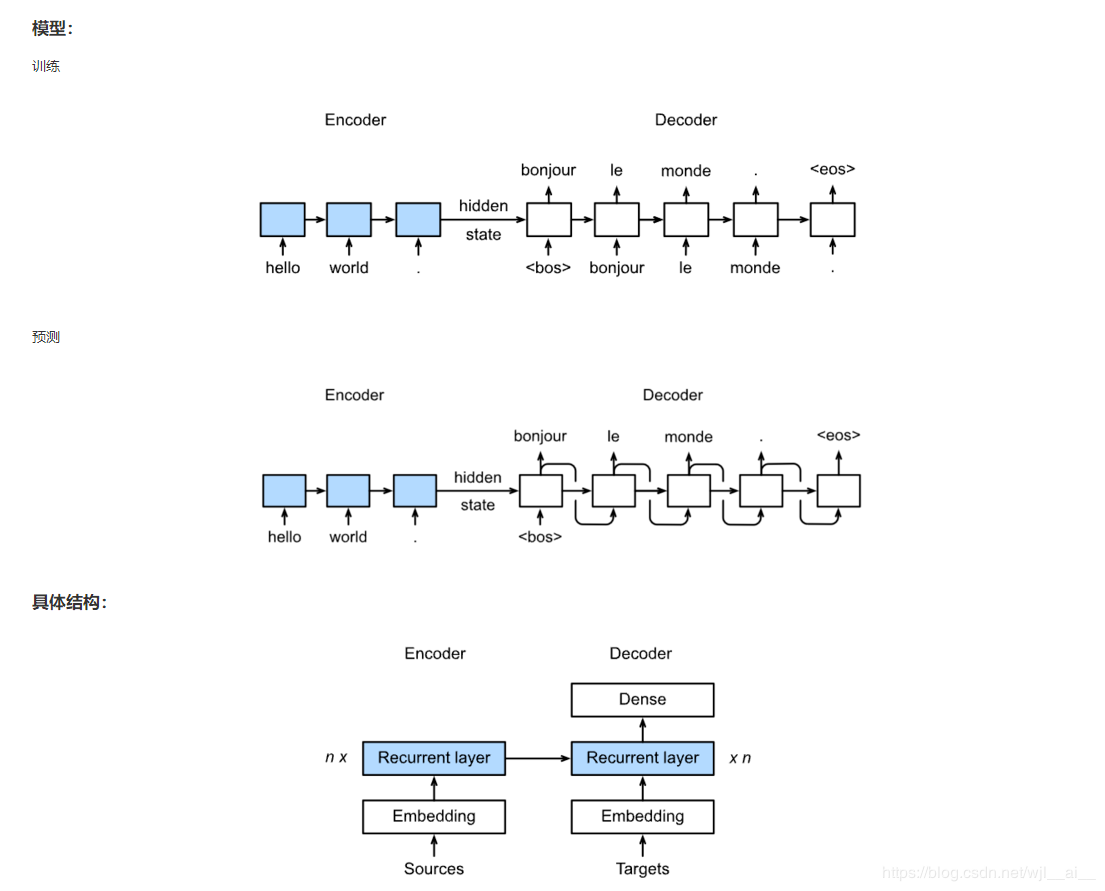

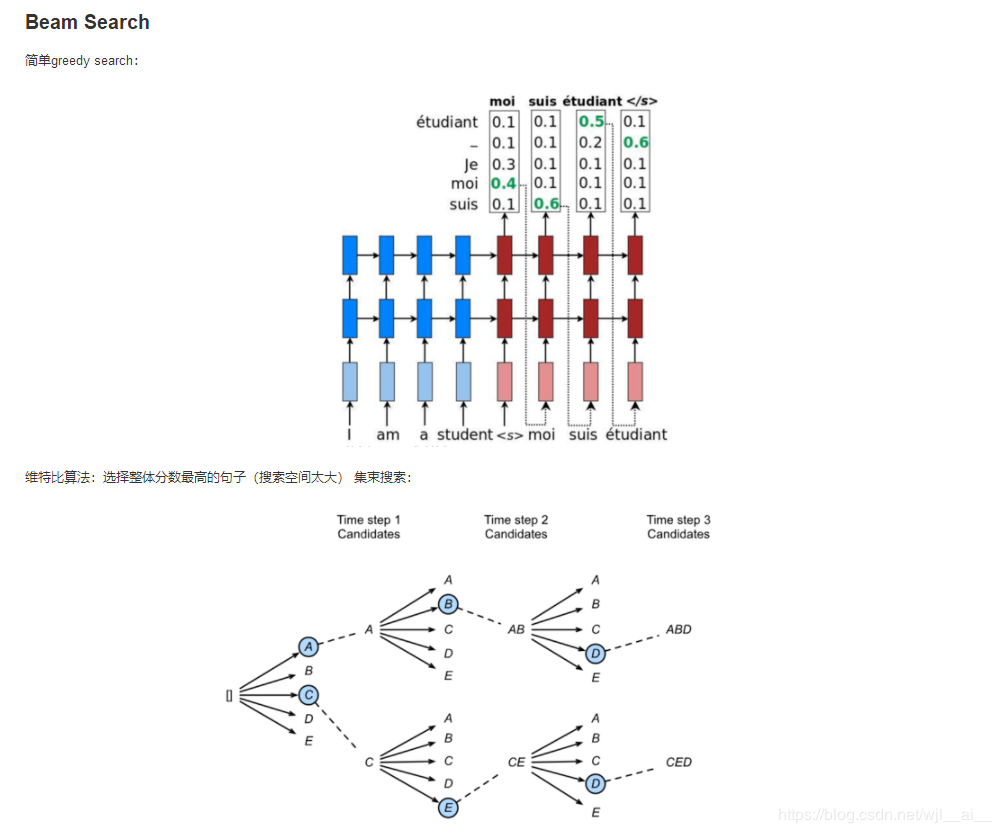

Sequence to Sequence模型

模型

具体结构

Encoder

Decoder

损失函数

训练

测试

Author: 滑翔小飞侠


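Machine translation (MT) automatically translates a piece of text from one language into another. Solving this problem with neural networks is usually called neural machine translation (NMT). Its main characteristics are that the output is a sequence of words rather than a single word, and that the output sequence may have a different length from the source sequence. For example, "i am Chinese" (3 words) translates into Chinese as 我是中国人 (5 characters).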

import os
os.listdir('/home/kesci/input/')

output:

['fraeng6506', 'd2l9528', 'd2l6239']

import sys
sys.path.append('/home/kesci/input/d2l9528/')
import collections
import d2l  # helper package provided by the Datawhale team
import zipfile
from d2l.data.base import Vocab
import time
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.utils import data
from torch import optim

Data preprocessing

Clean the dataset and convert it into minibatches that can be fed to the neural network.

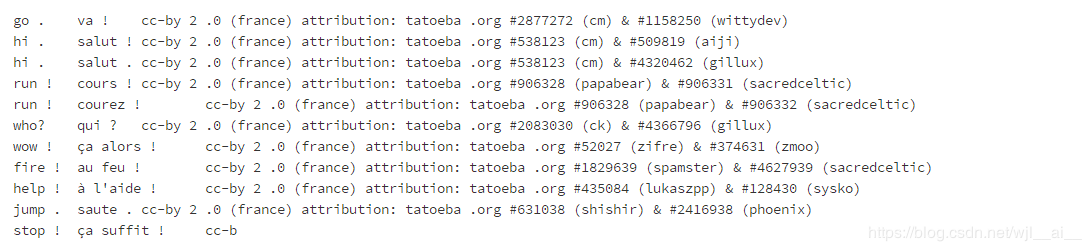

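# Read the data
with open('/home/kesci/input/fraeng6506/fra.txt', 'r', encoding='utf-8') as f:
    raw_text = f.read()
print(raw_text[0:1000])

def preprocess_raw(text):
    # Replace the special whitespace characters (\u202f narrow no-break space, \xa0 no-break space)
    # with ordinary spaces; for your own corpus, first check which separator characters it uses
    text = text.replace('\u202f', ' ').replace('\xa0', ' ')
    out = ''
    # Work on the lower-cased string and put a space before , ! . when there is none yet
    for i, char in enumerate(text.lower()):
        if char in (',', '!', '.') and i > 0 and text[i-1] != ' ':
            out += ' '
        out += char
    return out

text = preprocess_raw(raw_text)
print(text[0:1000])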

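Characters are stored in a computer as encoded values. The ordinary space we normally use is \x20, which lies inside the standard printable ASCII range 0x20 to 0x7e. \xa0, on the other hand, belongs to the extended character set of Latin-1 (ISO/IEC 8859-1) and represents the non-breaking space (NBSP). It falls outside the GBK encoding range and is a special character that has to be removed. The code above replaces \u202f, the narrow no-break space (U+202F), in the same way. This is why the first step of data preprocessing is to clean the data.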
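These characters matter because Python's `str.split(' ')` splits only on the ordinary space, so a word followed by `\xa0` would not be separated from the next one. The sketch below re-declares `preprocess_raw` exactly as above so that it runs on its own. It then applies the function to a made-up sample line that only imitates the corpus format and is not taken from fra.txt.

```python
def preprocess_raw(text):
    # Replace narrow no-break space (U+202F) and no-break space (U+00A0) with a normal space
    text = text.replace('\u202f', ' ').replace('\xa0', ' ')
    out = ''
    for i, char in enumerate(text.lower()):
        # Insert a space before , ! . unless one is already there
        if char in (',', '!', '.') and i > 0 and text[i - 1] != ' ':
            out += ' '
        out += char
    return out

sample = 'Hi.\tSalut\u202f!'              # hypothetical "English<TAB>French" line
print(repr(preprocess_raw(sample)))       # 'hi .\tsalut !'
print(len('a\xa0b'.split(' ')))           # 1: the no-break space is not a split point
```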

String -> list of words (tokenization)

num_examples = 50000
source, target = [], []
for i, line in enumerate(text.split('\n')):
    if i > num_examples:
        break
    parts = line.split('\t')
    if len(parts) >= 2:
        source.append(parts[0].split(' '))  # first field: the source sentence (model input)
        target.append(parts[1].split(' '))  # second field: the target sentence (label)
source[0:3], target[0:3]

output:

([['go', '.'], ['hi', '.'], ['hi', '.']],
 [['va', '!'], ['salut', '!'], ['salut', '.']])
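The same loop can be wrapped in a reusable helper. `tokenize_pairs` is a hypothetical name, not part of the d2l package. The two-line sample string only imitates the preprocessed format: source, a tab, then target. One small difference is deliberate. This sketch stops after exactly `num_examples` lines, whereas the loop above, with `i > num_examples`, reads one extra line.

```python
def tokenize_pairs(text, num_examples=None):
    """Split 'source<TAB>target' lines into two lists of token lists (hypothetical helper)."""
    source, target = [], []
    for i, line in enumerate(text.split('\n')):
        if num_examples is not None and i >= num_examples:
            break  # keep at most num_examples lines
        parts = line.split('\t')
        if len(parts) >= 2:  # skips empty or malformed lines, such as the trailing one
            source.append(parts[0].split(' '))
            target.append(parts[1].split(' '))
    return source, target

sample = 'go .\tva !\nhi .\tsalut !\n'
src, tgt = tokenize_pairs(sample)
print(src)  # [['go', '.'], ['hi', '.']]
print(tgt)  # [['va', '!'], ['salut', '!']]
```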

Plot histograms of the sentence lengths (in tokens) of the source and target sides:

d2l.set_figsize()
d2l.plt.hist([[len(l) for l in source], [len(l) for l in target]], label=['source', 'target'])
d2l.plt.legend(loc='upper right');

List of words -> list of word ids (building the vocabulary)

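def build_vocab(tokens):
    # Flatten the list of sentences into a single list of tokens
    tokens = [token for line in tokens for token in line]
    # Keep tokens that appear at least 3 times and reserve ids for special tokens
    # such as padding, begin-of-sentence and end-of-sentence
    return d2l.data.base.Vocab(tokens, min_freq=3, use_special_tokens=True)

src_vocab = build_vocab(source)
len(src_vocab)

output:

3789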
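The d2l `Vocab` class itself is not shown in this post. For intuition, here is a hypothetical `SimpleVocab` that mimics the behaviour the outputs in this post suggest. Ids 0, 1 and 2 are the padding, begin-of-sentence and end-of-sentence tokens, as the padded arrays further down show. Id 3 is *assumed* to be the unknown token, and words below `min_freq` fall back to it. Ordering the remaining tokens by frequency is another assumption of this sketch.

```python
import collections

class SimpleVocab:
    """Hypothetical minimal vocabulary; ids 0-3 reserved for special tokens (assumed layout)."""
    def __init__(self, tokens, min_freq=0):
        counter = collections.Counter(tokens)
        self.pad, self.bos, self.eos, self.unk = 0, 1, 2, 3
        self.idx_to_token = ['<pad>', '<bos>', '<eos>', '<unk>']
        # Keep tokens that appear at least min_freq times, most frequent first
        self.idx_to_token += [tok for tok, freq in counter.most_common()
                              if freq >= min_freq and tok not in self.idx_to_token]
        self.token_to_idx = {tok: idx for idx, tok in enumerate(self.idx_to_token)}

    def __len__(self):
        return len(self.idx_to_token)

    def __getitem__(self, tokens):
        # A single token maps to an id, a list maps element-wise, unknown words map to unk
        if not isinstance(tokens, (list, tuple)):
            return self.token_to_idx.get(tokens, self.unk)
        return [self[t] for t in tokens]

sentences = [['go', '.'], ['hi', '.'], ['hi', '.']]
flat = [tok for line in sentences for tok in line]
vocab = SimpleVocab(flat, min_freq=2)
print(len(vocab))                # 6: four special tokens plus '.' and 'hi'
print(vocab[['hi', '.', 'go']])  # [5, 4, 3]: 'go' appears once, so it becomes <unk>
```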


Make all sentences the same length: choose a maximum number of tokens max_len, truncate longer sentences to their first max_len tokens, and fill shorter ones with a padding token.

def pad(line, max_len, padding_token):
    if len(line) > max_len:
        return line[:max_len]  # truncate
    return line + [padding_token] * (max_len - len(line))  # pad

pad(src_vocab[source[0]], 10, src_vocab.pad)

output:

[38, 4, 0, 0, 0, 0, 0, 0, 0, 0]

Building the arrays

def build_array(lines, vocab, max_len, is_source):
    # lines: list of tokenized sentences; vocab: maps tokens to ids
    lines = [vocab[line] for line in lines]  # each sentence becomes a list of ids
    if not is_source:
        # target sentences are wrapped in <bos> ... <eos>
        lines = [[vocab.bos] + line + [vocab.eos] for line in lines]
    array = torch.tensor([pad(line, max_len, vocab.pad) for line in lines])
    # valid_len: original (unpadded) length of each sentence,
    # i.e. the number of non-padding ids along dimension 1
    valid_len = (array != vocab.pad).sum(1)
    # return the padded id array and the valid lengths
    return array, valid_len
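The sketch below shows padding, truncation and valid lengths together without loading the dataset. It works directly on lists of made-up ids. `PAD = 0`, `BOS = 1` and `EOS = 2` are assumed to match the special-token ids visible in the outputs of this post, and `build_array_ids` is a hypothetical helper.

```python
import torch

PAD, BOS, EOS = 0, 1, 2  # assumed special-token ids

def pad(line, max_len, padding_token):
    if len(line) > max_len:
        return line[:max_len]
    return line + [padding_token] * (max_len - len(line))

def build_array_ids(id_lines, max_len, is_source):
    """Like build_array, but starting from ids instead of tokens (hypothetical helper)."""
    if not is_source:
        id_lines = [[BOS] + line + [EOS] for line in id_lines]
    array = torch.tensor([pad(line, max_len, PAD) for line in id_lines])
    valid_len = (array != PAD).sum(1)  # count non-padding ids per row
    return array, valid_len

src, src_len = build_array_ids([[5, 24, 4], [12, 7, 9, 8, 6, 4]], max_len=5, is_source=True)
tgt, tgt_len = build_array_ids([[23, 46, 4]], max_len=8, is_source=False)
print(src.tolist(), src_len.tolist())  # [[5, 24, 4, 0, 0], [12, 7, 9, 8, 6]] [3, 5]
print(tgt.tolist(), tgt_len.tolist())  # [[1, 23, 46, 4, 2, 0, 0, 0]] [5]
```

The truncated second source sentence reports a valid length equal to `max_len`. For target sentences, truncation happens after `<eos>` has been appended. A target longer than `max_len - 2` tokens therefore loses its `<eos>`.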

def load_data_nmt(batch_size, max_len):  # This function is saved in d2l.
    # build the source (input) vocabulary and the target (label) vocabulary
    src_vocab, tgt_vocab = build_vocab(source), build_vocab(target)
    # padded id array and valid lengths of the source sentences
    src_array, src_valid_len = build_array(source, src_vocab, max_len, True)
    # padded id array and valid lengths of the target sentences
    tgt_array, tgt_valid_len = build_array(target, tgt_vocab, max_len, False)
    # build the training dataset
    train_data = data.TensorDataset(src_array, src_valid_len, tgt_array, tgt_valid_len)
    # data iterator that shuffles the examples
    train_iter = data.DataLoader(train_data, batch_size, shuffle=True)
    return src_vocab, tgt_vocab, train_iter

src_vocab, tgt_vocab, train_iter = load_data_nmt(batch_size=2, max_len=8)
for X, X_valid_len, Y, Y_valid_len in train_iter:
    # X_valid_len: valid lengths of the source sentences
    # Y_valid_len: valid lengths of the target sentences
    print('X =', X.type(torch.int32), '\nValid lengths for X =', X_valid_len,
          '\nY =', Y.type(torch.int32), '\nValid lengths for Y =', Y_valid_len)
    break

output:

X = tensor([[ 5, 24, 3, 4, 0, 0, 0, 0],
        [ 12, 1388, 7, 3, 4, 0, 0, 0]], dtype=torch.int32)
Valid lengths for X = tensor([4, 5])
Y = tensor([[ 1, 23, 46, 3, 3, 4, 2, 0],
        [ 1, 15, 137, 27, 4736, 4, 2, 0]], dtype=torch.int32)
Valid lengths for Y = tensor([7, 7])
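`TensorDataset` and `DataLoader` are standard PyTorch classes. The minimal sketch below uses made-up tensors. It shows that each batch comes back as the same four-tuple `(X, X_valid_len, Y, Y_valid_len)`. It also shows that the last batch can be smaller than `batch_size`, because `drop_last` defaults to `False`.

```python
import torch
from torch.utils import data

# Toy stand-ins for the padded id arrays and valid lengths (hypothetical values)
src_array = torch.tensor([[5, 24, 4, 0], [12, 7, 4, 0], [9, 4, 0, 0]])
src_valid_len = (src_array != 0).sum(1)
tgt_array = torch.tensor([[1, 23, 4, 2], [1, 15, 4, 2], [1, 8, 2, 0]])
tgt_valid_len = (tgt_array != 0).sum(1)

# TensorDataset pairs the four tensors along their first (example) dimension
train_data = data.TensorDataset(src_array, src_valid_len, tgt_array, tgt_valid_len)
train_iter = data.DataLoader(train_data, batch_size=2, shuffle=True)

batch_sizes = []
for X, X_valid_len, Y, Y_valid_len in train_iter:
    batch_sizes.append(X.shape[0])
    print(X.shape, X_valid_len, Y.shape, Y_valid_len)
print(sorted(batch_sizes))  # [1, 2]: 3 examples split into batches of 2 and 1
```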

Encoder-Decoder

encoder: maps the input to a hidden state

decoder: maps the hidden state to the output

# Base class for encoders: concrete encoders override forward
class Encoder(nn.Module):
    def __init__(self, **kwargs):
        super(Encoder, self).__init__(**kwargs)

    def forward(self, X, *args):
        raise NotImplementedError

# Base class for decoders: init_state turns the encoder outputs into the decoder's initial state
class Decoder(nn.Module):
    def __init__(self, **kwargs):
        super(Decoder, self).__init__(**kwargs)

    def init_state(self, enc_outputs, *args):
        raise NotImplementedError

    def forward(self, X, state):
        raise NotImplementedError

# Chains the two: encode the source, initialize the decoder state, then decode
class EncoderDecoder(nn.Module):
    def __init__(self, encoder, decoder, **kwargs):
        super(EncoderDecoder, self).__init__(**kwargs)
        self.encoder = encoder
        self.decoder = decoder

    def forward(self, enc_X, dec_X, *args):
        enc_outputs = self.encoder(enc_X, *args)
        dec_state = self.decoder.init_state(enc_outputs, *args)
        return self.decoder(dec_X, dec_state)

This encoder-decoder structure can also be applied to dialogue systems and other generative tasks.
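The self-contained sketch below shows how the three classes interact. `MeanEncoder` and `ContextDecoder` are hypothetical toy modules with no RNN at all. Their only purpose is to show the call order inside `EncoderDecoder.forward`: the encoder runs first, then `decoder.init_state`, then the decoder. `EncoderDecoder` is re-declared so that the block runs on its own.

```python
import torch
from torch import nn

class EncoderDecoder(nn.Module):
    """Same wiring as the EncoderDecoder class above."""
    def __init__(self, encoder, decoder):
        super().__init__()
        self.encoder = encoder
        self.decoder = decoder

    def forward(self, enc_X, dec_X, *args):
        enc_outputs = self.encoder(enc_X, *args)
        dec_state = self.decoder.init_state(enc_outputs, *args)
        return self.decoder(dec_X, dec_state)

class MeanEncoder(nn.Module):
    """Hypothetical toy encoder: averages the source embeddings into one context vector."""
    def __init__(self, vocab_size, num_hiddens):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, num_hiddens)

    def forward(self, X, *args):
        return self.embedding(X).mean(dim=1)  # (batch_size, num_hiddens)

class ContextDecoder(nn.Module):
    """Hypothetical toy decoder: adds the context vector to each target embedding."""
    def __init__(self, vocab_size, num_hiddens):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, num_hiddens)
        self.dense = nn.Linear(num_hiddens, vocab_size)

    def init_state(self, enc_outputs, *args):
        return enc_outputs  # the context vector is the whole "state"

    def forward(self, X, state):
        out = self.embedding(X) + state.unsqueeze(1)  # broadcast over time steps
        return self.dense(out), state

# Source and target vocabularies may differ in size (10 vs 12 here)
model = EncoderDecoder(MeanEncoder(10, 16), ContextDecoder(12, 16))
enc_X = torch.zeros((4, 7), dtype=torch.long)
dec_X = torch.zeros((4, 5), dtype=torch.long)
Y_hat, state = model(enc_X, dec_X)
print(Y_hat.shape)  # torch.Size([4, 5, 12])
```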

Sequence to Sequence model

class Seq2SeqEncoder(d2l.Encoder):
    def __init__(self, vocab_size, embed_size, num_hiddens, num_layers,
                 dropout=0, **kwargs):
        super(Seq2SeqEncoder, self).__init__(**kwargs)
        self.num_hiddens = num_hiddens
        self.num_layers = num_layers
        # nn.Embedding maps each word id to a word vector of the same length
        # embed_size: dimension of the word vectors
        self.embedding = nn.Embedding(vocab_size, embed_size)
        self.rnn = nn.LSTM(embed_size, num_hiddens, num_layers, dropout=dropout)

    # Build the all-zero initial state: (hidden state, memory cell)
    def begin_state(self, batch_size, device):
        return [torch.zeros(size=(self.num_layers, batch_size, self.num_hiddens), device=device),
                torch.zeros(size=(self.num_layers, batch_size, self.num_hiddens), device=device)]

    def forward(self, X, *args):
        X = self.embedding(X)  # X shape: (batch_size, seq_len, embed_size)
        X = X.transpose(0, 1)  # the RNN expects time to be the first axis
        # state = self.begin_state(X.shape[1], device=X.device)
        # (omitting the state makes nn.LSTM start from zeros, same as begin_state)
        out, state = self.rnn(X)
        # The shape of out is (seq_len, batch_size, num_hiddens).
        # state contains the hidden state and the memory cell
        # of the last time step, the shape is (num_layers, batch_size, num_hiddens)
        return out, state

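Parameters:

batch_size: number of sentences processed in one batch
seq_len: number of time steps, i.e. tokens per (padded) sentence
vocab_size: size of the vocabulary, i.e. the number of distinct token ids
embed_size: dimension of the vectors that the words of a sentence are mapped to
num_hiddens: number of hidden units in each LSTM layer
num_layers: number of stacked LSTM layers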

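# Instantiate the encoder
encoder = Seq2SeqEncoder(vocab_size=10, embed_size=8, num_hiddens=16, num_layers=2)
X = torch.zeros((4, 7), dtype=torch.long)
output, state = encoder(X)
output.shape, len(state), state[0].shape, state[1].shape
# output is time-major, (seq_len, batch_size, num_hiddens) = (7, 4, 16), because of the transpose in forward;
# state is the LSTM's (hidden state, memory cell) pair, each of shape (num_layers, batch_size, num_hiddens) = (2, 4, 16).
# For more background, see the LSTM part of "Datawhale Group Learning Check-in Camp, Task 9: Advanced Recurrent Neural Networks".

output:

(torch.Size([7, 4, 16]), 2, torch.Size([2, 4, 16]), torch.Size([2, 4, 16]))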
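The transpose in `forward` exists because `nn.LSTM` expects time-major input by default. The minimal sketch below compares it with the alternative, `batch_first=True`, using the same sizes as the example above. Only the output layout changes. The hidden and cell states keep the shape `(num_layers, batch_size, num_hiddens)` either way.

```python
import torch
from torch import nn

batch_size, seq_len, vocab_size, embed_size, num_hiddens, num_layers = 4, 7, 10, 8, 16, 2
embedding = nn.Embedding(vocab_size, embed_size)
X = torch.zeros((batch_size, seq_len), dtype=torch.long)

# Time-major, as in Seq2SeqEncoder: transpose to (seq_len, batch_size, embed_size)
rnn_tm = nn.LSTM(embed_size, num_hiddens, num_layers)
out_tm, (h_tm, c_tm) = rnn_tm(embedding(X).transpose(0, 1))

# Batch-major alternative: batch_first=True accepts (batch_size, seq_len, embed_size)
rnn_bf = nn.LSTM(embed_size, num_hiddens, num_layers, batch_first=True)
out_bf, (h_bf, c_bf) = rnn_bf(embedding(X))

print(out_tm.shape, h_tm.shape)  # torch.Size([7, 4, 16]) torch.Size([2, 4, 16])
print(out_bf.shape, h_bf.shape)  # torch.Size([4, 7, 16]) torch.Size([2, 4, 16])
```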

Decoder

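The code is similar to the encoder. The differences are that init_state uses the encoder's final state as the decoder's initial state, and that a dense layer maps each hidden state to scores over the target vocabulary.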

class Seq2SeqDecoder(d2l.Decoder):
    def __init__(self, vocab_size, embed_size, num_hiddens, num_layers,
                 dropout=0, **kwargs):
        super(Seq2SeqDecoder, self).__init__(**kwargs)
        self.embedding = nn.Embedding(vocab_size, embed_size)
        self.rnn = nn.LSTM(embed_size, num_hiddens, num_layers, dropout=dropout)
        # Project each hidden vector onto the vocabulary; the highest score marks the most likely word
        self.dense = nn.Linear(num_hiddens, vocab_size)

    def init_state(self, enc_outputs, *args):
        # enc_outputs is (out, state); the decoder starts from the encoder's final state
        return enc_outputs[1]

    def forward(self, X, state):
        X = self.embedding(X).transpose(0, 1)
        out, state = self.rnn(X, state)
        # Make the batch to be the first dimension to simplify loss computation.
        out = self.dense(out).transpose(0, 1)
        return out, state

# Instantiate the decoder
decoder = Seq2SeqDecoder(vocab_size=10, embed_size=8, num_hiddens=16, num_layers=2)
state = decoder.init_state(encoder(X))
out, state = decoder(X, state)
out.shape, len(state), state[0].shape, state[1].shape
# out is batch-major again: (batch_size, seq_len, vocab_size) = (4, 7, 10)

output:

(torch.Size([4, 7, 10]), 2, torch.Size([2, 4, 16]), torch.Size([2, 4, 16]))
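Because `init_state` simply returns the encoder's final `(h, c)`, the decoder's LSTM must have the same `num_layers` and `num_hiddens` as the encoder's. The sketch below uses plain `nn.LSTM` layers with made-up sizes. It shows that a matching state is accepted and that a mismatched one is rejected with a `RuntimeError`.

```python
import torch
from torch import nn

batch_size, num_layers = 4, 2
src = torch.randn(7, batch_size, 8)  # (seq_len, batch_size, embed_size), time-major
tgt = torch.randn(5, batch_size, 8)

encoder_rnn = nn.LSTM(8, 16, num_layers)
_, (h, c) = encoder_rnn(src)         # each of shape (num_layers, batch_size, 16)

# Matching sizes: the decoder can start directly from the encoder's final (h, c)
decoder_rnn = nn.LSTM(8, 16, num_layers)
out, _ = decoder_rnn(tgt, (h, c))
print(out.shape)  # torch.Size([5, 4, 16])

# Mismatched hidden size: nn.LSTM rejects the state
bad_decoder_rnn = nn.LSTM(8, 32, num_layers)
try:
    bad_decoder_rnn(tgt, (h, c))
    mismatch_rejected = False
except RuntimeError:
    mismatch_rejected = True
print(mismatch_rejected)  # True
```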

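Loss function

Padding positions must not contribute to the loss, so the values beyond each sequence's valid length are masked out first.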

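def SequenceMask(X, X_len, value=0):
    # X: a batch whose second dimension is the (padded) sequence length
    # X_len: valid length of each sequence in the batch
    maxlen = X.size(1)  # padded length of every sequence
    # torch.arange(maxlen)[None, :] must be on the same device as X_len (required when using a GPU);
    # comparing (1, maxlen) with (batch_size, 1) broadcasts to a (batch_size, maxlen) mask
    mask = torch.arange(maxlen)[None, :].to(X_len.device) < X_len[:, None]
    X[~mask] = value  # note: X is modified in place
    return X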

X = torch.tensor([[1, 2, 3], [4, 5, 6]])
SequenceMask(X, torch.tensor([1, 2]))

output:

tensor([[1, 0, 0],
        [4, 5, 0]])

X = torch.ones((2, 3, 4))
SequenceMask(X, torch.tensor([1, 2]), value=-1)

output:

tensor([[[ 1., 1., 1., 1.],
         [-1., -1., -1., -1.],
         [-1., -1., -1., -1.]],

        [[ 1., 1., 1., 1.],
         [ 1., 1., 1., 1.],
         [-1., -1., -1., -1.]]])
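`SequenceMask` modifies `X` in place, which is easy to trip over. A non-mutating variant using `Tensor.masked_fill` is sketched below. `sequence_mask` is a hypothetical name. The trailing `unsqueeze` calls are this sketch's way of letting the `(batch_size, maxlen)` mask broadcast over 3-D inputs such as the second example above.

```python
import torch

def sequence_mask(X, valid_len, value=0):
    """Non-mutating variant of SequenceMask (a sketch): returns a new tensor."""
    maxlen = X.size(1)
    # (1, maxlen) < (batch_size, 1) broadcasts to a (batch_size, maxlen) boolean mask
    mask = torch.arange(maxlen, device=valid_len.device)[None, :] < valid_len[:, None]
    # For inputs with more dimensions, add trailing singleton dims so the mask broadcasts
    while mask.dim() < X.dim():
        mask = mask.unsqueeze(-1)
    return X.masked_fill(~mask, value)

X = torch.tensor([[1, 2, 3], [4, 5, 6]])
print(sequence_mask(X, torch.tensor([1, 2])).tolist())  # [[1, 0, 0], [4, 5, 0]]
print(X.tolist())                                       # unchanged: [[1, 2, 3], [4, 5, 6]]
Y3 = sequence_mask(torch.ones((2, 3, 4)), torch.tensor([1, 2]), value=-1)
```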

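```python
class MaskedSoftmaxCELoss(nn.CrossEntropyLoss):
    # pred shape: (batch_size, seq_len, vocab_size)
    # label shape: (batch_size, seq_len)
    # valid_length shape: (batch_size, )
    def forward(self, pred, label, valid_length):
        # the sample weights shape should be (batch_size, seq_len)
        # start with a weight of 1 for every label position
        weights = torch.ones_like(label)
        # SequenceMask keeps the weight 1 within the valid length and sets the rest to 0
        weights = SequenceMask(weights, valid_length).float()
        self.reduction = 'none'  # keep one loss value per token
        # nn.CrossEntropyLoss expects the class dimension second: (batch_size, vocab_size, seq_len)
        output = super(MaskedSoftmaxCELoss, self).forward(pred.transpose(1, 2), label)
        # average over dim 1 (the time steps of each sentence), giving one loss per sentence;
        # the divisor is seq_len, padded positions included
        return (output * weights).mean(dim=1)
```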

loss = MaskedSoftmaxCELoss()
loss(torch.ones((3, 4, 10)), torch.ones((3, 4), dtype=torch.long), torch.tensor([4, 3, 0]))

output:

tensor([2.3026, 1.7269, 0.0000])
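With all-ones logits, each of the 10 classes gets probability 1/10, so each token's cross-entropy is ln 10 ≈ 2.3026. The second sentence has 3 valid tokens out of 4. Because `MaskedSoftmaxCELoss` averages over all `seq_len` positions, its loss is 3/4 × 2.3026 ≈ 1.7269. The third sentence has no valid tokens, so its loss is 0. The sketch below recomputes these numbers with `torch.nn.functional.cross_entropy` instead of the class above.

```python
import math
import torch
import torch.nn.functional as F

pred = torch.ones((3, 4, 10))                 # all-equal logits over 10 classes
label = torch.ones((3, 4), dtype=torch.long)
valid_len = torch.tensor([4, 3, 0])

# Per-token cross-entropy; F.cross_entropy wants the class dimension second: (3, 10, 4)
per_token = F.cross_entropy(pred.transpose(1, 2), label, reduction='none')  # shape (3, 4)
# 1.0 inside the valid length, 0.0 beyond it
mask = (torch.arange(4)[None, :] < valid_len[:, None]).float()
# Average over all 4 positions, padded ones included, as MaskedSoftmaxCELoss does
per_sentence = (per_token * mask).mean(dim=1)

print(math.log(10))  # 2.302585092994046
print(per_sentence)
```

To average over valid tokens only, divide the masked sum by `valid_len` instead of calling `mean`. Sequences whose valid length is 0 then need separate handling.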

Training

def train_ch7(model, data_iter, lr, num_epochs, device):  # Saved in d2l
    # When training on a GPU, the model and every tensor fed to it must be moved with .to(device)
    # so they sit on the same device; on a CPU this is not necessary
    model.to(device)
    optimizer = optim.Adam(model.parameters(), lr=lr)
    loss = MaskedSoftmaxCELoss()
    tic = time.time()  # start timing
    for epoch in range(1, num_epochs + 1):
        l_sum, num_tokens_sum = 0.0, 0.0
        for batch in data_iter:
            optimizer.zero_grad()
            X, X_vlen, Y, Y_vlen = [x.to(device) for x in batch]
            # Teacher forcing: the decoder input drops the last position, the label drops <bos>
            Y_input, Y_label, Y_vlen = Y[:, :-1], Y[:, 1:], Y_vlen - 1
            Y_hat, _ = model(X, Y_input, X_vlen, Y_vlen)
            # Y_hat is the prediction, Y_label the ground truth
            l = loss(Y_hat, Y_label, Y_vlen).sum()
            l.backward()
            with torch.no_grad():  # gradient clipping
                d2l.grad_clipping_nn(model, 5, device)
            num_tokens = Y_vlen.sum().item()
            optimizer.step()
            l_sum += l.sum().item()
            num_tokens_sum += num_tokens
        if epoch % 50 == 0:
            print("epoch {0:4d},loss {1:.3f}, time {2:.1f} sec".format(
                epoch, (l_sum / num_tokens_sum), time.time() - tic))
            tic = time.time()  # restart the timer
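The slicing `Y[:, :-1]`, `Y[:, 1:]` sets up teacher forcing. The decoder reads the ground-truth sequence starting with `<bos>` and is trained to predict the same sequence shifted one step to the left. The made-up batch below assumes the ids `<pad>` = 0, `<bos>` = 1 and `<eos>` = 2, and shows what each piece looks like.

```python
import torch

# One target batch as produced by build_array (made-up ids)
Y = torch.tensor([[1, 23, 46, 4, 2, 0],
                  [1, 15, 4, 2, 0, 0]])
Y_vlen = torch.tensor([5, 4])

# Same slicing as in train_ch7
Y_input, Y_label, Y_vlen_shifted = Y[:, :-1], Y[:, 1:], Y_vlen - 1
print(Y_input.tolist())         # [[1, 23, 46, 4, 2], [1, 15, 4, 2, 0]]: decoder input, starts with <bos>
print(Y_label.tolist())         # [[23, 46, 4, 2, 0], [15, 4, 2, 0, 0]]: what the decoder should predict
print(Y_vlen_shifted.tolist())  # [4, 3]
```

Subtracting 1 from the valid length drops `<bos>` from the count. `<eos>` stays inside the valid part of `Y_label`, so the model is also trained to emit `<eos>`. On gradient clipping: `d2l.grad_clipping_nn` comes from the course's d2l package. In plain PyTorch, `torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=5)` clips the global gradient norm when called between `backward()` and `step()`. It is presumably close to what the d2l helper does, but that is an assumption, since the helper's source is not shown here.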

embed_size, num_hiddens, num_layers, dropout = 32, 32, 2, 0.0
batch_size, num_examples, max_len = 64, 1e3, 10
lr, num_epochs, ctx = 0.005, 300, d2l.try_gpu()
src_vocab, tgt_vocab, train_iter = d2l.load_data_nmt(
    batch_size, max_len, num_examples)
encoder = Seq2SeqEncoder(
    len(src_vocab), embed_size, num_hiddens, num_layers, dropout)
decoder = Seq2SeqDecoder(
    len(tgt_vocab), embed_size, num_hiddens, num_layers, dropout)
model = d2l.EncoderDecoder(encoder, decoder)
train_ch7(model, train_iter, lr, num_epochs, ctx)

output:

epoch 50,loss 0.093, time 38.2 sec
epoch 100,loss 0.046, time 37.9 sec
epoch 150,loss 0.032, time 36.8 sec
epoch 200,loss 0.027, time 37.5 sec
epoch 250,loss 0.026, time 37.8 sec
epoch 300,loss 0.025, time 37.3 sec

Testing

def translate_ch7(model, src_sentence, src_vocab, tgt_vocab, max_len, device):
    # src_sentence: the input sentence as a string
    src_tokens = src_vocab[src_sentence.lower().split(' ')]  # tokenize and map to ids
    src_len = len(src_tokens)  # valid length

if src_len ' + translate_ch7(

model, sentence, src_vocab, tgt_vocab, max_len, ctx))

output:

Go . => va !
Wow ! => !
I'm OK . => ça va .
I won ! => j'ai gagné !
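The body of `translate_ch7` is cut off in this copy of the post, so the sketch below is not a reconstruction of the author's code. It is a hedged illustration of greedy decoding, the usual way a trained seq2seq model produces a translation. The encoder reads the source once, and the decoder starts from `<bos>`. At each step the highest-scoring token is appended and fed back, until `<eos>` or `max_len` is reached. `TinyEncoder`, `TinyDecoder` and `greedy_translate` are hypothetical. They follow the same interface as `Seq2SeqEncoder`/`Seq2SeqDecoder` so that the block runs on its own. The weights are untrained, so the resulting ids are arbitrary.

```python
import torch
from torch import nn

class TinyEncoder(nn.Module):
    """Hypothetical LSTM encoder with the same interface as Seq2SeqEncoder."""
    def __init__(self, vocab_size, embed_size, num_hiddens, num_layers):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, embed_size)
        self.rnn = nn.LSTM(embed_size, num_hiddens, num_layers)

    def forward(self, X, *args):
        return self.rnn(self.embedding(X).transpose(0, 1))  # (out, (h, c))

class TinyDecoder(nn.Module):
    """Hypothetical LSTM decoder with the same interface as Seq2SeqDecoder."""
    def __init__(self, vocab_size, embed_size, num_hiddens, num_layers):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, embed_size)
        self.rnn = nn.LSTM(embed_size, num_hiddens, num_layers)
        self.dense = nn.Linear(num_hiddens, vocab_size)

    def init_state(self, enc_outputs, *args):
        return enc_outputs[1]

    def forward(self, X, state):
        out, state = self.rnn(self.embedding(X).transpose(0, 1), state)
        return self.dense(out).transpose(0, 1), state

@torch.no_grad()
def greedy_translate(encoder, decoder, src_ids, bos, eos, max_len):
    """Greedy decoding sketch: feed back the highest-scoring token at each step."""
    enc_X = torch.tensor([src_ids])        # add a batch dimension: (1, src_len)
    state = decoder.init_state(encoder(enc_X))
    dec_X = torch.tensor([[bos]])          # start with <bos>: (1, 1)
    predicted = []
    for _ in range(max_len):
        Y, state = decoder(dec_X, state)   # Y: (1, 1, vocab_size)
        dec_X = Y.argmax(dim=2)            # most likely next token, shape (1, 1)
        token = dec_X.item()
        if token == eos:                   # stop once <eos> is produced
            break
        predicted.append(token)
    return predicted

torch.manual_seed(0)
encoder = TinyEncoder(vocab_size=10, embed_size=8, num_hiddens=16, num_layers=2)
decoder = TinyDecoder(vocab_size=12, embed_size=8, num_hiddens=16, num_layers=2)
ids = greedy_translate(encoder, decoder, src_ids=[5, 6, 4], bos=1, eos=2, max_len=10)
print(ids)  # untrained weights: arbitrary ids, at most max_len of them
```

With a trained model, the returned ids would still need to be mapped back to words through the target vocabulary's id-to-token mapping.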
