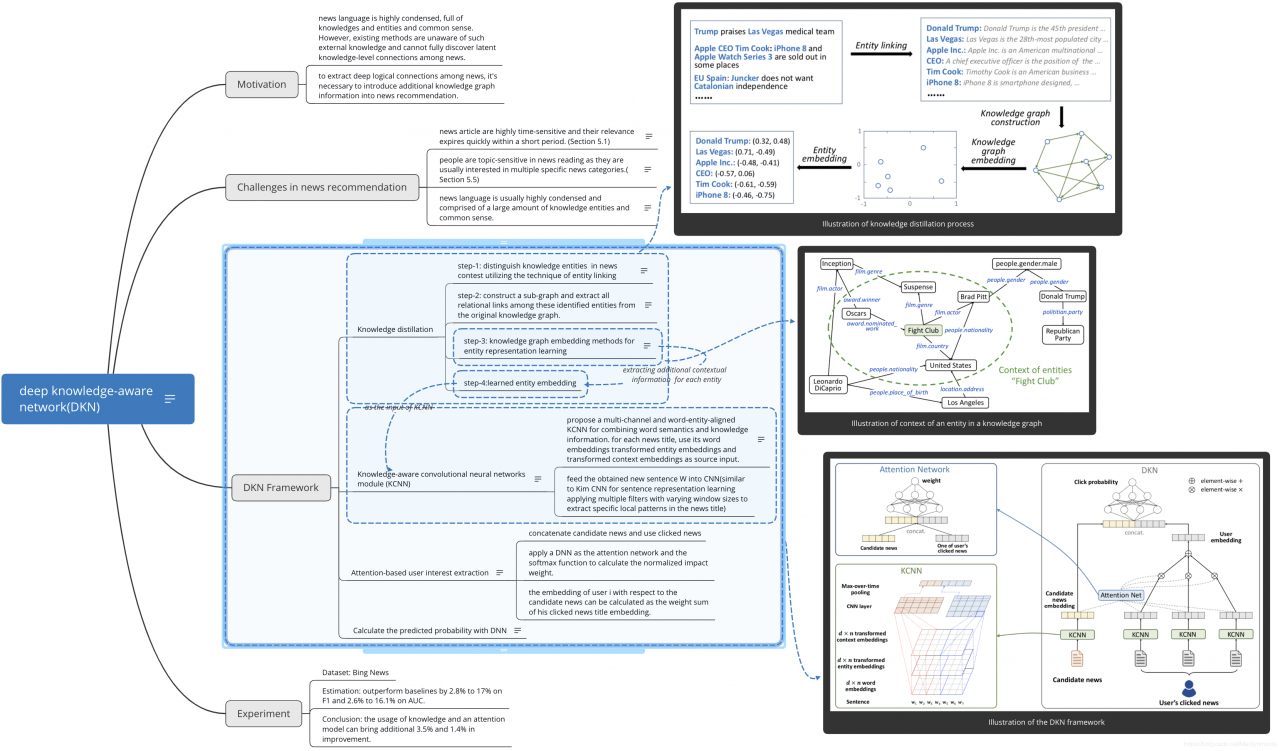

2018_WWW_DKN- Deep Knowledge-Aware Network for News Recommendation阅读笔记

Xmind思维导图:

notes: to disambiguate mentions in texts by associating them with predefined entities in a knowledge graph. step-2: construct a sub-graph and extract all relational links among these identified entities from the original knowledge graph.

notes: Note that the relations among identified entities only may be sparse and lack diversity. Therefore,expand the knowledge sub-graph to all entities within one hop of identified ones step-3: knowledge graph embedding methods for entity representation learning

notes: 1) Given the extracted knowledge graph, a great many knowledge graph embedding methods, such as TransE , TransH, TransR , and TransD, can be utilized for entity representation learning. 2)the goal of knowledge graph embedding is to learn a low-dimensional representation vector for each entity and relation that preserves the structural information of the original knowledge graph. 3) It should be noted that though state-of-the-art knowledge graph embedding methods could generally preserve the structural information in the original graph, we find that the information of learned embedding for a single entity is still limited when used in subsequent recommendations. To help identify the position of entities in the knowledge graph, we propose extracting additional contextual information for each entity. step-4:learned entity embedding Knowledge-aware convolutional neural networks module (KCNN)

notes: KCNN is used to process each piece of title and generate an embedding vector.

KCNN treats words and entities as multiple channels, and explicitly keeps their alignment relationship during convolution. propose a multi-channel and word-entity-aligned KCNN for combining word semantics and knowledge information. for each news title, use its word embeddings transformed entity embeddings and transformed context embeddings as source input.

notes: First, try to combine words and associated entities is to treat the entities as “pseudo words” and concatenate them to the word sequence W.e.g.W = [w1 w2 … wn et1 et2 …]However, we argue that this simple concatenating strategy has the following limitations: 1) The concatenating strategy breaks up the connection between words and associated entities and is unaware of their alignment. 2) Word embeddings and entity embeddings are learned by different methods, meaning it is not suitable to convolute them together in a single vector space.3) The concatenating strategy implicitly forces word embeddings and entity embeddings to have the same dimension, which may not be optimal in practical settings since the optimal dimensions for word and entity embeddings may differ from each other.Since the transformation function is continuous, it can map the entity embeddings and context embeddings from the entity space to the word space while preserving their original spatial relationship. feed the obtained new sentence W into CNN(similar to Kim CNN for sentence representation learning applying multiple filters with varying window sizes to extract specific local patterns in the news title) Attention-based user interest extraction

notes: Motivation:To get final embedding of the user with respect to the current candidate news, we use an attention-based method to automatically match the candidate news to each piece of his clicked news, and aggregate the user’s historical interests with different weights. concatenate candidate news and use clicked news

apply a DNN as the attention network and the softmax function to calculate the normalized impact weight.

the embedding of user i with respect to the candidate news can be calculated as the weight sum of his clicked news title embedding. Calculate the predicted probability with DNN Calculate the predicted probability with DNN Experiment Dataset: Bing News Estimation: outperform baselines by 2.8% to 17% on F1 and 2.6% to 16.1% on AUC. Conclusion: the usage of knowledge and an attention model can bring additional 3.5% and 1.4% in improvement.

作者:Marilynmontu