Crawling Huya streamers' live-stream cover images with Python (Scrapy)

Goal: use the Scrapy framework to download the cover images of live streams on Huya.

Author: firstlt0217

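Installing Python 3.x and Scrapy is not covered here (Scrapy itself can be installed with pip: pip install Scrapy); look up a tutorial if needed. The rest of this article assumes both are already set up on Windows. The ImagesPipeline used later also requires Pillow (pip install Pillow).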
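Before creating the project, it can help to confirm that the required packages are importable from the Python interpreter you will use. The following is a minimal sketch using only the standard library; the helper name missing_packages is hypothetical. It checks for Scrapy and Pillow, whose import name is PIL.

```python
import importlib.util


def missing_packages(names):
    """Return the top-level module names from `names` that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # scrapy: the crawler framework; PIL: Pillow, needed by ImagesPipeline
    missing = missing_packages(["scrapy", "PIL"])
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("Scrapy and Pillow are installed")
```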

1. Create a new project (scrapy startproject)

Before crawling, you need to create a new Scrapy project. Press Win+R to open a cmd window and run the following commands:

```
cd desktop
scrapy startproject huya
```

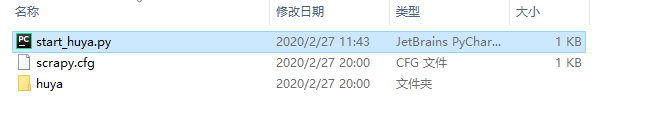

This creates a huya project folder on the desktop. The files in it are:

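- scrapy.cfg: the project's configuration file
- huya/: the project's Python module; your code is imported from here
- huya/items.py: the project's item definitions (the fields to be scraped)
- huya/pipelines.py: the project's item pipelines
- huya/settings.py: the project's settings
- huya/spiders/: the directory that holds the spider code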

Next, change into the spiders directory (huya/huya/spiders/ under the desktop) by entering the following in cmd:

```
cd huya
cd huya
cd spiders
```

From that directory, run the following command. It creates huyaspider.py in huya/spiders/ and restricts the crawl to the domain huya.com:

```
scrapy genspider huyaspider "huya.com"
```

2. The code

1) items.py

Open items.py in huya/huya/ and change it to:

```python
# -*- coding: utf-8 -*-
import scrapy


class HuyaItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()

    # Room title (used later as the image file name)
    nickname = scrapy.Field()
    # Cover image URL
    imagelink = scrapy.Field()
    # Path where the image is stored
    imagePath = scrapy.Field()
```

2) huyaspider.py

Open huyaspider.py in huya/huya/spiders/ and change it to:

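```python
# -*- coding: utf-8 -*-
import scrapy

from huya.items import HuyaItem


class HuyaspiderSpider(scrapy.Spider):
    name = 'huyaspider'
    allowed_domains = ['huya.com']
    start_urls = ['https://www.huya.com/g/2168']

    def parse(self, response):
        # Use Scrapy's built-in XPath selectors to get every stream entry (li node)
        image_list = response.xpath('//div[@class="box-bd"]/ul/li')
        for img_each in image_list:
            nickname = img_each.xpath("./a/img[@class='pic']/@title").get()
            imagelink = img_each.xpath("./a/img[@class='pic']/@data-original").get()
            # Skip entries without a cover image instead of failing with IndexError
            if not nickname or not imagelink:
                continue
            huyaItem = HuyaItem()
            huyaItem["nickname"] = nickname
            # urljoin turns relative or protocol-relative URLs into absolute ones
            huyaItem["imagelink"] = response.urljoin(imagelink)
            yield huyaItem
```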

3) pipelines.py

Open pipelines.py in huya/huya/ and change it to:

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

import os
import re

import scrapy
from scrapy.exceptions import DropItem
from scrapy.pipelines.images import ImagesPipeline
from scrapy.utils.project import get_project_settings


class HuyaPipeline(ImagesPipeline):
    # Read the values configured in settings.py
    IMAGES_STORE = get_project_settings().get("IMAGES_STORE")
    headers = get_project_settings().getdict("DEFAULT_REQUEST_HEADERS")

    def get_media_requests(self, item, info):
        image_url = item["imagelink"]
        # Send the request headers, mainly to get past basic anti-scraping checks
        yield scrapy.Request(image_url, headers=self.headers)

    def item_completed(self, results, item, info):
        # Paths (relative to IMAGES_STORE) of the successfully downloaded images
        image_paths = [x["path"] for ok, x in results if ok]
        if not image_paths:
            raise DropItem("Image download failed: %s" % item["imagelink"])

        # Create the directory if it does not exist
        os.makedirs(self.IMAGES_STORE, exist_ok=True)

        # Replace characters that are not allowed in Windows file names
        safe_name = re.sub(r'[\\/:*?"<>|]', "_", item["nickname"])
        new_path = os.path.join(self.IMAGES_STORE, safe_name + ".jpg")
        # os.replace overwrites an existing file; os.rename fails on Windows if it exists
        os.replace(os.path.join(self.IMAGES_STORE, image_paths[0]), new_path)

        item["imagePath"] = new_path
        return item
```

4) settings.py

Open settings.py in huya/huya/ and add the following line, replacing USERNAME with your Windows user name:

```python
IMAGES_STORE = "C:/Users/USERNAME/Desktop/huya/Images"
```
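Rather than hard-coding the user name, note that settings.py is ordinary Python, so the path can be computed when the file is loaded. A sketch, assuming the desktop is the standard Desktop folder under the user's home directory (this does not hold if, for example, OneDrive has redirected the desktop):

```python
import os

# settings.py is plain Python, so the path can be computed when it is loaded.
# Assumption: the desktop is ~/Desktop (C:/Users/<name>/Desktop on Windows).
IMAGES_STORE = os.path.join(os.path.expanduser("~"), "Desktop", "huya", "Images")
```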

In the same file, change the following settings:

```python
# Obey robots.txt rules
ROBOTSTXT_OBEY = False

# Override the default request headers:
DEFAULT_REQUEST_HEADERS = {
    'User-Agent': 'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)',
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    # 'Accept-Language': 'en',
}

# Configure item pipelines
# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'huya.pipelines.HuyaPipeline': 300,
}
```
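The number 300 is the pipeline's order value: when several pipelines are enabled, every item passes through them from the lowest value to the highest, and the values are customarily kept in the 0-1000 range. A small standard-library illustration of that ordering; the second pipeline name below is hypothetical.

```python
ITEM_PIPELINES = {
    "huya.pipelines.HuyaPipeline": 300,
    "huya.pipelines.JsonExportPipeline": 800,  # hypothetical second pipeline
}

# Items flow through the enabled pipelines in ascending order of their values
run_order = sorted(ITEM_PIPELINES, key=ITEM_PIPELINES.get)
print(run_order)
```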

3. Run the crawler

From a cmd window inside the project directory:

```
scrapy crawl huyaspider
```
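scrapy crawl has to be run inside the project: Scrapy locates the project by looking for scrapy.cfg in the current directory and then in each parent directory, which is why the command also works from huya/huya/spiders. The following is a simplified re-creation of that lookup for illustration only, not Scrapy's own code; find_scrapy_cfg is a hypothetical name.

```python
from pathlib import Path


def find_scrapy_cfg(start="."):
    """Return the closest scrapy.cfg in `start` or its parents, or None."""
    current = Path(start).resolve()
    for directory in (current, *current.parents):
        candidate = directory / "scrapy.cfg"
        if candidate.is_file():
            return candidate
    return None


if __name__ == "__main__":
    print(find_scrapy_cfg() or "Not inside a Scrapy project")
```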

To launch it from PyCharm instead, create start_huya.py in the huya/ directory and add:

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
from scrapy import cmdline

cmdline.execute("scrapy crawl huyaspider".split())
```
