Scraping Amazon Product Reviews with Python

Author: Mr.Tao~

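I. Lately I have been working on scraping Amazon review data. Amazon's anti-scraping measures are fairly strict: every so often a cookie or an IP address gets banned. The page layout is also not very regular, which makes life harder for anyone writing a crawler. After a day of digging, here are my results and what I learned along the way.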

1. First, the libraries we need. We match content with XPath and store the scraped data in MySQL, so these are the imports:

```python
import requests
import lxml.html
import pandas as pd
import pymysql
import random
import time
```

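2. Next, fetch the page for a given ASIN, using request headers that carry the cookie

```python
def get_response(ASIN, p, headers):
    # The XPaths below target the cm_cr-review_list container, which is part of
    # the review listing page, so request /product-reviews/ rather than /dp/
    url = 'https://www.amazon.com/product-reviews/' + str(ASIN)
    # Let requests build the query string (?pageNumber=p)
    resp = requests.get(url, params={'pageNumber': p}, headers=headers, timeout=8)
    # Fail loudly on HTTP errors instead of parsing an error page
    resp.raise_for_status()
    response = lxml.html.fromstring(resp.text)
    return response
```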
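A page number only reaches the server when it follows a `?` as a query parameter. The sketch below shows how such a URL can be assembled and inspected without sending a request. The helper name `build_review_url`, the placeholder ASIN `B000000000`, and the `/product-reviews/<ASIN>` path are assumptions made for illustration.

```python
from urllib.parse import urlencode

# Assumed path for the paginated review listing of one product
REVIEWS_BASE = 'https://www.amazon.com/product-reviews/'


def build_review_url(asin, page):
    """Return the review-list URL for one ASIN and page (hypothetical helper)."""
    # urlencode escapes the value and produces 'pageNumber=<page>'
    query = urlencode({'pageNumber': page})
    return REVIEWS_BASE + str(asin) + '?' + query


# 'B000000000' is a placeholder ASIN, not a real product
print(build_review_url('B000000000', 2))
```

Passing `params={'pageNumber': p}` to `requests.get` builds the same kind of query string, which avoids concatenating it by hand.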

3. Next comes the main part: the page fetched with requests is parsed by lxml and matched with XPath

```python
def Spider(response, j):
    info = []
    # Every path starts from the j-th review block in the list
    base = '//*[@id="cm_cr-review_list"]/div[%s]/div[1]/div[1]' % str(j)

    def first(path):
        # Return the first matched text node, stripped, or None if nothing matched
        nodes = response.xpath(base + path)
        return nodes[0].strip() if nodes else None

    # Review date
    new_date = first('/span/text()')
    # Reviewer name
    new_name = first('/div[1]/a/div[2]/span/text()')
    # Star rating
    new_star = first('/div[2]/a/i/span/text()')
    # Size purchased by the reviewer
    new_size = first('/div[3]/a/text()')
    # Color purchased by the reviewer
    new_color = first('/div[3]/a/text()[2]')
    # Review text; it can span several text nodes, so join them
    new_content = ' '.join(t.strip() for t in response.xpath(base + '/div[4]/span/text()'))
    # print(new_size, new_color)
    # On some pages the reviewer name is nested two levels deeper
    if new_name is None:
        new_name = first('/div[1]/div[1]/div[1]/a/div[2]/span/text()')
    # With only one variation attribute, treat it as the color
    if new_color is None:
        new_color = new_size
        new_size = None
    # No star rating means there is no review at position j
    if new_star is None:
        return info
    info.append([new_date, new_name, new_color, new_size, new_star, new_content])
    return info
```
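The star rating and date come back from the page as raw text, which is awkward to sort or aggregate once stored. As a minimal sketch, the two helpers below turn them into a number and a date. They assume the rating text looks like `5.0 out of 5 stars` and that the date text ends in an English `Month D, YYYY`; both formats are assumptions about the page, and the names `parse_star` and `parse_review_date` are hypothetical.

```python
import re
from datetime import datetime


def parse_star(text):
    """Return the leading number of a rating string as a float, or None."""
    if not text:
        return None
    match = re.match(r'\s*(\d+(?:\.\d+)?)', text)
    return float(match.group(1)) if match else None


def parse_review_date(text):
    """Return a date parsed from a trailing 'Month D, YYYY', or None."""
    if not text:
        return None
    match = re.search(r'([A-Za-z]+ \d{1,2}, \d{4})\s*$', text)
    if not match:
        return None
    try:
        # %B expects a full English month name under the default C locale
        return datetime.strptime(match.group(1), '%B %d, %Y').date()
    except ValueError:
        return None
```

Both helpers return `None` rather than raising when the text does not match, so a layout change shows up as missing values instead of aborting the crawl.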

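4. Then store the scraped data in the MySQL database

```python
def save_mysql(info, ASIN):
    # Nothing to store when no review was found
    if not info:
        return
    db = pymysql.connect(host='localhost', user='root', password='root', database='jisulife', charset='utf8mb4')
    cursor = db.cursor()
    sql_insert = 'INSERT INTO amazon_reviews(ASIN, A_date, A_name, A_size, A_color, A_star, A_content) ' \
                 'VALUES (%s, %s, %s, %s, %s, %s, %s)'

    def scalar(value):
        # XPath results may be lists; collapse them so each placeholder gets one value
        if isinstance(value, (list, tuple)):
            parts = [str(v).strip() for v in value if v is not None]
            return ' '.join(parts) if parts else None
        return value

    # info[0] is [date, name, color, size, star, content]
    date, name, color, size, star, content = [scalar(v) for v in info[0]]
    # Pass size before color to match the column order of the INSERT
    cursor.execute(sql_insert, (str(ASIN), date, name, size, color, star, content))
    db.commit()
    db.close()
```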
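Opening a connection for every single review is slow when a page holds ten of them. A minimal sketch of inserting a whole page in one transaction with `executemany` follows. To keep it runnable without a MySQL server it swaps in the standard-library `sqlite3` module with an in-memory database; with pymysql the placeholders would be `%s` instead of `?`. The table definition, its column types, the function name `save_page`, and the sample rows are all assumptions, since the original schema is not shown.

```python
import sqlite3

# Hypothetical schema; the column names follow the INSERT statement above
CREATE_SQL = """
CREATE TABLE IF NOT EXISTS amazon_reviews (
    ASIN TEXT, A_date TEXT, A_name TEXT, A_size TEXT,
    A_color TEXT, A_star TEXT, A_content TEXT
)
"""
INSERT_SQL = ('INSERT INTO amazon_reviews'
              '(ASIN, A_date, A_name, A_size, A_color, A_star, A_content) '
              'VALUES (?, ?, ?, ?, ?, ?, ?)')


def save_page(conn, asin, rows):
    """Insert all reviews of one page in a single transaction.

    Each row is [date, name, color, size, star, content].
    """
    # Reorder color/size so the values line up with the column list
    params = [(asin, d, name, size, color, star, content)
              for d, name, color, size, star, content in rows]
    with conn:  # commits on success, rolls back if an insert fails
        conn.executemany(INSERT_SQL, params)
    return len(params)


conn = sqlite3.connect(':memory:')
conn.execute(CREATE_SQL)
# Made-up sample rows for illustration only
rows = [
    ['March 3, 2020', 'Alice', 'Red', 'M', '5.0 out of 5 stars', 'Great'],
    ['March 4, 2020', 'Bob', 'Blue', None, '3.0 out of 5 stars', 'OK'],
]
saved = save_page(conn, 'B000000000', rows)
stored = conn.execute(
    'SELECT A_size, A_color FROM amazon_reviews ORDER BY A_name').fetchall()
```

Collecting the rows of a page first and writing them together also means a failure halfway through a page leaves no partial page behind.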

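5. Define the main entry point

```python
if __name__ == '__main__':
    ASIN_list = pd.read_excel('E:\\WT\\project\\data\\ASIN.xls')['ASIN'].tolist()
    cookie_list = pd.read_excel('E:\\WT\\project\\data\\cookie.xls')['cookie'].tolist()
    cookie = random.choice(cookie_list)
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/'
                             '49.0.2623.112 Safari/537.36',
               'cookie': cookie
               }
    for ASIN in ASIN_list:
        try:
            # Amazon shows at most 500 pages of reviews
            for p in range(1, 501):
                res = get_response(ASIN, p, headers)
                saved = 0
                for j in range(1, 11):
                    info = Spider(res, j)
                    if info:
                        save_mysql(info, ASIN)
                        saved += 1
                # An empty page means the reviews for this ASIN are exhausted
                if saved == 0:
                    break
                # Pause between pages to keep the request rate low
                time.sleep(random.uniform(3, 5))
        except Exception as e:
            # Report the failure and move on to the next ASIN
            print('Failed on ASIN %s: %s' % (ASIN, e))
```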
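The main loop picks one cookie for the whole run, so a single banned cookie stops everything. A minimal sketch of retrying with a different cookie and a growing delay is shown below. The function `fetch_with_rotation`, its parameters, and the choice of exponential backoff are assumptions for illustration, not something Amazon documents; `fetch` is any callable that takes a cookie and either returns a page or raises.

```python
import itertools
import random
import time


def fetch_with_rotation(fetch, cookies, max_tries=3, base_delay=3.0, sleep=time.sleep):
    """Call fetch(cookie), switching to another cookie after each failure."""
    cookies = list(cookies)
    if not cookies:
        raise ValueError('at least one cookie is required')
    # Visit the cookies in random order, wrapping around if needed
    pool = itertools.cycle(random.sample(cookies, len(cookies)))
    last_error = None
    for attempt in range(max_tries):
        cookie = next(pool)
        try:
            return fetch(cookie)
        except Exception as exc:
            last_error = exc
            if attempt < max_tries - 1:
                # Wait longer after each failure, plus a little jitter
                sleep(base_delay * (2 ** attempt) + random.uniform(0, 1))
    raise last_error
```

In the main loop, `fetch` could be something like `lambda c: get_response(ASIN, p, dict(headers, cookie=c))`, so each retry goes out with a fresh cookie header.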

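6. The complete code is as follows

```python
import requests
import lxml.html
import pandas as pd
import pymysql
import random
import time


def get_response(ASIN, p, headers):
    # The XPaths below target the cm_cr-review_list container, which is part of
    # the review listing page, so request /product-reviews/ rather than /dp/
    url = 'https://www.amazon.com/product-reviews/' + str(ASIN)
    # Let requests build the query string (?pageNumber=p)
    resp = requests.get(url, params={'pageNumber': p}, headers=headers, timeout=8)
    # Fail loudly on HTTP errors instead of parsing an error page
    resp.raise_for_status()
    response = lxml.html.fromstring(resp.text)
    return response


def Spider(response, j):
    info = []
    # Every path starts from the j-th review block in the list
    base = '//*[@id="cm_cr-review_list"]/div[%s]/div[1]/div[1]' % str(j)

    def first(path):
        # Return the first matched text node, stripped, or None if nothing matched
        nodes = response.xpath(base + path)
        return nodes[0].strip() if nodes else None

    # Review date
    new_date = first('/span/text()')
    # Reviewer name
    new_name = first('/div[1]/a/div[2]/span/text()')
    # Star rating
    new_star = first('/div[2]/a/i/span/text()')
    # Size purchased by the reviewer
    new_size = first('/div[3]/a/text()')
    # Color purchased by the reviewer
    new_color = first('/div[3]/a/text()[2]')
    # Review text; it can span several text nodes, so join them
    new_content = ' '.join(t.strip() for t in response.xpath(base + '/div[4]/span/text()'))
    # print(new_size, new_color)
    # On some pages the reviewer name is nested two levels deeper
    if new_name is None:
        new_name = first('/div[1]/div[1]/div[1]/a/div[2]/span/text()')
    # With only one variation attribute, treat it as the color
    if new_color is None:
        new_color = new_size
        new_size = None
    # No star rating means there is no review at position j
    if new_star is None:
        return info
    info.append([new_date, new_name, new_color, new_size, new_star, new_content])
    return info


def save_mysql(info, ASIN):
    # Nothing to store when no review was found
    if not info:
        return
    db = pymysql.connect(host='localhost', user='root', password='root', database='jisulife', charset='utf8mb4')
    cursor = db.cursor()
    sql_insert = 'INSERT INTO amazon_reviews(ASIN, A_date, A_name, A_size, A_color, A_star, A_content) ' \
                 'VALUES (%s, %s, %s, %s, %s, %s, %s)'

    def scalar(value):
        # XPath results may be lists; collapse them so each placeholder gets one value
        if isinstance(value, (list, tuple)):
            parts = [str(v).strip() for v in value if v is not None]
            return ' '.join(parts) if parts else None
        return value

    # info[0] is [date, name, color, size, star, content]
    date, name, color, size, star, content = [scalar(v) for v in info[0]]
    # Pass size before color to match the column order of the INSERT
    cursor.execute(sql_insert, (str(ASIN), date, name, size, color, star, content))
    db.commit()
    db.close()


if __name__ == '__main__':
    ASIN_list = pd.read_excel('E:\\WT\\project\\data\\ASIN.xls')['ASIN'].tolist()
    cookie_list = pd.read_excel('E:\\WT\\project\\data\\cookie.xls')['cookie'].tolist()
    cookie = random.choice(cookie_list)
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/'
                             '49.0.2623.112 Safari/537.36',
               'cookie': cookie
               }
    for ASIN in ASIN_list:
        try:
            # Amazon shows at most 500 pages of reviews
            for p in range(1, 501):
                res = get_response(ASIN, p, headers)
                saved = 0
                for j in range(1, 11):
                    info = Spider(res, j)
                    if info:
                        save_mysql(info, ASIN)
                        saved += 1
                # An empty page means the reviews for this ASIN are exhausted
                if saved == 0:
                    break
                # Pause between pages to keep the request rate low
                time.sleep(random.uniform(3, 5))
        except Exception as e:
            # Report the failure and move on to the next ASIN
            print('Failed on ASIN %s: %s' % (ASIN, e))
```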

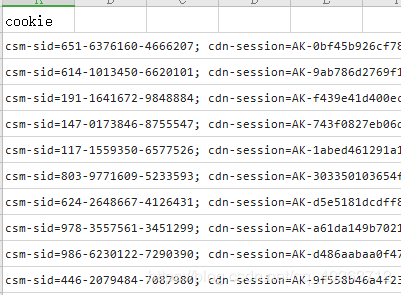

7.ASIN表、cookie表的排版如下图