Using coprocessors in HBase 2.1.6

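For HBase 2.1.6, writing coprocessors requires the hbase-common dependency. The coprocessor interfaces themselves (RegionObserver, RegionCoprocessor, and so on) live in the hbase-server artifact, so hbase-server is needed on the compile classpath as well.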

There are two kinds of coprocessors: observer coprocessors and endpoint coprocessors.

The steps for the first kind, the observer, are described first.

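I. Observer coprocessor

Background: there are two tables, user and people, each with a column family named person. Before a "person:name" cell is written to user, the coprocessor first writes a row to people whose row key is the name value and whose "person:lastname" column holds the user row key. In effect this is a secondary index on the name.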
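Stripped of HBase types, the index rule is a small function from a user put to an optional people row. The sketch below is a plain-Java model with hypothetical names and no HBase classes: a put is represented as its row key plus a map of "family:qualifier" to value. It is convenient for unit-testing the rule before wiring the same logic into prePut, and it also covers puts that carry no person:name, which the observer has to skip.

```java
import java.util.AbstractMap;
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

/**
 * Plain-Java model of the index rule (hypothetical names, no HBase dependency).
 * Result: people row key = value of person:name, person:lastname = user row key.
 */
public class IndexRule {

    /** Returns (people row key, person:lastname value), or empty if the put has no person:name. */
    public static Optional<Map.Entry<String, String>> indexFor(String userRowKey,
                                                                Map<String, String> columns) {
        String name = columns.get("person:name");
        if (name == null) {
            return Optional.empty();   // nothing to index; the user put goes through unchanged
        }
        return Optional.<Map.Entry<String, String>>of(
                new AbstractMap.SimpleImmutableEntry<String, String>(name, userRowKey));
    }

    public static void main(String[] args) {
        Map<String, String> put = new HashMap<>();
        put.put("person:name", "asn");   // models: put 'user','0001','person:name','asn'
        indexFor("0001", put).ifPresent(e ->
                System.out.println("people row " + e.getKey() + ", person:lastname=" + e.getValue()));
    }
}
```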

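Step 1. Edit hbase-site.xml so that the RegionServer keeps serving requests even if a coprocessor fails to load or throws an exception at runtime (hbase.coprocessor.abortonerror = false; the HBase documentation describes this as mainly useful for debugging), and so that the master's table-descriptor sanity check does not reject the coprocessor (hbase.table.sanity.checks = false):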

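    <property>
      <name>hbase.table.sanity.checks</name>
      <value>false</value>
    </property>
    <property>
      <name>hbase.coprocessor.abortonerror</name>
      <value>false</value>
    </property>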

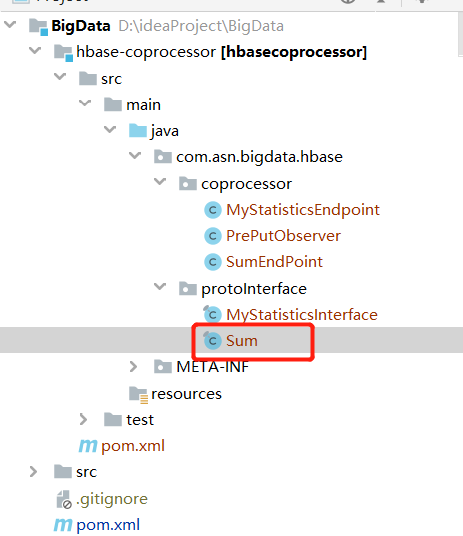

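2. In the current project, create a new module named hbase-coprocessor for the coprocessor code. The directory structure is as follows:

3. Implement the coprocessor class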

    package com.asn.bigdata.hbase.coprocessor;

    import org.apache.hadoop.hbase.Cell;
    import org.apache.hadoop.hbase.CoprocessorEnvironment;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Durability;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Table;
    import org.apache.hadoop.hbase.coprocessor.ObserverContext;
    import org.apache.hadoop.hbase.coprocessor.RegionCoprocessor;
    import org.apache.hadoop.hbase.coprocessor.RegionCoprocessorEnvironment;
    import org.apache.hadoop.hbase.coprocessor.RegionObserver;
    import org.apache.hadoop.hbase.util.Bytes;
    import org.apache.hadoop.hbase.wal.WALEdit;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    import java.io.IOException;
    import java.util.List;
    import java.util.Optional;

    public class PrePutObserver implements RegionObserver, RegionCoprocessor {

        private static final Logger logger = LoggerFactory.getLogger(PrePutObserver.class);
        private static final TableName INDEX_TABLE = TableName.valueOf("people");
        private static final byte[] FAMILY = Bytes.toBytes("person");
        private static final byte[] NAME = Bytes.toBytes("name");
        private static final byte[] LASTNAME = Bytes.toBytes("lastname");

        private RegionCoprocessorEnvironment env = null;

        @Override
        public void start(CoprocessorEnvironment e) throws IOException {
            logger.info("PrePutObserver start");
            this.env = (RegionCoprocessorEnvironment) e;
        }

        @Override
        public void stop(CoprocessorEnvironment e) throws IOException {
            logger.info("PrePutObserver stop");
        }

        /**
         * Required: RegionCoprocessor.getRegionObserver() returns Optional.empty() by default,
         * in which case HBase 2.x never calls the prePut hook below.
         */
        @Override
        public Optional<RegionObserver> getRegionObserver() {
            return Optional.of(this);
        }

        @Override
        public void prePut(ObserverContext<RegionCoprocessorEnvironment> c, Put put, WALEdit edit,
                           Durability durability) throws IOException {
            logger.info("PrePutObserver prePut");
            List<Cell> cells = put.get(FAMILY, NAME);
            if (cells.isEmpty()) {
                return; // this Put does not write person:name, so there is nothing to index
            }
            byte[] userKey = put.getRow();
            Cell cell = cells.get(0);
            // Row key of the index row = value of person:name
            Put indexPut = new Put(cell.getValueArray(), cell.getValueOffset(), cell.getValueLength());
            indexPut.addColumn(FAMILY, LASTNAME, userKey);
            // Use the RegionServer's shared connection instead of a static one with a hard-coded
            // ZooKeeper quorum. Close only the Table; the shared connection must not be closed.
            try (Table table = env.getConnection().getTable(INDEX_TABLE)) {
                table.put(indexPut);
            } catch (IOException e) {
                logger.error("failed to write index row to people", e);
                throw e;
            }
        }
    }

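4. Package the code and upload the jar to HDFS

Packaging is the crucial part: if the jar is built incorrectly, errors occur when the coprocessor is attached or when a put is executed. The correct packaging steps are: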

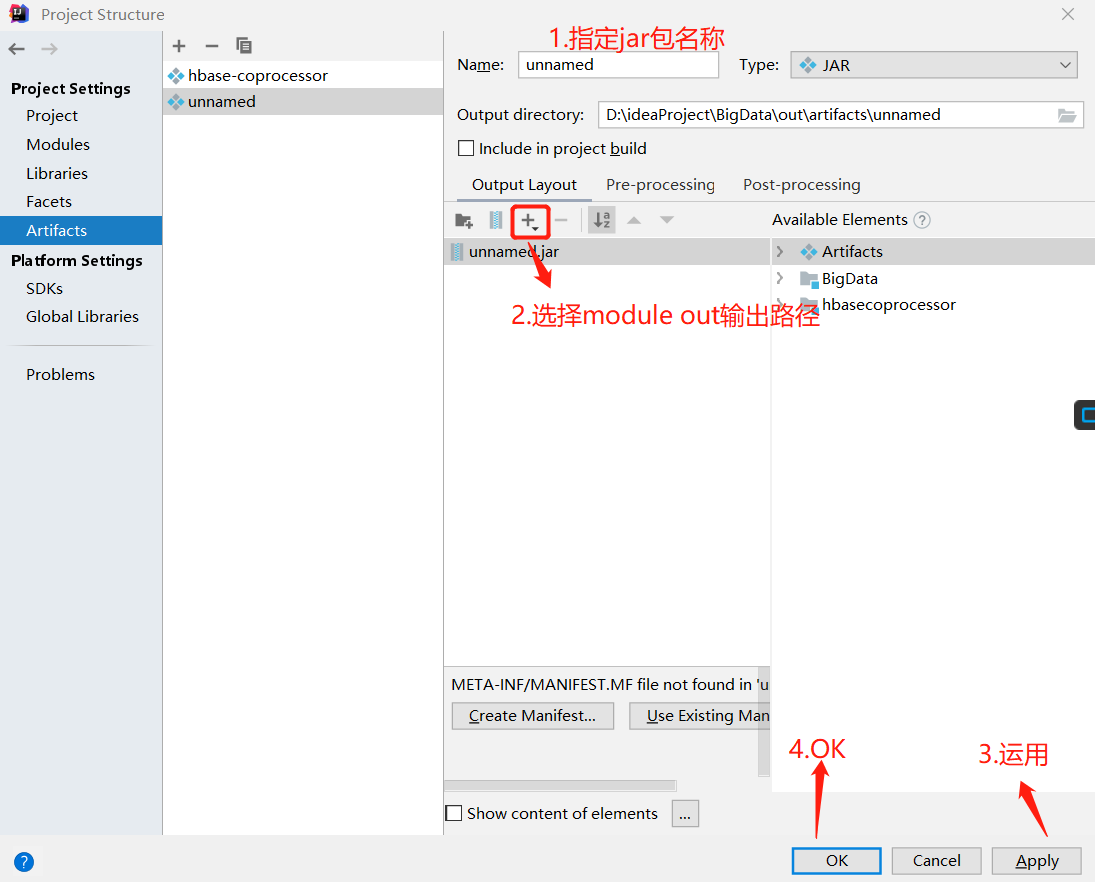

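1) Click File -> Project Structure -> Artifacts -> + -> JAR -> Empty to open the dialog shown in the screenshot above, then follow the numbered steps in it.

2) Click Build -> Build Artifacts -> select the jar you just named -> Build.

3) Upload the generated jar to HDFS, for example with hdfs dfs -put hbase-coprocessor.jar /test/hbase/coprocessor/ (the directory used in step 6).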
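Before uploading, it can help to confirm what actually ended up in the jar (jar tf hbase-coprocessor.jar lists it too). The post does not say which packaging mistake caused the errors; a frequent one, assumed here, is a jar that either lacks the coprocessor class or bundles its own copies of HBase/Hadoop classes that the RegionServer already has. The hypothetical JarCheck utility below uses only java.util.jar to check both.

```java
import java.io.File;
import java.io.IOException;
import java.util.Enumeration;
import java.util.jar.JarEntry;
import java.util.jar.JarFile;

/**
 * Hypothetical pre-upload check for a coprocessor jar: is the class inside, and does the
 * jar bundle classes under a package prefix such as org/apache/hadoop/?
 */
public class JarCheck {

    /** True if the jar has a .class entry for the given fully qualified class name. */
    public static boolean containsClass(File jar, String className) throws IOException {
        String entryName = className.replace('.', '/') + ".class";
        try (JarFile jf = new JarFile(jar)) {
            return jf.getJarEntry(entryName) != null;
        }
    }

    /** Counts .class entries whose path starts with the given prefix. */
    public static int countClassesUnder(File jar, String prefix) throws IOException {
        int n = 0;
        try (JarFile jf = new JarFile(jar)) {
            Enumeration<JarEntry> entries = jf.entries();
            while (entries.hasMoreElements()) {
                String name = entries.nextElement().getName();
                if (name.startsWith(prefix) && name.endsWith(".class")) {
                    n++;
                }
            }
        }
        return n;
    }

    public static void main(String[] args) throws IOException {
        // usage: java JarCheck hbase-coprocessor.jar com.asn.bigdata.hbase.coprocessor.PrePutObserver
        File jar = new File(args[0]);
        System.out.println(args[1] + (containsClass(jar, args[1]) ? " found" : " MISSING"));
        System.out.println("bundled org/apache/hadoop classes: "
                + countClassesUnder(jar, "org/apache/hadoop/"));
    }
}
```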

5. Open the hbase shell and disable the user table first (disable 'user').

6. Attach the coprocessor to the user table:

hbase(main):011:0> alter 'user',METHOD => 'table_att','coprocessor' => 'hdfs://flink1:9000/test/hbase/coprocessor/hbase-coprocessor.jar|com.asn.bigdata.hbase.coprocessor.PrePutObserver|1001|'

If the settings above are not in hbase-site.xml, this step may fail with the following error:

    ERROR: org.apache.hadoop.hbase.DoNotRetryIOException: hdfs://flink1:9000/test/hbase/coprocessor/d.jar Set hbase.table.sanity.checks to false at conf or table descriptor if you want to bypass sanity checks
        at org.apache.hadoop.hbase.master.HMaster.warnOrThrowExceptionForFailure(HMaster.java:2228)
        at org.apache.hadoop.hbase.master.HMaster.sanityCheckTableDescriptor(HMaster.java:2075)
        at org.apache.hadoop.hbase.master.HMaster.access$200(HMaster.java:246)
        at org.apache.hadoop.hbase.master.HMaster$12.run(HMaster.java:2562)
        at org.apache.hadoop.hbase.master.procedure.MasterProcedureUtil.submitProcedure(MasterProcedureUtil.java:134)
        at org.apache.hadoop.hbase.master.HMaster.modifyTable(HMaster.java:2558)
        at org.apache.hadoop.hbase.master.HMaster.modifyTable(HMaster.java:2595)
        at org.apache.hadoop.hbase.master.MasterRpcServices.modifyTable(MasterRpcServices.java:1347)
        at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)
        at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:413)
        at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:132)
        at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:324)
        at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:304)

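The error message itself names the fix: add the following to hbase-site.xml (as in step 1):

    <property>
      <name>hbase.table.sanity.checks</name>
      <value>false</value>
    </property>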
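The 'coprocessor' value in the alter command of step 6 has |-separated fields: the jar path, the fully qualified class name, the priority (1001 here; coprocessors with lower values are invoked first), and optional key=value arguments (empty here, hence the trailing |). The hypothetical helper below only splits such a value so you can check the string before pasting it into the shell; it is a sketch, not part of the HBase API.

```java
/**
 * Hypothetical helper: splits a table_att 'coprocessor' value of the form
 * "jarPath|className|priority|arguments" into its fields.
 */
public class CoprocessorSpec {

    public final String jarPath;
    public final String className;
    public final Integer priority;   // null when the field is empty or missing
    public final String arguments;   // "" when the field is empty or missing

    private CoprocessorSpec(String jarPath, String className, Integer priority, String arguments) {
        this.jarPath = jarPath;
        this.className = className;
        this.priority = priority;
        this.arguments = arguments;
    }

    public static CoprocessorSpec parse(String value) {
        // limit -1 keeps trailing empty fields, so "...|1001|" still yields 4 fields
        String[] f = value.split("\\|", -1);
        if (f.length < 2 || f[1].trim().isEmpty()) {
            throw new IllegalArgumentException("expected jarPath|className[|priority[|args]]: " + value);
        }
        String prio = f.length > 2 ? f[2].trim() : "";
        return new CoprocessorSpec(
                f[0].trim(),
                f[1].trim(),
                prio.isEmpty() ? null : Integer.valueOf(prio),
                f.length > 3 ? f[3].trim() : "");
    }

    public static void main(String[] args) {
        CoprocessorSpec s = parse("hdfs://flink1:9000/test/hbase/coprocessor/hbase-coprocessor.jar"
                + "|com.asn.bigdata.hbase.coprocessor.PrePutObserver|1001|");
        System.out.println(s.jarPath + "\n" + s.className + "\n" + s.priority + "\n[" + s.arguments + "]");
    }
}
```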

7. Check the table description (desc 'user'), then enable the table (enable 'user').

8. Test

Insert a row into user:

hbase(main):011:0> put 'user','0001','person:name','asn'

Check the contents of the people table:

    hbase(main):011:0> scan 'people'
    ROW        COLUMN+CELL
     wangsen   column=person:lastname, timestamp=1581819549421, value=0001
    1 row(s)
    Took 0.0806 seconds

Done!

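II. Endpoint coprocessor

Unlike an observer, an endpoint coprocessor is invoked directly by the client. The server side is an implementation of a Protobuf Service, so there is a protobuf-based RPC interface between the two, and both the client and the server implement their logic against that interface.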
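Conceptually, the client sends the same request to every region in the requested key range, each region's endpoint instance computes a result over its own rows only, and the client receives one result per region (the real Table.coprocessorService returns a Map<byte[], R> with one entry per region). The plain-Java toy model below uses no HBase classes, and its region keys and values are invented. It shows why a multi-region table yields several partial sums that the client still has to add up; the test client in step 9 prints one "Sum = ..." line per region instead of a total.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;

/**
 * Toy model of an endpoint call (no HBase involved): the same function runs once per
 * region on that region's rows, and the client merges the per-region partial results.
 */
public class RegionFanOut {

    /** Server side, per region: like getSum, it only sees the rows of its own region. */
    public static long sumOf(List<Long> rowsOfOneRegion) {
        long sum = 0L;
        for (long v : rowsOfOneRegion) {
            sum += v;
        }
        return sum;
    }

    /** Runs perRegion on every region; this model keys results by region start key. */
    public static <R> Map<String, R> callEachRegion(Map<String, List<Long>> regions,
                                                    Function<List<Long>, R> perRegion) {
        Map<String, R> results = new TreeMap<>();
        for (Map.Entry<String, List<Long>> region : regions.entrySet()) {
            results.put(region.getKey(), perRegion.apply(region.getValue()));
        }
        return results;
    }

    /** Client side: add up the partial sums to get the table-wide total. */
    public static long total(Map<String, Long> partials) {
        long total = 0L;
        for (long p : partials.values()) {
            total += p;
        }
        return total;
    }

    public static void main(String[] args) {
        Map<String, List<Long>> regions = new TreeMap<>();
        regions.put("", Arrays.asList(1L, 2L, 3L));   // first region: empty start key
        regions.put("m", Arrays.asList(10L, 20L));    // second region starts at "m"
        Map<String, Long> partials = callEachRegion(regions, RegionFanOut::sumOf);
        System.out.println(partials + " total=" + total(partials));
    }
}
```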

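Background

Compute a statistic over the user table from the previous post. (Strictly speaking, the code below sums the values of one column rather than counting rows: the test client in step 9 sums salaryDet:gross of a table named users.)

Steps

1. Write the sum.proto file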

    option java_package = "com.asn.bigdata.hbase.protoInterface";
    option java_outer_classname = "Sum";
    option java_generic_services = true;
    option java_generate_equals_and_hash = true;
    option optimize_for = SPEED;

    message SumRequest {
        required string family = 1;
        required string column = 2;
    }

    message SumResponse {
        required int64 sum = 1 [default = 0];
    }

    service SumService {
        rpc getSum(SumRequest)
            returns (SumResponse);
    }

2. Compile

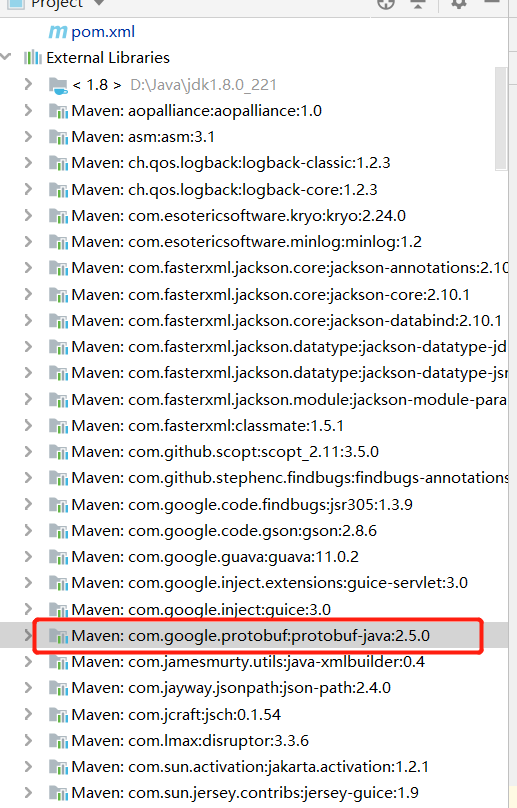

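Note: the protobuf-java version the project depends on must match the protoc compiler version; otherwise the generated file will fail to compile in the project.

My project uses 2.5.0, so I needed the 2.5.0 compiler. It is hard to get from the official site now, so I have shared it here:

Link: https://pan.baidu.com/s/1LBB4a4rp3iqkyg154O3acw

Extraction code: uoqm

After downloading, unzip protoc-2.5.0-win32, add its directory to the PATH environment variable, and run protoc --version in cmd to check the installation (it should report libprotoc 2.5.0).

Once it is installed, copy sum.proto (the file above) into the directory containing protoc.exe, open cmd in that directory, and run the following command to generate the Java source: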

E:\MySoftware\protoc-2.5.0-win32>protoc --java_out=./ sum.proto

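3. Copy the generated Sum.java into the matching package of the project. protoc writes it to com/asn/bigdata/hbase/protoInterface/Sum.java under the output directory, following java_package.

4. Write the implementation class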

    package com.asn.bigdata.hbase.coprocessor;

    import com.asn.bigdata.hbase.protoInterface.Sum;
    import com.google.protobuf.RpcCallback;
    import com.google.protobuf.RpcController;
    import com.google.protobuf.Service;
    import org.apache.hadoop.hbase.Cell;
    import org.apache.hadoop.hbase.CellUtil;
    import org.apache.hadoop.hbase.Coprocessor;
    import org.apache.hadoop.hbase.CoprocessorEnvironment;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.coprocessor.CoprocessorException;
    import org.apache.hadoop.hbase.coprocessor.CoprocessorService;
    import org.apache.hadoop.hbase.coprocessor.RegionCoprocessorEnvironment;
    import org.apache.hadoop.hbase.ipc.CoprocessorRpcUtils;
    import org.apache.hadoop.hbase.regionserver.InternalScanner;
    import org.apache.hadoop.hbase.util.Bytes;

    import java.io.IOException;
    import java.util.ArrayList;
    import java.util.List;

    public class SumEndPoint extends Sum.SumService implements Coprocessor, CoprocessorService {

        private RegionCoprocessorEnvironment env;

        @Override
        public Service getService() {
            return this;
        }

        @Override
        public void start(CoprocessorEnvironment env) throws IOException {
            if (env instanceof RegionCoprocessorEnvironment) {
                this.env = (RegionCoprocessorEnvironment) env;
            } else {
                throw new CoprocessorException("Must be loaded on a table region!");
            }
        }

        @Override
        public void stop(CoprocessorEnvironment env) throws IOException {
            // do nothing
        }

        @Override
        public void getSum(RpcController controller, Sum.SumRequest request,
                           RpcCallback<Sum.SumResponse> done) {
            // Scan only family:column in this region (addColumn alone is enough; an extra
            // addFamily call would be overridden by it anyway)
            Scan scan = new Scan();
            scan.addColumn(Bytes.toBytes(request.getFamily()), Bytes.toBytes(request.getColumn()));
            Sum.SumResponse response = null;
            InternalScanner scanner = null;
            try {
                scanner = env.getRegion().getScanner(scan);
                List<Cell> results = new ArrayList<>();
                boolean hasMore;
                long sum = 0L;
                do {
                    hasMore = scanner.next(results);
                    for (Cell cell : results) {
                        // assumes each value was written as an 8-byte long, e.g. Bytes.toBytes(long)
                        sum = sum + Bytes.toLong(CellUtil.cloneValue(cell));
                    }
                    results.clear();
                } while (hasMore);
                response = Sum.SumResponse.newBuilder().setSum(sum).build();
            } catch (IOException ioe) {
                // report the failure to the client through the controller
                CoprocessorRpcUtils.setControllerException(controller, ioe);
            } finally {
                if (scanner != null) {
                    try {
                        scanner.close();
                    } catch (IOException ignored) {
                    }
                }
            }
            done.run(response);
        }
    }
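getSum decodes every cell with Bytes.toLong, so it only works if the gross values were written as 8-byte longs (for example with Bytes.toBytes(long) from Java). A value typed into the hbase shell, such as '5000', is stored as the bytes of the string (4 bytes here), and Bytes.toLong cannot turn that into the number 5000. The sketch below reproduces the encoding with java.nio.ByteBuffer, which is big-endian like Bytes; its decoder is deliberately strict and rejects anything that is not exactly 8 bytes. Class and method names are hypothetical.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.List;

/** Plain-Java check of the cell value format that getSum expects (no HBase dependency). */
public class LongCellValues {

    /** Same byte layout as Bytes.toBytes(long): 8 bytes, big-endian. */
    public static byte[] encode(long v) {
        return ByteBuffer.allocate(Long.BYTES).putLong(v).array();
    }

    /** Strict decoder: anything that is not exactly 8 bytes is rejected. */
    public static long decode(byte[] value) {
        if (value.length != Long.BYTES) {
            throw new IllegalArgumentException("not an 8-byte long: " + value.length + " bytes");
        }
        return ByteBuffer.wrap(value).getLong();
    }

    /** The accumulation loop of getSum, applied to raw cell values. */
    public static long sum(List<byte[]> values) {
        long sum = 0L;
        for (byte[] v : values) {
            sum += decode(v);
        }
        return sum;
    }

    public static void main(String[] args) {
        System.out.println(sum(Arrays.asList(encode(100), encode(250))));
        byte[] fromShell = "5000".getBytes(StandardCharsets.UTF_8);   // a string value as the shell stores it
        try {
            decode(fromShell);
        } catch (IllegalArgumentException e) {
            System.out.println("shell string rejected: " + e.getMessage());
        }
    }
}
```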

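5. Package the SumEndPoint implementation and upload it to HDFS

The procedure is the same as for the observer coprocessor above.

6. Disable user (disable 'user')

7. Attach the coprocessor: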

    hbase(main):004:0> alter 'user',METHOD => 'table_att','coprocessor' => 'hdfs://flink1:9000/test/hbase/coprocessor/SumEndPoint.jar|com.asn.bigdata.hbase.coprocessor.SumEndPoint|1001|'
    Updating all regions with the new schema...
    All regions updated.
    Done.
    Took 1.9677 seconds
    hbase(main):005:0> desc 'user'
    Table user is DISABLED
    user, {TABLE_ATTRIBUTES => {coprocessor$1 => 'hdfs://flink1:9000/test/hbase/coprocessor/hbase-coprocessor.jar|com.asn.bigdata.hbase.coprocessor.PostObserver|1001|', coprocessor$2 => 'hdfs://flink1:9000/test/hbase/coprocessor/SumEndPoint.jar|com.asn.bigdata.hbase.coprocessor.SumEndPoint|1001|'}
    COLUMN FAMILIES DESCRIPTION
    {NAME => 'person', VERSIONS => '1', EVICT_BLOCKS_ON_CLOSE => 'false', NEW_VERSION_BEHAVIOR => 'false', KEEP_DELETED_CELLS => 'FALSE', CACHE_DATA_ON_WRITE => 'false', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', MIN_VERSIONS => '0', REPLICATION_SCOPE => '0', BLOOMFILTER => 'ROW', CACHE_INDEX_ON_WRITE => 'false', IN_MEMORY => 'false', CACHE_BLOOMS_ON_WRITE => 'false', PREFETCH_BLOCKS_ON_OPEN => 'false', COMPRESSION => 'NONE', BLOCKCACHE => 'true', BLOCKSIZE => '65536'}
    1 row(s)
    Took 0.0524 seconds

8. Enable user (enable 'user')

9. Write a client to test it

    import com.asn.bigdata.hbase.protoInterface.Sum;
    import com.google.protobuf.ServiceException;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;
    import org.apache.hadoop.hbase.client.Table;
    import org.apache.hadoop.hbase.client.coprocessor.Batch;
    import org.apache.hadoop.hbase.ipc.CoprocessorRpcUtils;

    import java.io.IOException;
    import java.util.Map;

    public class TestEndPoint {

        public static void main(String[] args) throws IOException {
            Configuration conf = HBaseConfiguration.create();
            conf.set("hbase.zookeeper.quorum", "flink1,flink2,flink3");
            Connection connection = ConnectionFactory.createConnection(conf);
            TableName tableName = TableName.valueOf("users");
            Table table = connection.getTable(tableName);
            final Sum.SumRequest request =
                    Sum.SumRequest.newBuilder().setFamily("salaryDet").setColumn("gross").build();
            try {
                // The call runs once per region; the map holds one partial sum per region
                Map<byte[], Long> results = table.coprocessorService(
                        Sum.SumService.class,
                        null, /* start key */
                        null, /* end key */
                        new Batch.Call<Sum.SumService, Long>() {
                            @Override
                            public Long call(Sum.SumService aggregate) throws IOException {
                                CoprocessorRpcUtils.BlockingRpcCallback<Sum.SumResponse> rpcCallback =
                                        new CoprocessorRpcUtils.BlockingRpcCallback<>();
                                aggregate.getSum(null, request, rpcCallback);
                                // response is null if the server-side call failed; with a null
                                // controller the server's exception is not passed back here
                                Sum.SumResponse response = rpcCallback.get();
                                return response.hasSum() ? response.getSum() : 0L;
                            }
                        }
                );
                for (Long sum : results.values()) {
                    System.out.println("Sum = " + sum);
                }
            } catch (ServiceException e) {
                e.printStackTrace();
            } catch (Throwable e) {
                e.printStackTrace();
            } finally {
                table.close();
                connection.close();
            }
        }
    }

The call failed with the following error:

        at org.apache.hadoop.hbase.ipc.RemoteWithExtrasException.instantiateException(RemoteWithExtrasException.java:99)
        at org.apache.hadoop.hbase.ipc.RemoteWithExtrasException.unwrapRemoteException(RemoteWithExtrasException.java:89)
        at org.apache.hadoop.hbase.protobuf.ProtobufUtil.makeIOExceptionOfException(ProtobufUtil.java:282)
        at org.apache.hadoop.hbase.protobuf.ProtobufUtil.handleRemoteException(ProtobufUtil.java:269)
        at org.apache.hadoop.hbase.client.RegionServerCallable.call(RegionServerCallable.java:129)
        at org.apache.hadoop.hbase.client.RpcRetryingCallerImpl.callWithRetries(RpcRetryingCallerImpl.java:107)
        ... 11 common frames omitted
    Caused by: org.apache.hadoop.hbase.ipc.RemoteWithExtrasException: java.io.IOException
        at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:472)
        at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:132)
        at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:324)
        at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:304)
    Caused by: java.lang.NullPointerException
        at com.asn.bigdata.hbase.coprocessor.SumEndPoint.getSum(SumEndPoint.java:56)
        at com.asn.bigdata.hbase.protoInterface.Sum$SumService.callMethod(Sum.java:1267)
        at org.apache.hadoop.hbase.regionserver.HRegion.execService(HRegion.java:8230)
        at org.apache.hadoop.hbase.regionserver.RSRpcServices.execServiceOnRegion(RSRpcServices.java:2423)
        at org.apache.hadoop.hbase.regionserver.RSRpcServices.execService(RSRpcServices.java:2405)
        at org.apache.hadoop.hbase.shaded.protobuf.generated.ClientProtos$ClientService$2.callBlockingMethod(ClientProtos.java:42010)
        at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:413)
        ... 3 more
        at org.apache.hadoop.hbase.ipc.AbstractRpcClient.onCallFinished(AbstractRpcClient.java:387)
        at org.apache.hadoop.hbase.ipc.AbstractRpcClient.access$100(AbstractRpcClient.java:95)
        at org.apache.hadoop.hbase.ipc.AbstractRpcClient$3.run(AbstractRpcClient.java:410)
        at org.apache.hadoop.hbase.ipc.AbstractRpcClient$3.run(AbstractRpcClient.java:406)
        at org.apache.hadoop.hbase.ipc.Call.callComplete(Call.java:103)
        at org.apache.hadoop.hbase.ipc.Call.setException(Call.java:118)
        at org.apache.hadoop.hbase.ipc.NettyRpcDuplexHandler.readResponse(NettyRpcDuplexHandler.java:162)
        at org.apache.hadoop.hbase.ipc.NettyRpcDuplexHandler.channelRead(NettyRpcDuplexHandler.java:192)
        at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)
        at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)
        at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)
        at org.apache.hbase.thirdparty.io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:310)
        at org.apache.hbase.thirdparty.io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:284)
        at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)
        at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)
        at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)
        at org.apache.hbase.thirdparty.io.netty.handler.timeout.IdleStateHandler.channelRead(IdleStateHandler.java:286)
        at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)
        at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)
        at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)
        at org.apache.hbase.thirdparty.io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1359)
        at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)
        at org.apache.hbase.thirdparty.io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)
        at org.apache.hbase.thirdparty.io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:935)
        at org.apache.hbase.thirdparty.io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:138)
        at org.apache.hbase.thirdparty.io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:645)
        at org.apache.hbase.thirdparty.io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:580)
        at org.apache.hbase.thirdparty.io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:497)
        at org.apache.hbase.thirdparty.io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:459)
        at org.apache.hbase.thirdparty.io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)
        at org.apache.hbase.thirdparty.io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:138)
        ... 1 common frames omitted
    17:00:45.965 [main] WARN org.apache.hadoop.hbase.client.HTable - Error calling coprocessor service com.asn.bigdata.hbase.protoInterface.Sum$SumService for row
    java.util.concurrent.ExecutionException: java.lang.NullPointerException
        at java.util.concurrent.FutureTask.report(FutureTask.java:122)
        at java.util.concurrent.FutureTask.get(FutureTask.java:192)
        at org.apache.hadoop.hbase.client.HTable.coprocessorService(HTable.java:1009)
        at org.apache.hadoop.hbase.client.HTable.coprocessorService(HTable.java:971)
        at TestEndPoint.main(TestEndPoint.java:30)
    Caused by: java.lang.NullPointerException: null
        at TestEndPoint$1.call(TestEndPoint.java:41)
        at TestEndPoint$1.call(TestEndPoint.java:34)
        at org.apache.hadoop.hbase.client.HTable$12.call(HTable.java:997)
        at java.util.concurrent.FutureTask.run$$$capture(FutureTask.java:266)
        at java.util.concurrent.FutureTask.run(FutureTask.java)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
        at java.lang.Thread.run(Thread.java:748)
    java.lang.NullPointerException
        at TestEndPoint$1.call(TestEndPoint.java:41)
        at TestEndPoint$1.call(TestEndPoint.java:34)
        at org.apache.hadoop.hbase.client.HTable$12.call(HTable.java:997)
        at java.util.concurrent.FutureTask.run$$$capture(FutureTask.java:266)
        at java.util.concurrent.FutureTask.run(FutureTask.java)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
        at java.lang.Thread.run(Thread.java:748)
    Disconnected from the target VM, address: '127.0.0.1:58377', transport: 'socket'

I don't know what is causing this...

The log of the RegionServer hosting the table shows that the coprocessor was loaded successfully:

    2020-02-19 16:32:01,631 INFO [RS_OPEN_REGION-regionserver/flink1:16020-1] regionserver.RegionCoprocessorHost: Loaded coprocessor com.asn.bigdata.hbase.coprocessor.SumEndPoint from HTD of users successfully.
    2020-02-19 16:32:01,642 INFO [StoreOpener-4f388802eee5baa7bca202a3bbd85544-1] hfile.CacheConfig: Created cacheConfig for salaryDet: blockCache=LruBlockCache{blockCount=0, currentSize=71.76 KB, freeSize=94.30 MB, maxSize=94.38 MB, heapSize=71.76 KB, minSize=89.66 MB, minFactor=0.95, multiSize=44.83 MB, multiFactor=0.5, singleSize=22.41 MB, singleFactor=0.25}, cacheDataOnRead=true, cacheDataOnWrite=false, cacheIndexesOnWrite=false, cacheBloomsOnWrite=false, cacheEvictOnClose=false, cacheDataCompressed=false, prefetchOnOpen=false
    2020-02-19 16:32:01,642 INFO [StoreOpener-4f388802eee5baa7bca202a3bbd85544-1] compactions.CompactionConfiguration: size [128 MB, 8.00 EB, 8.00 EB); files [3, 10); ratio 1.200000; off-peak ratio 5.000000; throttle point 2684354560; major period 604800000, major jitter 0.500000, min locality to compact 0.000000; tiered compaction: max_age 9223372036854775807, incoming window min 6, compaction policy for tiered window org.apache.hadoop.hbase.regionserver.compactions.ExploringCompactionPolicy, single output for minor true, compaction window factory org.apache.hadoop.hbase.regionserver.compactions.ExponentialCompactionWindowFactory
    2020-02-19 16:32:01,653 INFO [StoreOpener-4f388802eee5baa7bca202a3bbd85544-1] regionserver.HStore: Store=salaryDet, memstore type=DefaultMemStore, storagePolicy=HOT, verifyBulkLoads=false, parallelPutCountPrintThreshold=50, encoding=NONE, compression=NONE
    2020-02-19 16:32:01,662 INFO [RS_OPEN_REGION-regionserver/flink1:16020-1] regionserver.HRegion: Opened 4f388802eee5baa7bca202a3bbd85544; next sequenceid=5
    2020-02-19 16:32:01,677 INFO [PostOpenDeployTasks:4f388802eee5baa7bca202a3bbd85544] regionserver.HRegionServer: Post open deploy tasks for users,,1582101057487.4f388802eee5baa7bca202a3bbd85544.
    2020-02-19 16:32:03,338 INFO [LruBlockCacheStatsExecutor] hfile.LruBlockCache: totalSize=71.76 KB, freeSize=94.30 MB, max=94.38 MB, blockCount=0, accesses=0, hits=0, hitRatio=0, cachingAccesses=0, cachingHits=0, cachingHitsRatio=0,evictions=59, evicted=0, evictedPerRun=0.0
    2020-02-19 16:32:08,341 INFO [HBase-Metrics2-1] impl.GlobalMetricRegistriesAdapter: Registering RegionServer,sub=Coprocessor.Region.CP_org.apache.hadoop.hbase.coprocessor.CoprocessorServiceBackwardCompatiblity$RegionCoprocessorService Metrics about HBase RegionObservers
    2020-02-19 16:32:42,106 INFO [RpcServer.priority.FPBQ.Fifo.handler=15,queue=1,port=16020] regionserver.RSRpcServices: Close 4f388802eee5baa7bca202a3bbd85544, moving to flink2,16020,1582100508543
    2020-02-19 16:32:42,150 INFO [RS_CLOSE_REGION-regionserver/flink1:16020-0] regionserver.HRegion: Closed users,,1582101057487.4f388802eee5baa7bca202a3bbd85544.
    2020-02-19 16:32:42,150 INFO [RS_CLOSE_REGION-regionserver/flink1:16020-0] regionserver.HRegionServer: Adding 4f388802eee5baa7bca202a3bbd85544 move to flink2,16020,1582100508543 record at close sequenceid=5
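A likely explanation, offered as a hedged reading of the log rather than a confirmed diagnosis: the RegionServer log registers metrics for CoprocessorServiceBackwardCompatiblity$RegionCoprocessorService. This suggests HBase 2.x loaded SumEndPoint through its backward-compatibility adapter for classes that implement only the old Coprocessor + CoprocessorService pair. As far as I can tell from the 2.x sources, that adapter exposes the service but does not forward start() to the wrapped object, so env in SumEndPoint stays null and env.getRegion() throws the NullPointerException at SumEndPoint.getSum. The usual remedy is to have SumEndPoint implement RegionCoprocessor directly and return the service from getServices() (for example Collections.singleton(this)). The client-side NullPointerException at TestEndPoint.java:41 would then be a follow-on error: with null passed as the controller, rpcCallback.get() returns null and response.hasSum() fails. Passing an org.apache.hadoop.hbase.ipc.ServerRpcController and checking failedOnException() / getFailedOn() after the call should surface the real server error. The sketch below is only a plain-Java toy model of the start() mechanism; every class in it is a hypothetical stand-in, not HBase code.

```java
/**
 * Toy model of the suspected failure (hypothetical classes, no HBase dependency).
 * The host only calls start() on the object it registered; an old-style endpoint is
 * registered behind an adapter that does not forward start(), so its env stays null.
 */
public class StartNotForwardedDemo {

    /** Stand-in for RegionCoprocessor: the lifecycle hook the host invokes. */
    public interface HostedCoprocessor {
        default void start(String env) {}
    }

    /** Stand-in for the old-style SumEndPoint: getSum() depends on env set in start(). */
    public static class Endpoint {
        public String env;                      // null until start() runs on THIS object

        public void start(String env) {
            this.env = env;
        }

        public int getSum() {
            return env.length();                // NPE when env is null, like env.getRegion()
        }
    }

    /** Stand-in for the backward-compatibility adapter: keeps the service, ignores start(). */
    public static class Adapter implements HostedCoprocessor {
        public final Endpoint service;

        public Adapter(Endpoint service) {
            this.service = service;
        }
    }

    /** Stand-in for the fix: the endpoint itself is the hosted coprocessor. */
    public static class DirectEndpoint extends Endpoint implements HostedCoprocessor {
    }

    /** What the host does when a region opens: wrap if necessary, then call start(). */
    public static HostedCoprocessor load(Endpoint endpoint, String env) {
        HostedCoprocessor hosted = (endpoint instanceof HostedCoprocessor)
                ? (HostedCoprocessor) endpoint
                : new Adapter(endpoint);
        hosted.start(env);
        return hosted;
    }

    public static void main(String[] args) {
        Endpoint oldStyle = new Endpoint();
        load(oldStyle, "region-env");
        System.out.println("old style env = " + oldStyle.env);
        DirectEndpoint fixed = new DirectEndpoint();
        load(fixed, "region-env");
        System.out.println("direct env = " + fixed.env);
    }
}
```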

Author: ASN_forever