PyTorch Hands-On Series: Linear Regression


1. Computation workflow

Author: 程旭员

1) Design the model: define the input size, the output size, and the forward pass through the layers

2) Construct the loss function and the optimizer

3) Run the training loop:

- Forward pass: compute the prediction and the loss

- Backward pass: compute the gradients

- Update the weights
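The sketch below writes each of the three steps by hand, without `nn.Module` or `torch.optim`, on the same toy data (y = 2x). The names `forward`, `w` and `b`, the learning rate of 0.05 and the 1000 iterations were chosen for this illustration only. They are not part of the original code.

```python
import torch

# Same toy data as in section 2.2: the targets follow y = 2x
X = torch.tensor([[1.0], [2.0], [3.0], [4.0]])
Y = torch.tensor([[2.0], [4.0], [6.0], [8.0]])

# 1) Model: one weight and one bias, tracked by autograd
w = torch.zeros(1, requires_grad=True)
b = torch.zeros(1, requires_grad=True)

def forward(x):
    # Broadcasting (4, 1) * (1,) gives a (4, 1) prediction
    return x * w + b

# Illustrative hyperparameters for this sketch (assumed, not from the article)
learning_rate = 0.05
n_iters = 1000

for epoch in range(n_iters):
    # Forward: prediction and mean squared error (the loss from step 2)
    y_pred = forward(X)
    loss = ((y_pred - Y) ** 2).mean()

    # Backward: autograd fills w.grad and b.grad
    loss.backward()

    # Update: plain gradient descent, done outside the autograd graph
    with torch.no_grad():
        w -= learning_rate * w.grad
        b -= learning_rate * b.grad

    # backward() accumulates gradients, so reset them before the next iteration
    w.grad.zero_()
    b.grad.zero_()

print(f"w = {w.item():.3f}, b = {b.item():.3f}")
```

The `nn.Module` version in section 2 replaces the hand-written parameters, loss and update with `nn.Linear`, `nn.MSELoss` and `torch.optim.SGD`.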

2. Building a linear regression model in PyTorch

2.1. Import the required modules

```python
import torch
import torch.nn as nn
```

2.2. Construct the training data

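```python
# Training inputs and targets, shaped (n_samples, n_features)
X = torch.tensor([[1], [2], [3], [4]], dtype=torch.float32)
Y = torch.tensor([[2], [4], [6], [8]], dtype=torch.float32)

n_samples, n_features = X.shape  # 4, 1 (four samples, each with one feature)
```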
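The four training points lie exactly on the line y = 2x. The weight the model should learn is therefore w = 2, with bias b = 0. As a sanity check, the least-squares solution can be computed in closed form with `torch.linalg.lstsq`. This check is an addition and not part of the original workflow. A column of ones is appended to `X` so that the bias is solved for as well:

```python
import torch

X = torch.tensor([[1.0], [2.0], [3.0], [4.0]])
Y = torch.tensor([[2.0], [4.0], [6.0], [8.0]])

# Append a column of ones so the bias b is solved together with the weight w
A = torch.cat([X, torch.ones_like(X)], dim=1)  # shape (4, 2)

# Least-squares solution of A @ [w, b]^T ≈ Y; .solution has shape (2, 1)
solution = torch.linalg.lstsq(A, Y).solution
w, b = solution[0].item(), solution[1].item()
print(f"w = {w:.3f}, b = {b:.3f}")
```

Gradient descent in section 2.5 should approach these values as training proceeds.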

2.3. Test data and the number of input and output neurons

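```python
X_test = torch.tensor([5], dtype=torch.float32)  # a single unseen input, x = 5

input_size = n_features  # one input neuron per feature
output_size = Y.shape[1]  # one output neuron per target value (also 1 here)
```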
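Note that `X_test` has shape `(1,)` rather than `(1, 1)`. `nn.Linear` accepts inputs of shape `(*, in_features)`, including an unbatched 1-D vector, so both forms work. The check below compares the two, using a standalone `nn.Linear(1, 1)` with an arbitrary seed chosen for illustration:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # arbitrary seed, only for reproducibility of this check
lin = nn.Linear(1, 1)  # same shape as the model: 1 input feature, 1 output

x_unbatched = torch.tensor([5.0])  # shape (1,), like X_test
x_batched = torch.tensor([[5.0]])  # shape (1, 1): a batch holding one sample

with torch.no_grad():
    out_unbatched = lin(x_unbatched)  # shape (1,)
    out_batched = lin(x_batched)      # shape (1, 1)

print(out_unbatched.shape, out_batched.shape)
print(torch.allclose(out_unbatched, out_batched.squeeze(0)))
```

The batched form `torch.tensor([[5.0]])` keeps the test input consistent with the `(n_samples, n_features)` layout of `X`.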

2.4. Build and instantiate the model

```python
class LinearRegression(nn.Module):
    def __init__(self, input_dim, output_dim):
        super(LinearRegression, self).__init__()
        # A single fully connected layer: y = x W^T + b
        self.lin = nn.Linear(input_dim, output_dim)

    def forward(self, x):
        return self.lin(x)


model = LinearRegression(input_size, output_size)
```

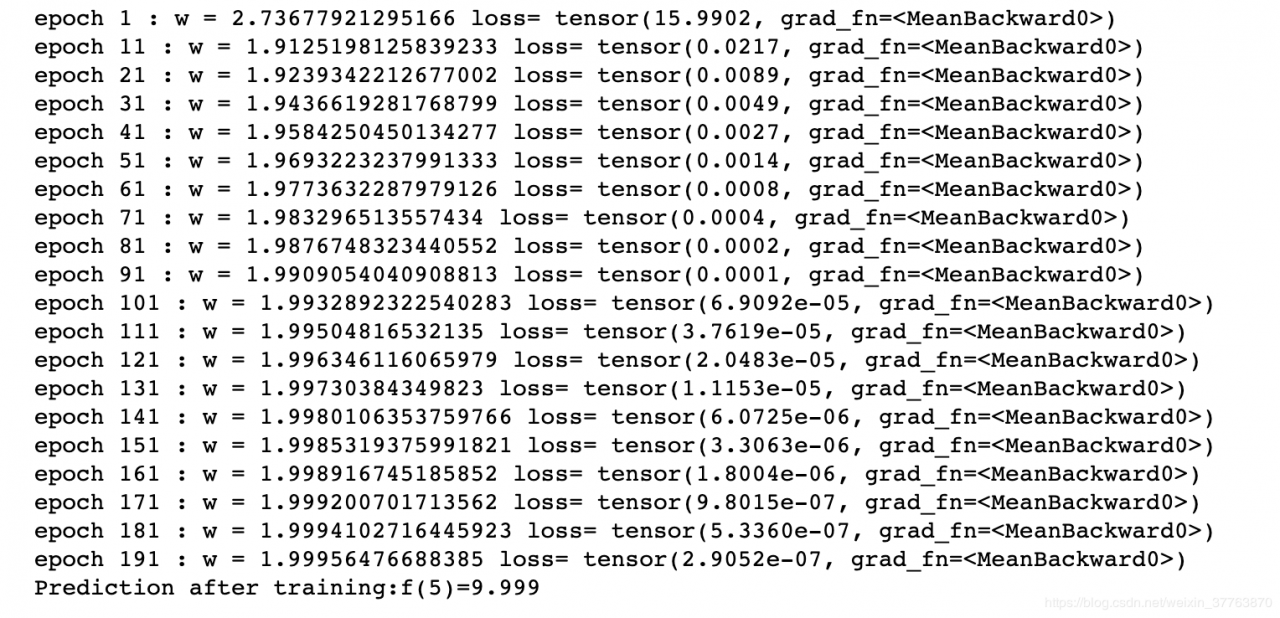
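The wrapper class adds no computation of its own. `nn.Linear` stores a `weight` of shape `(output_dim, input_dim)` and a `bias` of shape `(output_dim,)`, and computes `x @ weight.T + bias`. The sketch below checks this against a manual matrix product. It redefines the class so that it runs on its own and fixes a random seed for illustration:

```python
import torch
import torch.nn as nn

class LinearRegression(nn.Module):
    def __init__(self, input_dim, output_dim):
        super().__init__()
        self.lin = nn.Linear(input_dim, output_dim)

    def forward(self, x):
        return self.lin(x)

torch.manual_seed(0)  # arbitrary seed for this illustration
model = LinearRegression(1, 1)
X = torch.tensor([[1.0], [2.0], [3.0], [4.0]])

W = model.lin.weight  # shape (output_dim, input_dim) = (1, 1)
b = model.lin.bias    # shape (output_dim,) = (1,)

with torch.no_grad():
    manual = X @ W.T + b   # the affine map written out by hand
    via_module = model(X)  # the same map through nn.Linear

print(torch.allclose(manual, via_module))
```

For a model this small, `nn.Linear(input_size, output_size)` could be used directly. Subclassing `nn.Module` pays off once the forward pass grows beyond one layer.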

2.5. Training
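For reference, these are the quantities the training loop computes. The formulas assume the settings used in the code: `nn.MSELoss` with its default `reduction='mean'`, and `torch.optim.SGD` without momentum or weight decay. With $n$ samples, model $\hat y_i = w x_i + b$ and learning rate $\eta$ (`learning_rate`):

$$L(w, b) = \frac{1}{n}\sum_{i=1}^{n} (\hat y_i - y_i)^2$$

$$\frac{\partial L}{\partial w} = \frac{2}{n}\sum_{i=1}^{n} (\hat y_i - y_i)\, x_i, \qquad \frac{\partial L}{\partial b} = \frac{2}{n}\sum_{i=1}^{n} (\hat y_i - y_i)$$

$$w \leftarrow w - \eta\, \frac{\partial L}{\partial w}, \qquad b \leftarrow b - \eta\, \frac{\partial L}{\partial b}$$

`l.backward()` computes the two partial derivatives, and `optimizer.step()` applies the update.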

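```python
print(f'Prediction before training: f(5) = {model(X_test).item():.3f}')

learning_rate = 0.1
n_iters = 200

loss = nn.MSELoss()  # loss function: mean squared error
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)  # optimizer: stochastic gradient descent

for epoch in range(n_iters):
    # Forward pass: prediction and loss (MSELoss takes input first, then target)
    y_predicted = model(X)
    l = loss(y_predicted, Y)

    # Backward pass: compute the gradients
    l.backward()

    # Update the weights, then clear the gradients for the next iteration
    optimizer.step()
    optimizer.zero_grad()

    if epoch % 10 == 0:
        [w, b] = model.parameters()
        print('epoch', epoch + 1, ': w =', w.item(), 'loss =', l.item())

print(f'Prediction after training: f(5) = {model(X_test).item():.3f}')
```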
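The check below confirms what `optimizer.step()` does for plain SGD, where momentum and weight decay are off by default. It takes one step with `torch.optim.SGD` and compares the result with the manual update `p - learning_rate * p.grad`. The seed and the fresh `nn.Linear(1, 1)` model are assumptions made for this illustration:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # arbitrary seed for this illustration
X = torch.tensor([[1.0], [2.0], [3.0], [4.0]])
Y = torch.tensor([[2.0], [4.0], [6.0], [8.0]])
learning_rate = 0.1

model = nn.Linear(1, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

# Snapshot the parameters before the step
w_before = model.weight.detach().clone()
b_before = model.bias.detach().clone()

# One forward and backward pass to populate .grad
loss = nn.MSELoss()(model(X), Y)
loss.backward()

# Expected plain-SGD update: p <- p - lr * grad
w_expected = w_before - learning_rate * model.weight.grad
b_expected = b_before - learning_rate * model.bias.grad

optimizer.step()
optimizer.zero_grad()

print(torch.allclose(model.weight.detach(), w_expected))
print(torch.allclose(model.bias.detach(), b_expected))
```

This is also why `optimizer.zero_grad()` belongs in the loop. `backward()` accumulates into `.grad`, so without clearing it each step would use the sum of all previous gradients.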
