[Building Spark 2.4.4 from source] Compiling the Spark 2.4.4 source on Windows

Contents: Environment requirements, Environment setup, Source download, Compiling the source, Notes, Postscript

Environment requirements


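Author: Jack_Roy

Operating system: Windows 10 (Windows 7 and Windows 8 also work)

Java version: JDK 1.8

Scala version: 2.11.0

Maven version: 3.5.4

Git version: any

These versions were chosen to match the ones declared in the pom.xml of the Spark 2.4.4 source:

java.version: 1.8

maven.version: 3.5.4

scala.version: 2.11.12

The Scala installed locally (2.11.0) differs from the pom's 2.11.12 only in its patch version; the Maven build resolves the Scala compiler it needs from the pom, so this did not cause problems for me.

I have built Spark successfully several times with this setup.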
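To check these values against your own copy of the source, a small helper like the one below can pull the version properties out of the pom. It is only a sketch: the function name pom_versions is made up for illustration, and because it matches by property name, any profile that redefines one of these properties will show up as an extra line.

```shell
# Print the java/maven/scala version properties declared in a pom.xml.
# Usage: pom_versions [path-to-pom]   (defaults to ./pom.xml)
# pom_versions is a hypothetical helper name, not part of Spark or Maven.
pom_versions() {
  grep -E '<(java|maven|scala)\.version>' "${1:-pom.xml}" | sed -E 's/^[[:space:]]+//'
}
```

Run it from the root of the unpacked source tree, for example `pom_versions /d/spark_source/spark-2.4.4/pom.xml`.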

Environment setup

1. Git Bash

First, why install Git Bash at all? Mainly because it lets us run Linux-style commands on Windows.

Download link:

https://git-scm.com/download/win

Download the installer that matches your system (32-bit or 64-bit) and run it.

2. JDK 1.8

Download link:

https://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html

3. Scala 2.11.0

Download link:

https://www.scala-lang.org/download/2.11.0.html

4. Maven 3.5.4 (using the Aliyun mirror http://maven.aliyun.com/nexus/content/groups/public/)

Download link:

https://mirrors.tuna.tsinghua.edu.cn/apache/maven/maven-3/3.5.4/binaries/
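The post does not show how the Aliyun mirror was configured, so the following is only a sketch of one way to do it from Git Bash. The mirror id alimaven and the URL come from this post; the file name aliyun-settings.xml and the choice to mirror only central are assumptions.

```shell
# Write a standalone Maven settings file that routes the "central" repository
# through the Aliyun mirror. The file name is an arbitrary choice for this sketch.
cat > aliyun-settings.xml <<'EOF'
<settings>
  <mirrors>
    <mirror>
      <id>alimaven</id>
      <mirrorOf>central</mirrorOf>
      <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
    </mirror>
  </mirrors>
</settings>
EOF
```

Pass the file to a build with `mvn -s aliyun-settings.xml ...`, or copy the mirror element into the settings.xml that your Maven installation already uses.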

Once everything above is downloaded and installed, add the corresponding environment variables under System variables.
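For a quick trial before touching the Windows system settings, the same variables can be set for a single Git Bash session. This is a sketch: the JDK and Maven paths follow the install locations that appear in the mvn -version output in this post, while the Scala path is purely hypothetical because the post never says where Scala was installed.

```shell
# Session-only setup for Git Bash; nothing here persists after the window is closed.
export JAVA_HOME="/d/JAVA/JDK"              # D:\JAVA\JDK, as reported by mvn -version
export MAVEN_HOME="/d/apache-maven-3.5.4"   # D:\apache-maven-3.5.4, as reported by mvn -version
export SCALA_HOME="/d/scala"                # hypothetical location, adjust to your install
export PATH="$JAVA_HOME/bin:$MAVEN_HOME/bin:$SCALA_HOME/bin:$PATH"
```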

Finally, open Git Bash (or CMD) to verify the installation:

Jack_Roy@LAPTOP-FAAF41TU MINGW64 ~

$ mvn -version

Apache Maven 3.5.4 (1edded0938998edf8bf061f1ceb3cfdeccf443fe; 2018-06-18T02:33:14+08:00)

Maven home: D:\apache-maven-3.5.4

Java version: 1.8.0_181, vendor: Oracle Corporation, runtime: D:\JAVA\JDK\jre

Default locale: zh_CN, platform encoding: GBK

OS name: "windows 10", version: "10.0", arch: "amd64", family: "windows"

Jack_Roy@LAPTOP-FAAF41TU MINGW64 ~

$ java -version

java version "1.8.0_181"

Java(TM) SE Runtime Environment (build 1.8.0_181-b13)

Java HotSpot(TM) 64-Bit Server VM (build 25.181-b13, mixed mode)

Jack_Roy@LAPTOP-FAAF41TU MINGW64 ~

$ scala -version

Scala code runner version 2.11.0 -- Copyright 2002-2013, LAMP/EPFL
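The three checks above can also be run in one go. The helper below is a small sketch (check_tools is a made-up name) that only tests whether each command is on the PATH; it does not compare version numbers.

```shell
# Report which of the given commands are on PATH.
# Returns non-zero if at least one of them is missing.
check_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found:   $tool"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}
```

Call it as `check_tools java scala mvn` and fix any tool reported as missing before going on.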

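That completes the build environment. If java, scala, and mvn all report their versions as shown above, you can download the Spark source and build it.

Source download

Spark 2.4.4 source download link: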

http://spark.apache.org/downloads.html

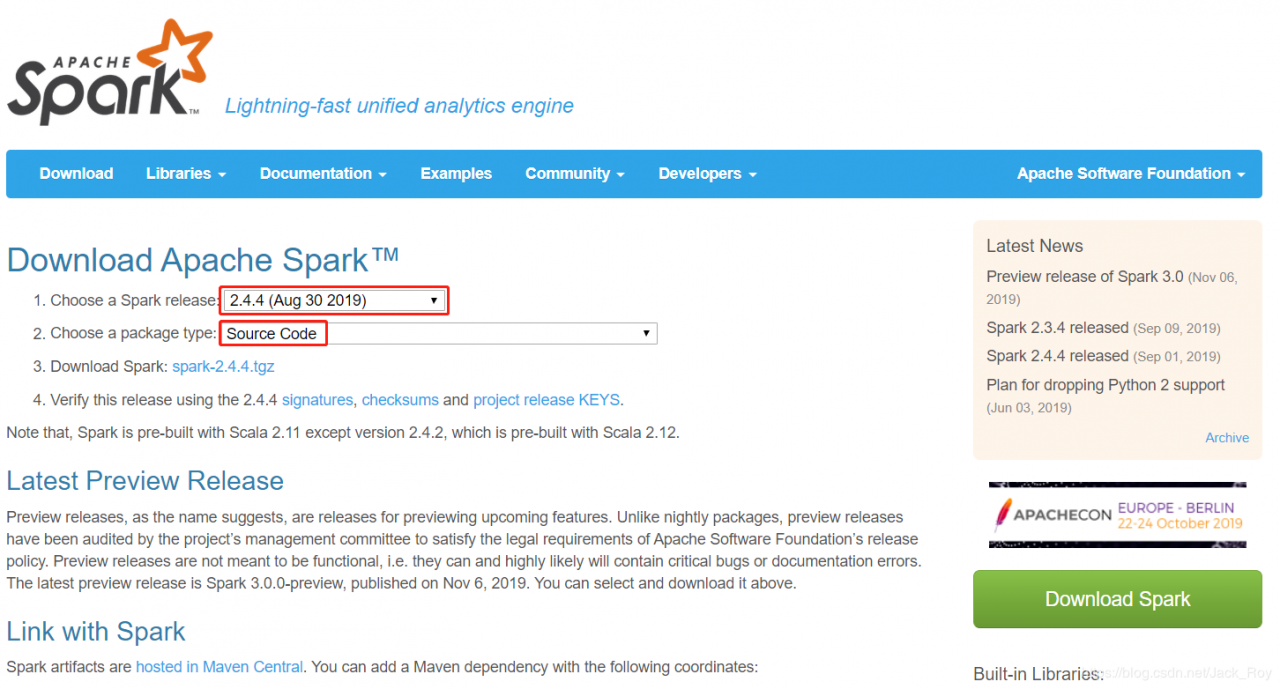

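On the download page, select the release and the package type (Source Code) to download the archive.

After the download finishes, unpack the archive and change to that directory in Git Bash: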

$ cd d:

Jack_Roy@LAPTOP-FAAF41TU MINGW64 /d

$ cd spark_source/spark-2.4.4

Jack_Roy@LAPTOP-FAAF41TU MINGW64 /d/spark_source/spark-2.4.4

$ ll

total 220

-rw-r--r-- 1 Jack_Roy 197121 2298 8月 28 05:20 appveyor.yml

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 assembly/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 bin/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 build/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 common/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 conf/

-rw-r--r-- 1 Jack_Roy 197121 995 8月 28 05:20 CONTRIBUTING.md

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 core/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 data/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 dev/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 docs/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 examples/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 external/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 graphx/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 hadoop-cloud/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 launcher/

-rw-r--r-- 1 Jack_Roy 197121 13335 8月 28 05:20 LICENSE

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 licenses/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 mllib/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 mllib-local/

-rw-r--r-- 1 Jack_Roy 197121 1531 8月 28 05:20 NOTICE

-rw-r--r-- 1 Jack_Roy 197121 103442 8月 28 05:20 pom.xml

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 project/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 python/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 R/

-rw-r--r-- 1 Jack_Roy 197121 3952 8月 28 05:20 README.md

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 repl/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 resource-managers/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 sbin/

-rw-r--r-- 1 Jack_Roy 197121 18386 8月 28 05:20 scalastyle-config.xml

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 sql/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 streaming/

drwxr-xr-x 1 Jack_Roy 197121 0 11月 9 13:54 tools/

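Compiling the source

Run the build command:

mvn -Pyarn -Phadoop-2.4 -Dscala-2.11 -DskipTests clean package

Note that the Spark 2.4.4 pom.xml has no hadoop-2.4 profile, so Maven skips that flag and builds against the default Hadoop version, as the warning at the end of the log shows. A profile that does exist, such as -Phadoop-2.7, selects a specific Hadoop line instead.

The build then starts: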

$ mvn -Pyarn -Phadoop-2.4 -Dscala-2.11 -DskipTests clean package

[INFO] Scanning for projects...

Downloading from alimaven: http://maven.aliyun.com/nexus/content/groups/public/org/apache/apache/18/apache-18.pom

Downloaded from alimaven: http://maven.aliyun.com/nexus/content/groups/public/org/apache/apache/18/apache-18.pom (16 kB at 14 kB/s)

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Build Order:

[INFO]

[INFO] Spark Project Parent POM [pom]

[INFO] Spark Project Tags [jar]

[INFO] Spark Project Sketch [jar]

[INFO] Spark Project Local DB [jar]

[INFO] Spark Project Networking [jar]

[INFO] Spark Project Shuffle Streaming Service [jar]

[INFO] Spark Project Unsafe [jar]

[INFO] Spark Project Launcher [jar]

[INFO] Spark Project Core [jar]

[INFO] Spark Project ML Local Library [jar]

[INFO] Spark Project GraphX [jar]

[INFO] Spark Project Streaming [jar]

[INFO] Spark Project Catalyst [jar]

[INFO] Spark Project SQL [jar]

[INFO] Spark Project ML Library [jar]

[INFO] Spark Project Tools [jar]

[INFO] Spark Project Hive [jar]

[INFO] Spark Project REPL [jar]

[INFO] Spark Project YARN Shuffle Service [jar]

[INFO] Spark Project YARN [jar]

[INFO] Spark Project Assembly [pom]

[INFO] Spark Integration for Kafka 0.10 [jar]

[INFO] Kafka 0.10+ Source for Structured Streaming [jar]

[INFO] Spark Project Examples [jar]

[INFO] Spark Integration for Kafka 0.10 Assembly [jar]

[INFO] Spark Avro [jar]

[INFO]

[INFO] ----------------------------------

[INFO] Building Spark Project Parent POM 2.4.4 [1/26]

[INFO] --------------------------------[ pom ]---------------------------------

About an hour and a half later (the Maven log below reports a total of 53:25 min for this run):

[INFO] --- maven-source-plugin:3.0.1:jar-no-fork (create-source-jar) @ spark-avro_2.11 ---

[INFO] Building jar: D:\spark_source\spark-2.4.4\external\avro\target\spark-avro_2.11-2.4.4-sources.jar

[INFO]

[INFO] --- maven-source-plugin:3.0.1:test-jar-no-fork (create-source-jar) @ spark-avro_2.11 ---

[INFO] Building jar: D:\spark_source\spark-2.4.4\external\avro\target\spark-avro_2.11-2.4.4-test-sources.jar

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary:

[INFO]

[INFO] Spark Project Parent POM 2.4.4 ..................... SUCCESS [04:27 min]

[INFO] Spark Project Tags ................................. SUCCESS [ 46.174 s]

[INFO] Spark Project Sketch ............................... SUCCESS [ 15.265 s]

[INFO] Spark Project Local DB ............................. SUCCESS [ 20.058 s]

[INFO] Spark Project Networking ........................... SUCCESS [ 21.526 s]

[INFO] Spark Project Shuffle Streaming Service ............ SUCCESS [ 10.628 s]

[INFO] Spark Project Unsafe ............................... SUCCESS [ 26.987 s]

[INFO] Spark Project Launcher ............................. SUCCESS [01:08 min]

[INFO] Spark Project Core ................................. SUCCESS [07:51 min]

[INFO] Spark Project ML Local Library ..................... SUCCESS [01:11 min]

[INFO] Spark Project GraphX ............................... SUCCESS [01:24 min]

[INFO] Spark Project Streaming ............................ SUCCESS [02:55 min]

[INFO] Spark Project Catalyst ............................. SUCCESS [05:10 min]

[INFO] Spark Project SQL .................................. SUCCESS [08:33 min]

[INFO] Spark Project ML Library ........................... SUCCESS [05:52 min]

[INFO] Spark Project Tools ................................ SUCCESS [ 19.158 s]

[INFO] Spark Project Hive ................................. SUCCESS [04:58 min]

[INFO] Spark Project REPL ................................. SUCCESS [ 49.847 s]

[INFO] Spark Project YARN Shuffle Service ................. SUCCESS [ 13.486 s]

[INFO] Spark Project YARN ................................. SUCCESS [01:20 min]

[INFO] Spark Project Assembly ............................. SUCCESS [ 6.206 s]

[INFO] Spark Integration for Kafka 0.10 ................... SUCCESS [ 53.368 s]

[INFO] Kafka 0.10+ Source for Structured Streaming ........ SUCCESS [01:26 min]

[INFO] Spark Project Examples ............................. SUCCESS [01:22 min]

[INFO] Spark Integration for Kafka 0.10 Assembly .......... SUCCESS [ 6.046 s]

[INFO] Spark Avro 2.4.4 ................................... SUCCESS [ 51.744 s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 53:25 min

[INFO] Finished at: 2019-11-09T16:58:39+08:00

[INFO] ------------------------------------------------------------------------

[WARNING] The requested profile "hadoop-2.4" could not be activated because it does not exist.

Java HotSpot(TM) 64-Bit Server VM warning: CodeCache is full. Compiler has been disabled.

Java HotSpot(TM) 64-Bit Server VM warning: Try increasing the code cache size using -XX:ReservedCodeCacheSize=

This means the build succeeded, and the project can now be imported into IDEA.

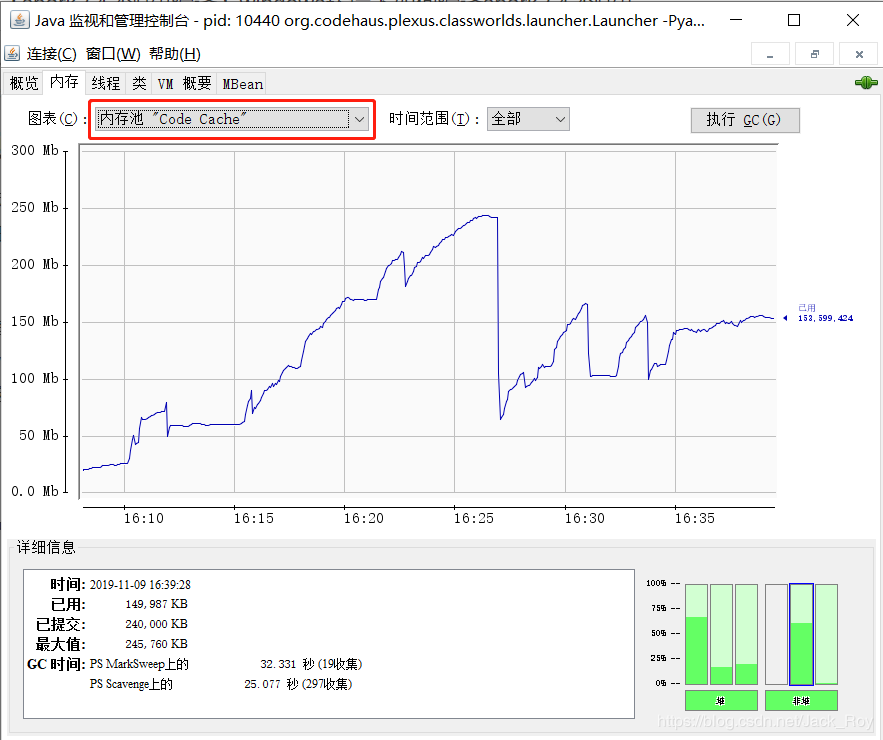

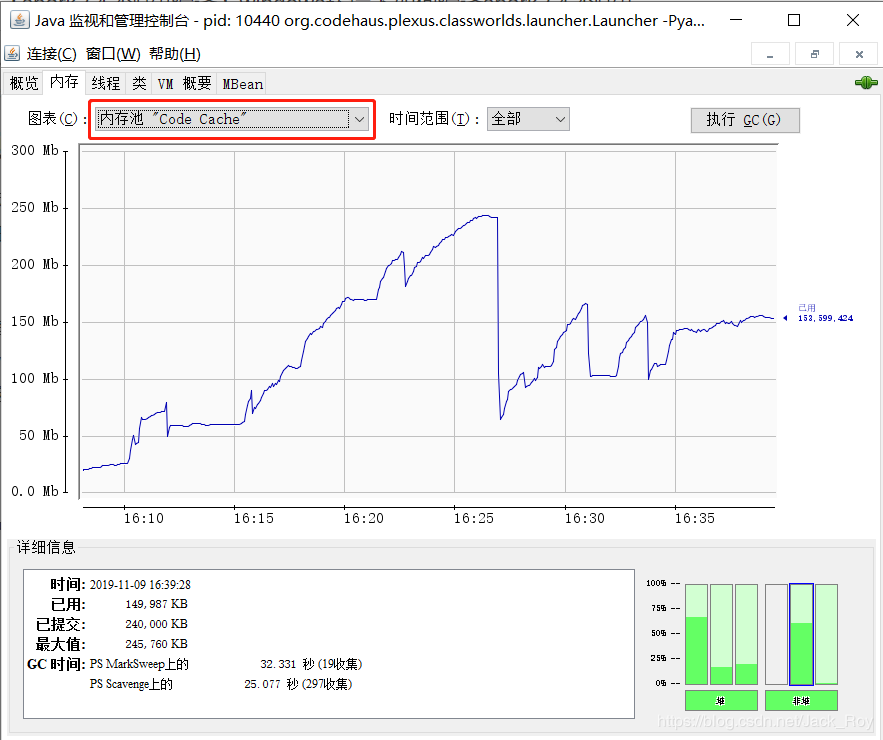
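The warning near the end of the build log says that the requested hadoop-2.4 profile does not exist. To see which Hadoop profiles a given pom actually declares, a helper along these lines can be used; it is a sketch, hadoop_profiles is a made-up name, and it simply pattern-matches profile ids rather than parsing the XML.

```shell
# List the hadoop-* profile ids declared in a pom.xml.
# Usage: hadoop_profiles [path-to-pom]   (defaults to ./pom.xml)
hadoop_profiles() {
  grep -oE '<id>hadoop-[0-9.]+</id>' "${1:-pom.xml}" | sed -E 's/<\/?id>//g' | sort -u
}
```

Run it from the source root and pass one of the listed ids to Maven, for example `mvn -Pyarn -Phadoop-2.7 -DskipTests clean package`. Maven's own `mvn help:all-profiles` goal should give a similar list.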

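Notes

The build takes roughly an hour and a half, longer on a slow machine. Build on a stable network connection; an unstable one can leave the build hanging.

If the build is interrupted, clear the local Maven repository before running it again, so that partially downloaded dependencies do not cause errors.

During one build I ran into a "CodeCache" error, which I eventually fixed by giving Maven's JVM more memory. While the build is running, you can open jconsole from the bin directory of the Java installation, go to the Memory tab, and monitor CodeCache usage.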

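I also read up on the CodeCache. It is the JVM memory area that holds the native code the JIT compiler produces for frequently executed methods; it is not a cache of the compiled Spark sources. Over a long build, the JVM running Maven keeps JIT-compiling more and more code, and the CodeCache can fill up. Once it is full, the JVM switches the JIT compiler off (the "CodeCache is full. Compiler has been disabled." warning), execution falls back to the much slower interpreter, CPU usage climbs, and the build can appear to stall. The fix is to enlarge the CodeCache by editing mvn.cmd in Maven's bin directory: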

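@REM -Xms512m and -Xmx1024m set the initial and maximum heap size

@REM -XX:PermSize and -XX:MaxPermSize size the permanent generation; JDK 8 removed it and ignores both options with a warning

@REM -XX:ReservedCodeCacheSize sets the size of the code cache

set MAVEN_OPTS=-Xms512m -Xmx1024m -XX:PermSize=512m -XX:MaxPermSize=1024M -XX:ReservedCodeCacheSize=512M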
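When the build is started from Git Bash, the same setting can be applied for the current session without editing mvn.cmd. This is a sketch under that assumption; it drops the two PermSize options, since the JDK 8 used here no longer has a permanent generation.

```shell
# Session-only alternative to editing mvn.cmd, for builds launched from Git Bash.
# Heap and code cache sizes are the same values used in the mvn.cmd line above.
export MAVEN_OPTS="-Xms512m -Xmx1024m -XX:ReservedCodeCacheSize=512m"
```

To see the code cache size your JVM uses by default, `java -XX:+PrintFlagsFinal -version | grep ReservedCodeCacheSize` prints the current value.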

Postscript

If you run into any problems while building, feel free to get in touch and discuss.
