[Paper Reading and PyTorch Practice] FCN: Fully Convolutional Networks for Semantic Segmentation

https://github.com/shelhamer/fcn.berkeleyvision.org

To visualize the prototxt: Netron

Running the caffemodel:

```python
import numpy as np
from PIL import Image
import matplotlib.pyplot as plt
import caffe

# load image, switch to BGR, subtract mean, and make dims C x H x W for Caffe
im = Image.open('data/pascal/VOCdevkit/VOC2012/JPEGImages/2007_000129.jpg')
in_ = np.array(im, dtype=np.float32)
in_ = in_[:, :, ::-1]
in_ -= np.array((104.00698793, 116.66876762, 122.67891434))
in_ = in_.transpose((2, 0, 1))

# load net
net = caffe.Net('voc-fcn8s/deploy.prototxt', 'voc-fcn8s/fcn8s-heavy-pascal.caffemodel', caffe.TEST)

# shape for input (data blob is N x C x H x W), set data
net.blobs['data'].reshape(1, *in_.shape)
net.blobs['data'].data[...] = in_

# run net and take argmax for prediction
net.forward()
out = net.blobs['score'].data[0].argmax(axis=0)

# show the predicted class map and save it to disk
plt.imshow(out, cmap='gray')
plt.axis('off')
plt.savefig('test.png')
plt.show()
```

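Dataset:

Link: https://pan.baidu.com/s/1qRvHIwxb7pHLq07WJ6ZGtg

Extraction code: pese

The PASCAL VOC 2012 Augmented Dataset is currently the most commonly used and most basic benchmark dataset for semantic segmentation. It is built by merging two datasets:

PASCAL VOC 2012: the image counts for semantic segmentation are 1464 for training, 1449 for validation, and 1456 for testing;

Semantic Boundaries Dataset: 8498 for training and 2857 for validation;

The resulting augmented dataset contains 10582 training images, 1449 validation images, and 1456 test images.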

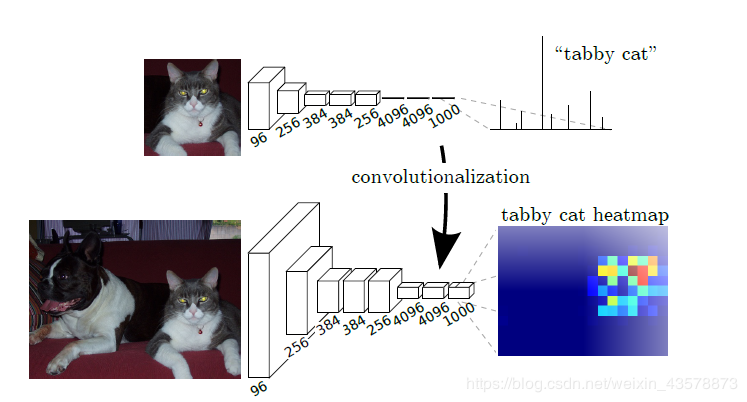

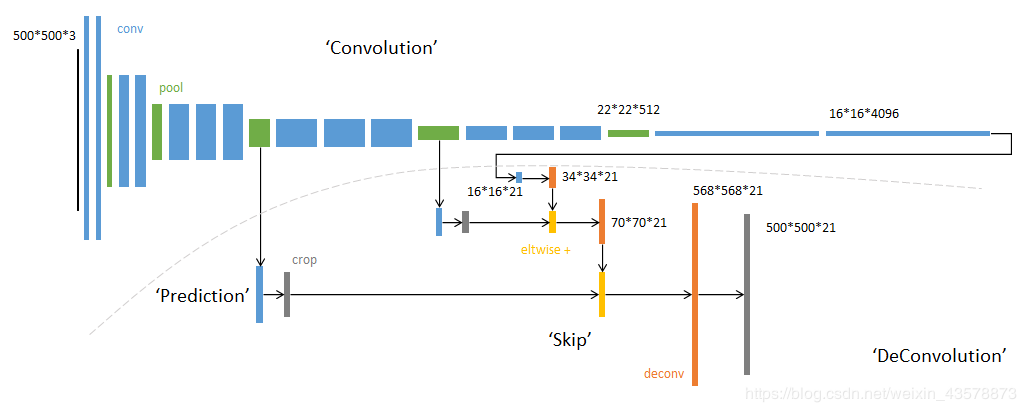

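Part 1. Paper Reading

Core ideas:

1. A fully convolutional network with no fully connected layers, which can take inputs of arbitrary size;
2. Deconvolution layers that enlarge the spatial size of the data, which makes fine-grained output possible;
3. A skip architecture that combines results from layers at different depths, which secures both robustness and precision.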

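The spatial size is enlarged with transposed convolution.

The forward pass of a transposed convolution (also called deconvolution, although that name is mathematically incorrect) is the backward pass of a convolution, and the backward pass of a transposed convolution is the forward pass of a convolution.

A project that visualizes the deconvolution process: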

https://github.com/vdumoulin/conv_arithmetic
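The forward/backward relationship can be made concrete in one dimension. The following is a minimal sketch in plain Python, assuming a stride-1 convolution without padding (cross-correlation, as deep learning frameworks compute it); the helper names `conv_matrix`, `matvec`, and `transpose` are made up for this illustration. The convolution is written as a matrix `C`, and the transposed convolution is simply multiplication by the transpose of `C`, which is also how the gradient flows back through the convolution.

```python
def conv_matrix(kernel, n_in):
    """Matrix C such that C @ x is the stride-1, unpadded 1-D convolution of x."""
    k = len(kernel)
    n_out = n_in - k + 1
    return [[kernel[j - i] if 0 <= j - i < k else 0 for j in range(n_in)]
            for i in range(n_out)]


def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def transpose(m):
    """Transpose a matrix given as a list of rows."""
    return [list(col) for col in zip(*m)]


kernel = [1, 2, 3]
C = conv_matrix(kernel, n_in=5)   # 3 x 5 matrix: maps 5 inputs to 3 outputs

x = [1, 0, 0, 0, 1]
y = matvec(C, x)                  # forward convolution: 5 values -> 3 values

g = [1, 1, 1]                     # stands in for a gradient w.r.t. the 3 outputs
up = matvec(transpose(C), g)      # transposed convolution: 3 values -> 5 values

print(y)    # [1, 0, 3]
print(up)   # [1, 3, 6, 5, 3]
```

The transposed convolution restores the input length (5), but not the input values, which is why "deconvolution" is a misleading name.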

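With input size w, kernel size k, padding p, and stride s, the output size N is:

Convolution: N = (w - k + 2p) / s + 1

Transposed convolution: N = (w - 1) × s + k - 2p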
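The two size formulas can be checked with a few lines of Python. This is a small sketch, not code from the paper; the function names `conv_out` and `deconv_out` are hypothetical, and the convolution formula is assumed to round down when the division is not exact.

```python
def conv_out(w, k, s=1, p=0):
    """Output size of a convolution: (w - k + 2p) / s + 1, rounded down."""
    return (w - k + 2 * p) // s + 1


def deconv_out(w, k, s=1, p=0):
    """Output size of a transposed convolution: (w - 1) * s + k - 2p."""
    return (w - 1) * s + k - 2 * p


print(conv_out(224, k=3, s=1, p=1))   # 224: a 3x3 conv with padding 1 keeps the size
print(conv_out(7, k=7))               # 1: a 7x7 conv collapses a 7x7 map to one value
print(deconv_out(16, k=4, s=2))       # 34: roughly 2x upsampling
print(deconv_out(70, k=16, s=8))      # 568: roughly 8x upsampling
```

Note that a transposed convolution with kernel 4 and stride 2 yields 2w + 2 rather than exactly 2w, which is one reason the network needs crop steps afterwards.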

The loss function is the sum of the per-pixel losses over the spatial map of the last layer, with a softmax loss applied at every pixel.
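As an illustration of this loss, here is a minimal NumPy sketch for a single image. The function name `pixelwise_softmax_loss` and the array shapes are assumptions made for this example: `score` holds raw class scores with shape (C, H, W) and `label` holds one class index per pixel.

```python
import numpy as np


def pixelwise_softmax_loss(score, label):
    """Sum over all pixels of the softmax cross-entropy loss.

    score: float array of shape (C, H, W) with raw class scores.
    label: int array of shape (H, W) with class indices in [0, C).
    """
    # log-softmax over the class axis, shifted by the max for numerical stability
    shifted = score - score.max(axis=0, keepdims=True)
    log_prob = shifted - np.log(np.exp(shifted).sum(axis=0, keepdims=True))
    # pick the log-probability of the true class at every pixel
    rows, cols = np.indices(label.shape)
    picked = log_prob[label, rows, cols]
    return -picked.sum()


# 21 classes on a 4 x 5 map; all-zero scores mean a uniform prediction
score = np.zeros((21, 4, 5))
label = np.random.default_rng(0).integers(0, 21, size=(4, 5))
loss = pixelwise_softmax_loss(score, label)
print(loss)  # equals 20 * log(21) for a uniform prediction over 20 pixels
```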

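Network architecture:

In the prototxt provided by the authors, the crop offsets differ slightly from the figure above. For example, the crop in the last layer of FCN-8s is 31, whereas the figure suggests 34. The difference comes from rounding up when the size after pooling is not evenly divisible.

If the computation is carried out with the original size h, w kept as a symbol, the result matches the crop values given by the authors.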
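One way to carry the original size through the network is sketched below. It is an illustration under stated assumptions, not the authors' derivation: every feature-map size is written as (h + offset) / stride for an unknown input size h, rounding is ignored, and the `Size` class and `crop_offset` helper are hypothetical names. The layer parameters (padding 100 on conv1_1, a 7x7 fc6, transposed convolutions with kernel 4 / stride 2 and kernel 16 / stride 8) are the ones used in the FCN-8s definition later in this post.

```python
from fractions import Fraction


class Size:
    """Spatial size (h + offset) / stride for an unknown input size h, ignoring rounding."""

    def __init__(self, offset=0, stride=1):
        self.offset = Fraction(offset)
        self.stride = Fraction(stride)

    def conv(self, k, pad=0):
        # a stride-1 convolution changes the size by 2*pad - (k - 1)
        return Size(self.offset + (2 * pad - (k - 1)) * self.stride, self.stride)

    def pool(self):
        # 2x2 pooling with stride 2 halves the size
        return Size(self.offset, self.stride * 2)

    def deconv(self, k, s):
        # unpadded transposed convolution: (size - 1) * s + k
        stride = self.stride / s
        return Size(self.offset + (k - s) * stride, stride)


def crop_offset(big, small):
    """Offset that centers `small` inside `big`; both must have the same stride."""
    assert big.stride == small.stride
    return (big.offset - small.offset) / (2 * big.stride)


image = Size()
x = image.conv(3, pad=100)             # conv1_1; the other 3x3 convs use pad=1 and keep the size
pool3 = x.pool().pool().pool()
pool4 = pool3.pool()
pool5 = pool4.pool()
score_fr = pool5.conv(7)               # fc6 is 7x7; fc7 and score_fr are 1x1
upscore2 = score_fr.deconv(4, 2)
upscore_pool4 = upscore2.deconv(4, 2)  # the element-wise fuse does not change the size
upscore8 = upscore_pool4.deconv(16, 8)

offsets = [
    int(crop_offset(pool4, upscore2)),       # crop of score_pool4
    int(crop_offset(pool3, upscore_pool4)),  # crop of score_pool3
    int(crop_offset(upscore8, image)),       # final crop back to the input size
]
print(offsets)  # [5, 9, 31]
```

Under these assumptions the three offsets come out as 5, 9, and 31, the same values used for slicing in the `forward` method of the network.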

```python
def read_images(root, train):
    # The list file holds whitespace-separated entries that alternate
    # between an image path and the matching label path.
    txt_fname = root + ('train_aug.txt' if train else 'val.txt')
    with open(txt_fname, 'rb') as f:
        images = f.read().split()
    data_path = []
    label_path = []
    for i in range(0, len(images), 2):
        # each entry is appended to root by plain string concatenation
        data_path.append(root + images[i].decode('gbk'))
        label_path.append(root + images[i+1].decode('gbk'))
    return data_path, label_path
```
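To make the expected list-file layout explicit, here is a small self-contained sketch. It writes a hypothetical two-line list file into a temporary directory and parses it the same way as `read_images`; the helper name `read_pairs`, the file names, and the `SegmentationClassAug` directory are assumptions for illustration only.

```python
import os
import tempfile


def read_pairs(list_file, root):
    """Parse a whitespace-separated list of alternating image and label paths."""
    with open(list_file, encoding='gbk') as f:
        tokens = f.read().split()
    data_path = [root + t for t in tokens[0::2]]    # even entries: images
    label_path = [root + t for t in tokens[1::2]]   # odd entries: labels
    return data_path, label_path


with tempfile.TemporaryDirectory() as tmp:
    list_file = os.path.join(tmp, 'train_aug.txt')
    # made-up content: one "image label" pair per line
    with open(list_file, 'w', encoding='gbk') as f:
        f.write('JPEGImages/a.jpg SegmentationClassAug/a.png\n'
                'JPEGImages/b.jpg SegmentationClassAug/b.png\n')
    data_path, label_path = read_pairs(list_file, root='VOC/')

print(data_path)   # ['VOC/JPEGImages/a.jpg', 'VOC/JPEGImages/b.jpg']
print(label_path)  # ['VOC/SegmentationClassAug/a.png', 'VOC/SegmentationClassAug/b.png']
```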

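Building a custom dataset:

```python
import numpy
import PIL.Image
import torch
import torchvision


class VocSegDataset(torch.utils.data.Dataset):
    def __init__(self, root, train, transforms=None):
        self.train = train
        # NOTE: the `transforms` argument is currently ignored;
        # ImageNet mean/std normalization is always applied.
        self.transforms = torchvision.transforms.Normalize(
            mean=[0.485, 0.456, 0.406],
            std=[0.229, 0.224, 0.225]
        )
        self.data_list, self.label_list = read_images(root, train)

    def __getitem__(self, item):
        img = self.data_list[item]
        label = self.label_list[item]
        img = PIL.Image.open(img)
        label = PIL.Image.open(label)
        # H x W x C uint8 image -> C x H x W float tensor in [0, 1], then normalize
        img = torchvision.transforms.ToTensor()(img)
        img = self.transforms(img)
        # the label stays a per-pixel class-index map of shape (H, W)
        label = numpy.array(label)
        label = torch.tensor(label).to(torch.float32)
        return img, label

    def __len__(self):
        return len(self.data_list)
```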

Building the dataloader:

```python
def make_data_loader(cfg, is_train):
    #root = cfg['data']['root']
    root = 'D:/datasets/VOC2012/TrainVal/'
    dataset = VocSegDataset(root, is_train)
    # The default batch_size of 1 is kept because the images differ in size
    # and cannot be stacked into one batch; shuffle only during training.
    dataloader = torch.utils.data.DataLoader(dataset, shuffle=is_train)
    return dataloader
```

Defining the network:

```python
import torch
import torch.nn.functional as F


class FCN8s(torch.nn.Module):
    def __init__(self):
        super(FCN8s, self).__init__()
        # padding=100 on the first conv leaves enough border for the later crops
        self.conv1_1 = torch.nn.Conv2d(3, 64, 3, padding=100)
        self.conv1_2 = torch.nn.Conv2d(64, 64, 3, padding=1)
        self.conv2_1 = torch.nn.Conv2d(64, 128, 3, padding=1)
        self.conv2_2 = torch.nn.Conv2d(128, 128, 3, padding=1)
        self.conv3_1 = torch.nn.Conv2d(128, 256, 3, padding=1)
        self.conv3_2 = torch.nn.Conv2d(256, 256, 3, padding=1)
        self.conv3_3 = torch.nn.Conv2d(256, 256, 3, padding=1)
        self.conv4_1 = torch.nn.Conv2d(256, 512, 3, padding=1)
        self.conv4_2 = torch.nn.Conv2d(512, 512, 3, padding=1)
        self.conv4_3 = torch.nn.Conv2d(512, 512, 3, padding=1)
        self.conv5_1 = torch.nn.Conv2d(512, 512, 3, padding=1)
        self.conv5_2 = torch.nn.Conv2d(512, 512, 3, padding=1)
        self.conv5_3 = torch.nn.Conv2d(512, 512, 3, padding=1)
        # fully connected layers of VGG16 rewritten as convolutions
        self.fc6 = torch.nn.Conv2d(512, 4096, 7)
        self.fc7 = torch.nn.Conv2d(4096, 4096, 1)
        self.score_fr = torch.nn.Conv2d(4096, 21, 1)
        self.upscore2 = torch.nn.ConvTranspose2d(21, 21, 4, stride=2)
        self.score_pool4 = torch.nn.Conv2d(512, 21, 1)
        # separate 2x upsampling layer, as in the prototxt (upscore_pool4),
        # instead of reusing the weights of upscore2
        self.upscore_pool4 = torch.nn.ConvTranspose2d(21, 21, 4, stride=2)
        self.score_pool3 = torch.nn.Conv2d(256, 21, 1)
        self.upscore8 = torch.nn.ConvTranspose2d(21, 21, 16, stride=8)

    def forward(self, input):
        # Caffe pooling rounds output sizes up, so ceil_mode=True mirrors the prototxt.
        # layer 1
        x = F.relu(self.conv1_1(input))
        x = F.relu(self.conv1_2(x))
        pool_1 = F.max_pool2d(x, 2, stride=2, ceil_mode=True)
        # layer 2
        x = F.relu(self.conv2_1(pool_1))
        x = F.relu(self.conv2_2(x))
        pool_2 = F.max_pool2d(x, 2, stride=2, ceil_mode=True)
        # layer 3
        x = F.relu(self.conv3_1(pool_2))
        x = F.relu(self.conv3_2(x))
        x = F.relu(self.conv3_3(x))
        pool_3 = F.max_pool2d(x, 2, stride=2, ceil_mode=True)
        # layer 4
        x = F.relu(self.conv4_1(pool_3))
        x = F.relu(self.conv4_2(x))
        x = F.relu(self.conv4_3(x))
        pool_4 = F.max_pool2d(x, 2, stride=2, ceil_mode=True)
        # layer 5
        x = F.relu(self.conv5_1(pool_4))
        x = F.relu(self.conv5_2(x))
        x = F.relu(self.conv5_3(x))
        pool_5 = F.max_pool2d(x, 2, stride=2, ceil_mode=True)
        # fc6
        x = F.relu(self.fc6(pool_5))
        x = F.dropout2d(x, training=self.training)
        # fc7
        x = F.relu(self.fc7(x))
        x = F.dropout2d(x, training=self.training)
        # score
        x = self.score_fr(x)
        # upsample 2x, then fuse with the cropped pool_4 scores
        x = self.upscore2(x)
        score_pool_4 = self.score_pool4(pool_4)
        score_pool_4 = score_pool_4[:, :, 5:5+x.size()[2], 5:5+x.size()[3]]
        x = x + score_pool_4
        # upsample 2x again, then fuse with the cropped pool_3 scores
        x = self.upscore_pool4(x)
        score_pool_3 = self.score_pool3(pool_3)
        score_pool_3 = score_pool_3[:, :, 9:9+x.size()[2], 9:9+x.size()[3]]
        x = x + score_pool_3
        # upsample 8x and crop back to the input size
        x = self.upscore8(x)
        x = x[:, :, 31:31+input.size()[2], 31:31+input.size()[3]].contiguous()
        return x
```

After that, training can begin.
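The post does not show the training code, so the following is only a rough sketch of what one training step might look like. Several things here are assumptions: a 1x1 convolution stands in for `FCN8s` so the example runs quickly on random data, the SGD hyperparameters are placeholders, the loss is summed over pixels as described in the paper-reading part, and label value 255 (the void/boundary marker in the VOC segmentation masks) is ignored. The float label is cast to `long` because `cross_entropy` expects integer class indices.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

# Stand-in for FCN8s: any module mapping (N, 3, H, W) to (N, 21, H, W) fits here.
model = torch.nn.Conv2d(3, 21, kernel_size=1)
# Placeholder hyperparameters, not values from the post.
optimizer = torch.optim.SGD(model.parameters(), lr=1e-4, momentum=0.9)

# Fake batch of one image; labels are class indices 0..20 stored as float32,
# with one row set to 255 to mimic void pixels.
img = torch.randn(1, 3, 32, 32)
label = torch.randint(0, 21, (1, 32, 32)).to(torch.float32)
label[0, 0, :] = 255


def train_step(model, optimizer, img, label):
    """Run one optimization step and return the summed per-pixel loss."""
    model.train()
    optimizer.zero_grad()
    score = model(img)  # (N, 21, H, W) class scores
    loss = F.cross_entropy(score, label.long(), ignore_index=255, reduction='sum')
    loss.backward()
    optimizer.step()
    return loss.item()


loss_value = train_step(model, optimizer, img, label)
print(loss_value)
```

In a real run, `model` would be an `FCN8s` instance and `img, label` would come from iterating over the dataloader.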

Author: Dogged21