ResNet + TensorFlow 1.14 + binary classification of remote sensing images

```python
# resnet_model.py (the training script imports this module as `resnet_model`)
import tensorflow as tf  # written for TensorFlow 1.14 (tf.layers / tf.contrib APIs)


def _resnet_block_v1(inputs, filters, stride, projection, stage, blockname, TRAINING):
    """Original (post-activation) block: conv-BN-ReLU-conv-BN, add shortcut, ReLU."""
    # defining name basis
    conv_name_base = 'res' + str(stage) + blockname + '_branch'
    bn_name_base = 'bn' + str(stage) + blockname + '_branch'

    with tf.name_scope("conv_block_stage" + str(stage)):
        if projection:
            # 1x1 projection shortcut to match the new channel count and stride
            shortcut = tf.layers.conv2d(inputs, filters, (1, 1),
                                        strides=(stride, stride),
                                        name=conv_name_base + '1',
                                        kernel_initializer=tf.contrib.layers.variance_scaling_initializer(),
                                        reuse=tf.AUTO_REUSE, padding='same',
                                        data_format='channels_last')
            shortcut = tf.layers.batch_normalization(shortcut, axis=-1, name=bn_name_base + '1',
                                                     training=TRAINING, reuse=tf.AUTO_REUSE)
        else:
            shortcut = inputs

        outputs = tf.layers.conv2d(inputs, filters,
                                   kernel_size=(3, 3),
                                   strides=(stride, stride),
                                   kernel_initializer=tf.contrib.layers.variance_scaling_initializer(),
                                   name=conv_name_base + '2a', reuse=tf.AUTO_REUSE, padding='same',
                                   data_format='channels_last')
        outputs = tf.layers.batch_normalization(outputs, axis=-1, name=bn_name_base + '2a',
                                                training=TRAINING, reuse=tf.AUTO_REUSE)
        outputs = tf.nn.relu(outputs)

        outputs = tf.layers.conv2d(outputs, filters,
                                   kernel_size=(3, 3),
                                   strides=(1, 1),
                                   kernel_initializer=tf.contrib.layers.variance_scaling_initializer(),
                                   name=conv_name_base + '2b', reuse=tf.AUTO_REUSE, padding='same',
                                   data_format='channels_last')
        outputs = tf.layers.batch_normalization(outputs, axis=-1, name=bn_name_base + '2b',
                                                training=TRAINING, reuse=tf.AUTO_REUSE)

        outputs = tf.add(shortcut, outputs)
        outputs = tf.nn.relu(outputs)
    return outputs


def _resnet_block_v2(inputs, filters, stride, projection, stage, blockname, TRAINING):
    """Pre-activation block: BN-ReLU-conv-BN-ReLU-conv, add shortcut (no final ReLU)."""
    # defining name basis
    conv_name_base = 'res' + str(stage) + blockname + '_branch'
    bn_name_base = 'bn' + str(stage) + blockname + '_branch'

    with tf.name_scope("conv_block_stage" + str(stage)):
        shortcut = inputs
        outputs = tf.layers.batch_normalization(inputs, axis=-1, name=bn_name_base + '2a',
                                                training=TRAINING, reuse=tf.AUTO_REUSE)
        outputs = tf.nn.relu(outputs)

        if projection:
            # the projection shortcut takes the pre-activated input
            shortcut = tf.layers.conv2d(outputs, filters, (1, 1),
                                        strides=(stride, stride),
                                        name=conv_name_base + '1',
                                        kernel_initializer=tf.contrib.layers.variance_scaling_initializer(),
                                        reuse=tf.AUTO_REUSE, padding='same',
                                        data_format='channels_last')
            shortcut = tf.layers.batch_normalization(shortcut, axis=-1, name=bn_name_base + '1',
                                                     training=TRAINING, reuse=tf.AUTO_REUSE)

        outputs = tf.layers.conv2d(outputs, filters,
                                   kernel_size=(3, 3),
                                   strides=(stride, stride),
                                   kernel_initializer=tf.contrib.layers.variance_scaling_initializer(),
                                   name=conv_name_base + '2a', reuse=tf.AUTO_REUSE, padding='same',
                                   data_format='channels_last')
        outputs = tf.layers.batch_normalization(outputs, axis=-1, name=bn_name_base + '2b',
                                                training=TRAINING, reuse=tf.AUTO_REUSE)
        outputs = tf.nn.relu(outputs)

        outputs = tf.layers.conv2d(outputs, filters,
                                   kernel_size=(3, 3),
                                   strides=(1, 1),
                                   kernel_initializer=tf.contrib.layers.variance_scaling_initializer(),
                                   name=conv_name_base + '2b', reuse=tf.AUTO_REUSE, padding='same',
                                   data_format='channels_last')

        outputs = tf.add(shortcut, outputs)
    return outputs


def inference(images, training, filters, n, ver):
    """Construct the ResNet model.

    Args:
        images: float tensor of shape [batch, height, width, channels] (channels_last)
        training: Python bool or scalar tf.bool tensor; True makes batch norm use batch statistics
        filters: integer, filters of the first ResNet stage; each following stage doubles it
        n: integer, number of ResNet blocks per stage; the network has 6n+2 layers in total
        ver: integer, 1 or 2, for ResNet v1 or v2
    Returns:
        Tensor of shape [batch, 2]: unnormalized logits for the two classes
    """
    # Layer 1 is a 3x3 conv with 3 input channels and `filters` output channels, so the
    # identity shortcuts of stage 0 (no projection) match the block outputs
    inputs = tf.layers.conv2d(images, filters=filters, kernel_size=(3, 3), strides=(1, 1),
                              name='conv1', reuse=tf.AUTO_REUSE, padding='same',
                              data_format='channels_last')

    # v2 (pre-activation) blocks start with BN + ReLU themselves, so only v1 needs them here
    if ver == 1:
        inputs = tf.layers.batch_normalization(inputs, axis=-1, name='bn_conv1',
                                               training=training, reuse=tf.AUTO_REUSE)
        inputs = tf.nn.relu(inputs)

    for stage in range(3):
        stage_filter = filters * (2 ** stage)
        for i in range(n):
            stride = 1
            projection = False
            # the first block of stages 1 and 2 halves the spatial size and doubles the channels
            if i == 0 and stage > 0:
                stride = 2
                projection = True
            if ver == 1:
                inputs = _resnet_block_v1(inputs, stage_filter, stride, projection,
                                          stage, blockname=str(i), TRAINING=training)
            else:
                inputs = _resnet_block_v2(inputs, stage_filter, stride, projection,
                                          stage, blockname=str(i), TRAINING=training)

    # only needed for version 2: final BN + ReLU before pooling
    if ver == 2:
        inputs = tf.layers.batch_normalization(inputs, axis=-1, name='pre_activation_final_norm',
                                               training=training, reuse=tf.AUTO_REUSE)
        inputs = tf.nn.relu(inputs)

    # global average pooling over height and width
    axes = [1, 2]
    inputs = tf.reduce_mean(inputs, axes, keepdims=True)
    inputs = tf.identity(inputs, 'final_reduce_mean')
    inputs = tf.reshape(inputs, [-1, filters * (2 ** 2)])
    # fully connected layer producing the 2 class logits
    inputs = tf.layers.dense(inputs=inputs, units=2, name='dense1', reuse=tf.AUTO_REUSE)
    return inputs
```
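As a quick check on the docstring's 6n+2 claim and on the shapes `inference` produces for the 256×256×3 images used here, the pure-Python sketch below walks the same stage loop without TensorFlow. The helper `resnet_shapes` is hypothetical, and it assumes `'same'` padding, so a stride-2 layer maps a size H to ceil(H/2).

```python
import math


def resnet_shapes(height, width, filters, n):
    """Hypothetical helper: mirror the stage loop of inference() and return
    (stage, height, width, channels) after each stage plus the layer count."""
    h, w = height, width
    shapes = []
    for stage in range(3):
        stage_filter = filters * (2 ** stage)
        for i in range(n):
            stride = 2 if (i == 0 and stage > 0) else 1
            # 'same' padding: output size is ceil(input / stride)
            h, w = math.ceil(h / stride), math.ceil(w / stride)
        shapes.append((stage, h, w, stage_filter))
    # conv1 + (2 convs per block * n blocks * 3 stages) + final dense layer
    layers = 1 + 2 * n * 3 + 1
    return shapes, layers


shapes, layers = resnet_shapes(256, 256, filters=16, n=5)
for stage, h, w, c in shapes:
    print(f"stage {stage}: {h}x{w}x{c}")
print("layers:", layers)
print("features after global average pooling:", shapes[-1][3])
```

With `filters = 16` and `n = 5` as in the training script, this gives a 32-layer network whose pooled feature vector has `filters*(2**2)` = 64 entries, which is why the final `tf.reshape` uses that width. The 1×1 projection convolutions are not counted in 6n+2.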

```python
# Training script, part 1: imports and the TFRecord input pipeline
import os

# Hide TensorFlow's C++ info/warning logs; set this before TensorFlow is imported
os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"

import tensorflow as tf

import resnet_model  # the model code above, saved as resnet_model.py


def read_tfRecord(file_tfRecord):  # input: path to a .tfrecords file
    queue = tf.train.string_input_producer([file_tfRecord])
    reader = tf.TFRecordReader()
    _, serialized_example = reader.read(queue)
    features = tf.parse_single_example(
        serialized_example,
        features={
            'image_raw': tf.FixedLenFeature([], tf.string),
            'label': tf.FixedLenFeature([], tf.int64)
        }
    )
    # raw bytes -> flat uint8 vector holding one 256x256 RGB image
    image = tf.decode_raw(features['image_raw'], tf.uint8)
    image = tf.reshape(image, [256 * 256 * 3])
    # scale pixel values from [0, 255] to [-0.5, 0.5]
    image = tf.cast(image, tf.float32) * (1. / 255) - 0.5
    # image = tf.image.per_image_standardization(image)
    label = tf.cast(features['label'], tf.int64)
    # one-hot encode the 0/1 label directly as float32 to match the y_label placeholder
    one_hot_labels = tf.one_hot(indices=label, depth=2, on_value=1.0, off_value=0.0,
                                axis=-1, dtype=tf.float32, name="one_hot")
    return image, one_hot_labels
```
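To see what `read_tfRecord` hands to the network, here is a NumPy sketch of the same per-example steps: decode the raw bytes, flatten, scale to [-0.5, 0.5], and one-hot encode the label. It is an illustration only, assuming each record's `image_raw` field holds 256×256×3 uint8 bytes as the reshape implies; `decode_example` is a hypothetical name and the synthetic bytes stand in for a real record.

```python
import numpy as np


def decode_example(image_raw: bytes, label: int):
    """Hypothetical NumPy mirror of read_tfRecord's per-example processing."""
    # tf.decode_raw(..., tf.uint8) followed by a reshape to a flat 256*256*3 vector
    image = np.frombuffer(image_raw, dtype=np.uint8).reshape(256 * 256 * 3)
    # cast to float32 and map [0, 255] to [-0.5, 0.5]
    image = image.astype(np.float32) * (1.0 / 255) - 0.5
    # equivalent of tf.one_hot(label, depth=2) with dtype float32
    one_hot = np.eye(2, dtype=np.float32)[label]
    return image, one_hot


# synthetic "record": pixel values cycling through 0..255
fake_raw = (np.arange(256 * 256 * 3) % 256).astype(np.uint8).tobytes()
image, one_hot = decode_example(fake_raw, label=1)
print(image.shape, image.dtype, image.min(), image.max())
print(one_hot)
```

The flat vector is what the `x` placeholder expects; the training graph reshapes it back to `[-1, 256, 256, 3]` before the first convolution.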

```python
# Training script, part 2: graph construction and training loop
if __name__ == '__main__':
    outputdir1 = "/root/UCMerced1"  # training set
    outputdir2 = "/root/UCMerced2"  # held-out set used for the per-epoch evaluation
    traindata1, trainlabel1 = read_tfRecord(outputdir1 + ".tfrecords")
    traindata2, trainlabel2 = read_tfRecord(outputdir2 + ".tfrecords")
    image_batch1, label_batch1 = tf.train.shuffle_batch([traindata1, trainlabel1],
                                                        batch_size=20, capacity=200, min_after_dequeue=10)
    image_batch2, label_batch2 = tf.train.shuffle_batch([traindata2, trainlabel2],
                                                        batch_size=20, capacity=200, min_after_dequeue=10)

    with tf.name_scope("Input_layer"):
        x = tf.placeholder(tf.float32, [None, 256 * 256 * 3], name="x")
        x_image = tf.reshape(x, [-1, 256, 256, 3])
    with tf.name_scope("training_bool"):
        training = tf.placeholder(tf.bool)  # True: batch norm uses batch statistics

    filters = 16  # the first resnet block filter number
    n = 5  # the basic resnet block number, total network layers are 6n+2
    ver = 2  # the resnet block version
    inputs = resnet_model.inference(x_image, training, filters, n, ver)  # logits
    y_predict = tf.nn.softmax(inputs)

    with tf.name_scope("optimizer"):
        y_label = tf.placeholder(tf.float32, [None, 2])
        # per-example cross-entropy computed from the logits (numerically stable, no clipping)
        cross_entropy = tf.reduce_mean(
            tf.nn.softmax_cross_entropy_with_logits_v2(labels=y_label, logits=inputs))
        # run the batch-norm moving-average updates together with every training step
        update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS)
        with tf.control_dependencies(update_ops):
            optimizer = tf.train.AdadeltaOptimizer(learning_rate=0.1).minimize(cross_entropy)

    with tf.name_scope("evaluate_model"):
        correct_prediction = tf.equal(tf.argmax(y_label, 1), tf.argmax(y_predict, 1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
        tf.summary.scalar('accuracy', accuracy)

    saver = tf.train.Saver(max_to_keep=5)
    merged = tf.summary.merge_all()

    Epochs = 30
    loss_list = []
    epoch_list = []
    accuracy_list = []

    os.makedirs("model_conv", exist_ok=True)  # the checkpoint directory must exist
    sess = tf.Session()
    writer = tf.summary.FileWriter('/root/qzlogs', tf.get_default_graph())
    sess.run(tf.global_variables_initializer())
    coord = tf.train.Coordinator()
    threads = tf.train.start_queue_runners(sess=sess, coord=coord)

    # one fixed batch of 20 held-out images, reused for every evaluation
    batch_x2, batch_y2 = sess.run([image_batch2, label_batch2])
    try:
        for epoch in range(Epochs):
            if coord.should_stop():
                break
            for i in range(8):  # 8 training batches of 20 images per epoch
                batch_x, batch_y = sess.run([image_batch1, label_batch1])
                summary, _ = sess.run([merged, optimizer],
                                      feed_dict={x: batch_x, y_label: batch_y, training: True})
                writer.add_summary(summary, epoch * 8 + i)  # one distinct step per batch
            loss, acc = sess.run([cross_entropy, accuracy],
                                 feed_dict={x: batch_x2, y_label: batch_y2, training: False})
            epoch_list.append(epoch)
            loss_list.append(loss)
            accuracy_list.append(acc)
            print("-----------------------------------------------------------")
            print("Train Epoch:", "%02d" % (epoch + 1), "Loss=", "{:.9f}".format(loss), "Accuracy=", acc)
            saver.save(sess, "model_conv/my-model", global_step=epoch)
            print("save the model")
            print("------------------------------------------------------------")
    except tf.errors.OutOfRangeError:
        print('Input queue closed, stopping training')
    finally:
        # When done, ask the threads to stop.
        coord.request_stop()
        # And wait for them to actually do it.
        coord.join(threads)
        writer.close()
        sess.close()
```
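A note on the loss: the post's original expression, `-tf.reduce_mean(y_label * tf.log(tf.clip_by_value(y_predict, 1e-10, 1.0)))`, averages over both the batch and the two class columns, so for two classes it is half the usual per-example cross-entropy, and the clipping caps the penalty for a confidently wrong prediction at -log(1e-10) ≈ 23.03. Computing the loss from the logits, as `tf.nn.softmax_cross_entropy_with_logits_v2` does, avoids both effects. The NumPy sketch below compares the two on made-up logits; both function names are hypothetical.

```python
import numpy as np


def clipped_softmax_loss(logits, labels):
    """Hypothetical mirror of -reduce_mean(y * log(clip(softmax(z), 1e-10, 1)))."""
    z = logits - logits.max(axis=1, keepdims=True)
    p = np.exp(z) / np.exp(z).sum(axis=1, keepdims=True)
    # the mean runs over batch AND class columns
    return -np.mean(labels * np.log(np.clip(p, 1e-10, 1.0)))


def logits_cross_entropy(logits, labels):
    """Per-example cross-entropy from logits via log-softmax, averaged over the batch."""
    z = logits - logits.max(axis=1, keepdims=True)
    log_p = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return -np.mean(np.sum(labels * log_p, axis=1))


labels = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]])
logits = np.array([[2.0, -1.0], [0.5, 0.3], [-40.0, 40.0]])  # last row is confidently wrong

ratio = clipped_softmax_loss(logits[:2], labels[:2]) / logits_cross_entropy(logits[:2], labels[:2])
print("ratio on the first two rows:", ratio)  # 0.5 up to rounding: averaging over 2 columns halves it
print("clipped loss, all rows:", clipped_softmax_loss(logits, labels))
print("logits loss, all rows:", logits_cross_entropy(logits, labels))
```

On the first two rows the only difference is the factor 1/2; on the third row the clipped version stops growing, so a badly wrong prediction contributes far less gradient signal than it would with the logit-based loss.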

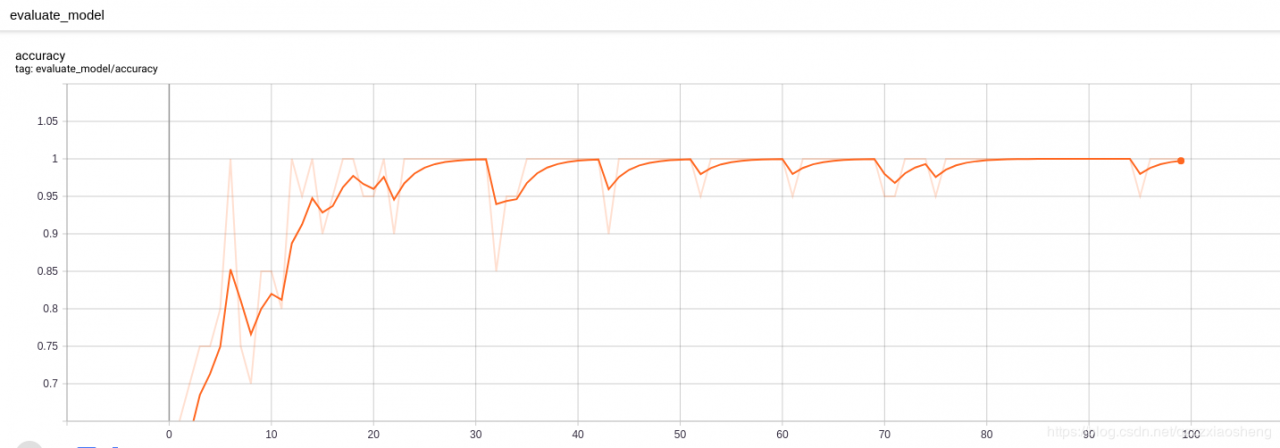

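This is a brief implementation of binary classification with ResNet on TensorFlow 1.14. The final accuracy reached 1, and I am not sure whether the model is overfitting. Keep in mind that the script measures accuracy on one fixed batch of 20 images drawn once from UCMerced2.tfrecords, so a perfect score on such a small sample says little by itself.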
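One way to put a perfect score in perspective, assuming the evaluation uses a single batch of 20 images as the script does: if all n evaluated images are classified correctly, the exact (Clopper-Pearson) one-sided 95% lower confidence bound on the true accuracy is 0.05^(1/n). A tiny sketch; the function name is hypothetical.

```python
def perfect_score_lower_bound(n, alpha=0.05):
    """Exact one-sided (Clopper-Pearson) lower confidence bound on accuracy
    when all n evaluated images are correct: the p that solves p**n = alpha."""
    return alpha ** (1.0 / n)


for n in (20, 100, 1000):
    print(n, round(perfect_score_lower_bound(n), 3))
```

For n = 20 the bound is about 0.861, so 20/20 correct is still consistent with a true accuracy near 86%. Evaluating on every held-out image, and comparing that figure with the training accuracy, gives a much clearer answer to the overfitting question.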


Code adapted from a reference blog post.

Author: 哈哈,有点意思