使用python爬取淘宝商品信息

1.首先要安装两个库,requests和re库

2.定义一个获取页面的函数

ef getHTMLText(url):

kv = {'cookie':'淘宝页面的cookie'}

try:

r = requests.get(url, headers=kv,timeout=30)

r.raise_for_status()

r.encoding = r.apparent_encoding

return r.text

except:

return ""

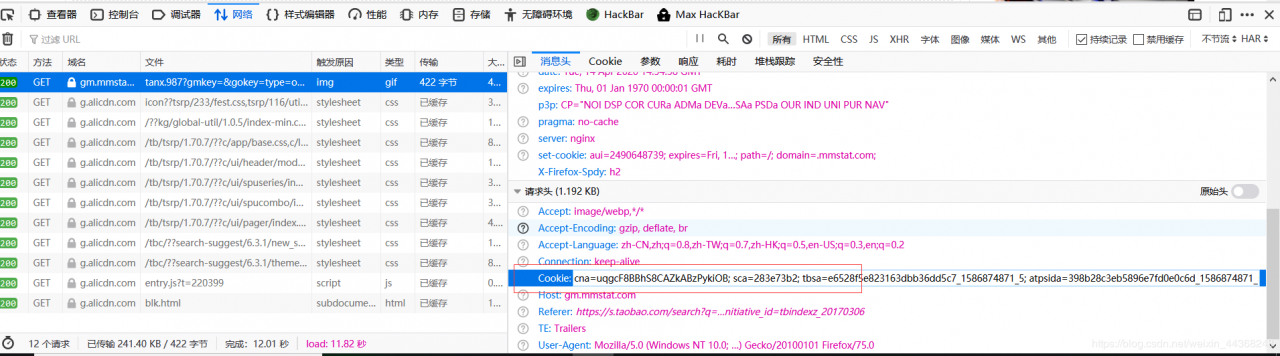

浏览器查看cookie的方法,摁下F2,在网络那里即可找到当前使用的cookie,看不到的话刷新一下(注意一定是淘宝的哦)

2.定义一个对页面进行解析的函数

2.定义一个对页面进行解析的函数

#对获取的页面进行解析

#ilt结果列表类型,html相关页面信息

def parsePage(ilt, html):

try:

plt = re.findall(r'\"view_price\"\:\"[\d\.]*\"', html)

tlt = re.findall(r'\"raw_title\"\:\".*?\"', html)

for i in range(len(plt)):

price = eval(plt[i].split(':')[1])

title = eval(tlt[i].split(':')[1])

ilt.append([price, title])

except:

print("")

3.定义输出商品信息的函数

#输出淘宝的商品信息

def printGoodsList(ilt):

tplt = "{:4}\t{:8}\t{:16}"

print(tplt.format("序号", "价格", "商品名称"))

count = 0

for g in ilt:

count = count + 1

print(tplt.format(count, g[0], g[1]))

4.定义主函数,记录程序的运行

#定义主函数,记录程序运行的相关进程

def main():

goods = '书包'

depth = 4 #设置爬取的深度,即爬取几个页面

start_url = 'https://s.taobao.com/search?q=' + goods

infoList = []

for i in range(depth): #遍历页面

try:

url = start_url

html = getHTMLText(url)

parsePage(infoList, html)

except:

continue

printGoodsList(infoList)

5.完整可运行的源代码如下:

import requests

import re

#获取页面函数

def getHTMLText(url):

kv = {'cookie':'thw=ca; v=0; cna=lV72Fg6CyVACAQ1LTjsroqGC; _m_h5_tk=ab017231908444ff9a47620d126610c3_1586861353081; _m_h5_tk_enc=a3d72eb3c5690474ec4279c210fe1200; cookie2=143d8c47edce133f238affd58002e93f; t=06cc25ec8972ddc4343a8cb6f0409166; _tb_token_=30e1983038bb7; _samesite_flag_=true; tfstk=cIG5Bd4-ygjSr4jLG0T4U_-a7aV1azKboLZ-PxLiDL1BGGiYDsml_laVrvjcMRUf.; sgcookie=EGOT2d5b63AZhZO6EU60n; unb=2490648739; uc3=vt3=F8dBxdGI5cNvSkTHUZg%3D&id2=UUwZ%2FXabOawSlw%3D%3D&nk2=o9ivn7VCfyA%3D&lg2=VFC%2FuZ9ayeYq2g%3D%3D; csg=a360b18b; lgc=%5Cu7405%5Cu90AA3888; cookie17=UUwZ%2FXabOawSlw%3D%3D; dnk=%5Cu7405%5Cu90AA3888; skt=e41b290160522485; existShop=MTU4Njg1NDE5OA%3D%3D; uc4=nk4=0%40oY6Q2W8txaepfrDEZ4F5PNonug%3D%3D&id4=0%40U27GjBJggmFd%2BiBU04WzGYTohXiM; tracknick=%5Cu7405%5Cu90AA3888; _cc_=UIHiLt3xSw%3D%3D; _l_g_=Ug%3D%3D; sg=895; _nk_=%5Cu7405%5Cu90AA3888; cookie1=BxZqssdAqsgD7pbOZHKEweTaJeydkiAEzNVgDvHsq5E%3D; enc=iZzRgUF2cATGP7DJkpfpVqbq3YAqPNrolb3doCtBPegyYD2dM9ZFNPH8cHEJcmHJ8xpZE%2BLW7r%2Bzgt8rpMcr%2Bg%3D%3D; uc1=cookie16=UtASsssmPlP%2Ff1IHDsDaPRu%2BPw%3D%3D&cookie21=VT5L2FSpccLuJBreK%2BBd&cookie15=U%2BGCWk%2F75gdr5Q%3D%3D&existShop=false&pas=0&cookie14=UoTUPOwl1xtHLA%3D%3D; mt=ci=112_1; hng=GLOBAL%7Czh-CN%7CUSD%7C999; isg=BGNjR_TbOd9YmfXr_NMndAfh8qcNWPea2FwJrpXAhEI51IL2BSln6hFFyqRauk-S; l=eBxGazrmQpcMF5g-BOfwq2ZP1hQTvIRfkukQacxpiT5P_j6p5BIFWZXWvfK9CnGVHsG2V3W-27aDBvYFzyCSnxv9-3k_J_DmndC..',

'user-agent':'Mozilla/5.0'}

try:

r = requests.get(url, headers=kv,timeout=30)

r.raise_for_status()

r.encoding = r.apparent_encoding

return r.text

except:

return ""

#对获取的页面进行解析

#ilt结果列表类型,html相关页面信息

def parsePage(ilt, html):

try:

plt = re.findall(r'\"view_price\"\:\"[\d\.]*\"', html)

tlt = re.findall(r'\"raw_title\"\:\".*?\"', html)

for i in range(len(plt)):

price = eval(plt[i].split(':')[1])

title = eval(tlt[i].split(':')[1])

ilt.append([price, title])

except:

print("")

#输出淘宝的商品信息

def printGoodsList(ilt):

tplt = "{:4}\t{:8}\t{:16}"

print(tplt.format("序号", "价格", "商品名称"))

count = 0

for g in ilt:

count = count + 1

print(tplt.format(count, g[0], g[1]))

#定义主函数,记录程序运行的相关进程

def main():

goods = '笔记本电脑'

depth = 4 #设置爬取的深度,即爬取几个页面

start_url = 'https://s.taobao.com/search?q=' + goods

infoList = []

for i in range(depth): #遍历页面

try:

url = start_url

html = getHTMLText(url)

parsePage(infoList, html)

except:

continue

printGoodsList(infoList)

main()

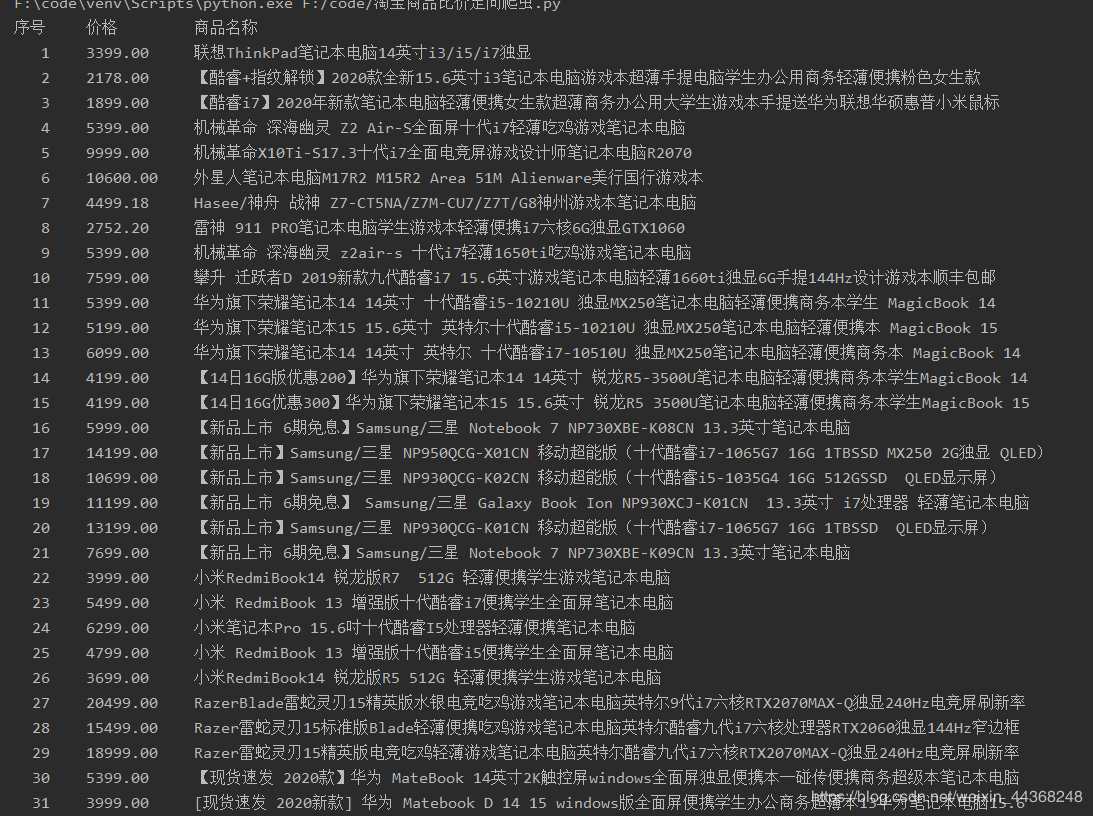

爬取到的数据如上图

爬取到的数据如上图

作者:大白杀仙