Gesture Recognition with a Deep Neural Network (DNN): Network Parameter Configuration Explained (Python + PaddlePaddle)

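I recently joined the fifth session of the Deep Learning 7-Day Check-in Camp, the CV special (it felt like the whole country had signed up; the platform was packed...). This post covers the homework for Lesson 2. For details, see: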

https://aistudio.baidu.com/aistudio/course/introduce/1149

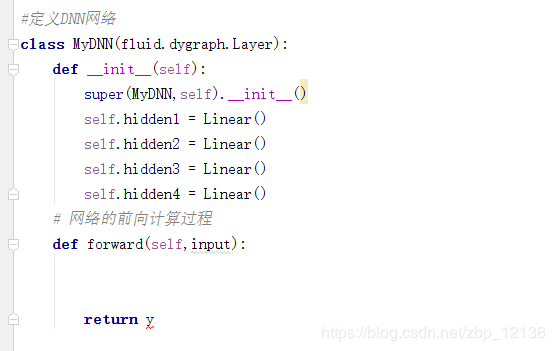

The part of the code that has to be filled in is the DNN (deep neural network):

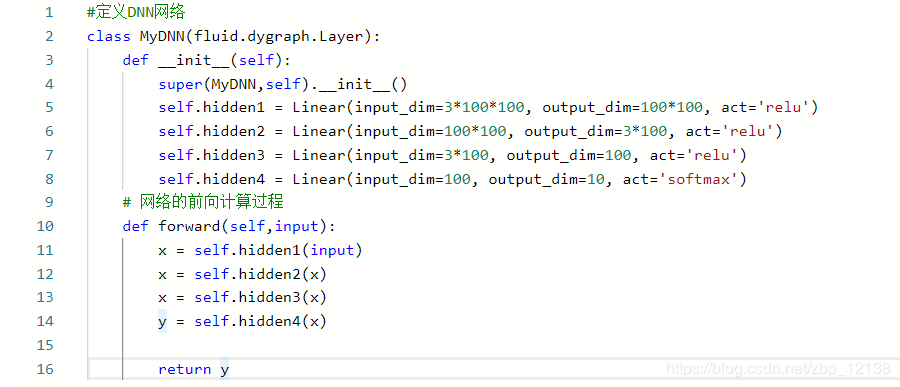

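This is quick to write: fill in the two required parameters of Linear() (input_dim and output_dim), pick an activation function (the code later in this post uses relu for the hidden layers and softmax for the output layer), and call the layers in forward, for example like this: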

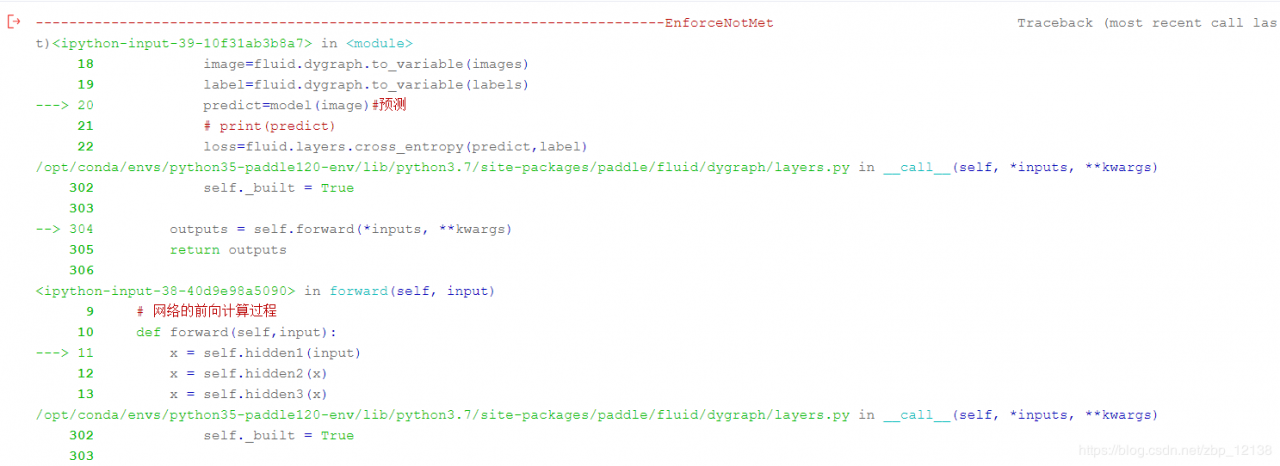

However, it raised an error:

And that was not all; there was more below:

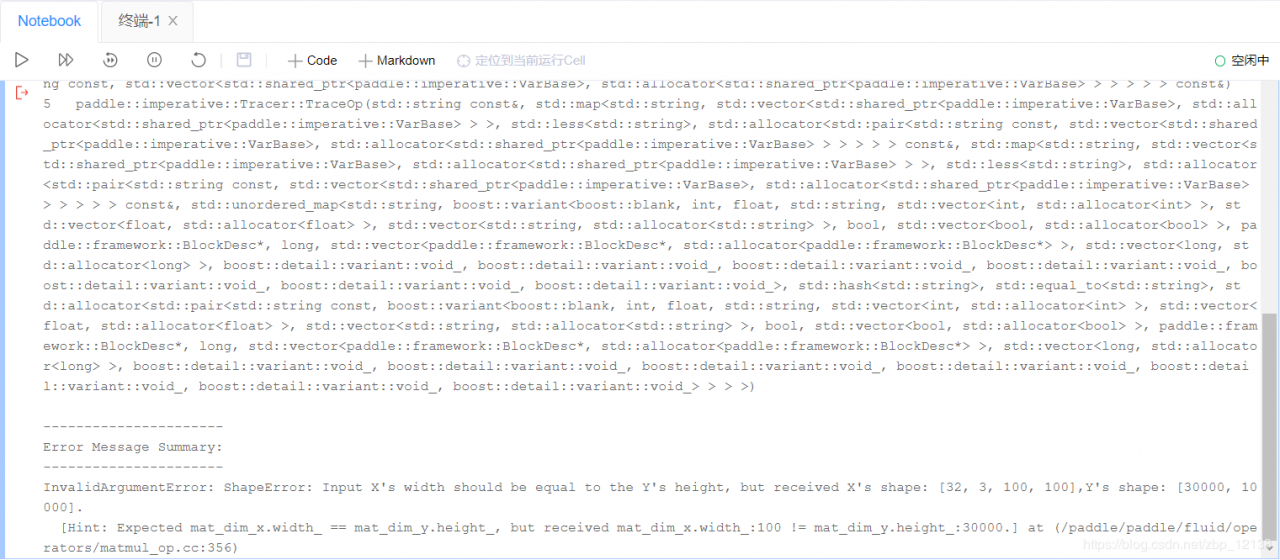

I don't know whether you have run into this pile of errors, but I certainly did. Look at the Error Message Summary at the end:

    InvalidArgumentError: ShapeError: Input X's width should be equal to the Y's height, but received X's shape: [32, 3, 100, 100], Y's shape: [30000, 10000].

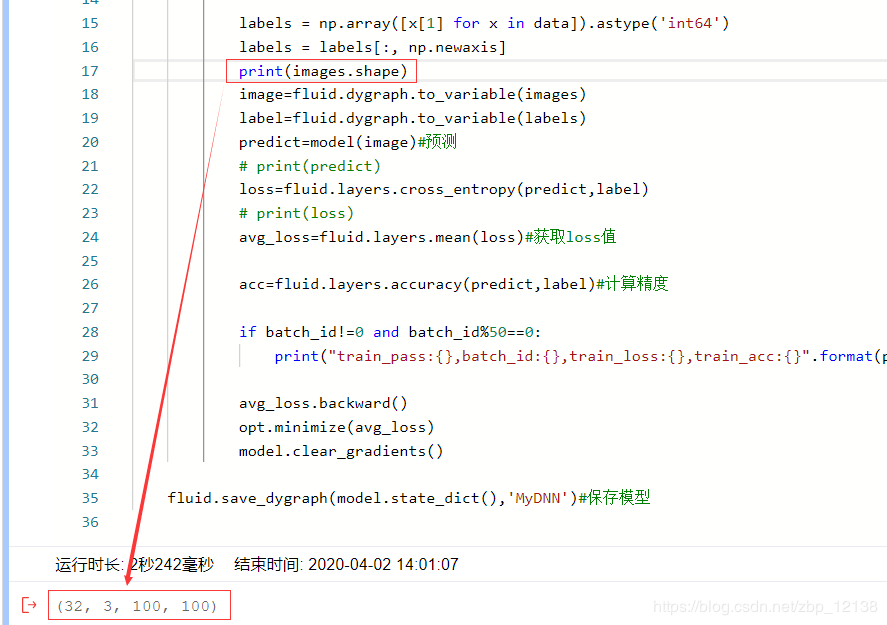

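So the error comes from a mismatch between the input data and the network. Let's look at the shape of the images:

The input batch is four-dimensional, [32, 3, 100, 100] (batch size, channels, height, width), while the weight of a Linear layer is a two-dimensional matrix. The input therefore has to be reshaped so that each image becomes a single row of 3*100*100 values:

    x = fluid.layers.reshape(input, shape=[-1,3*100*100])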
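The same flattening can be checked offline with NumPy before touching the network. This is only a sketch: it uses np.zeros as stand-in data and a hypothetical weight matrix with 10 output columns (not the [30000, 10000] weight from the error message) to keep memory use small.

```python
import numpy as np

# Stand-in batch with the shape from the error message:
# 32 images, 3 channels, 100 x 100 pixels.
batch = np.zeros((32, 3, 100, 100), dtype=np.float32)

# Hypothetical weight matrix: 3*100*100 input features, 10 outputs
# (the output size is an assumption made for this illustration).
weight = np.zeros((3 * 100 * 100, 10), dtype=np.float32)

# Without flattening, the last axis of the batch (100) does not match
# the number of rows of the weight (30000), so the product is undefined.
try:
    np.matmul(batch, weight)
    unflattened_ok = True
except ValueError:
    unflattened_ok = False

# Flatten every image into one row; -1 lets NumPy infer the batch size.
flat = batch.reshape(-1, 3 * 100 * 100)
out = np.matmul(flat, weight)

print(unflattened_ok)  # False
print(flat.shape)      # (32, 30000)
print(out.shape)       # (32, 10)
```

The batch size of 32 survives the reshape because each row holds exactly one image's worth of values.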

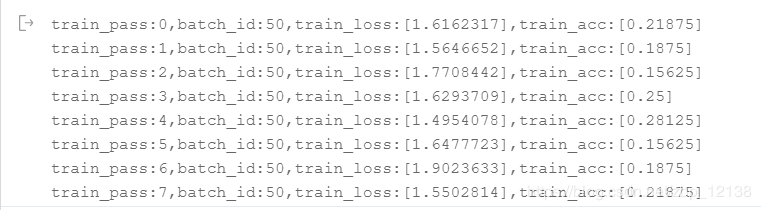

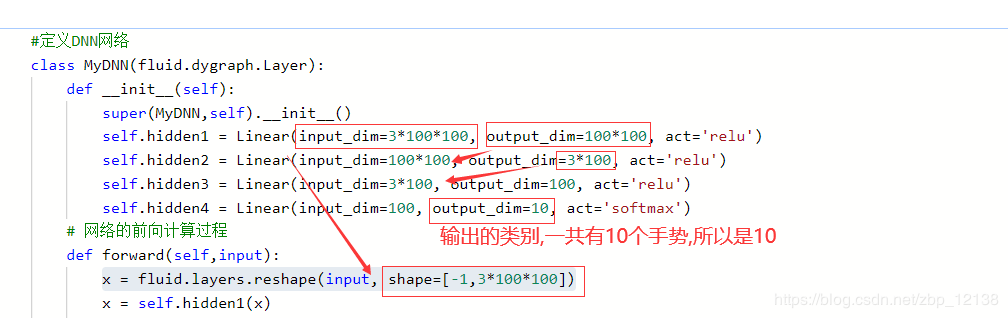

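With that the dimensions line up and training can start, but the accuracy (acc) is not very high and the loss will not go down:

At this point the structure of the DNN needs to change. Many classmates switched to other networks to improve training accuracy, but I wanted to see how far a plain DNN could go, so I kept adjusting its parameters. Before changing anything, one rule has to be clear:

The output size of each layer must match the input size of the next layer.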
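This rule is easy to check mechanically before building the model. The helper below is a hypothetical sketch (check_layer_chain is not part of PaddlePaddle, and the layer sizes are made up for illustration); it takes a list of (input_dim, output_dim) pairs and reports every place where the chain breaks.

```python
def check_layer_chain(layers):
    """Return (index, output_dim, next_input_dim) for every mismatch
    in a list of (input_dim, output_dim) pairs."""
    mismatches = []
    for i in range(len(layers) - 1):
        out_dim = layers[i][1]
        next_in = layers[i + 1][0]
        if out_dim != next_in:
            mismatches.append((i, out_dim, next_in))
    return mismatches


# Hypothetical layer sizes, chosen only for illustration.
good = [(30000, 100), (100, 100), (100, 10)]
bad = [(30000, 100), (200, 100), (100, 10)]

print(check_layer_chain(good))  # []
print(check_layer_chain(bad))   # [(0, 100, 200)]
```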

Like this:

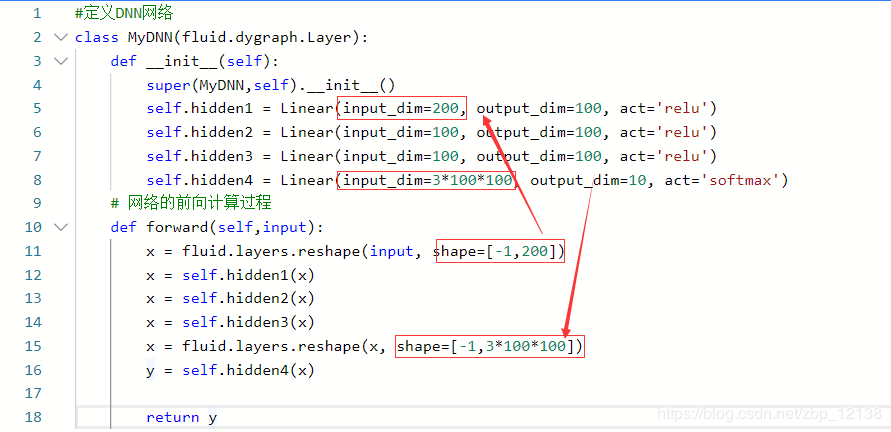

So I configured the network shown below:

It looked fine, and it fully obeys the rule just stated: the output of one layer equals the input of the next.

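After an expert in the group chat explained it, I realized my linear algebra was rusty. From matrix multiplication we know:

Matrix multiplication is only defined when the number of columns of the first matrix equals the number of rows of the second.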
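For two-dimensional matrices, that rule can be written down in a few lines. The function below is a hypothetical helper for illustration only; it works on shapes, not on real data.

```python
def matmul_shape(a_shape, b_shape):
    """Shape of A @ B for 2-D matrices; raise ValueError if undefined."""
    a_rows, a_cols = a_shape
    b_rows, b_cols = b_shape
    if a_cols != b_rows:
        raise ValueError(
            "columns of A ({}) != rows of B ({})".format(a_cols, b_rows))
    return (a_rows, b_cols)


# A flattened batch times the weight from the error message is fine.
print(matmul_shape((32, 30000), (30000, 10000)))  # (32, 10000)

# A last axis of 100 against 30000 rows is not.
try:
    matmul_shape((32, 100), (30000, 10000))
except ValueError as err:
    print(err)  # columns of A (100) != rows of B (30000)
```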

That is why reshape() is needed here. First, let's look at how this function works:

    import numpy as np
    arr = np.arange(0,10)
    print(arr)
    print('dtype: {}, shape: {}, size: {}, ndim: {}'.format(arr.dtype, arr.shape, arr.size, arr.ndim))

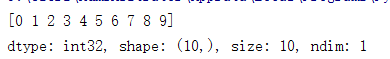

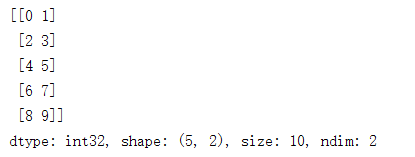

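This creates a one-dimensional array arr holding 0 through 9. Its shape is (10,): a single axis with 10 elements, not a 1x10 matrix with one row and 10 columns:

Now let's change its shape, continuing from the code above:

    arr = arr.reshape([2,5])
    print(arr)
    print('dtype: {}, shape: {}, size: {}, ndim: {}'.format(arr.dtype, arr.shape, arr.size, arr.ndim))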

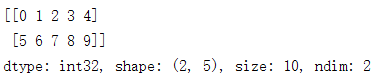

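I passed [2,5], which turns arr into an array with 2 rows and 5 columns:

Because the total number of elements is fixed, giving the number of columns is enough to determine the number of rows, so we can also write:

    arr = arr.reshape([-1,5])
    print(arr)
    print('dtype: {}, shape: {}, size: {}, ndim: {}'.format(arr.dtype, arr.shape, arr.size, arr.ndim))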

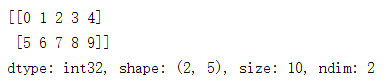

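As long as arr still has 10 elements, this has the same effect as reshape([2,5]):

One more example to reinforce the idea:

    arr = arr.reshape([-1,2])
    print(arr)
    print('dtype: {}, shape: {}, size: {}, ndim: {}'.format(arr.dtype, arr.shape, arr.size, arr.ndim))

With -1 in the row position, reshape works out the number of rows from the number of columns; conversely, if the number of rows is given, -1 can stand in for the columns.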

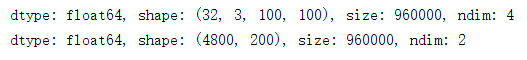
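The arithmetic behind -1 is just a division of the total element count by the product of the known dimensions. The function below is a plain-Python sketch of that rule (resolve_shape is a hypothetical name, not a NumPy or PaddlePaddle function).

```python
def resolve_shape(total, shape):
    """Replace a single -1 in shape so that the product equals total."""
    shape = list(shape)
    if shape.count(-1) > 1:
        raise ValueError("only one dimension can be -1")
    known = 1
    for dim in shape:
        if dim != -1:
            known *= dim
    if -1 not in shape:
        if known != total:
            raise ValueError("shape does not hold {} elements".format(total))
        return shape
    if known == 0 or total % known != 0:
        raise ValueError("{} elements cannot fill shape {}".format(total, shape))
    return [total // known if dim == -1 else dim for dim in shape]


print(resolve_shape(10, [-1, 5]))  # [2, 5]
print(resolve_shape(10, [-1, 2]))  # [5, 2]
print(resolve_shape(10, [2, -1]))  # [2, 5]
```

A request such as resolve_shape(10, [-1, 3]) raises ValueError, because 10 elements cannot be split into rows of 3.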

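Now for the example from the assignment, (32, 3, 100, 100). First create a four-dimensional array:

    # Note: despite the name arr_zero, this array is filled with ones
    arr_zero = np.ones([32, 3, 100, 100])
    # print(arr_zero)
    print('dtype: {}, shape: {}, size: {}, ndim: {}'.format(arr_zero.dtype, arr_zero.shape, arr_zero.size, arr_zero.ndim))

Let's see whether it can be converted to a two-dimensional array:

    arr_zero = arr_zero.reshape([-1,200])
    # print(arr_zero)
    print('dtype: {}, shape: {}, size: {}, ndim: {}'.format(arr_zero.dtype, arr_zero.shape, arr_zero.size, arr_zero.ndim))

Clearly it can: the array has 32*3*100*100 = 960000 elements, and 960000 / 200 = 4800, so the result has shape (4800, 200):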

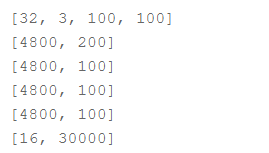

To make this more concrete, let's go back to the AI Studio platform and print the input and output shapes of each layer:

    # Forward computation of the network
    def forward(self,input):
        print(input.shape)
        x = fluid.layers.reshape(input, shape=[-1,200])
        print(x.shape)
        x = self.hidden1(x)
        print(x.shape)
        x = self.hidden2(x)
        print(x.shape)
        x = self.hidden3(x)
        print(x.shape)
        x = fluid.layers.reshape(x, shape=[-1,3*100*100])
        print(x.shape)
        y = self.hidden4(x)
        return y

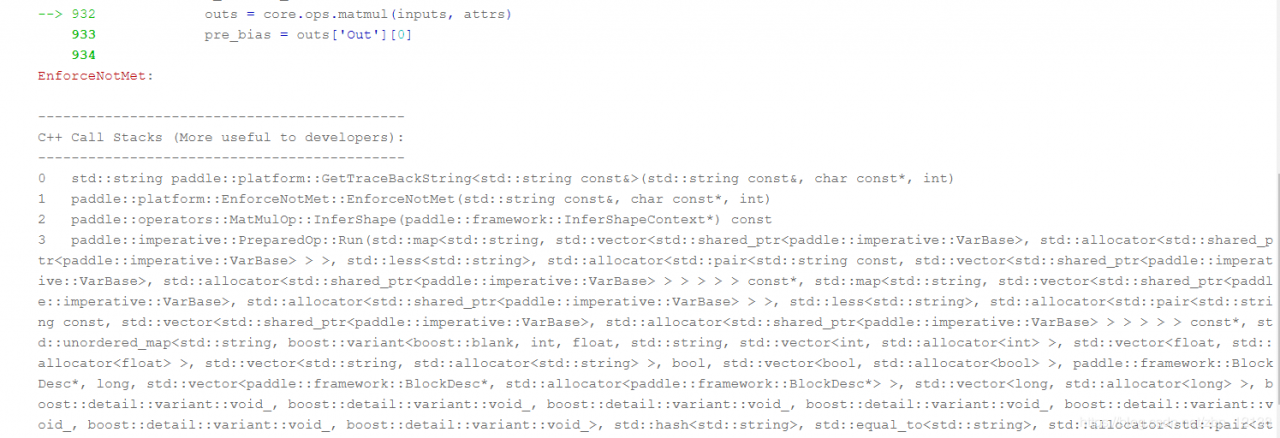

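As you can see, the failure shows up right after the last layer. Here is the error message:

    Error: ShapeError: Input(X) and Input(Label) shall have the same shape except the last dimension. But received: the shape of Input(X) is [16, 10], the shape of Input(Label) is [32, 1].

The network outputs predictions of shape [16, 10], but the labels have shape [32, 1]. Every label needs exactly one row of predictions, and 16 is not equal to 32, so the predictions and labels cannot be paired up. Note that this check is about the batch dimension of the two inputs, not about matrix multiplication.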
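The condition quoted in the error message can be mirrored in a couple of lines. This is only a sketch that follows the wording of the message; it is not PaddlePaddle's actual implementation, and same_except_last is a hypothetical name.

```python
def same_except_last(x_shape, label_shape):
    """True if both shapes agree on every dimension except the last one."""
    return (len(x_shape) == len(label_shape)
            and list(x_shape[:-1]) == list(label_shape[:-1]))


print(same_except_last([16, 10], [32, 1]))  # False: 16 predictions, 32 labels
print(same_except_last([32, 10], [32, 1]))  # True: one prediction row per label
```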

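The problem actually starts at the first fully connected layer:

    def __init__(self):
        super(MyDNN,self).__init__()
        self.hidden1 = Linear(input_dim=200, output_dim=100, act='relu')
        self.hidden2 = Linear(input_dim=100, output_dim=100, act='relu')
        self.hidden3 = Linear(input_dim=100, output_dim=100, act='relu')
        self.hidden4 = Linear(input_dim=3*100*100, output_dim=10, act='softmax')

The first layer takes 200 inputs and produces 100 outputs, so the number of columns is halved to 100. In other words, the batch starts with 32*3*100*100 = 960000 elements and is reshaped to [4800, 200]; after the first layer it is [4800, 100], which is only 480000 elements. Dividing 480000 by the target column count of 30000 gives 16 rows, half of the batch size of 32.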
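To follow the shapes without running PaddlePaddle, the whole forward pass can be traced on shapes alone. The sketch below assumes, as the printed shapes in this post suggest, that a Linear layer only replaces the last axis; reshape, linear, and trace are hypothetical helpers written for this illustration.

```python
def reshape(shape, target):
    """Resolve a single -1 in target so the element count matches shape."""
    total = 1
    for dim in shape:
        total *= dim
    known = 1
    for dim in target:
        if dim != -1:
            known *= dim
    if total % known != 0:
        raise ValueError("cannot reshape {} into {}".format(shape, target))
    return [total // known if dim == -1 else dim for dim in target]


def linear(shape, input_dim, output_dim):
    """A linear layer replaces the last axis (input_dim) with output_dim."""
    if shape[-1] != input_dim:
        raise ValueError(
            "last axis {} != input_dim {}".format(shape[-1], input_dim))
    return shape[:-1] + [output_dim]


def trace(last_width):
    """Shapes after every step of the forward pass shown above."""
    shapes = [[32, 3, 100, 100]]
    shapes.append(reshape(shapes[-1], [-1, 200]))
    shapes.append(linear(shapes[-1], 200, 100))          # hidden1
    shapes.append(linear(shapes[-1], 100, 100))          # hidden2
    shapes.append(linear(shapes[-1], 100, 100))          # hidden3
    shapes.append(reshape(shapes[-1], [-1, last_width]))
    shapes.append(linear(shapes[-1], last_width, 10))    # hidden4
    return shapes


for shape in trace(3 * 100 * 100):
    print(shape)
# [32, 3, 100, 100]
# [4800, 200]
# [4800, 100]
# [4800, 100]
# [4800, 100]
# [16, 30000]
# [16, 10]
```

The batch size of 32 is lost at the second reshape, which is exactly where the 16 in the error message comes from.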

The fix is to halve the flattened width as well, from 3*100*100 to 3*100*50, so that 480000 / 15000 gives back 32 rows:

    # Define the DNN
    class MyDNN(fluid.dygraph.Layer):
        def __init__(self):
            super(MyDNN,self).__init__()
            self.hidden1 = Linear(input_dim=200, output_dim=100, act='relu')
            self.hidden2 = Linear(input_dim=100, output_dim=100, act='relu')
            self.hidden3 = Linear(input_dim=100, output_dim=100, act='relu')
            self.hidden4 = Linear(input_dim=3*100*50, output_dim=10, act='softmax')

        # Forward computation of the network
        def forward(self,input):
            x = fluid.layers.reshape(input, shape=[-1,200])
            x = self.hidden1(x)
            x = self.hidden2(x)
            x = self.hidden3(x)
            x = fluid.layers.reshape(x, shape=[-1,3*100*50])
            y = self.hidden4(x)
            return y
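The value 3*100*50 does not have to be found by trial and error. Under the assumption that each image is cut into rows of a fixed width and every row is mapped to the hidden layer's output width, the number of values left per image follows directly. The function below is a hypothetical helper for that calculation.

```python
def features_per_sample(sample_size, chunk, hidden_out):
    """Values left per sample after it is split into rows of `chunk`
    values and every row is mapped to `hidden_out` values."""
    if sample_size % chunk != 0:
        raise ValueError("chunk must divide the sample size evenly")
    rows_per_sample = sample_size // chunk
    return rows_per_sample * hidden_out


# One 3 x 100 x 100 image, rows of 200 values, hidden layers with 100 outputs.
print(features_per_sample(3 * 100 * 100, 200, 100))  # 15000
```

The result, 15000, equals 3*100*50. It is both the width to use in the second reshape and the input_dim of hidden4; if the chunk size or the hidden width changes, this value has to change with it.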

As for exactly which parameter values give the best model, that still takes patient trial and error.

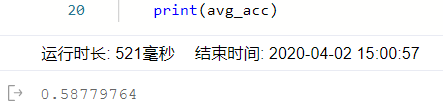

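So far, the model I trained using only a DNN reaches an accuracy of 0.58:

Let's test it on a real image:

Not bad, haha. The source code is below; feel free to run it locally. Note that this listing uses a different configuration from the one above: the hidden layers act directly on the last axis (100) of the unreshaped input, and the result is flattened to 3*100*100 before hidden4.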

    import os
    import time
    import random
    import numpy as np
    from PIL import Image
    import matplotlib.pyplot as plt
    import paddle
    import paddle.fluid as fluid
    import paddle.fluid.layers as layers
    from multiprocessing import cpu_count
    from paddle.fluid.dygraph import Pool2D,Conv2D
    from paddle.fluid.dygraph import Linear

    # Generate the image lists
    data_path = '/home/aistudio/data/data23668/Dataset'
    character_folders = os.listdir(data_path)
    # print(character_folders)

    # Remove list files left over from a previous run
    if(os.path.exists('./train_data.list')):
        os.remove('./train_data.list')
    if(os.path.exists('./test_data.list')):
        os.remove('./test_data.list')

    for character_folder in character_folders:
        with open('./train_data.list', 'a') as f_train:
            with open('./test_data.list', 'a') as f_test:
                if character_folder == '.DS_Store':
                    continue
                character_imgs = os.listdir(os.path.join(data_path,character_folder))
                count = 0
                for img in character_imgs:
                    if img =='.DS_Store':
                        continue
                    # Every 10th image goes to the test list, the rest to the training list
                    if count%10 == 0:
                        f_test.write(os.path.join(data_path,character_folder,img) + '\t' + character_folder + '\n')
                    else:
                        f_train.write(os.path.join(data_path,character_folder,img) + '\t' + character_folder + '\n')
                    count +=1
    print('Lists generated')

    # Define the readers for the training and test sets
    def data_mapper(sample):
        img, label = sample
        img = Image.open(img)
        img = img.resize((100, 100), Image.ANTIALIAS)
        img = np.array(img).astype('float32')
        img = img.transpose((2, 0, 1))  # HWC -> CHW
        img = img/255.0  # scale pixel values to [0, 1]
        return img, label

    def data_reader(data_list_path):
        def reader():
            with open(data_list_path, 'r') as f:
                lines = f.readlines()
                for line in lines:
                    img, label = line.split('\t')
                    yield img, int(label)
        return paddle.reader.xmap_readers(data_mapper, reader, cpu_count(), 512)

    # Data provider for training
    train_reader = paddle.batch(reader=paddle.reader.shuffle(reader=data_reader('./train_data.list'), buf_size=1024), batch_size=32)

    # Data provider for testing
    test_reader = paddle.batch(reader=data_reader('./test_data.list'), batch_size=32)

    # Define the DNN
    class MyDNN(fluid.dygraph.Layer):
        def __init__(self):
            super(MyDNN,self).__init__()
            self.hidden1 = Linear(input_dim=100, output_dim=100, act='relu')
            self.hidden2 = Linear(input_dim=100, output_dim=100, act='relu')
            self.hidden3 = Linear(input_dim=100, output_dim=100, act='relu')
            self.hidden4 = Linear(input_dim=3*100*100, output_dim=10, act='softmax')

        # Forward computation of the network
        def forward(self,input):
            x = self.hidden1(input)
            x = self.hidden2(x)
            x = self.hidden3(x)
            x = fluid.layers.reshape(x, shape=[-1,3*100*100])
            y = self.hidden4(x)
            return y

    # Train in dynamic graph mode
    with fluid.dygraph.guard():
        model=MyDNN()  # instantiate the model
        model.train()  # training mode
        # Optimizer: SGD (stochastic gradient descent) with a learning rate of 0.01
        opt=fluid.optimizer.SGDOptimizer(learning_rate=0.01, parameter_list=model.parameters())
        epochs_num=20  # number of epochs

        for pass_num in range(epochs_num):
            for batch_id,data in enumerate(train_reader()):
                images=np.array([x[0].reshape(3,100,100) for x in data],np.float32)
                labels = np.array([x[1] for x in data]).astype('int64')
                labels = labels[:, np.newaxis]
                # print(images.shape)
                image=fluid.dygraph.to_variable(images)
                label=fluid.dygraph.to_variable(labels)
                predict=model(image)  # forward pass
                # print(predict)
                loss=fluid.layers.cross_entropy(predict,label)
                # print(loss)
                avg_loss=fluid.layers.mean(loss)  # mean loss over the batch
                acc=fluid.layers.accuracy(predict,label)  # batch accuracy
                if batch_id!=0 and batch_id%50==0:
                    print("train_pass:{},batch_id:{},train_loss:{},train_acc:{}".format(pass_num,batch_id,avg_loss.numpy(),acc.numpy()))
                avg_loss.backward()
                opt.minimize(avg_loss)
                model.clear_gradients()

        fluid.save_dygraph(model.state_dict(),'MyDNN')  # save the model

    # Evaluate the model
    with fluid.dygraph.guard():
        accs = []
        model_dict, _ = fluid.load_dygraph('MyDNN')
        model = MyDNN()
        model.load_dict(model_dict)  # load the model parameters
        model.eval()  # evaluation mode
        for batch_id,data in enumerate(test_reader()):  # test set
            images=np.array([x[0].reshape(3,100,100) for x in data],np.float32)
            labels = np.array([x[1] for x in data]).astype('int64')
            labels = labels[:, np.newaxis]
            image=fluid.dygraph.to_variable(images)
            label=fluid.dygraph.to_variable(labels)
            predict=model(image)
            acc=fluid.layers.accuracy(predict,label)
            accs.append(acc.numpy()[0])
        avg_acc = np.mean(accs)
        print(avg_acc)

    # Load an image for prediction
    def load_image(path):
        img = Image.open(path)
        img = img.resize((100, 100), Image.ANTIALIAS)
        img = np.array(img).astype('float32')
        img = img.transpose((2, 0, 1))  # HWC -> CHW
        img = img/255.0
        print(img.shape)
        return img

    # Run prediction in dynamic graph mode
    with fluid.dygraph.guard():
        infer_path = '手势.JPG'
        model=MyDNN()  # instantiate the model
        model_dict,_=fluid.load_dygraph('MyDNN')
        model.load_dict(model_dict)  # load the model parameters
        model.eval()  # evaluation mode
        infer_img = load_image(infer_path)
        infer_img=np.array(infer_img).astype('float32')
        infer_img=infer_img[np.newaxis,:, : ,:]  # add a batch axis: (1, 3, 100, 100)
        infer_img = fluid.dygraph.to_variable(infer_img)
        result=model(infer_img)
        display(Image.open('手势.JPG'))  # display() is available in notebook environments
        print(np.argmax(result.numpy()))  # index of the most probable class
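The list-generation step in the listing above sends every tenth image of each folder to the test list and the rest to the training list. The sketch below isolates that rule on a plain Python list; split_every_nth and the file names are hypothetical and only serve the illustration.

```python
def split_every_nth(items, n=10):
    """Send items at positions 0, n, 2n, ... to the test set, the rest to train."""
    train, test = [], []
    for count, item in enumerate(items):
        if count % n == 0:
            test.append(item)
        else:
            train.append(item)
    return train, test


# 25 made-up file names standing in for the images of one folder.
names = ['img_{}.jpg'.format(i) for i in range(25)]
train, test = split_every_nth(names)

print(len(train), len(test))  # 22 3
print(test)                   # ['img_0.jpg', 'img_10.jpg', 'img_20.jpg']
```

This gives roughly a 9:1 split per class. Because the order comes from os.listdir rather than a shuffle, which images end up in the test list depends on the directory listing.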

Author: Mr.郑先生_