Machine Learning in Action with Leo: K-means Clustering


K-means Clustering

Author: LuckyLeo26

Pros: easy to implement.

Cons: can converge to a local minimum, and converges slowly on large datasets.

Applicable data type: numeric data.
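For reference, the quantity k-means minimizes is the sum of squared errors (SSE). In kMeans it is the sum of the second column of clusterAssment, which holds each sample's squared distance to its assigned centroid. A sketch of the objective and the two alternating steps, with m samples, k centroids $\mu_j$, cluster index $c_i$ of sample $x_i$, and $C_j$ the set of samples assigned to cluster $j$:

$$
J = \sum_{i=1}^{m} \lVert x_i - \mu_{c_i} \rVert^2
$$

$$
c_i \leftarrow \arg\min_{1 \le j \le k} \lVert x_i - \mu_j \rVert^2,
\qquad
\mu_j \leftarrow \frac{1}{\lvert C_j \rvert} \sum_{i \in C_j} x_i
$$

Neither step can increase $J$, so the loop settles, but the point where it settles depends on the starting centroids and need not be the global minimum. That is the local-minimum drawback listed above.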

```python
import numpy as np


def loadDataSet(fileName):
    """Load a tab-separated file of numbers into a list of rows (lists of floats)."""
    dataMat = []
    with open(fileName) as fr:
        for line in fr:
            if not line.strip():  # skip blank lines
                continue
            curLine = line.strip().split('\t')
            # In Python 3, map() returns an iterator, so wrap it in list()
            fltLine = list(map(float, curLine))
            dataMat.append(fltLine)
    return dataMat


def distEclud(vecA, vecB):
    # Euclidean distance between two vectors
    return np.sqrt(np.sum(np.power(vecA - vecB, 2)))


def randCent(dataSet, k):
    """Generate k random centroids inside the range of the data and return them."""
    dataSet = np.asarray(dataSet, dtype=float)
    n = dataSet.shape[1]
    centroids = np.zeros((k, n))
    for j in range(n):  # random centers within the bounds of each dimension
        minJ = dataSet[:, j].min()
        rangeJ = float(dataSet[:, j].max() - minJ)
        centroids[:, j] = minJ + rangeJ * np.random.rand(k)
    return centroids
```
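The two helpers can also be written without explicit loops. The block below is a minimal sketch, assuming the data is a 2-D NumPy array; the names `dist_eclud` and `rand_cent` and the `rng` parameter are hypothetical, not part of the original code. Passing a seeded `numpy.random.Generator` makes the random initialization reproducible.

```python
import numpy as np


def dist_eclud(vec_a, vec_b):
    """Euclidean distance, same result as distEclud (hypothetical name)."""
    return float(np.linalg.norm(np.asarray(vec_a) - np.asarray(vec_b)))


def rand_cent(data, k, rng):
    """Vectorized randCent (hypothetical name): draw k points uniformly
    inside the per-column [min, max] bounding box of data."""
    lo = data.min(axis=0)           # per-column minimum
    span = data.max(axis=0) - lo    # per-column range
    return lo + span * rng.random((k, data.shape[1]))


data = np.array([[1.0, 5.0], [3.0, -2.0], [2.0, 0.0]])
cents = rand_cent(data, 4, np.random.default_rng(0))
print(cents.shape)                   # (4, 2)
print(dist_eclud([0, 0], [3, 4]))    # 5.0
```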

Training function

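```python
def kMeans(dataSet, k, distMeas=distEclud, createCent=randCent):
    dataSet = np.asarray(dataSet, dtype=float)
    m = dataSet.shape[0]  # number of samples
    # Column 0: index of the assigned centroid; column 1: squared distance to it
    clusterAssment = np.zeros((m, 2))
    clusterAssment[:, 0] = -1  # "unassigned", so the first pass always counts as a change
    centroids = createCent(dataSet, k)  # k random initial centroids
    clusterChanged = True
    while clusterChanged:
        clusterChanged = False
        for i in range(m):  # assign each sample to its nearest centroid
            minDist = np.inf
            minIndex = -1
            for j in range(k):  # distance from sample i to centroid j
                distJI = distMeas(centroids[j, :], dataSet[i, :])
                if distJI < minDist:
                    minDist = distJI
                    minIndex = j
            if clusterAssment[i, 0] != minIndex:  # did the assignment change?
                clusterChanged = True
            clusterAssment[i, :] = minIndex, minDist ** 2
        print(centroids)  # show the centroids on every pass
        for cent in range(k):  # recompute the centroids
            ptsInClust = dataSet[clusterAssment[:, 0] == cent]  # samples assigned to cluster cent
            if len(ptsInClust) > 0:  # an empty cluster keeps its old centroid
                centroids[cent, :] = np.mean(ptsInClust, axis=0)  # mean of the cluster's samples
    return centroids, clusterAssment
```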

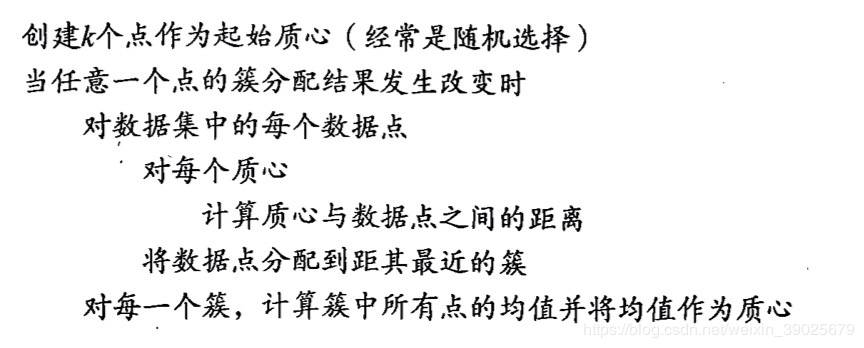

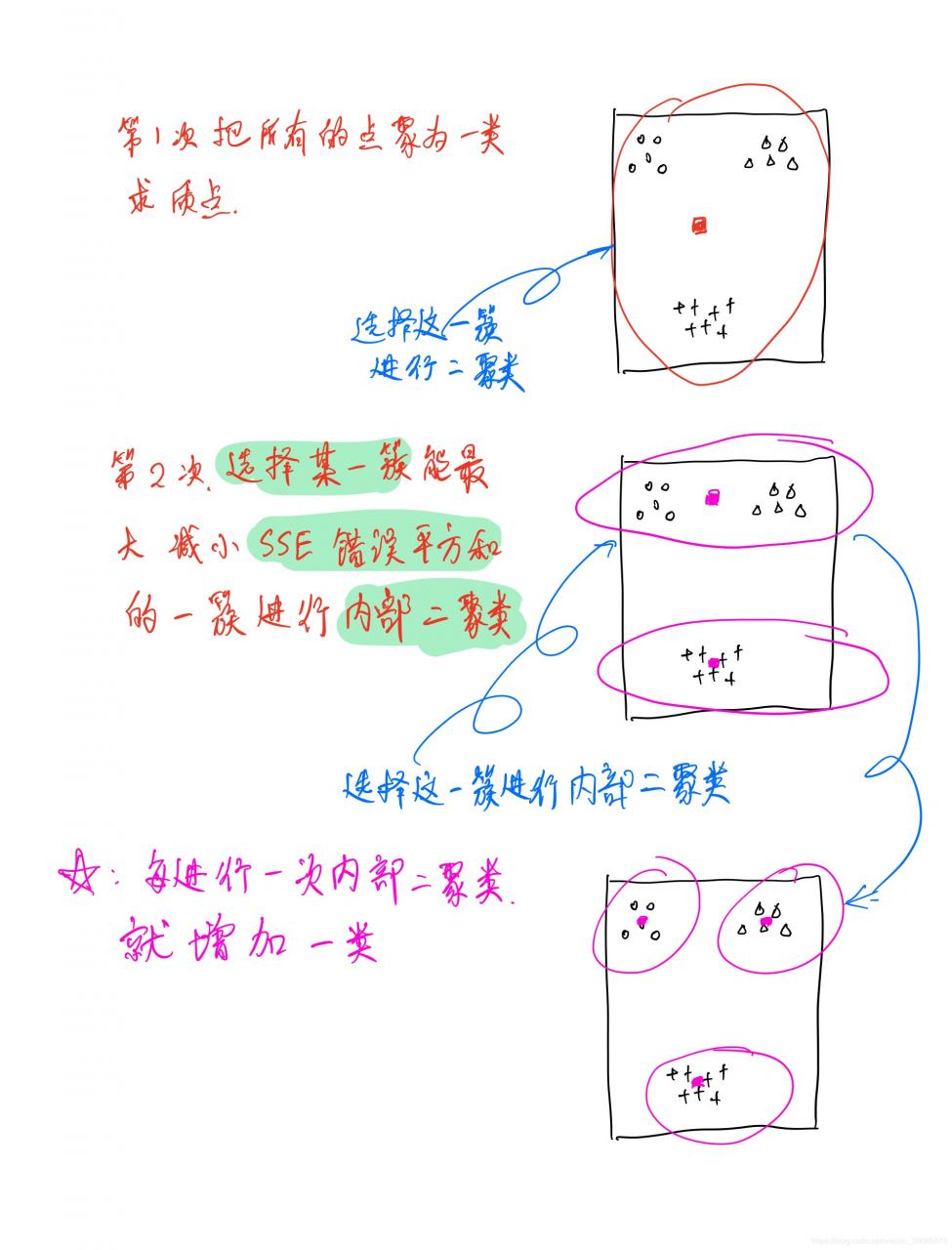
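To make the local-minimum drawback concrete, here is a minimal vectorized sketch of the same assign/update loop. It is an illustration, not the author's code: the names `assign` and `kmeans_from` are hypothetical, and the initial centroids are passed in explicitly instead of coming from randCent, so the runs are reproducible. On four points forming a 4 x 1 rectangle, one start converges to the left/right split (SSE 1.0), while a start between the two groups converges to a stable but worse top/bottom split (SSE 16.0).

```python
import numpy as np


def assign(data, centroids):
    """Vectorized assignment step: nearest-centroid index and squared
    Euclidean distance for every point (the two columns kMeans stores)."""
    # diff has shape (m, k, n): every point minus every centroid
    diff = data[:, np.newaxis, :] - centroids[np.newaxis, :, :]
    sq_dist = (diff ** 2).sum(axis=2)   # shape (m, k)
    labels = sq_dist.argmin(axis=1)     # index of the nearest centroid
    return labels, sq_dist[np.arange(len(data)), labels]


def kmeans_from(data, init_centroids, max_iter=100):
    """k-means from caller-supplied centroids; returns (centroids, labels, SSE)."""
    centroids = np.array(init_centroids, dtype=float)
    labels = np.full(len(data), -1)
    for _ in range(max_iter):
        new_labels = assign(data, centroids)[0]
        if np.array_equal(new_labels, labels):
            break  # no point changed cluster: same stopping rule as kMeans
        labels = new_labels
        for c in range(len(centroids)):
            members = data[labels == c]
            if len(members) > 0:  # an empty cluster keeps its old centroid
                centroids[c] = members.mean(axis=0)
    labels, sq = assign(data, centroids)
    return centroids, labels, float(sq.sum())


data = np.array([[0, 0], [0, 1], [4, 0], [4, 1]], dtype=float)
# Start inside the left and right groups: reaches the optimal split
_, labels_good, sse_good = kmeans_from(data, [[0, 0], [4, 0]])
# Start between the groups: gets stuck in a worse, stable split
_, labels_bad, sse_bad = kmeans_from(data, [[2, 0], [2, 1]])
print(sse_good, sse_bad)  # 1.0 16.0
```

Broadcasting replaces the two nested Python loops with one array operation, at the cost of an m x k x n temporary array, which matters only for very large inputs.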

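Bisecting K-means Clustering

Principle: start with all samples in one cluster whose centroid is the mean of the data. While there are fewer than k clusters, tentatively split each existing cluster in two with kMeans (k = 2), and keep the split that gives the lowest total SSE, i.e. the SSE of the two new sub-clusters plus the SSE of all clusters left untouched.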

```python
def biKmeans(dataSet, k, distMeas=distEclud):
    dataSet = np.asarray(dataSet, dtype=float)
    m = dataSet.shape[0]
    clusterAssment = np.zeros((m, 2))
    centroid0 = np.mean(dataSet, axis=0)
    centList = [centroid0]  # start with a single centroid: the mean of all data
    for j in range(m):  # initial error: squared distance of each point to centroid0
        clusterAssment[j, 1] = distMeas(centroid0, dataSet[j, :]) ** 2
    while len(centList) < k:
        lowestSSE = np.inf
        for i in range(len(centList)):
            ptsInCurrCluster = dataSet[clusterAssment[:, 0] == i]  # samples in cluster i
            if len(ptsInCurrCluster) < 2:  # too few samples to split
                continue
            centroidMat, splitClustAss = kMeans(ptsInCurrCluster, 2, distMeas)  # split cluster i in two
            sseSplit = np.sum(splitClustAss[:, 1])  # SSE of cluster i after the split
            sseNotSplit = np.sum(clusterAssment[clusterAssment[:, 0] != i, 1])  # SSE of all other clusters
            print("sseSplit, and notSplit: ", sseSplit, sseNotSplit)
            if sseSplit + sseNotSplit < lowestSSE:
                bestCentToSplit = i
                bestNewCents = centroidMat
                bestClustAss = splitClustAss.copy()
                lowestSSE = sseSplit + sseNotSplit
        # Relabel: sub-cluster 1 gets a new index, sub-cluster 0 keeps the split cluster's index
        bestClustAss[bestClustAss[:, 0] == 1, 0] = len(centList)
        bestClustAss[bestClustAss[:, 0] == 0, 0] = bestCentToSplit
        print('the bestCentToSplit is: ', bestCentToSplit)
        print('the len of bestClustAss is: ', len(bestClustAss))
        centList[bestCentToSplit] = bestNewCents[0, :]  # replace the split centroid...
        centList.append(bestNewCents[1, :])             # ...and add the second new one
        # Reassign the split cluster's samples: new labels and squared errors
        clusterAssment[clusterAssment[:, 0] == bestCentToSplit, :] = bestClustAss
    return np.array(centList), clusterAssment
```
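The split criterion inside biKmeans can be isolated as a small function. This is a hedged sketch, not the author's code: `choose_split` is a hypothetical name, and it assumes the per-cluster SSE before and after a trial split has already been computed. It also shows that the cluster chosen is not necessarily the one with the largest SSE: splitting it may barely reduce the error.

```python
def choose_split(sse_per_cluster, sse_if_split):
    """Return (index, total SSE) of the cluster whose two-way split gives the
    lowest total SSE, mirroring the comparison inside biKmeans.

    sse_per_cluster[i]: current SSE of cluster i
    sse_if_split[i]:    SSE of cluster i's points after splitting it with k = 2
    """
    total = sum(sse_per_cluster)
    best_i, best_total = -1, float("inf")
    for i, split_sse in enumerate(sse_if_split):
        # sseNotSplit in biKmeans: SSE of every cluster except i
        candidate = (total - sse_per_cluster[i]) + split_sse
        if candidate < best_total:
            best_i, best_total = i, candidate
    return best_i, best_total


# Cluster 1 has the largest SSE, but splitting cluster 0 helps the most
print(choose_split([10.0, 30.0, 5.0], [2.0, 28.0, 1.0]))  # (0, 37.0)
```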
