Python爬虫,猫眼网站(可进行二次请求)

猫眼网站

strTitle = strTitle.get_text().replace(’\n’, ‘’).replace(’ ', ‘’) 循环输出

for i in range(0, len(result)):

print(list(result)[i]) 正则表达式

result = re.findall(

‘<dd.?index.?">(.?)<.?title="(.?)".?data-src="(.?)".?star">(.?)<.?time">(.?)</p.?integer">(.?)<.?fraction">(.*?)<’,

strPageSource, re.S)

主要使用可以查看资源:python爬虫课程要点.doc 数据截取 [3:5] 表示第三位到第五位

_lData[len(_lData) - 1][3] = _lData[len(_lData)-1][3][3::]

作者:帅气转身而过

一、 项目要求

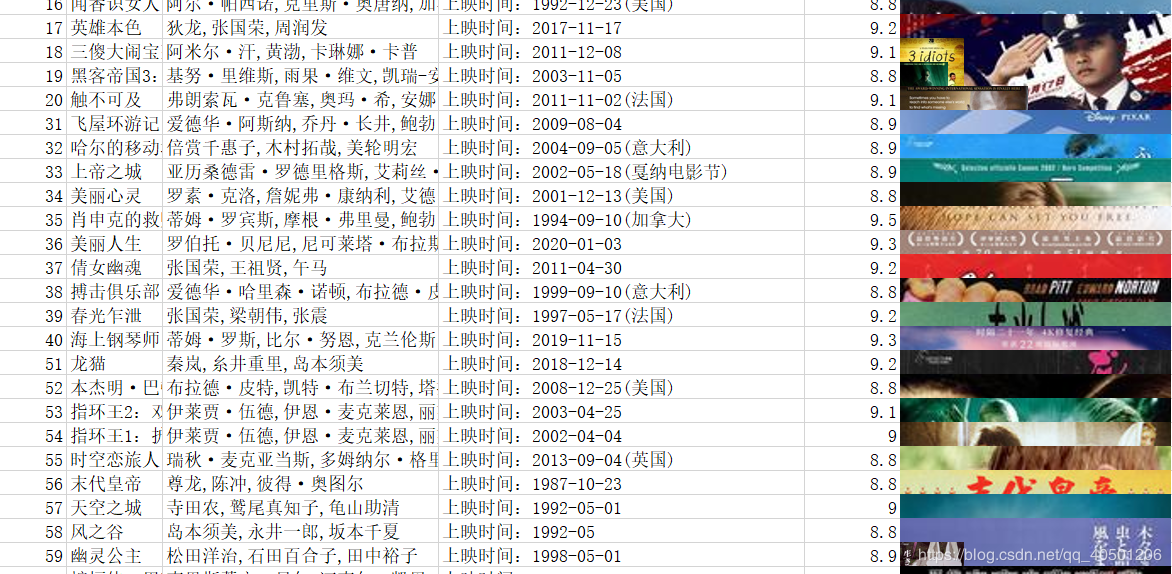

(1)保存100部电影的信息,排名,电影名称,演员,上映时间,评分

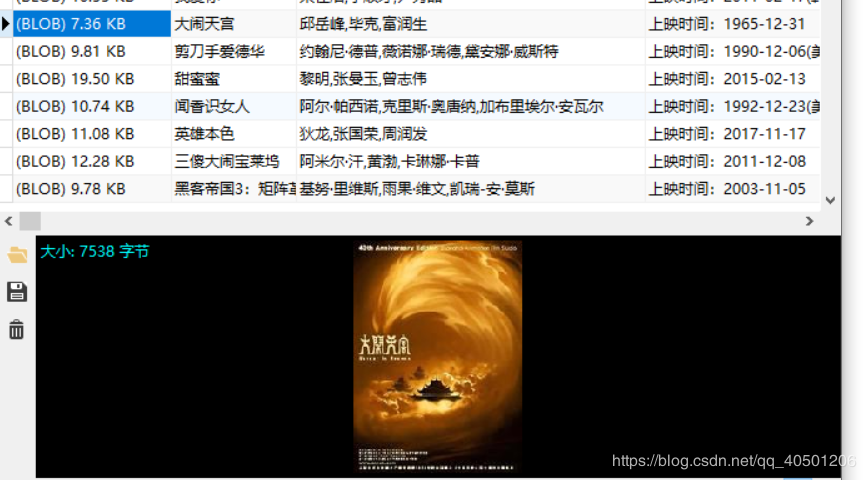

(2)抓取猫眼网站数据并保存到mysql数据库,存储xml,execl文件并统计排分

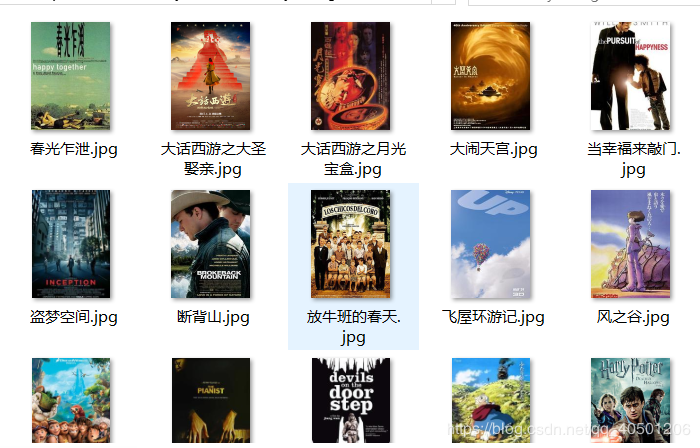

(3)点击任意一个电影,爬取跳转网页上的介绍、演职人员,奖项,图集信息,并且保存到本地

(4)统计演员演的电影数目,最后以图表形式输出

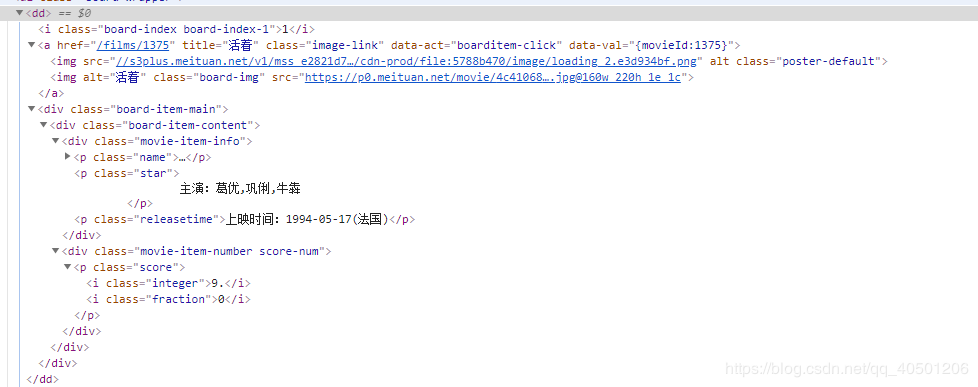

二、 分析目标网站结构

目标网址:https://maoyan.com/board/4

1)通过观察网页结构,发现一页只出现十部电影,每次翻页就会改变请求网页里面的offset值

https://maoyan.com/board/4?offset=0

https://maoyan.com/board/4?offset=10

https://maoyan.com/board/4?offset=20

2)电影数据都在dd标签里

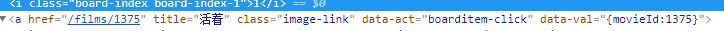

3)二次请求的url在每个电影的a标签当中的href属性里

str = 'https://maoyan.com'+i.attrs['href']

4)通过观察网页结构,发现剧情简介在span标签里面;演职 人员的照片的地址在img标签的src属性当中

三、 项目代码(部分)

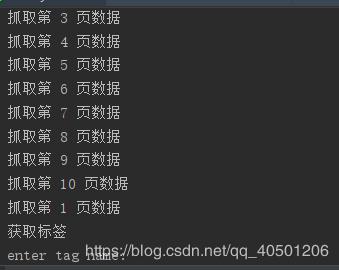

# 返回每页源码

def getPage():

for i in range(10):

strUrl = 'https://maoyan.com/board/4?offset=%s'%(i*10)

headers = {

'User-Agent': random.choice(user_agent)

}

response = requests.get(strUrl, headers=headers)

print('抓取第', str(i + 1), '页数据')

yield response.text

pass

# 提取信息

def getPData(strPageSource):

result = re.findall(

'(.*?)(.*?)(.*?)(.*?)(.*?)<',

strPageSource, re.S)

for item in result:

global _lData

_lData.append(list(item))

imgCon = getImg(item[2], item[1])

_lData[len(_lData)-1][3] = item[3].replace('\n', '').replace(' ', '')

_lData[len(_lData) - 1][3] = _lData[len(_lData)-1][3][3::]

_lData[len(_lData)-1].append(imgCon)

# 保存mysql数据

def saveMySql():

conn = pymysql.connect(host = 'localhost', user = 'root', password = '123456', db = 'maoyan')

cursor = conn.cursor()

for item in _lData:

try:

strSql = 'insert into maoyan(img, title, actor, date, score) values(%s, %s, %s, %s, %s)'

cursor.execute(strSql, (item[7], item[1], item[3], item[4], str(item[5] + item[6])))

conn.commit()

except:

conn.rollback()

print('data error!')

conn.close()

pass

# 二次请求

def second_get(strUrl):

headers={

'User-Agent': random.choice(user_agent)

}

r = requests.get(strUrl, headers=headers)

# print(r.text)

return r.text;

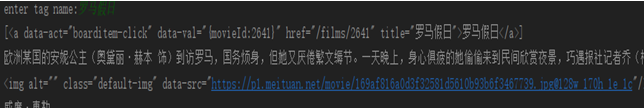

#获取href

def second(strPageSource):

print("获取标签")

strInput = input('enter tag name:')

soup = BeautifulSoup(strPageSource, "lxml")

for p in soup.find_all('p', attrs={'class':'name'}):

inputstr = p.get_text()

print(inputstr)

if strInput in inputstr:

stra = p.contents

# print(p.contents)

for i in stra:

str = 'https://maoyan.com'+i.attrs['href']

# print(str)

return str

#简介获取

def getforth(strSource):

soup = BeautifulSoup(strSource, "lxml")

for span in soup.find_all('span', attrs={'class': 'dra'}):

print(span.get_text())

# 提取二次信息

def second_getPData(strSource):

soup = BeautifulSoup(strSource, "lxml")

for img, strTitle in zip(soup.find_all('img',attrs={'class': 'default-img'}),soup.find_all('a', attrs={'class': 'name'})):

print(img)

imgUrl = img.attrs['data-src']

# strTitle = soup.find('a', attrs={'class': 'name'}).get_text().replace('\n', '').replace(' ', '')

strTitle = strTitle.get_text().replace('\n', '').replace(' ', '')

print(strTitle)

second_getImg(imgUrl,strTitle)

# 请求二次图片

def second_getImg(imgUrl,strTitle):

headers = {

'User_Agent' : random.choice(user_agent)

}

res = requests.get(imgUrl, headers = headers)

strPath = r'F:\mine\experience\maoyanout\picture' + '\\'+ strTitle + '.jpg'

with open(strPath, 'wb')as fw:

fw.write(res.content)

return res.content

#获奖信息

def second_getward(url):

nominate = re.findall("(提名:.+)<", url)

award = re.findall("(获奖:.+)<", url)

result, iCount = np.unique(nominate, return_counts=True)

result1, iCount1 = np.unique(award, return_counts=True)

for i in range(0, len(result)):

print(list(result)[i])

for i in range(0, len(result1)):

print(list(result1)[i])

四、项目代码注意点

replace(),处理空格和回车strTitle = strTitle.get_text().replace(’\n’, ‘’).replace(’ ', ‘’) 循环输出

for i in range(0, len(result)):

print(list(result)[i]) 正则表达式

result = re.findall(

‘<dd.?index.?">(.?)<.?title="(.?)".?data-src="(.?)".?star">(.?)<.?time">(.?)</p.?integer">(.?)<.?fraction">(.*?)<’,

strPageSource, re.S)

主要使用可以查看资源:python爬虫课程要点.doc 数据截取 [3:5] 表示第三位到第五位

_lData[len(_lData) - 1][3] = _lData[len(_lData)-1][3][3::]

五、项目演示

主界面

数据库

Excel表格

照片

作者:帅气转身而过