Integrating SPARK2 with Phoenix on Ambari HDP

Author: 跟着大数据和AI去旅行

1. Environment

| Component | Version |
|---|---|
| Operating system | CentOS Linux release 7.4.1708 (Core) |
| Ambari | 2.6.x |
| HDP | 2.6.3.0 |
| Spark | 2.x |
| Phoenix | 4.10.0-HBase-1.2 |

2. Prerequisites

HBase is installed.

Phoenix is enabled, as shown in the Ambari UI.

Official Phoenix integration guide: http://phoenix.apache.org/phoenix_spark.html

Steps:

Open the Spark2 configuration page in Ambari.

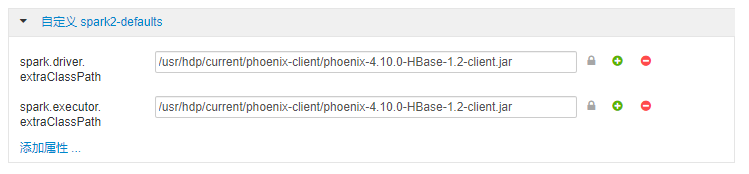

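Under Custom spark2-defaults, add the following properties so that both the driver and the executors have the Phoenix client jar on their classpath: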

spark.driver.extraClassPath=/usr/hdp/current/phoenix-client/phoenix-4.10.0-HBase-1.2-client.jar

spark.executor.extraClassPath=/usr/hdp/current/phoenix-client/phoenix-4.10.0-HBase-1.2-client.jar
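With both classpath properties in place, a Spark2 job should be able to load a Phoenix table through the `org.apache.phoenix.spark` data source described in the official guide. The following is only a minimal sketch under stated assumptions, not the author's code: the table name `TABLE1`, the ZooKeeper address `zk-host:2181`, and the object and helper names are placeholders. On some HDP clusters the ZooKeeper URL may also need the HBase znode appended (for example `zk-host:2181:/hbase-unsecure`).

```scala
import org.apache.spark.sql.SparkSession

object PhoenixReadSketch {
  // Builds the two options the phoenix-spark data source reads: "table" and "zkUrl".
  // Helper name is hypothetical, introduced only for this sketch.
  def phoenixOptions(table: String, zkUrl: String): Map[String, String] =
    Map("table" -> table, "zkUrl" -> zkUrl)

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("PhoenixReadSketch")
      .getOrCreate()

    // TABLE1 and zk-host:2181 are placeholders; replace them with a real
    // Phoenix table and the cluster's ZooKeeper quorum.
    val df = spark.read
      .format("org.apache.phoenix.spark")
      .options(phoenixOptions("TABLE1", "zk-host:2181"))
      .load()

    // If the Phoenix client jar is missing from the classpath, load() fails
    // before this point because the data source class cannot be found.
    df.printSchema()
    df.show(10)

    spark.stop()
  }
}
```

If the job fails because the `org.apache.phoenix.spark` data source cannot be found, the two `extraClassPath` entries are the first thing to recheck.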

If YARN HA is configured, the YARN HA configuration must also be changed; otherwise, jobs submitted with spark-submit fail with the following error:

Exception in thread "main" java.lang.IllegalAccessError: tried to access method org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider.getProxyInternal()Ljava/lang/Object; from class org.apache.hadoop.yarn.client.RequestHedgingRMFailoverProxyProvider

at org.apache.hadoop.yarn.client.RequestHedgingRMFailoverProxyProvider.init(RequestHedgingRMFailoverProxyProvider.java:75)

at org.apache.hadoop.yarn.client.RMProxy.createRMFailoverProxyProvider(RMProxy.java:163)

at org.apache.hadoop.yarn.client.RMProxy.createRMProxy(RMProxy.java:94)

at org.apache.hadoop.yarn.client.ClientRMProxy.createRMProxy(ClientRMProxy.java:72)

at org.apache.hadoop.yarn.client.api.impl.YarnClientImpl.serviceStart(YarnClientImpl.java:187)

at org.apache.hadoop.service.AbstractService.start(AbstractService.java:193)

at org.apache.spark.deploy.yarn.Client.submitApplication(Client.scala:153)

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.start(YarnClientSchedulerBackend.scala:56)

at org.apache.spark.scheduler.TaskSchedulerImpl.start(TaskSchedulerImpl.scala:173)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:509)

at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2516)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$7.apply(SparkSession.scala:922)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$7.apply(SparkSession.scala:914)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:914)

at cn.spark.sxt.SparkOnPhoenix$.main(SparkOnPhoenix.scala:13)

at cn.spark.sxt.SparkOnPhoenix.main(SparkOnPhoenix.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.i

Modify the YARN HA configuration.

Change the original setting:

yarn.client.failover-proxy-provider=org.apache.hadoop.yarn.client.RequestHedgingRMFailoverProxyProvider

to the new setting:

yarn.client.failover-proxy-provider=org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider

If YARN HA is not configured, skip this step.
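To confirm which failover proxy provider a client actually picks up after the change, the effective value can be read back from the YARN configuration. This is a small sketch, assuming the Hadoop YARN client libraries and the directory containing `yarn-site.xml` are on the classpath; the object name `CheckFailoverProvider` and the helper `isExpected` are hypothetical.

```scala
import org.apache.hadoop.yarn.conf.YarnConfiguration

object CheckFailoverProvider {
  val Key = "yarn.client.failover-proxy-provider"
  val Expected = "org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider"

  // True only when the configured provider is the one this article switches to.
  // A null value (property not set anywhere) compares as false.
  def isExpected(value: String): Boolean = value == Expected

  def main(args: Array[String]): Unit = {
    // YarnConfiguration reads yarn-default.xml and yarn-site.xml from the classpath.
    val conf = new YarnConfiguration()
    val value = conf.get(Key)
    println(s"$Key = $value")

    // Exit non-zero so the check can be used in a script.
    if (!isExpected(value)) sys.exit(1)
  }
}
```

If the printed value is still `RequestHedgingRMFailoverProxyProvider`, the client configuration has most likely not been redeployed or the affected services have not been restarted in Ambari.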
