Reptile: On First-Order Meta-Learning Algorithms

Paper:https://arxiv.org/pdf/1803.02999.pdf

Code:https://github.com/openai/supervised-reptile

Tips:OpenAi的一篇相似MAML的Meta-learning相关的paper。

(阅读笔记)

回顾了MAML相关工作。

目标是求解下式,其中τ\tauτ是不同的任务集,ϕ\phiϕ是初始参数,LLL是损失函数,UτkU_{\tau}^{k}Uτk表示从任务集τ\tauτ抽样出来训练的第kkk次的参数更新操作:

minϕEτ[Lτ(Uτk(ϕ))]

\min_{\phi}\mathbb{E}_{\tau}[L_{\tau}(U_{\tau}^{k}(\phi)) ]

ϕminEτ[Lτ(Uτk(ϕ))]

AAA是原始训练任务集,BBB是新任务集。MAML的训练操作仍然对原始任务集进行训练,但是其损失函数却是针对的BBB,如下所示:

minϕEτ[Lτ,B(Uτ,A(ϕ))]

\min_{\phi}\mathbb{E}_{\tau}[L_{\tau,B}(U_{\tau,A}(\phi)) ]

ϕminEτ[Lτ,B(Uτ,A(ϕ))]

找梯度即需对参数ϕ\phiϕ求偏导(复合函数求导):

g=∂Lτ,B(Uτ,A(ϕ))∂ϕ=Lτ,B′(Uτ,A(ϕ))×Uτ,A′(ϕ)=∂Lτ,B(Uτ,A(ϕ))∂Uτ,A(ϕ)×∂Uτ,A(ϕ)∂ϕ

g=\frac{\partial L_{\tau,B}(U_{\tau,A}(\phi))}{\partial \phi}\\ \\=L_{\tau,B}'(U_{\tau,A}(\phi)) \times U_{\tau,A}'(\phi)=\frac{\partial L_{\tau,B}(U_{\tau,A}(\phi))}{\partial U_{\tau,A}(\phi)} \times \frac{\partial U_{\tau,A}(\phi)}{\partial \phi}

g=∂ϕ∂Lτ,B(Uτ,A(ϕ))=Lτ,B′(Uτ,A(ϕ))×Uτ,A′(ϕ)=∂Uτ,A(ϕ)∂Lτ,B(Uτ,A(ϕ))×∂ϕ∂Uτ,A(ϕ)

使用恒等操作(对第二项偏微分变为常量1),得到First-order MAML为:

g=∂Lτ,B(Uτ,A(ϕ))∂Uτ,A(ϕ)

g=\frac{\partial L_{\tau,B}(U_{\tau,A}(\phi))}{\partial U_{\tau,A}(\phi)}

g=∂Uτ,A(ϕ)∂Lτ,B(Uτ,A(ϕ))

即损失下降梯度的方向为在任务集AAA得到参数ϕ\phiϕ的情况下,通过对测试集BBB得到的损失最小化的方向即是外循环的方向。

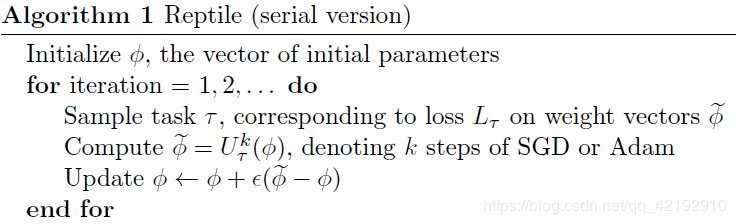

注意到可以一次迭代中将ϕ~\widetilde{\phi}ϕ进行kkk步后,最后才确定梯度的方向。

作者:强大源