Writing a Convolutional Neural Network in TensorFlow: MNIST Dataset, Detailed Walkthrough

Import packages and MNIST

其中strides=[B, H, W, C]分别代表在batch、矩阵的高度、矩阵的宽度、输入的通道四个维度移动的步长。

padding:SAME:补零;VAILID:原图 tf.nn.max_pool(x, ksize=[1, k, k, 1], strides=[1, k, k, 1], padding=‘SAME’)

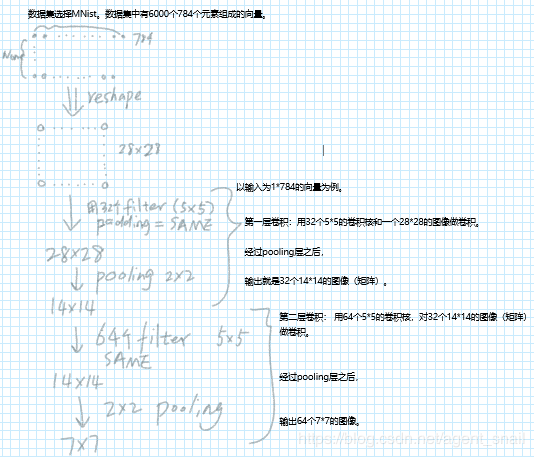

其中ksize是池化窗口的大小,由于一般不在batch和channel维度上做池化,所以这里为1 x.get_shape(),只有tensor才可以使用这种方法,返回的是一个TensorShape对象。as_list()将元组转换成list. tf.nn.dropout():tensorflow里面为了防止或减轻过拟合而使用的函数,它一般用在全连接层。Dropout就是在不同的训练过程中随机扔掉一部分神经元。也就是让某个神经元的激活值以一定的概率p,让其停止工作,这次训练过程中不更新权值,也不参加神经网络的计算。但是它的权重得保留下来(只是暂时不更新而已),因为下次样本输入时它可能又得工作了 网络结构定义 第一个卷积层:5 * 5卷积核,1个输入([-1,28,28,1]中的1),32个输出 第二个卷积层:5 * 5卷积核,32个输入(上一层输出),64个输出 全连接层:7×7×64 个输入和 1024 个输出

(输出图像的个数为认为设定) 卷积层的结构理解:

Author: pycolar

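```python
# This code targets TensorFlow 1.x. In TensorFlow 2.x, tf.placeholder and tf.Session
# exist only under tf.compat.v1, and the tutorials MNIST helper is no longer shipped.
from __future__ import division, print_function  # __future__ brings Python 3 behavior to Python 2; division: true (float) division

import tensorflow as tf
import matplotlib.pyplot as plt
import numpy as np
from tensorflow.examples.tutorials.mnist import input_data

# Read the MNIST data from the folder given in quotes (it is downloaded there if missing).
# Each MNIST image is a 28*28 pixel matrix (28*28 = 784 pixels), stored as a flat row of 784 values.
mnist = input_data.read_data_sets("datasets/MNIST_data/", one_hot=True)
```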

Inspect the data

```python
def train_size(num):
    """Print the shape of the full training set and return its first num samples."""
    print('Training = ' + str(mnist.train.images.shape))
    print('-------------------------------------------')
    x_train = mnist.train.images[:num, :]
    print('x_Training = ' + str(x_train.shape))
    y_train = mnist.train.labels[:num, :]
    print('y_Training = ' + str(y_train.shape))
    print('')
    return x_train, y_train

def test_size(num):
    """Print the shape of the full test set and return its first num samples."""
    print('Test = ' + str(mnist.test.images.shape))  # shape of the full test set
    print('-------------------------------------------')
    x_test = mnist.test.images[:num, :]
    print('x_Test = ' + str(x_test.shape))
    y_test = mnist.test.labels[:num, :]
    print('y_Test = ' + str(y_test.shape))
    print('')
    return x_test, y_test
```

Display an image

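```python
def display_digit(num):
    """Show training image number num together with its label."""
    print(y_train[num])                     # one-hot label vector
    label = y_train[num].argmax(axis=0)     # index of the 1 = the digit
    image = x_train[num].reshape([28, 28])  # turn the flat 784-value row into a 28*28 image that is easy to view
    plt.title('Example: %d Label: %d' % (num, label))
    # cmap: the colormap. 'gray' maps low values to black and high values to white;
    # 'gray_r' is the reverse (low = white, high = black).
    plt.imshow(image, cmap=plt.get_cmap('gray_r'))
    plt.show()
```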

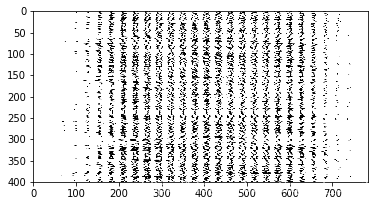
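The reshape([28, 28]) call above relies on MNIST storing each image row by row: pixel (r, c) sits at flat index r * 28 + c. A minimal pure-Python sketch of that row-major mapping (the helper name reshape_row_major is hypothetical, not part of NumPy or TensorFlow):

```python
def reshape_row_major(flat, rows, cols):
    """Split a flat list into rows of length cols (row-major order, NumPy's default)."""
    if len(flat) != rows * cols:
        raise ValueError("size mismatch: %d != %d * %d" % (len(flat), rows, cols))
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]

flat_image = list(range(784))  # stand-in for one 784-pixel MNIST row
image = reshape_row_major(flat_image, 28, 28)
print(len(image), len(image[0]))  # 28 28
print(image[1][0])                # 28: row 1 starts right after the first 28 pixels
```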

Display several flattened images stacked into one matrix

```python
def display_mult_flat(start, stop):
    """Show training images start..stop-1 as one matrix, one flattened image per row."""
    images = x_train[start].reshape([1, 784])
    for i in range(start + 1, stop):
        # Stack the images: numpy.concatenate((a1, a2, ...), axis=0) joins the arrays
        # along the given axis into a new array; the default axis=0 appends rows.
        images = np.concatenate((images, x_train[i].reshape([1, 784])))
    plt.imshow(images, cmap=plt.get_cmap('gray_r'))
    plt.show()
```

```python
x_train, y_train = train_size(55000)

# Show a random digit. np.random.randint(low, high=None, size=None) returns a random
# integer in [low, high): low is included, high is excluded. If high is omitted,
# the result is drawn from [0, low).
display_digit(np.random.randint(0, x_train.shape[0]))  # x_train.shape is (55000, 784)
display_mult_flat(0, 400)
```

```
Training = (55000, 784)
-------------------------------------------
x_Training = (55000, 784)
y_Training = (55000, 10)

[0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]
```
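The last line of the output above is the one-hot label printed by display_digit: with one_hot=True each label is a 10-element vector holding a single 1 at the digit's index, and argmax recovers the digit (here 1). A small pure-Python sketch of both directions; one_hot and argmax are hypothetical helpers written for illustration:

```python
def one_hot(digit, n_classes=10):
    """Encode a digit as a one-hot vector of floats."""
    vec = [0.0] * n_classes
    vec[digit] = 1.0
    return vec

def argmax(values):
    """Index of the largest value (the first one on ties), like NumPy's argmax."""
    return max(range(len(values)), key=lambda i: values[i])

label = [0., 1., 0., 0., 0., 0., 0., 0., 0., 0.]  # the vector printed above
print(argmax(label))        # 1
print(one_hot(1) == label)  # True
```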

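```python
learning_rate = 0.001  # Adam learning rate
training_iters = 500   # number of training steps (one minibatch each), not epochs
batch_size = 128       # samples per minibatch
display_step = 10      # log loss and accuracy every 10 steps
n_input = 784          # MNIST input size (28*28 pixels)
n_classes = 10         # MNIST classes (digits 0-9)
dropout = 0.85         # probability of KEEPING a unit; fed to keep_prob during training
```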
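training_iters counts minibatch steps, not passes over the data. A quick arithmetic check of how much data 500 steps of 128 samples cover, using the 55,000 training images reported in the output above:

```python
training_iters = 500
batch_size = 128
display_step = 10
n_train = 55000  # mnist.train.images.shape[0], from the output above

samples_seen = training_iters * batch_size  # 64,000 images
epochs = samples_seen / n_train             # about 1.16 passes over the training set
n_logged = training_iters // display_step   # 50 logged evaluations

print(samples_seen, round(epochs, 2), n_logged)  # 64000 1.16 50
```

So the run below trains for only slightly more than one epoch.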

Placeholders for the computation graph's inputs

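```python
x = tf.placeholder(tf.float32, [None, n_input])    # input: a matrix with any number (None) of rows and 784 columns
y = tf.placeholder(tf.float32, [None, n_classes])  # one-hot labels
keep_prob = tf.placeholder(tf.float32)             # dropout parameter: probability that an element is kept
```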

Build the network

Usage of the key functions

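x = tf.nn.conv2d(x, W, strides=[1, strides, strides, 1], padding='SAME'): here strides=[B, H, W, C] gives the step size along the four input dimensions: batch, height, width and input channels. In tf.nn.conv2d the batch and channel entries must be 1.

padding: 'SAME' pads the input with zeros so that, with stride 1, the output has the same height and width as the input; 'VALID' uses the original image without padding, so the output shrinks.

tf.nn.max_pool(x, ksize=[1, k, k, 1], strides=[1, k, k, 1], padding='SAME'): ksize is the size of the pooling window. Pooling is normally not applied across the batch and channel dimensions, so those entries are 1.

x.get_shape() is a method of TensorFlow tensors (and variables, as in weights['wd1'].get_shape() below), not of NumPy arrays. It returns a TensorShape object, and as_list() converts that object into a Python list.

tf.nn.dropout() is TensorFlow's function for preventing or reducing overfitting, and it is usually applied to fully connected layers. Dropout randomly drops a different subset of neurons in each training step: each neuron's activation is set to zero with some probability p, so in that step the neuron takes no part in the network's computation and its weights are not updated. Its weights are still kept (they are only left unchanged for that step), because the neuron may be active again for the next input. In TensorFlow 1.x the second argument of tf.nn.dropout is keep_prob = 1 - p, and the activations that are kept are scaled by 1/keep_prob.

Network structure:

First convolutional layer: 5 × 5 kernels, 1 input channel (the 1 in [-1, 28, 28, 1]), 32 output channels.

Second convolutional layer: 5 × 5 kernels, 32 input channels (the previous layer's output), 64 output channels.

Fully connected layer: 7 × 7 × 64 inputs and 1024 outputs.

(The number of output feature maps of each layer is a design choice made by hand.)

Understanding the structure of the convolutional layers: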
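The padding modes can be made concrete with the output-size rules TensorFlow documents for a single spatial dimension of size n, window size k and stride s: 'SAME' gives ceil(n / s), 'VALID' gives ceil((n - k + 1) / s). The sketch below uses a hypothetical helper, output_size, to trace this network and reproduce the 7 × 7 × 64 input size of the fully connected layer:

```python
import math

def output_size(n, k, stride, padding):
    """Spatial output size of a TF conv/pool layer along one dimension."""
    if padding == 'SAME':
        return math.ceil(n / stride)
    if padding == 'VALID':
        return math.ceil((n - k + 1) / stride)
    raise ValueError("padding must be 'SAME' or 'VALID'")

size = 28
size = output_size(size, 5, 1, 'SAME')  # conv1 (5x5, stride 1): 28
size = output_size(size, 2, 2, 'SAME')  # pool1 (2x2, stride 2): 14
size = output_size(size, 5, 1, 'SAME')  # conv2: 14
size = output_size(size, 2, 2, 'SAME')  # pool2: 7
print(size, size * size * 64)           # 7 3136
print(output_size(28, 5, 1, 'VALID'))   # 24: without padding a 5x5 kernel shrinks the image
```

Note that the kernel size does not appear in the 'SAME' rule: zero padding absorbs it, which is why both convolutions keep the spatial size and only the two 2 × 2 pooling steps halve it.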

```python
# Convolution layer with a given stride, ReLU activation and 'SAME' padding:
def conv2d(x, W, b, strides=1):
    x = tf.nn.conv2d(x, W, strides=[1, strides, strides, 1], padding='SAME')
    # bias_add adds the 1-D bias vector b along the last (channel) dimension of x
    # by broadcasting; the result has the same shape as x.
    x = tf.nn.bias_add(x, b)
    return tf.nn.relu(x)
```
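What conv2d computes for one input channel and one kernel can be sketched in plain Python: slide the kernel over the image (TensorFlow's conv2d does not flip the kernel, so this is technically cross-correlation), treat positions outside the image as zeros ('SAME' padding, with any odd extra padding at the bottom/right), add the bias and apply ReLU. This is an illustrative reimplementation under those assumptions, not TensorFlow's code; the real op also sums over all input channels and produces one output channel per kernel. The function name conv2d_single_channel is hypothetical.

```python
def conv2d_single_channel(image, kernel, bias=0.0, stride=1):
    """'SAME' cross-correlation of one 2-D channel with one kernel, plus bias and ReLU."""
    n_h, n_w = len(image), len(image[0])
    k_h, k_w = len(kernel), len(kernel[0])
    out_h, out_w = -(-n_h // stride), -(-n_w // stride)  # ceil(n / stride)
    # Total padding needed so the last window fits; the smaller half goes top/left
    pad_h = max((out_h - 1) * stride + k_h - n_h, 0)
    pad_w = max((out_w - 1) * stride + k_w - n_w, 0)
    top, left = pad_h // 2, pad_w // 2
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = bias
            for di in range(k_h):
                for dj in range(k_w):
                    r = i * stride + di - top
                    c = j * stride + dj - left
                    if 0 <= r < n_h and 0 <= c < n_w:  # outside the image = zero padding
                        acc += image[r][c] * kernel[di][dj]
            row.append(max(acc, 0.0))  # ReLU
        out.append(row)
    return out

ones = [[1.0] * 3 for _ in range(3)]
print(conv2d_single_channel(ones, ones))             # [[4.0, 6.0, 4.0], [6.0, 9.0, 6.0], [4.0, 6.0, 4.0]]
print(conv2d_single_channel(ones, ones, bias=-5.0))  # [[0.0, 1.0, 0.0], [1.0, 4.0, 1.0], [0.0, 1.0, 0.0]]
```

The corner outputs are smaller because most of their window falls on zero padding; with a negative bias, ReLU clips them to 0.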

```python
# Max-pooling layer on input x with a k*k window, stride k and 'SAME' padding:
def maxpool2d(x, k=2):
    return tf.nn.max_pool(x, ksize=[1, k, k, 1], strides=[1, k, k, 1], padding='SAME')
```
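maxpool2d keeps the largest value in each non-overlapping k × k window. A pure-Python sketch for one 2-D channel, assuming the same 'SAME' rule as above; padded positions are simply skipped so they can never win the max. The name max_pool_2d is hypothetical:

```python
def max_pool_2d(image, k=2):
    """k x k max pooling with stride k and 'SAME' padding on one 2-D channel."""
    n_h, n_w = len(image), len(image[0])
    out_h, out_w = -(-n_h // k), -(-n_w // k)  # ceil(n / k)
    top = max(out_h * k - n_h, 0) // 2         # extra padding goes to the bottom/right
    left = max(out_w * k - n_w, 0) // 2
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            window = [image[r][c]
                      for r in range(i * k - top, i * k - top + k)
                      for c in range(j * k - left, j * k - left + k)
                      if 0 <= r < n_h and 0 <= c < n_w]
            row.append(max(window))
        out.append(row)
    return out

grid = [[1, 2, 3, 4],
        [5, 6, 7, 8],
        [9, 10, 11, 12],
        [13, 14, 15, 16]]
print(max_pool_2d(grid))                               # [[6, 8], [14, 16]]
print(max_pool_2d([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # [[5, 6], [8, 9]]
```

The second call shows how an odd size is handled: a 3 × 3 input pools to 2 × 2, just as a 7 × 7 map would pool to 4 × 4.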

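```python
# Define the convnet: conv - conv - fully connected - dropout - output layer
def conv_net(x, weights, biases, dropout):
    # Reshape each flat 784-value row back into a 28x28 image with 1 channel
    x = tf.reshape(x, shape=[-1, 28, 28, 1])

    conv1 = conv2d(x, weights['wc1'], biases['bc1'])      # convolution: 28x28x32
    conv1 = maxpool2d(conv1, k=2)                         # pooling: 14x14x32

    conv2 = conv2d(conv1, weights['wc2'], biases['bc2'])  # convolution: 14x14x64
    conv2 = maxpool2d(conv2, k=2)                         # pooling: 7x7x64

    # After the two conv layers we have 64 matrices (feature maps) of size 7*7.
    # Reshape the conv output into the fully connected layer's input:
    # each sample becomes one row of 7*7*64 values.
    fc1 = tf.reshape(conv2, [-1, weights['wd1'].get_shape().as_list()[0]])
    fc1 = tf.add(tf.matmul(fc1, weights['wd1']), biases['bd1'])  # fully connected
    fc1 = tf.nn.relu(fc1)
    fc1 = tf.nn.dropout(fc1, keep_prob=dropout)  # 'dropout' is the keep probability here

    out = tf.add(tf.matmul(fc1, weights['out']), biases['out'])  # class scores (logits)
    return out
```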
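tf.nn.dropout(fc1, keep_prob) in TensorFlow 1.x implements so-called inverted dropout: each element is kept with probability keep_prob and scaled by 1/keep_prob, otherwise it is set to 0, so the expected value of every activation is unchanged. A pure-Python sketch of that rule on a flat list; the function name and the rng parameter are illustrative, not TensorFlow's API:

```python
import random

def dropout(values, keep_prob, rng=random):
    """Inverted dropout: keep each value with probability keep_prob and scale it
    by 1/keep_prob; dropped values become 0.0."""
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]

rng = random.Random(0)                     # seeded for a reproducible example
activations = [1.0] * 10000
dropped = dropout(activations, 0.85, rng)  # keep_prob = 0.85, as in this tutorial
print(sum(v == 0.0 for v in dropped) / len(dropped))  # roughly 0.15 of the units dropped
print(sum(dropped) / len(dropped))                    # roughly 1.0: the mean is preserved
print(dropout([0.5, 2.0], 1.0) == [0.5, 2.0])         # True: keep_prob = 1.0 changes nothing
```

The last line is why evaluation feeds keep_prob = 1: with everything kept and a scale factor of 1, the layer becomes the identity.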

Define the network's weights and biases

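```python
# tf.random_normal defaults to mean 0.0 and stddev 1.0. Such large initial weights give
# very large logits, which likely explains the huge loss values early in training.
weights = {
    'wc1': tf.Variable(tf.random_normal([5, 5, 1, 32])),      # 5x5 kernels, 1 input channel, 32 outputs
    'wc2': tf.Variable(tf.random_normal([5, 5, 32, 64])),     # 5x5 kernels, 32 inputs, 64 outputs
    'wd1': tf.Variable(tf.random_normal([7*7*64, 1024])),     # fully connected: 7*7*64 inputs, 1024 outputs
    'out': tf.Variable(tf.random_normal([1024, n_classes])),  # 1024 inputs, 10 class scores
}

biases = {
    'bc1': tf.Variable(tf.random_normal([32])),
    'bc2': tf.Variable(tf.random_normal([64])),
    'bd1': tf.Variable(tf.random_normal([1024])),
    'out': tf.Variable(tf.random_normal([n_classes])),
}
```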
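The shapes in these two dictionaries determine the number of trainable parameters. A short tally in plain Python, using the same shapes (the output bias is renamed b_out here only because both dictionaries use the key 'out'), shows that the fully connected layer wd1 holds almost all of them:

```python
shapes = {
    'wc1': [5, 5, 1, 32], 'bc1': [32],
    'wc2': [5, 5, 32, 64], 'bc2': [64],
    'wd1': [7 * 7 * 64, 1024], 'bd1': [1024],
    'out': [1024, 10], 'b_out': [10],  # n_classes = 10
}

def num_elements(shape):
    """Product of the dimensions, i.e. the number of entries in a tensor of this shape."""
    count = 1
    for dim in shape:
        count *= dim
    return count

counts = {name: num_elements(shape) for name, shape in shapes.items()}
total = sum(counts.values())
print(counts['wd1'], total)                    # 3211264 3274634
print(round(counts['wd1'] / total * 100, 1))   # 98.1 (% of all parameters)
```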

Build the model

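```python
pred = conv_net(x, weights, biases, keep_prob)  # logits

# Mean softmax cross-entropy over the batch (the op applies softmax to the logits itself)
cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=pred, labels=y))
optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(cost)

# A prediction is correct when the highest-scoring class equals the labeled class
correct_prediction = tf.equal(tf.argmax(pred, 1), tf.argmax(y, 1))
accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

init = tf.global_variables_initializer()
```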
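For one example with logits z and one-hot label y, softmax_cross_entropy_with_logits computes -sum_i y_i * log(softmax(z)_i), and accuracy is the fraction of examples whose argmax matches the label. A pure-Python sketch of both, with hypothetical helper names; the log-sum-exp shift is the standard trick for numerical stability. The second print suggests why very large logits, such as those produced by weights drawn with stddev 1.0, can give losses in the thousands when the prediction is wrong:

```python
import math

def softmax_cross_entropy(logits, one_hot_label):
    """-sum_i y_i * log(softmax(logits)_i), computed stably via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(y * (z - log_sum_exp) for z, y in zip(logits, one_hot_label))

def accuracy(batch_logits, batch_labels):
    """Fraction of rows whose highest logit is at the labeled class."""
    def argmax(v):
        return max(range(len(v)), key=lambda i: v[i])
    correct = [argmax(z) == argmax(y) for z, y in zip(batch_logits, batch_labels)]
    return sum(correct) / len(correct)

label = [1.0] + [0.0] * 9
print(softmax_cross_entropy([0.0] * 10, label))              # log(10), about 2.3026: a uniform guess
print(softmax_cross_entropy([0.0, 1000.0], [1.0, 0.0]))      # 1000.0: confidently wrong
print(accuracy([[0.1, 0.9], [0.8, 0.2]], [[0, 1], [0, 1]]))  # 0.5
```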

```python
train_loss = []
train_acc = []
test_acc = []

with tf.Session() as sess:
    sess.run(init)
    step = 1
    while step <= training_iters:
        batch_x, batch_y = mnist.train.next_batch(batch_size)
        # No manual reshape needed: conv_net reshapes x to [-1, 28, 28, 1] itself
        # batch_x = np.reshape(batch_x, [-1, 28, 28, 1])
        sess.run(optimizer, feed_dict={x: batch_x, y: batch_y, keep_prob: dropout})

        # Evaluate and log every display_step steps, with dropout disabled (keep_prob = 1.0)
        if step % display_step == 0:
            loss_train, acc_train = sess.run([cost, accuracy], feed_dict={x: batch_x, y: batch_y, keep_prob: 1.0})
            print("Iter" + str(step) + ",Minibatch Loss = " +
                  "{:.2f}".format(loss_train) + ", Training Accuracy = " +
                  "{:.2f}".format(acc_train))
            acc_test = sess.run(accuracy, feed_dict={x: mnist.test.images, y: mnist.test.labels, keep_prob: 1.0})
            print("Testing Accuracy = " + "{:.2f}".format(acc_test))
            train_loss.append(loss_train)
            train_acc.append(acc_train)
            test_acc.append(acc_test)
        step += 1
```

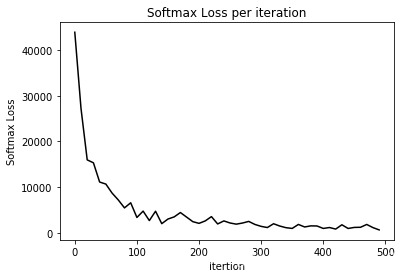

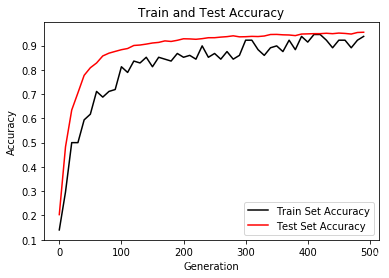

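Results. This output was produced by code that printed acc_train instead of acc_test on the "Testing Accuracy" line, so each Testing Accuracy value below simply repeats the training accuracy of the same step: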

Iter10,Minibatch Loss = 43936.12, Training Accuracy = 0.14

Testing Accuracy = 0.14

Iter20,Minibatch Loss = 27234.01, Training Accuracy = 0.30

Testing Accuracy = 0.30

Iter30,Minibatch Loss = 15954.67, Training Accuracy = 0.50

Testing Accuracy = 0.50

Iter40,Minibatch Loss = 15314.48, Training Accuracy = 0.50

Testing Accuracy = 0.50

Iter50,Minibatch Loss = 11097.48, Training Accuracy = 0.59

Testing Accuracy = 0.59

Iter60,Minibatch Loss = 10651.96, Training Accuracy = 0.62

Testing Accuracy = 0.62

Iter70,Minibatch Loss = 8680.00, Training Accuracy = 0.71

Testing Accuracy = 0.71

Iter80,Minibatch Loss = 7176.53, Training Accuracy = 0.69

Testing Accuracy = 0.69

Iter90,Minibatch Loss = 5430.07, Training Accuracy = 0.71

Testing Accuracy = 0.71

Iter100,Minibatch Loss = 6548.58, Training Accuracy = 0.72

Testing Accuracy = 0.72

Iter110,Minibatch Loss = 3333.26, Training Accuracy = 0.81

Testing Accuracy = 0.81

Iter120,Minibatch Loss = 4729.37, Training Accuracy = 0.79

Testing Accuracy = 0.79

Iter130,Minibatch Loss = 2650.15, Training Accuracy = 0.84

Testing Accuracy = 0.84

Iter140,Minibatch Loss = 4700.38, Training Accuracy = 0.83

Testing Accuracy = 0.83

Iter150,Minibatch Loss = 1974.79, Training Accuracy = 0.85

Testing Accuracy = 0.85

Iter160,Minibatch Loss = 2988.53, Training Accuracy = 0.81

Testing Accuracy = 0.81

Iter170,Minibatch Loss = 3457.32, Training Accuracy = 0.85

Testing Accuracy = 0.85

Iter180,Minibatch Loss = 4411.01, Training Accuracy = 0.84

Testing Accuracy = 0.84

Iter190,Minibatch Loss = 3413.62, Training Accuracy = 0.84

Testing Accuracy = 0.84

Iter200,Minibatch Loss = 2411.36, Training Accuracy = 0.87

Testing Accuracy = 0.87

Iter210,Minibatch Loss = 2040.41, Training Accuracy = 0.85

Testing Accuracy = 0.85

Iter220,Minibatch Loss = 2574.14, Training Accuracy = 0.86

Testing Accuracy = 0.86

Iter230,Minibatch Loss = 3515.91, Training Accuracy = 0.84

Testing Accuracy = 0.84

Iter240,Minibatch Loss = 1911.09, Training Accuracy = 0.90

Testing Accuracy = 0.90

Iter250,Minibatch Loss = 2576.21, Training Accuracy = 0.85

Testing Accuracy = 0.85

Iter260,Minibatch Loss = 2118.93, Training Accuracy = 0.87

Testing Accuracy = 0.87

Iter270,Minibatch Loss = 1865.62, Training Accuracy = 0.84

Testing Accuracy = 0.84

Iter280,Minibatch Loss = 2111.70, Training Accuracy = 0.88

Testing Accuracy = 0.88

Iter290,Minibatch Loss = 2465.34, Training Accuracy = 0.84

Testing Accuracy = 0.84

Iter300,Minibatch Loss = 1801.69, Training Accuracy = 0.86

Testing Accuracy = 0.86

Iter310,Minibatch Loss = 1379.81, Training Accuracy = 0.92

Testing Accuracy = 0.92

Iter320,Minibatch Loss = 1124.99, Training Accuracy = 0.92

Testing Accuracy = 0.92

Iter330,Minibatch Loss = 1956.47, Training Accuracy = 0.88

Testing Accuracy = 0.88

Iter340,Minibatch Loss = 1452.55, Training Accuracy = 0.86

Testing Accuracy = 0.86

Iter350,Minibatch Loss = 1092.12, Training Accuracy = 0.89

Testing Accuracy = 0.89

Iter360,Minibatch Loss = 928.34, Training Accuracy = 0.90

Testing Accuracy = 0.90

Iter370,Minibatch Loss = 1805.11, Training Accuracy = 0.88

Testing Accuracy = 0.88

Iter380,Minibatch Loss = 1248.89, Training Accuracy = 0.92

Testing Accuracy = 0.92

Iter390,Minibatch Loss = 1489.37, Training Accuracy = 0.88

Testing Accuracy = 0.88

Iter400,Minibatch Loss = 1462.85, Training Accuracy = 0.94

Testing Accuracy = 0.94

Iter410,Minibatch Loss = 926.76, Training Accuracy = 0.91

Testing Accuracy = 0.91

Iter420,Minibatch Loss = 1127.02, Training Accuracy = 0.95

Testing Accuracy = 0.95

Iter430,Minibatch Loss = 786.22, Training Accuracy = 0.95

Testing Accuracy = 0.95

Iter440,Minibatch Loss = 1727.24, Training Accuracy = 0.92

Testing Accuracy = 0.92

Iter450,Minibatch Loss = 920.39, Training Accuracy = 0.89

Testing Accuracy = 0.89

Iter460,Minibatch Loss = 1150.05, Training Accuracy = 0.92

Testing Accuracy = 0.92

Iter470,Minibatch Loss = 1181.85, Training Accuracy = 0.92

Testing Accuracy = 0.92

Iter480,Minibatch Loss = 1799.36, Training Accuracy = 0.89

Testing Accuracy = 0.89

Iter490,Minibatch Loss = 1111.24, Training Accuracy = 0.92

Testing Accuracy = 0.92

Iter500,Minibatch Loss = 602.50, Training Accuracy = 0.94

Testing Accuracy = 0.94

```python
# Values were recorded at steps display_step, 2*display_step, ..., training_iters
eval_indices = range(display_step, training_iters + 1, display_step)

# Plot the loss
plt.plot(eval_indices, train_loss, 'k-')
plt.title('Softmax Loss per iteration')
plt.xlabel('Iteration')
plt.ylabel('Softmax Loss')
plt.show()

# Plot the accuracy
plt.plot(eval_indices, train_acc, 'k-', label='Train Set Accuracy')
plt.plot(eval_indices, test_acc, 'r-', label='Test Set Accuracy')
plt.title('Train and Test Accuracy')
plt.xlabel('Iteration')
plt.ylabel('Accuracy')
plt.legend(loc='lower right')
plt.show()
```
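The training loop records a value only when step % display_step == 0, with step running from 1 to training_iters, so the 50 recorded points belong at steps 10, 20, ..., 500. A quick plain-Python check of which range matches those positions:

```python
training_iters, display_step = 500, 10

# Steps at which the loop appends to train_loss / train_acc / test_acc
logged_steps = [step for step in range(1, training_iters + 1) if step % display_step == 0]

print(len(logged_steps), logged_steps[0], logged_steps[-1])                         # 50 10 500
print(list(range(display_step, training_iters + 1, display_step)) == logged_steps)  # True
print(list(range(0, training_iters, display_step)) == logged_steps)                 # False: 0..490, one interval early
```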

Reference article

Author: pycolar