机器学习入门 --- 基于随机森林的气温预测(三)随机森林参数选择

本文中将针对树模型的参数进行优化

数据预处理前面已经做过好几次数据预处理了,这里直接上代码

得到数据

# 导入工具包

import pandas as pd

import numpy as np

from sklearn.model_selection import train_test_split

# 读取数据

features = pd.read_csv('data/temps_extended.csv')

# 独热编码处理数据

features = pd.get_dummies(features)

# 标签和特征划分

labels = features['actual']

features = features.drop('actual', axis = 1)

# 获取特征list

feature_list = list(features.columns)

# 转换数据类型

features = np.array(features)

labels = np.array(labels)

# 划分数据集

train_features, test_features, train_labels, test_labels = train_test_split(features, labels,

test_size = 0.25,

random_state = 42)

准备训练集、测试集

# 选择6个总要特征

important_feature_names = ['temp_1', 'average', 'ws_1', 'temp_2', 'friend', 'year']

# 得到索引

important_indices = [feature_list.index(feature) for feature in important_feature_names]

# 提取特征

important_train_features = train_features[:, important_indices]

important_test_features = test_features[:, important_indices]

开始调参

首先需要看一下决策树模型中一共有多少可调参数

from sklearn.ensemble import RandomForestRegressor

from pprint import pprint

# 建模

rf = RandomForestRegressor(random_state = 42)

print('Parameters currently in use:\n')

# 打印所有参数

pprint(rf.get_params())

Parameters currently in use:

{'bootstrap': True,

'criterion': 'mse',

'max_depth': None,

'max_features': 'auto',

'max_leaf_nodes': None,

'min_impurity_decrease': 0.0,

'min_impurity_split': None,

'min_samples_leaf': 1,

'min_samples_split': 2,

'min_weight_fraction_leaf': 0.0,

'n_estimators': 10,

'n_jobs': 1,

'oob_score': False,

'random_state': 42,

'verbose': 0,

'warm_start': False}

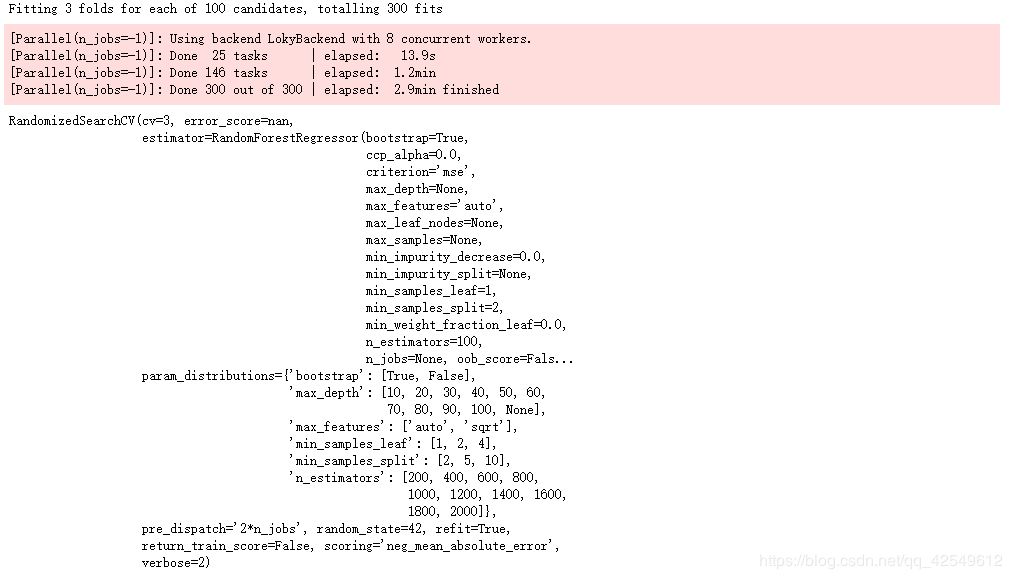

随机搜索交叉验证

使用 RandomizedSearchCV 在设定的参数空间中进行随机寻找100组,并得到效果最好的一组参数

from sklearn.model_selection import RandomizedSearchCV

# 建立树的个数

n_estimators = [int(x) for x in np.linspace(start = 200, stop = 2000, num = 10)]

# 最大特征的方式

max_features = ['auto', 'sqrt']

# 树的最大深度

max_depth = [int(x) for x in np.linspace(10, 100, num = 10)]

max_depth.append(None)

# 节点最小分裂所需样本个数

min_samples_split = [2, 5, 10]

# 叶子节点最小样本数,任何分裂不能让其子节点样本数少于此值

min_samples_leaf = [1, 2, 4]

# 样本采样方法

bootstrap = [True, False]

# Random grid

random_grid = {'n_estimators': n_estimators,

'max_features': max_features,

'max_depth': max_depth,

'min_samples_split': min_samples_split,

'min_samples_leaf': min_samples_leaf,

'bootstrap': bootstrap}

# Use the random grid to search for best hyperparameters

# 指定算法

rf = RandomForestRegressor()

# 传参

rf_random = RandomizedSearchCV(estimator=rf, # 模型

param_distributions=random_grid, # 算法空间

n_iter = 100, # 迭代次数

scoring='neg_mean_absolute_error', # 评估标准

cv = 3, # 几折的交叉验证

verbose=2, # 打印信息

random_state=42, # 随机种子

n_jobs=-1) # 用所有CPU执行

# 执行寻找操作

rf_random.fit(train_features, train_labels)

这里解释一下RandomizedSearchCV中常用的参数:

本文建立100次模型来选择参数,并且还是带有3折交叉验证的,那就相当于300个任务了,所以建议把n_ jobs设置成了-1来程序运行

这里我们看一下刚才的300组参数训练的进程中,最好的一组训练参数

rf_random.best_params_

输出结果:

{'bootstrap': True,

'max_depth': None,

'max_features': 'auto',

'min_samples_leaf': 4,

'min_samples_split': 5,

'n_estimators': 1000}

因为我们经常需要做平均绝对误差、平均绝对百分比误差,所以干脆设定一个评估函数

def evaluate(model, test_features, test_labels):

predictions = model.predict(test_features)

errors = abs(predictions - test_labels)

mape = 100 * np.mean(errors / test_labels)

accuracy = 100 - mape

print('Average Error: {:0.4f} '.format(np.mean(errors)))

print('Accuracy = {:0.2f}%'.format(accuracy))

原模型

全部使用默认参数进行训练

base_model = RandomForestRegressor(random_state = 42)

base_model.fit(train_features, train_labels)

evaluate(base_model, test_features, test_labels)

Average Error: 3.8290

Accuracy = 93.56%

新模型

使用在参数空间中找到的最好的参数进行训练

best_random = rf_random.best_estimator_

evaluate(best_random, test_features, test_labels)

Average Error: 3.7190

Accuracy = 93.73%

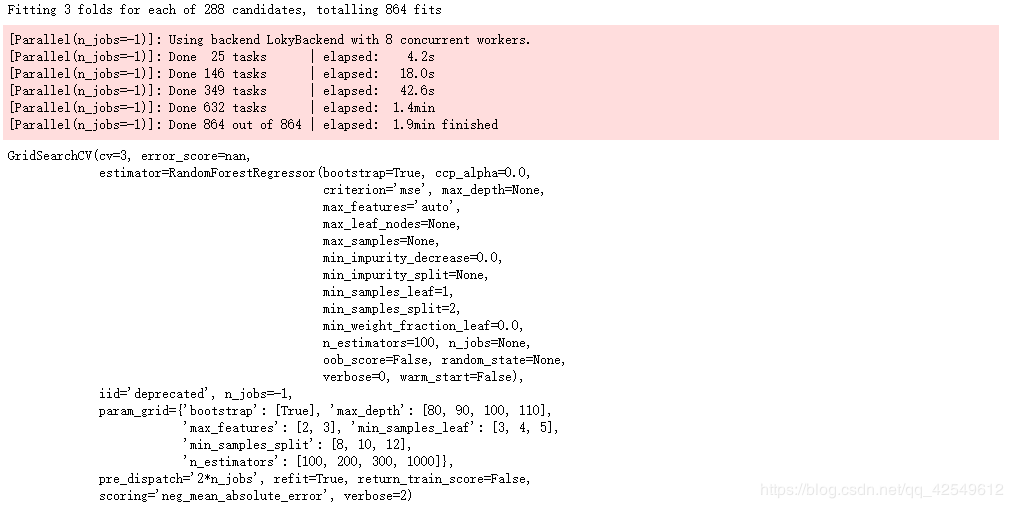

网格搜索交叉验证

可以看到进行了随机搜索进行了参数调整之后,模型的效果提升了一些,但是这个还不是上限,我们再进行网格搜索交叉验证,在随机搜索得到的最优参数附近进行一个个的遍历,把所有参数组合全部走一遍

from sklearn.model_selection import GridSearchCV

# 构建网格

param_grid = {

'bootstrap': [True],

'max_depth': [80, 90, 100, 110],

'max_features': [2, 3],

'min_samples_leaf': [3, 4, 5],

'min_samples_split': [8, 10, 12],

'n_estimators': [100, 200, 300, 1000]

}

# 实例化随机森林模型

rf = RandomForestRegressor()

# 网络搜索

grid_search = GridSearchCV(estimator = rf, param_grid = param_grid,

scoring = 'neg_mean_absolute_error', cv = 3,

n_jobs = -1, verbose = 2)

# 执行搜索

grid_search.fit(train_features, train_labels)

这里我们再看一下,经过遍历后,寻找到的最优参数组合

grid_search.best_params_

{'bootstrap': True,

'max_depth': 80,

'max_features': 3,

'min_samples_leaf': 5,

'min_samples_split': 10,

'n_estimators': 200}

再对这组参数的结果进行一个评估

best_grid = grid_search.best_estimator_

evaluate(best_grid, test_features, test_labels)

Average Error: 3.6549

Accuracy = 93.84%

结果显示,遍历后找到的参数组合,相对于随机搜索得到的参数组合,得到的结果有了进一步提升

最终模型经过了调参之后,得到了一个最优结果的参数组合如下,最终准确率为93.84%,当然这也不一定是全局最优解,想要进一步提高精度,需要不断的围绕着我们已经得到的最优参数进行不断地调整,遍历

print('Final Model Parameters:\n')

pprint(best_grid_ad.get_params())

print('\n')

evaluate(best_grid_ad, test_features, test_labels)

Final Model Parameters:

{'bootstrap': True,

'ccp_alpha': 0.0,

'criterion': 'mse',

'max_depth': 80,

'max_features': 3,

'max_leaf_nodes': None,

'max_samples': None,

'min_impurity_decrease': 0.0,

'min_impurity_split': None,

'min_samples_leaf': 5,

'min_samples_split': 10,

'min_weight_fraction_leaf': 0.0,

'n_estimators': 200,

'n_jobs': None,

'oob_score': False,

'random_state': None,

'verbose': 0,

'warm_start': False}

Average Error: 3.6549

Accuracy = 93.84%

作者:六之

相关文章

Quirita

2021-04-07

Hope

2021-03-01

Iris

2021-08-03

Grace

2020-11-05

Bunny

2023-07-20

Willow

2023-07-20

Janna

2023-07-20

Ophelia

2023-07-20

Natalia

2023-07-20

Xanthe

2023-07-20

Samira

2023-07-20

Irma

2023-07-20

Crystal

2023-07-20

Viridis

2023-07-20

Kirima

2023-07-20

Fawn

2023-07-21

Lida

2023-07-21

Eleanor

2023-07-21