Machine Learning Algorithm Basics 7: XGBoost

XGBoost is an optimized distributed gradient boosting library designed to be highly efficient, flexible and portable. It implements machine learning algorithms under the Gradient Boosting framework. XGBoost provides parallel tree boosting (also known as GBDT or GBM) that solves many data science problems quickly and accurately.
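The core idea of gradient boosting is that each new tree is fitted to the negative gradient of the loss with respect to the current ensemble's prediction, and its output is added with a shrinkage factor (XGBoost's `eta`). The sketch below shows only that plain idea, using squared loss and depth-1 trees (stumps) on a single feature. It is a simplified illustration, not XGBoost's algorithm, which additionally uses second-order gradient information, regularization, and deeper multi-feature trees. `fit_stump` and `gradient_boost` are hypothetical names, and the data is made up.

```python
import numpy as np


def fit_stump(x, r):
    """Fit a depth-1 regression tree (a stump) to targets r on a 1-D feature x."""
    best = None
    for t in np.unique(x)[:-1]:              # candidate thresholds; both sides stay non-empty
        left_mean, right_mean = r[x <= t].mean(), r[x > t].mean()
        pred = np.where(x <= t, left_mean, right_mean)
        sse = np.sum((r - pred) ** 2)
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    _, t, left_mean, right_mean = best
    return lambda z: np.where(z <= t, left_mean, right_mean)


def gradient_boost(x, y, n_rounds=20, eta=0.3):
    """Squared-loss boosting: each stump is fitted to the current residuals,
    which are the negative gradient of 0.5 * (y - F)^2 with respect to F."""
    base = y.mean()
    F = np.full(len(y), base)
    stumps = []
    for _ in range(n_rounds):
        stump = fit_stump(x, y - F)
        F = F + eta * stump(x)               # eta shrinks each step
        stumps.append(stump)
    return lambda z: base + eta * sum(s(z) for s in stumps)


rng = np.random.default_rng(0)
x = rng.uniform(0, 6, 200)
y = np.sin(x) + rng.normal(0, 0.1, 200)
for n in (1, 5, 20):
    model = gradient_boost(x, y, n_rounds=n)
    print(n, "rounds, training MSE:", np.mean((model(x) - y) ** 2))
```

Because each stump is a least-squares fit to the residuals and `eta` is at most 1, the training error cannot increase from one round to the next.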


The practice data used in this post has been uploaded to https://download.csdn.net/download/qq_22096121/12201850

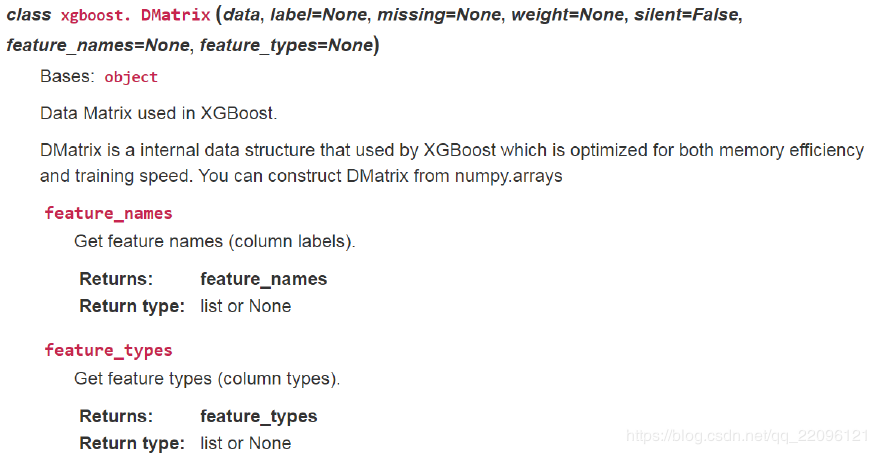

`xgboost.DMatrix` is the core data structure of this package.

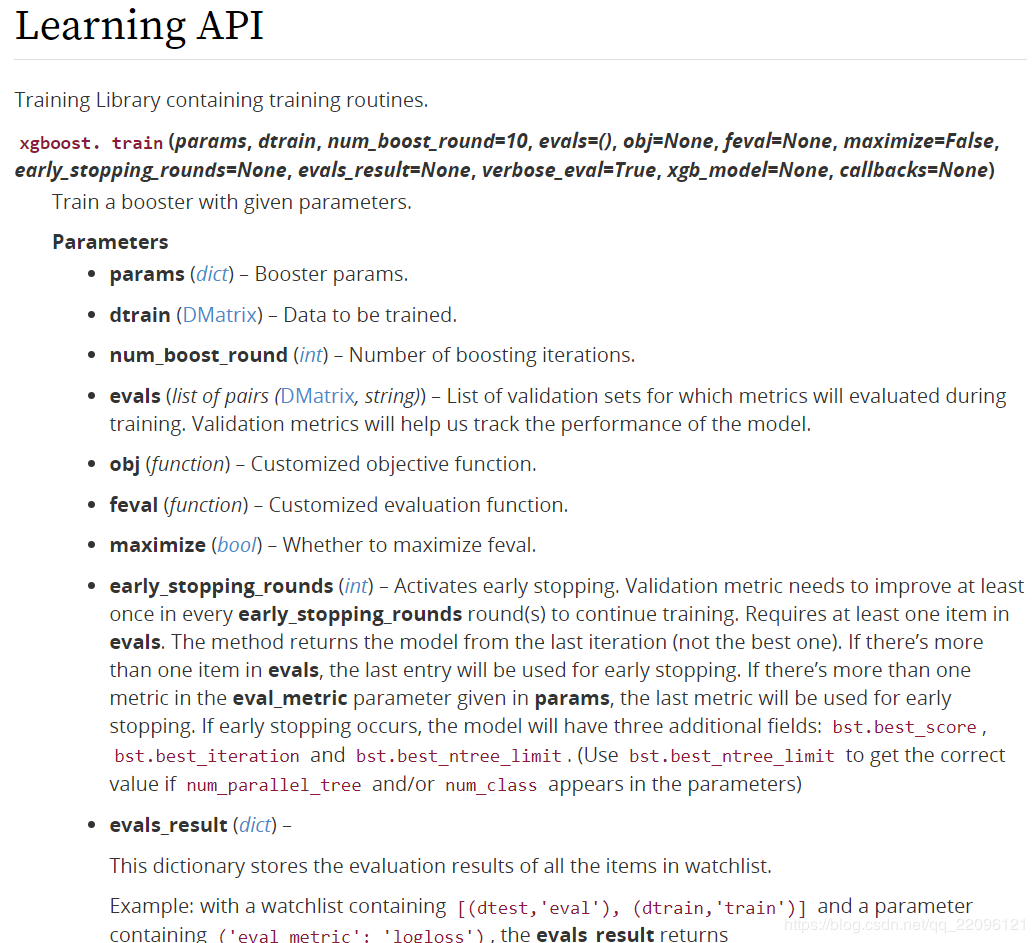

The `train()` function performs the training.

Exercise 1

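```python
#!/usr/bin/python
# -*- encoding:utf-8 -*-

import xgboost as xgb
import numpy as np

# 1. Basic use of XGBoost
# 2. A custom loss function, supplied as its gradient and second derivative
# 3. binary:logistic / binary:logitraw


# Define f: theta * x
def log_reg(y_hat, y):
    """Custom logistic objective: y_hat is the raw score, y is the DMatrix."""
    p = 1.0 / (1.0 + np.exp(-y_hat))
    g = p - y.get_label()      # first derivative of the log loss
    h = p * (1.0 - p)          # second derivative of the log loss
    return g, h


def error_rate(y_hat, y):
    """Custom metric: share of samples misclassified at threshold 0.5.
    Note: with a custom objective, newer XGBoost releases may pass raw scores
    here instead of probabilities; the matching threshold is then 0."""
    return 'error', float(sum(y.get_label() != (y_hat > 0.5))) / len(y_hat)


if __name__ == "__main__":
    # Load the data (libsvm text format, stated explicitly for newer XGBoost)
    data_train = xgb.DMatrix('agaricus_train.txt?format=libsvm')
    data_test = xgb.DMatrix('agaricus_test.txt?format=libsvm')
    print(data_train)
    print(type(data_train))

    # Set the parameters ('verbosity' replaces the deprecated 'silent')
    param = {'max_depth': 3, 'eta': 1, 'verbosity': 0, 'objective': 'binary:logistic'}  # logitraw
    # param = {'max_depth': 3, 'eta': 0.3, 'verbosity': 0, 'objective': 'reg:logistic'}
    watchlist = [(data_test, 'eval'), (data_train, 'train')]
    n_round = 7
    # bst = xgb.train(param, data_train, num_boost_round=n_round, evals=watchlist)
    bst = xgb.train(param, data_train, num_boost_round=n_round, evals=watchlist,
                    obj=log_reg, feval=error_rate)

    # Compute the error rate on the test set
    y_hat = bst.predict(data_test)
    y = data_test.get_label()
    print(y_hat)
    print(y)
    error = sum(y != (y_hat > 0.5))
    test_error_rate = float(error) / len(y_hat)
    print('Total samples:\t', len(y_hat))
    print('Errors:\t%4d' % error)
    print('Error rate:\t%.5f%%' % (100 * test_error_rate))
```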

Output:

```
[00:08:28] 6513x126 matrix with 143286 entries loaded from agaricus_train.txt
[00:08:28] 1611x126 matrix with 35442 entries loaded from agaricus_test.txt
[0] eval-error:0.01614 train-error:0.01443 eval-error:0.01614 train-error:0.01443
[1] eval-error:0.01614 train-error:0.01443 eval-error:0.01614 train-error:0.01443
[2] eval-error:0.01614 train-error:0.01443 eval-error:0.01614 train-error:0.01443
[3] eval-error:0.01614 train-error:0.01443 eval-error:0.01614 train-error:0.01443
[4] eval-error:0.00248 train-error:0.00307 eval-error:0.00248 train-error:0.00307
[5] eval-error:0.00248 train-error:0.00307 eval-error:0.00248 train-error:0.00307
[6] eval-error:0.00248 train-error:0.00307 eval-error:0.00248 train-error:0.00307
[6.0993789e-06 9.8472750e-01 6.0993789e-06 … 9.9993265e-01 4.4560062e-07
 9.9993265e-01]
[0. 1. 0. … 1. 0. 1.]
Total samples: 1611
Errors: 4
Error rate: 0.24829%
```
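`log_reg` gives XGBoost the first and second derivatives of the log loss with respect to the raw score `f`: with `p = 1 / (1 + exp(-f))`, they are `g = p - y` and `h = p * (1 - p)`. The numpy sketch below checks those formulas against central finite differences on made-up scores and labels; `grad_hess` is a hypothetical helper that takes a plain label array instead of a `DMatrix`. The last line illustrates the `binary:logistic`/`binary:logitraw` pair from the comments: per the XGBoost parameter docs, the former outputs the probability and the latter the score before the logistic transformation, so thresholding the probability at 0.5 picks the same class as thresholding the raw score at 0.

```python
import numpy as np


def sigmoid(f):
    return 1.0 / (1.0 + np.exp(-f))


def log_loss(f, label):
    """Log loss written as a function of the raw score f."""
    p = sigmoid(f)
    return -(label * np.log(p) + (1 - label) * np.log(1 - p))


def grad_hess(f, label):
    """Same formulas as log_reg, but taking a plain label array (hypothetical helper)."""
    p = sigmoid(f)
    return p - label, p * (1.0 - p)


f = np.array([-2.0, -0.5, 0.0, 1.5, 3.0])      # made-up raw scores
label = np.array([0.0, 1.0, 1.0, 0.0, 1.0])    # made-up labels
eps = 1e-4

# Central finite differences of the loss: first and second derivative
num_g = (log_loss(f + eps, label) - log_loss(f - eps, label)) / (2 * eps)
num_h = (log_loss(f + eps, label) - 2 * log_loss(f, label) + log_loss(f - eps, label)) / eps ** 2
g, h = grad_hess(f, label)
print("max |g - numeric|:", np.max(np.abs(g - num_g)))
print("max |h - numeric|:", np.max(np.abs(h - num_h)))

# Probability > 0.5 and raw score > 0 select the same samples
print((sigmoid(f) > 0.5) == (f > 0))
```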

```python
#!/usr/bin/python
# -*- encoding:utf-8 -*-

import numpy as np
import pandas as pd
import xgboost as xgb
from sklearn.model_selection import train_test_split   # formerly sklearn.cross_validation
from sklearn.linear_model import LogisticRegressionCV
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score


def iris_type(s):
    it = {b'Iris-setosa': 0, b'Iris-versicolor': 1, b'Iris-virginica': 2}
    return it[s]


if __name__ == "__main__":
    path = u'..\\8.Regression\\iris.data'  # path to the data file
    # data = np.loadtxt(path, dtype=float, delimiter=',', converters={4: iris_type})
    data = pd.read_csv(path, header=None)
    x, y = data[list(range(4))], data[4]
    y = pd.Categorical(y).codes  # encode the species names as 0, 1, 2
    x_train, x_test, y_train, y_test = train_test_split(x, y, random_state=1, test_size=50)

    data_train = xgb.DMatrix(x_train, label=y_train)
    data_test = xgb.DMatrix(x_test, label=y_test)
    watch_list = [(data_test, 'eval'), (data_train, 'train')]
    param = {'max_depth': 2, 'eta': 0.3, 'verbosity': 0, 'objective': 'multi:softmax', 'num_class': 3}
    bst = xgb.train(param, data_train, num_boost_round=6, evals=watch_list)
    y_hat = bst.predict(data_test)
    result = y_test == y_hat
    print('Accuracy:\t', float(np.sum(result)) / len(y_hat))
    print('END.....\n')

    models = [('LogisticRegression', LogisticRegressionCV(Cs=10, cv=3)),
              ('RandomForest', RandomForestClassifier(n_estimators=30, criterion='gini'))]
    for name, model in models:
        model.fit(x_train, y_train)
        print(name, 'training-set accuracy:', accuracy_score(y_train, model.predict(x_train)))
        print(name, 'test-set accuracy:', accuracy_score(y_test, model.predict(x_test)))
```

Output:

```
[0] eval-merror:0.04000 train-merror:0.04000
[1] eval-merror:0.04000 train-merror:0.04000
[2] eval-merror:0.02000 train-merror:0.02000
[3] eval-merror:0.02000 train-merror:0.02000
[4] eval-merror:0.02000 train-merror:0.02000
[5] eval-merror:0.02000 train-merror:0.02000
Accuracy: 0.98
END.....
LogisticRegression training-set accuracy: 0.97
LogisticRegression test-set accuracy: 0.96
RandomForest training-set accuracy: 1.0
RandomForest test-set accuracy: 0.96
```

Logistic regression falls slightly short on accuracy here, but it is comparatively simple.
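With `multi:softmax`, `predict` returns a class index for each sample. XGBoost also offers `multi:softprob`, which returns one probability per class instead; the class index is the argmax of those probabilities. The numpy sketch below illustrates that relationship on made-up raw scores for three samples with `num_class = 3`; `softmax` is a hand-written helper, not an XGBoost function.

```python
import numpy as np


def softmax(scores):
    """Row-wise softmax; subtracting the row max keeps exp() from overflowing."""
    z = scores - scores.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


# Made-up raw scores: 3 samples x 3 classes
scores = np.array([[2.0, 0.5, -1.0],
                   [0.1, 0.3, 0.2],
                   [-1.0, -2.0, 1.5]])
prob = softmax(scores)          # one probability per class, like multi:softprob
label = prob.argmax(axis=1)     # one class index per sample, like multi:softmax
print(prob.round(3))
print(label)  # → [0 1 2]
```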

```python
#!/usr/bin/python
# -*- encoding:utf-8 -*-

import xgboost as xgb
import numpy as np
from sklearn.model_selection import train_test_split   # formerly sklearn.cross_validation
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score


if __name__ == "__main__":
    # Exercise: try reading the data with pandas instead
    data = np.loadtxt('wine.data', dtype=float, delimiter=',')
    y, x = np.split(data, (1,), axis=1)  # the first column is the class label
    x_train, x_test, y_train, y_test = train_test_split(x, y, random_state=1, test_size=0.5)

    # Logistic regression
    lr = LogisticRegression(penalty='l2')
    lr.fit(x_train, y_train.ravel())
    y_hat = lr.predict(x_test)
    print('Logistic regression accuracy:', accuracy_score(y_test.ravel(), y_hat))

    # XGBoost
    # XGBoost expects class labels 0 .. num_class-1, so relabel class 3 as 0
    y_train[y_train == 3] = 0
    y_test[y_test == 3] = 0
    data_train = xgb.DMatrix(x_train, label=y_train.ravel())
    data_test = xgb.DMatrix(x_test, label=y_test.ravel())
    watch_list = [(data_test, 'eval'), (data_train, 'train')]
    params = {'max_depth': 3, 'eta': 1, 'verbosity': 1, 'objective': 'multi:softmax', 'num_class': 3}
    bst = xgb.train(params, data_train, num_boost_round=2, evals=watch_list)
    y_hat = bst.predict(data_test)
    print('XGBoost accuracy:', accuracy_score(y_test.ravel(), y_hat))
```

Output:

```
Logistic regression accuracy: 0.9438202247191011
[0] eval-merror:0.01124 train-merror:0.00000
[1] eval-merror:0.00000 train-merror:0.00000
XGBoost accuracy: 1.0
```
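The wine script relabels class 3 as 0 because XGBoost's multi-class objectives expect labels in `0 .. num_class-1`. A more general approach, sketched here with made-up labels, is `np.unique(..., return_inverse=True)`: it maps any set of class values onto consecutive integers and keeps the original values, so predictions can be translated back. `predict` returns floats, hence the `astype(int)` before indexing.

```python
import numpy as np

y_raw = np.array([1, 3, 2, 3, 1, 2])              # made-up labels, like wine.data's first column
classes, y_enc = np.unique(y_raw, return_inverse=True)
print(classes)   # → [1 2 3]
print(y_enc)     # → [0 2 1 2 0 1]

# Class indices as multi:softmax would return them (floats), mapped back
y_pred_enc = np.array([0.0, 2.0, 1.0]).astype(int)
print(classes[y_pred_enc])  # → [1 3 2]
```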

```python
#!/usr/bin/python
# -*- coding:utf-8 -*-

import xgboost as xgb
import numpy as np
import scipy.sparse
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score


def read_data(path):
    """Read a libsvm-format file ('label index:value ...') into a dense matrix."""
    y = []
    row = []
    col = []
    values = []
    r = 0  # index of the current row
    with open(path) as f:
        for d in f:
            d = d.strip().split()  # split on whitespace
            y.append(int(d[0]))
            d = d[1:]
            for c in d:
                key, value = c.split(':')
                row.append(r)
                col.append(int(key))
                values.append(float(value))
            r += 1
    x = scipy.sparse.csr_matrix((values, (row, col))).toarray()
    y = np.array(y)
    return x, y


if __name__ == '__main__':
    x, y = read_data('agaricus_train.txt')
    x_train, x_test, y_train, y_test = train_test_split(x, y, random_state=1, train_size=0.6)

    # Logistic regression
    lr = LogisticRegression(penalty='l2')
    lr.fit(x_train, y_train.ravel())
    y_hat = lr.predict(x_test)
    print('Logistic regression accuracy:', accuracy_score(y_test, y_hat))

    # XGBoost
    data_train = xgb.DMatrix(x_train, label=y_train)
    data_test = xgb.DMatrix(x_test, label=y_test)
    watch_list = [(data_test, 'eval'), (data_train, 'train')]
    # The labels are only 0/1: num_class=3 still runs, though 'binary:logistic' would fit more naturally
    param = {'max_depth': 3, 'eta': 1, 'verbosity': 1, 'objective': 'multi:softmax', 'num_class': 3}
    bst = xgb.train(param, data_train, num_boost_round=4, evals=watch_list)
    y_hat = bst.predict(data_test)
    print('XGBoost accuracy:', accuracy_score(y_test, y_hat))
```

Output:

```
Logistic regression accuracy: 1.0
[0] eval-merror:0.03569 train-merror:0.04070
[1] eval-merror:0.00729 train-merror:0.00998
[2] eval-merror:0.00077 train-merror:0.00051
[3] eval-merror:0.00077 train-merror:0.00051
XGBoost accuracy: 0.9992325402916347
```
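`read_data` lets `scipy.sparse.csr_matrix` infer the matrix shape from the largest row and column index it encounters. That is harmless here, since one file is parsed and then split, but if a training file and a test file were parsed separately, a file that never mentions the highest feature index would come out with fewer columns than the other. Passing `shape=` fixes the column count. A minimal sketch with two made-up libsvm lines; `parse_libsvm` and `n_features` are hypothetical names.

```python
import numpy as np
import scipy.sparse


def parse_libsvm(lines, n_features=None):
    """Parse 'label idx:value ...' lines into a CSR matrix and a label array."""
    labels, rows, cols, vals = [], [], [], []
    for r, line in enumerate(lines):
        parts = line.split()
        labels.append(int(parts[0]))
        for item in parts[1:]:
            k, v = item.split(':')
            rows.append(r)
            cols.append(int(k))
            vals.append(float(v))
    # With shape=None, scipy infers the shape from the largest indices seen
    shape = None if n_features is None else (len(lines), n_features)
    x = scipy.sparse.csr_matrix((vals, (rows, cols)), shape=shape)
    return x, np.array(labels)


lines = ["1 3:1 10:1", "0 2:1 5:1"]
x_auto, _ = parse_libsvm(lines)
x_fixed, y = parse_libsvm(lines, n_features=16)   # must exceed the largest index in any file
print(x_auto.shape, x_fixed.shape)  # → (2, 11) (2, 16)
```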

```python
#!/usr/bin/python
# -*- encoding:utf-8 -*-

import xgboost as xgb
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.ensemble import RandomForestRegressor
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
import pandas as pd
import csv


def show_accuracy(a, b, tip):
    acc = a.ravel() == b.ravel()
    acc_rate = 100 * float(acc.sum()) / a.size
    print('%s accuracy: %.3f%%' % (tip, acc_rate))
    return acc_rate


def load_data(file_name, is_train):
    data = pd.read_csv(file_name)  # path to the data file
    # print('data.describe() = \n', data.describe())

    # Sex
    data['Sex'] = data['Sex'].map({'female': 0, 'male': 1}).astype(int)

    # Fill missing fares with the median fare of the same passenger class
    if len(data.Fare[data.Fare.isnull()]) > 0:
        fare = np.zeros(3)
        for f in range(0, 3):
            fare[f] = data[data.Pclass == f + 1]['Fare'].dropna().median()
        for f in range(0, 3):  # loop 0 to 2
            data.loc[(data.Fare.isnull()) & (data.Pclass == f + 1), 'Fare'] = fare[f]

    # Age: replace missing values with the mean
    # mean_age = data['Age'].dropna().mean()
    # data.loc[(data.Age.isnull()), 'Age'] = mean_age
    if is_train:
        # Age: predict missing values with a random forest
        print('Random forest imputing missing ages: --start--')
        data_for_age = data[['Age', 'Survived', 'Fare', 'Parch', 'SibSp', 'Pclass']]
        age_exist = data_for_age.loc[(data.Age.notnull())]  # rows with a known age
        age_null = data_for_age.loc[(data.Age.isnull())]
        # print(age_exist)
        x = age_exist.values[:, 1:]
        y = age_exist.values[:, 0]
        rfr = RandomForestRegressor(n_estimators=1000)
        rfr.fit(x, y)
        age_hat = rfr.predict(age_null.values[:, 1:])
        # print(age_hat)
        data.loc[(data.Age.isnull()), 'Age'] = age_hat
        print('Random forest imputing missing ages: --over--')
    else:
        print('Random forest imputing missing ages 2: --start--')
        data_for_age = data[['Age', 'Fare', 'Parch', 'SibSp', 'Pclass']]
        age_exist = data_for_age.loc[(data.Age.notnull())]  # rows with a known age
        age_null = data_for_age.loc[(data.Age.isnull())]
        # print(age_exist)
        x = age_exist.values[:, 1:]
        y = age_exist.values[:, 0]
        rfr = RandomForestRegressor(n_estimators=1000)
        rfr.fit(x, y)
        age_hat = rfr.predict(age_null.values[:, 1:])
        # print(age_hat)
        data.loc[(data.Age.isnull()), 'Age'] = age_hat
        print('Random forest imputing missing ages 2: --over--')

    # Port of embarkation
    data.loc[(data.Embarked.isnull()), 'Embarked'] = 'S'  # fill missing ports with 'S'
    # data['Embarked'] = data['Embarked'].map({'S': 0, 'C': 1, 'Q': 2, 'U': 0}).astype(int)
    # print(data['Embarked'])
    embarked_data = pd.get_dummies(data.Embarked)
    print(embarked_data)
    # embarked_data = embarked_data.rename(columns={'S': 'Southampton', 'C': 'Cherbourg', 'Q': 'Queenstown', 'U': 'UnknownCity'})
    embarked_data = embarked_data.rename(columns=lambda x: 'Embarked_' + str(x))
    data = pd.concat([data, embarked_data], axis=1)
    print(data.describe())
    data.to_csv('New_Data.csv')

    x = data[['Pclass', 'Sex', 'Age', 'SibSp', 'Parch', 'Fare', 'Embarked_C', 'Embarked_Q', 'Embarked_S']]
    # x = data[['Pclass', 'Sex', 'Age', 'SibSp', 'Parch', 'Fare', 'Embarked']]
    y = None
    if 'Survived' in data:
        y = data['Survived']
    x = np.array(x, dtype=float)  # dummy columns may be bool in newer pandas; force a numeric array
    y = np.array(y)

    # Question to consider: what does this actually do?
    x = np.tile(x, (5, 1))
    y = np.tile(y, (5, ))
    if is_train:
        return x, y
    return x, data['PassengerId']


def write_result(c, c_type):
    file_name = 'Titanic.test.csv'
    x, passenger_id = load_data(file_name, False)
    if c_type == 3:  # the XGBoost booster needs a DMatrix
        x = xgb.DMatrix(x)
    y = c.predict(x)
    y[y > 0.5] = 1
    y[~(y > 0.5)] = 0
    # In Python 3, csv.writer needs a text-mode file opened with newline=''
    with open("Prediction_%d.csv" % c_type, "w", newline="") as predictions_file:
        open_file_object = csv.writer(predictions_file)
        open_file_object.writerow(["PassengerId", "Survived"])
        open_file_object.writerows(zip(passenger_id, y.astype(int)))


if __name__ == "__main__":
    x, y = load_data('Titanic.train.csv', True)
    x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.25, random_state=1)

    # Logistic regression
    lr = LogisticRegression(penalty='l2')
    lr.fit(x_train, y_train)
    y_hat = lr.predict(x_test)
    lr_acc = accuracy_score(y_test, y_hat)
    # write_result(lr, 1)

    # Random forest
    rfc = RandomForestClassifier(n_estimators=100)
    rfc.fit(x_train, y_train)
    y_hat = rfc.predict(x_test)
    rfc_acc = accuracy_score(y_test, y_hat)
    # write_result(rfc, 2)

    # XGBoost
    data_train = xgb.DMatrix(x_train, label=y_train)
    data_test = xgb.DMatrix(x_test, label=y_test)
    watch_list = [(data_test, 'eval'), (data_train, 'train')]
    param = {'max_depth': 6, 'eta': 0.8, 'verbosity': 0, 'objective': 'binary:logistic'}
    # 'subsample': 1, 'alpha': 0, 'lambda': 0, 'min_child_weight': 1}
    bst = xgb.train(param, data_train, num_boost_round=100, evals=watch_list)
    y_hat = bst.predict(data_test)
    # write_result(bst, 3)
    y_hat[y_hat > 0.5] = 1
    y_hat[~(y_hat > 0.5)] = 0
    xgb_acc = accuracy_score(y_test, y_hat)

    # accuracy_score returns a fraction, so scale by 100 for a percentage
    print('Logistic regression: %.3f%%' % (100 * lr_acc))
    print('Random forest: %.3f%%' % (100 * rfc_acc))
    print('XGBoost: %.3f%%' % (100 * xgb_acc))
```
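The question in `load_data` asks what `np.tile(x, (5, 1))` actually does. It stacks five identical copies of every passenger, so once `train_test_split` shuffles them, nearly every test row has an exact twin in the training set, and the test accuracy rewards memorization rather than generalization. The sketch below reproduces this with made-up random rows standing in for the Titanic features and measures the share of test rows that also occur in the training set. Splitting before any duplication (or not duplicating at all) avoids the overlap.

```python
import numpy as np
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
x = rng.normal(size=(100, 3))          # 100 distinct made-up rows
y = rng.integers(0, 2, size=100)

x5 = np.tile(x, (5, 1))                # the same operation as in load_data
y5 = np.tile(y, (5, ))
x_train, x_test, _, _ = train_test_split(x5, y5, test_size=0.25, random_state=1)

# A test row "leaks" if an identical row is present in the training set
train_rows = {row.tobytes() for row in x_train}
leaked = np.mean([row.tobytes() in train_rows for row in x_test])
print("share of test rows also in train: %.2f" % leaked)
```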

Author: 不可描述的两脚兽