Andrew Ng Machine Learning Programming Exercise Solutions: ex1

How this post is organized:

Original assignment files:

Link: https://pan.baidu.com/s/1BSiu57cDfiC4grCIx7zalA Extraction code: a8sm

Solutions that run successfully:

Link: https://pan.baidu.com/s/1S3yvdSMbaKwuukyl3R6t3A Extraction code: uyhx

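Run results:

machine-learning-ex1-linear regression:

Output of the required part. I have not included the plots, since the assignment handout already shows what they should look like: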

Running warmUpExercise ...

5x5 Identity Matrix:

ans =

Diagonal Matrix

1 0 0 0 0

0 1 0 0 0

0 0 1 0 0

0 0 0 1 0

0 0 0 0 1

Program paused. Press enter to continue.

Plotting Data ...

Program paused. Press enter to continue.

Testing the cost function ...

With theta = [0 ; 0]

Cost computed = 32.072734

Expected cost value (approx) 32.07

With theta = [-1 ; 2]

Cost computed = 54.242455

Expected cost value (approx) 54.24

Program paused. Press enter to continue.

Running Gradient Descent ...

Theta found by gradient descent:

-3.630291

1.166362

Expected theta values (approx)

-3.6303

1.1664

For population = 35,000, we predict a profit of 4519.767868

For population = 70,000, we predict a profit of 45342.450129

Program paused. Press enter to continue.

Visualizing J(theta_0, theta_1) ...
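The two profit predictions in the log can be checked by hand from the printed theta. The following is a minimal sketch, assuming (as in the ex1 dataset) that population is given in units of 10,000 people and profit in units of $10,000. The variable names `predict1` and `predict2` are illustrative. Because the printed theta is rounded to six decimals, the results match the log only to within a few cents.

```octave
% theta copied from the gradient descent output above (rounded to 6 decimals)
theta = [-3.630291; 1.166362];

% Population is expressed in units of 10,000, so 35,000 -> 3.5 and 70,000 -> 7.
% The leading 1 multiplies the intercept term theta(1).
predict1 = [1, 3.5] * theta;
predict2 = [1, 7.0] * theta;

% Profit is in units of $10,000, hence the factor of 10000.
fprintf('For population = 35,000, predicted profit: %f\n', predict1 * 10000);
fprintf('For population = 70,000, predicted profit: %f\n', predict2 * 10000);
```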

Optional Exercises:

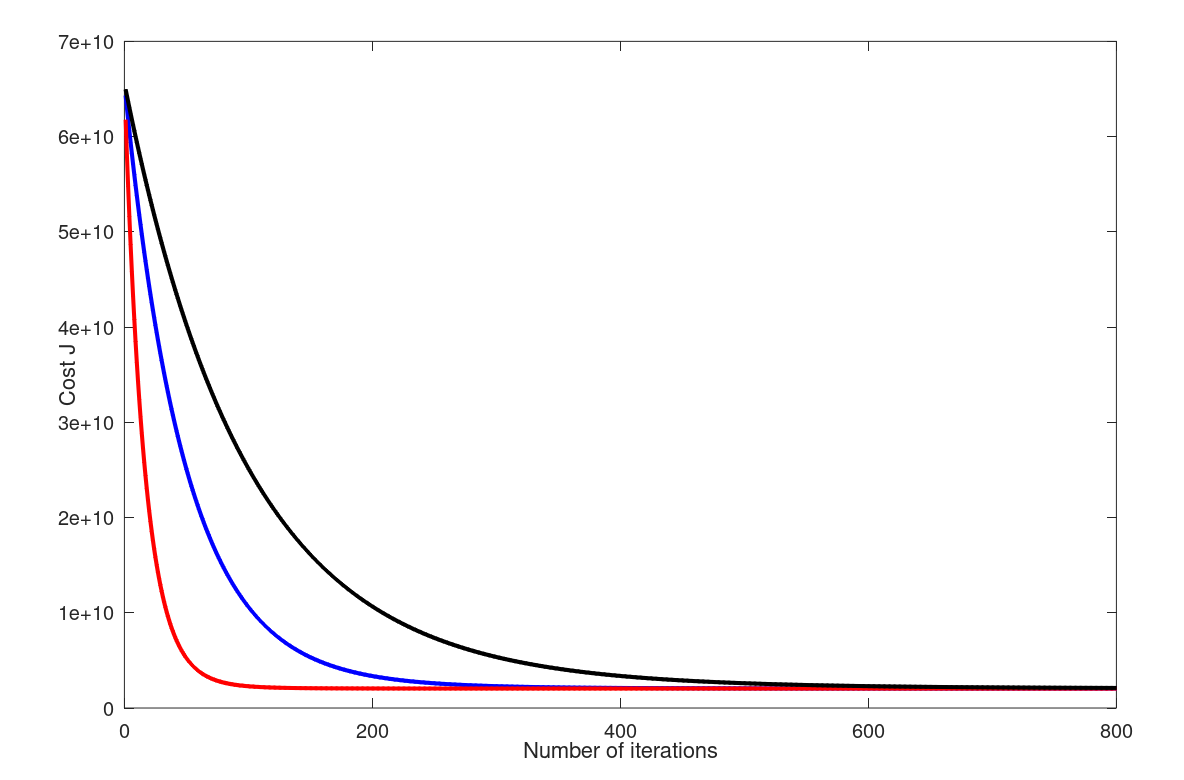

Here I modified the number of iterations and the learning rate alpha in the source code of ex1_multi.m, as follows:

alpha = 0.01;

num_iters = 800;

% Init Theta and Run Gradient Descent

theta = zeros(3, 1);

[theta, J_history] = gradientDescentMulti(X, y, theta, alpha, num_iters);

theta = zeros(3, 1);

[theta, J_history1] = gradientDescentMulti(X, y, theta, 0.03, num_iters);

theta = zeros(3, 1);

[theta, J_history2] = gradientDescentMulti(X, y, theta, 0.005, num_iters);

Because each call overwrites theta, the Theta in the output below is the fit obtained by the last call, with alpha = 0.005:
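To compare learning rates without losing the earlier fits, each run can keep its own theta and cost history. The following is a minimal, self-contained sketch, not the code from my solution. It uses only the first five examples printed in the log below rather than the full dataset, inlines a plain batch gradient descent instead of calling `gradientDescentMulti`, and relies on automatic broadcasting (Octave, or MATLAB R2016b and later). The names `thetas` and `J_all` are illustrative, and the colour assignment (red, blue, black) is an assumption.

```octave
% First five examples from the ex1_multi log: [size in sq-ft, bedrooms] and price
X_raw = [2104 3; 1600 3; 2400 3; 1416 2; 3000 4];
y = [399900; 329900; 369000; 232000; 539900];
m = size(X_raw, 1);

% Feature scaling: subtract the mean and divide by the standard deviation
mu = mean(X_raw);
sigma = std(X_raw);
X = [ones(m, 1), (X_raw - mu) ./ sigma];

alphas = [0.03 0.01 0.005];
num_iters = 800;
thetas = zeros(3, numel(alphas));         % one fitted theta per learning rate
J_all = zeros(num_iters, numel(alphas));  % one cost history per learning rate

for k = 1:numel(alphas)
  theta = zeros(3, 1);                    % restart from zero for every alpha
  for it = 1:num_iters
    err = X * theta - y;
    theta = theta - (alphas(k) / m) * (X' * err);   % batch gradient step
    res = X * theta - y;
    J_all(it, k) = (res' * res) / (2 * m);          % cost after this step
  end
  thetas(:, k) = theta;                   % keep the result instead of overwriting
end

% Convergence curves, one colour per learning rate
plot(1:num_iters, J_all(:, 1), 'r', ...
     1:num_iters, J_all(:, 2), 'b', ...
     1:num_iters, J_all(:, 3), 'k');
legend('alpha = 0.03', 'alpha = 0.01', 'alpha = 0.005');
xlabel('Number of iterations');
ylabel('Cost J');
```

After the loop, `thetas(:, 1)` holds the fit for alpha = 0.03 and `thetas(:, 3)` the fit for alpha = 0.005, so either can be used for prediction without rerunning anything.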

# Output

octave:36> ex1_multi

Loading data ...

First 10 examples from the dataset:

x = [2104 3], y = 399900

x = [1600 3], y = 329900

x = [2400 3], y = 369000

x = [1416 2], y = 232000

x = [3000 4], y = 539900

x = [1985 4], y = 299900

x = [1534 3], y = 314900

x = [1427 3], y = 198999

x = [1380 3], y = 212000

x = [1494 3], y = 242500

Program paused. Press enter to continue.

Normalizing Features ...

Running gradient descent ...

Theta computed from gradient descent:

334240.028807

100065.016724

3690.356114

Predicted price of a 1650 sq-ft, 3 br house (using gradient descent):

$289258.577516

Program paused. Press enter to continue.

Solving with normal equations...

Theta computed from the normal equations:

89597.909542

139.210674

-8738.019112

Predicted price of a 1650 sq-ft, 3 br house (using normal equations):

$293081.464335
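The two predicted prices above still differ by a few thousand dollars. One likely reason is that the run with alpha = 0.005 has not converged after 800 iterations. The following sketch rests on one assumption: every feature column has zero mean after normalization. In that case the intercept update decouples from the other parameters, and starting from zero it reaches a fraction 1 - (1 - alpha)^num_iters of its converged value. The name `frac` is illustrative.

```octave
alphas = [0.03 0.01 0.005];   % learning rates used in the modified ex1_multi.m
num_iters = 800;

% Fraction of the converged intercept reached after num_iters steps,
% assuming theta starts at zero and every feature column has zero mean.
frac = 1 - (1 - alphas) .^ num_iters;

for k = 1:numel(alphas)
  fprintf('alpha = %.3f: intercept at %.4f%% of its converged value\n', ...
          alphas(k), 100 * frac(k));
end
```

For alpha = 0.005 the intercept is only at roughly 98% of its converged value, while for the two larger learning rates it is essentially converged. Using the theta from a larger alpha, or running more iterations, should bring the gradient descent price closer to the normal equations price.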

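In the convergence plot above, the red, blue, and black curves correspond to learning rates alpha = 0.03, 0.01, and 0.005, respectively.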

Postscript:

In the first version of my code, the gradient descent and normal equations results differed considerably. The price calculation I used for gradient descent at the time was:

% Estimate the price of a 1650 sq-ft, 3 br house

% ====================== YOUR CODE HERE ======================

% Recall that the first column of X is all-ones. Thus, it does

% not need to be normalized.

price = 0; % You should change this

price = [1 1650 3]*theta; % first version: uses the raw, unscaled features

% ============================================================

fprintf(['Predicted price of a 1650 sq-ft, 3 br house ' ...

'(using gradient descent):\n $%f\n'], price);

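The assignment instructions actually warn about this. Because theta was fitted on the feature-scaled X, any new x we want a prediction for must be scaled in the same way, using the same mu and sigma: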

% Estimate the price of a 1650 sq-ft, 3 br house

% ====================== YOUR CODE HERE ======================

% Recall that the first column of X is all-ones. Thus, it does

% not need to be normalized.

price = 0; % You should change this

xt = ([1650 3] - mu) ./ sigma; % apply the same feature scaling to the new x

price = [1, xt] * theta;
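The effect of skipping the scaling step can be seen on a small example. The following is a minimal sketch, not the assignment solution. It uses only the first five examples printed in the ex1_multi log, fits theta with the normal equations on the scaled features (a stand-in for gradient descent, to keep the example short), and relies on automatic broadcasting (Octave, or MATLAB R2016b and later). The names `price_wrong`, `price_right`, and `price_ref` are illustrative.

```octave
% First five examples from the ex1_multi log: [size in sq-ft, bedrooms] and price
X_raw = [2104 3; 1600 3; 2400 3; 1416 2; 3000 4];
y = [399900; 329900; 369000; 232000; 539900];
m = size(X_raw, 1);

% Scale the features and remember mu and sigma for later predictions
mu = mean(X_raw);
sigma = std(X_raw);
X = [ones(m, 1), (X_raw - mu) ./ sigma];

% Fit theta on the SCALED features (normal equations used here for brevity)
theta = pinv(X' * X) * X' * y;

x_new = [1650 3];

% Wrong: raw features fed to a theta that was fitted on scaled features
price_wrong = [1, x_new] * theta;

% Right: scale the new example with the same mu and sigma first
price_right = [1, (x_new - mu) ./ sigma] * theta;

% Reference: fit and predict entirely in raw units
Xr = [ones(m, 1), X_raw];
theta_raw = pinv(Xr' * Xr) * Xr' * y;
price_ref = [1, x_new] * theta_raw;

fprintf('unscaled input: %f\n', price_wrong);
fprintf('scaled input:   %f\n', price_right);
fprintf('raw-unit fit:   %f\n', price_ref);
```

The scaled prediction agrees with the fit done in raw units, while the unscaled input gives a price that is too large by orders of magnitude. That is the same kind of discrepancy I saw in my first version.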

Author: XIAXIAgo
