[Integrating Kettle with CDH 6.1] Browsing directories in Hadoop File Output fails with: java.lang.NoClassDefFoundError: com/ctc/wstx/io/SystemId

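I recently tried Kettle for the first time. Setting it up is simple: download the package and unzip it. Configuring the data sources, however, tripped me up more than once, so I'm writing it down here.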

Here are the versions of the components in my environment:

kettle: 8.0

CDH: 6.1.0

HADOOP: 3.0.0

MYSQL: 5.5.62

The error

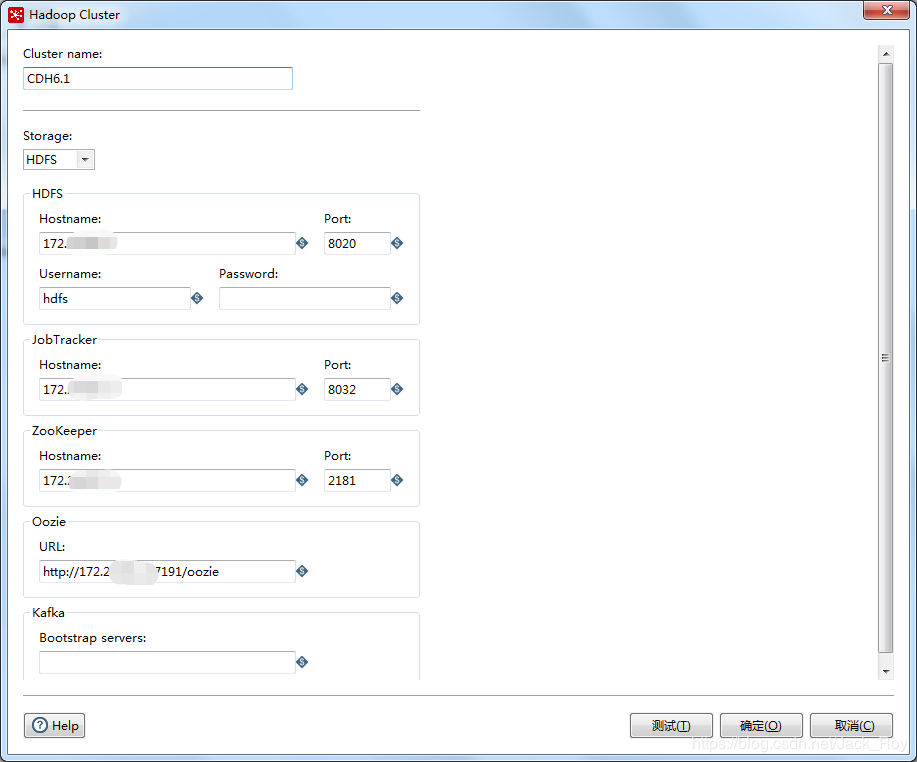

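Before this, I had already downloaded core-site.xml, hdfs-site.xml and the other required files from the CDH HDFS management page and placed them in the corresponding plugin directory. I had also copied hadoop-client-3.0.0-cdh6.1.0.jar, hadoop-common-3.0.0-cdh6.1.0.jar and other jars from the Hadoop installation into the lib folder. In short, I had followed pretty much every common tutorial online. Here is my CDH cluster configuration: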

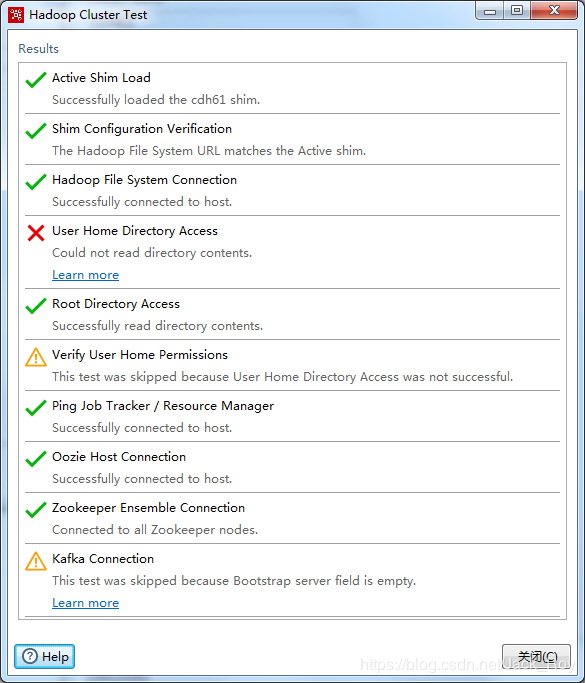

I left the HDFS user password blank because I simply don't know it (this didn't cause any problems later). Clicking Test gives:

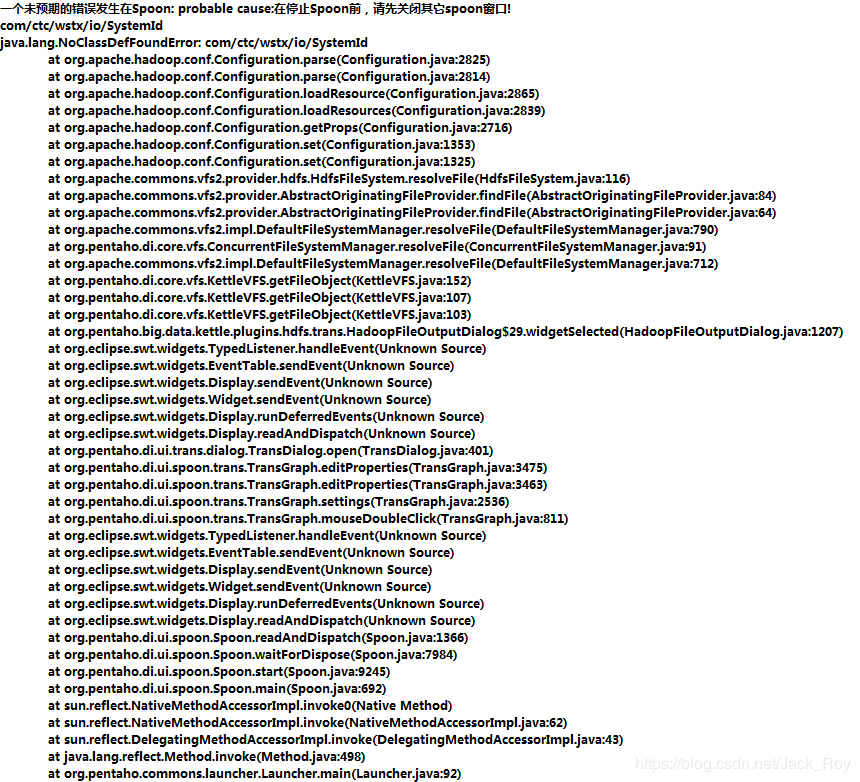
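The *-site.xml files copied into the plugin folder are ordinary Hadoop configuration XML: a `<configuration>` root holding `<property>` elements, each with a `<name>` and a `<value>`. One quick sanity check, sketched here with the JDK's built-in DOM parser rather than Hadoop's own `Configuration` class, is to read `fs.defaultFS` back out of the copied core-site.xml and compare it with the host and port entered in the cluster dialog. The class name `SiteXmlReader` and the default file path are hypothetical.

```java
import java.io.FileInputStream;
import java.io.InputStream;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

/** Hypothetical helper: reads a single property from a Hadoop *-site.xml file. */
public class SiteXmlReader {

    /** Returns the value of the first property with the given name, or null if absent. */
    public static String getProperty(InputStream siteXml, String name) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(siteXml);
            NodeList props = doc.getElementsByTagName("property");
            for (int i = 0; i < props.getLength(); i++) {
                Element prop = (Element) props.item(i);
                NodeList names = prop.getElementsByTagName("name");
                NodeList values = prop.getElementsByTagName("value");
                if (names.getLength() > 0 && name.equals(names.item(0).getTextContent().trim())) {
                    return values.getLength() > 0 ? values.item(0).getTextContent().trim() : null;
                }
            }
            return null;
        } catch (Exception e) {
            // Parser configuration, XML syntax and I/O problems all end up here
            throw new IllegalArgumentException("cannot parse site XML", e);
        }
    }

    public static void main(String[] args) throws Exception {
        // Assumed path: point it at the core-site.xml you copied into the shim folder
        String path = args.length > 0 ? args[0] : "core-site.xml";
        try (InputStream in = new FileInputStream(path)) {
            System.out.println("fs.defaultFS = " + getProperty(in, "fs.defaultFS"));
        }
    }
}
```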

Nothing looks wrong so far. But when I set up a transformation to write MySQL data to HDFS and tried to browse the HDFS directory, I got an error:

Error details:

```
Unable to open the dialog for this step

java.lang.NoClassDefFoundError: com/ctc/wstx/io/SystemId
    at org.apache.hadoop.conf.Configuration.parse(Configuration.java:2825)
    at org.apache.hadoop.conf.Configuration.parse(Configuration.java:2814)
    at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2865)
    at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2839)
    at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2716)
    at org.apache.hadoop.conf.Configuration.set(Configuration.java:1353)
    at org.apache.hadoop.conf.Configuration.set(Configuration.java:1325)
    at org.apache.commons.vfs2.provider.hdfs.HdfsFileSystem.resolveFile(HdfsFileSystem.java:116)
    at org.apache.commons.vfs2.provider.AbstractOriginatingFileProvider.findFile(AbstractOriginatingFileProvider.java:84)
    at org.apache.commons.vfs2.provider.AbstractOriginatingFileProvider.findFile(AbstractOriginatingFileProvider.java:64)
    at org.apache.commons.vfs2.impl.DefaultFileSystemManager.resolveFile(DefaultFileSystemManager.java:790)
    at org.pentaho.di.core.vfs.ConcurrentFileSystemManager.resolveFile(ConcurrentFileSystemManager.java:91)
    at org.apache.commons.vfs2.impl.DefaultFileSystemManager.resolveFile(DefaultFileSystemManager.java:712)
    at org.pentaho.di.core.vfs.KettleVFS.getFileObject(KettleVFS.java:152)
    at org.pentaho.di.core.vfs.KettleVFS.getFileObject(KettleVFS.java:107)
    at org.pentaho.di.core.vfs.KettleVFS.getFileObject(KettleVFS.java:103)
    at org.pentaho.big.data.kettle.plugins.hdfs.trans.HadoopFileOutputDialog$29.widgetSelected(HadoopFileOutputDialog.java:1207)
    at org.eclipse.swt.widgets.TypedListener.handleEvent(Unknown Source)
    at org.eclipse.swt.widgets.EventTable.sendEvent(Unknown Source)
    at org.eclipse.swt.widgets.Display.sendEvent(Unknown Source)
    at org.eclipse.swt.widgets.Widget.sendEvent(Unknown Source)
    at org.eclipse.swt.widgets.Display.runDeferredEvents(Unknown Source)
    at org.eclipse.swt.widgets.Display.readAndDispatch(Unknown Source)
    at org.pentaho.big.data.kettle.plugins.hdfs.trans.HadoopFileOutputDialog.open(HadoopFileOutputDialog.java:1316)
    at org.pentaho.di.ui.spoon.delegates.SpoonStepsDelegate.editStep(SpoonStepsDelegate.java:127)
    at org.pentaho.di.ui.spoon.Spoon.editStep(Spoon.java:8728)
    at org.pentaho.di.ui.spoon.trans.TransGraph.editStep(TransGraph.java:3214)
    at org.pentaho.di.ui.spoon.trans.TransGraph.mouseDoubleClick(TransGraph.java:780)
    at org.eclipse.swt.widgets.TypedListener.handleEvent(Unknown Source)
    at org.eclipse.swt.widgets.EventTable.sendEvent(Unknown Source)
    at org.eclipse.swt.widgets.Display.sendEvent(Unknown Source)
    at org.eclipse.swt.widgets.Widget.sendEvent(Unknown Source)
    at org.eclipse.swt.widgets.Display.runDeferredEvents(Unknown Source)
    at org.eclipse.swt.widgets.Display.readAndDispatch(Unknown Source)
    at org.pentaho.di.ui.spoon.Spoon.readAndDispatch(Spoon.java:1366)
    at org.pentaho.di.ui.spoon.Spoon.waitForDispose(Spoon.java:7984)
    at org.pentaho.di.ui.spoon.Spoon.start(Spoon.java:9245)
    at org.pentaho.di.ui.spoon.Spoon.main(Spoon.java:692)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.pentaho.commons.launcher.Launcher.main(Launcher.java:92)
Caused by: java.lang.ClassNotFoundException: com.ctc.wstx.io.SystemId
    at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
```

Analysis

At first glance, the message just says the JVM can't find a class called com.ctc.wstx.io.SystemId. Easy: go find that class and add it!
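The trace shows the failure happens inside `org.apache.hadoop.conf.Configuration.parse`, i.e. while Hadoop is parsing the *-site.xml files; Hadoop 3's `Configuration` reads them through the Woodstox StAX parser, whose classes live under `com.ctc.wstx`. Before copying jars around, a small hypothetical helper like `ClassProbe` below can report whether a class is loadable on a given classpath and which jar it was loaded from. It uses only standard reflection (`Class.forName`, `getProtectionDomain().getCodeSource()`). Keep in mind that Kettle's big-data plugin loads Hadoop classes through its own class loaders, so running this standalone (e.g. `java -cp "lib/*:." ClassProbe`, with `;` instead of `:` on Windows) only approximates what Spoon sees.

```java
import java.security.CodeSource;

/** Hypothetical diagnostic helper (not part of Kettle or Hadoop). */
public class ClassProbe {

    /** Returns where the class was loaded from, or null if it cannot be loaded at all. */
    public static String locate(String className) {
        try {
            // initialize=false: load the class without running its static initializers
            Class<?> cls = Class.forName(className, false, ClassProbe.class.getClassLoader());
            CodeSource src = cls.getProtectionDomain().getCodeSource();
            // Classes from the JDK's bootstrap loader have no CodeSource
            return src == null ? "<bootstrap/JDK>" : String.valueOf(src.getLocation());
        } catch (ClassNotFoundException | LinkageError e) {
            return null;
        }
    }

    public static void main(String[] args) {
        String[] names = args.length > 0 ? args : new String[] {"com.ctc.wstx.io.SystemId"};
        for (String name : names) {
            String where = locate(name);
            System.out.println(name + " -> " + (where == null ? "NOT FOUND" : where));
        }
    }
}
```

If the class turns up in more than one jar across the directories you care about, that is worth knowing before adding yet another copy.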

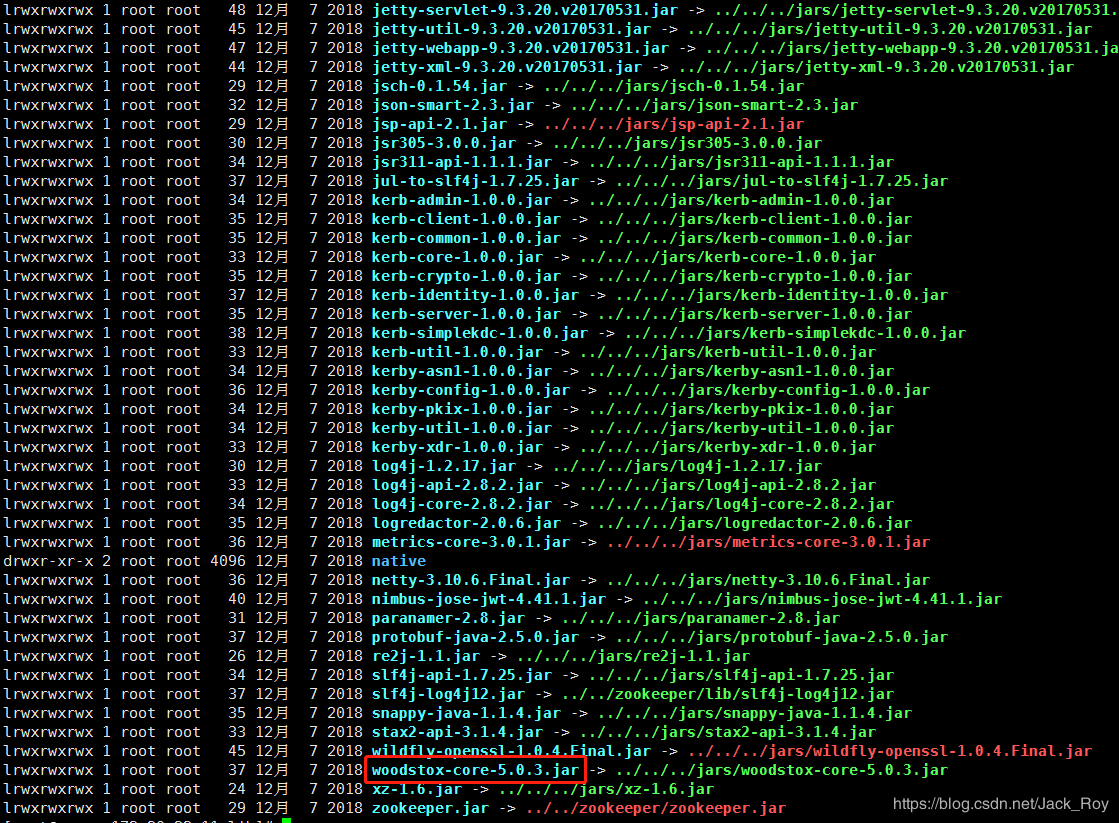

So I looked in the Hadoop lib directory on the node running the NameNode, and sure enough there was a woodstox-core-5.0.3.jar:

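Without a second thought, I copied it into Kettle's lib directory, restarted Kettle and tried to browse the HDFS directory again. This time I got a new error: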

Error details:

```
Unable to open the dialog for this step

java.lang.NoSuchMethodError: com.ctc.wstx.io.StreamBootstrapper.getInstance(Ljava/lang/String;Lcom/ctc/wstx/io/SystemId;Ljava/io/InputStream;)Lcom/ctc/wstx/io/StreamBootstrapper;
    at org.apache.hadoop.conf.Configuration.parse(Configuration.java:2831)
    at org.apache.hadoop.conf.Configuration.parse(Configuration.java:2814)
    at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2865)
    at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2839)
    at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2716)
    at org.apache.hadoop.conf.Configuration.set(Configuration.java:1353)
    at org.apache.hadoop.conf.Configuration.set(Configuration.java:1325)
    at org.apache.commons.vfs2.provider.hdfs.HdfsFileSystem.resolveFile(HdfsFileSystem.java:116)
    at org.apache.commons.vfs2.provider.AbstractOriginatingFileProvider.findFile(AbstractOriginatingFileProvider.java:84)
    at org.apache.commons.vfs2.provider.AbstractOriginatingFileProvider.findFile(AbstractOriginatingFileProvider.java:64)
    at org.apache.commons.vfs2.impl.DefaultFileSystemManager.resolveFile(DefaultFileSystemManager.java:790)
    at org.pentaho.di.core.vfs.ConcurrentFileSystemManager.resolveFile(ConcurrentFileSystemManager.java:91)
    at org.apache.commons.vfs2.impl.DefaultFileSystemManager.resolveFile(DefaultFileSystemManager.java:712)
    at org.pentaho.di.core.vfs.KettleVFS.getFileObject(KettleVFS.java:152)
    at org.pentaho.di.core.vfs.KettleVFS.getFileObject(KettleVFS.java:107)
    at org.pentaho.di.core.vfs.KettleVFS.getFileObject(KettleVFS.java:103)
    at org.pentaho.big.data.kettle.plugins.hdfs.trans.HadoopFileOutputDialog$29.widgetSelected(HadoopFileOutputDialog.java:1207)
    at org.eclipse.swt.widgets.TypedListener.handleEvent(Unknown Source)
    at org.eclipse.swt.widgets.EventTable.sendEvent(Unknown Source)
    at org.eclipse.swt.widgets.Display.sendEvent(Unknown Source)
    at org.eclipse.swt.widgets.Widget.sendEvent(Unknown Source)
    at org.eclipse.swt.widgets.Display.runDeferredEvents(Unknown Source)
    at org.eclipse.swt.widgets.Display.readAndDispatch(Unknown Source)
    at org.pentaho.big.data.kettle.plugins.hdfs.trans.HadoopFileOutputDialog.open(HadoopFileOutputDialog.java:1316)
    at org.pentaho.di.ui.spoon.delegates.SpoonStepsDelegate.editStep(SpoonStepsDelegate.java:127)
    at org.pentaho.di.ui.spoon.Spoon.editStep(Spoon.java:8728)
    at org.pentaho.di.ui.spoon.trans.TransGraph.editStep(TransGraph.java:3214)
    at org.pentaho.di.ui.spoon.trans.TransGraph.mouseDoubleClick(TransGraph.java:780)
    at org.eclipse.swt.widgets.TypedListener.handleEvent(Unknown Source)
    at org.eclipse.swt.widgets.EventTable.sendEvent(Unknown Source)
    at org.eclipse.swt.widgets.Display.sendEvent(Unknown Source)
    at org.eclipse.swt.widgets.Widget.sendEvent(Unknown Source)
    at org.eclipse.swt.widgets.Display.runDeferredEvents(Unknown Source)
    at org.eclipse.swt.widgets.Display.readAndDispatch(Unknown Source)
    at org.pentaho.di.ui.spoon.Spoon.readAndDispatch(Spoon.java:1366)
    at org.pentaho.di.ui.spoon.Spoon.waitForDispose(Spoon.java:7984)
    at org.pentaho.di.ui.spoon.Spoon.start(Spoon.java:9245)
    at org.pentaho.di.ui.spoon.Spoon.main(Spoon.java:692)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.pentaho.commons.launcher.Launcher.main(Launcher.java:92)
```

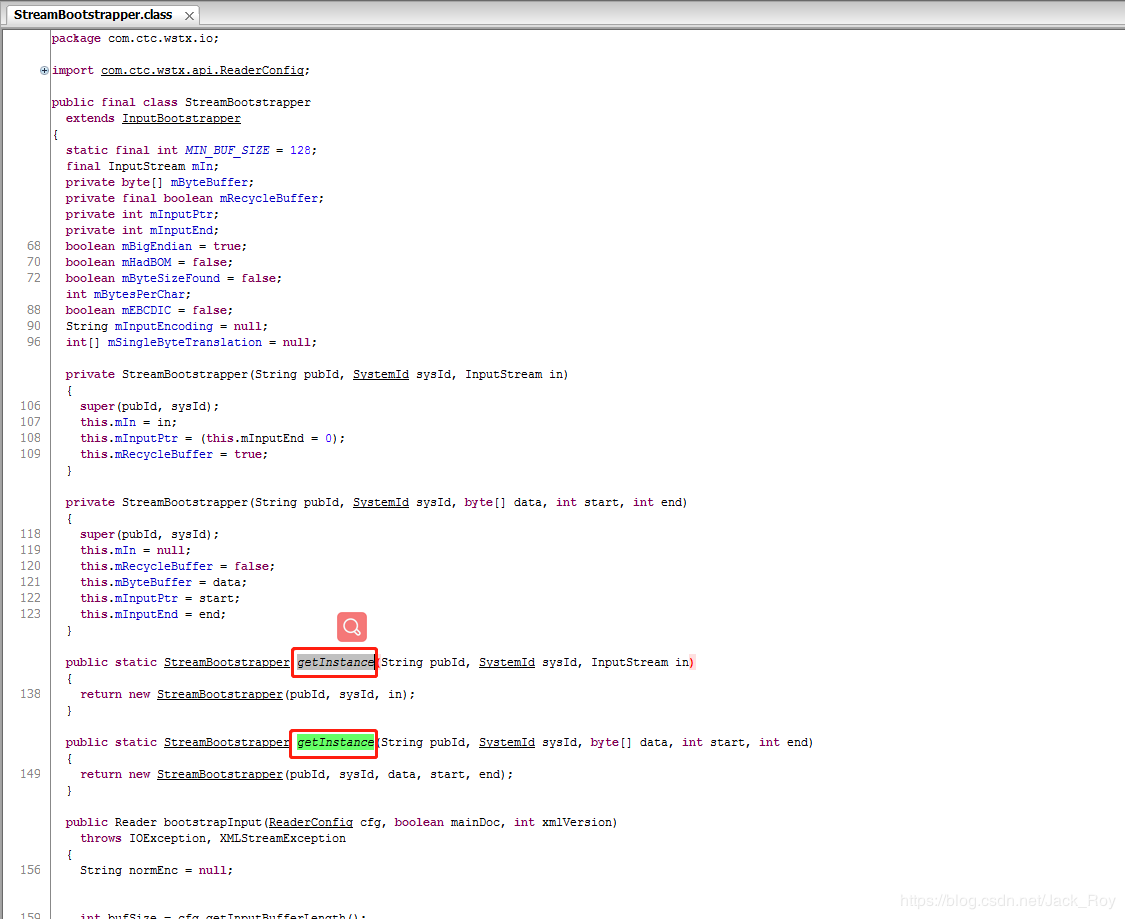

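This time the complaint is not that the class com.ctc.wstx.io.StreamBootstrapper is missing, but that its getInstance method with the signature shown in the error cannot be found. I opened the jar in a decompiler to take a look: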

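What the hell? Every method that should be there is there! So the problem is clearly not as simple as a missing jar or a jar conflict. Once I calmed down (in reality I was panicking like crazy: Chinese New Year was around the corner and I wanted to get out early and have some fun), it started to look like a compatibility problem between the data source version and Kettle.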
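A decompiler only shows the jar you opened, whereas `NoSuchMethodError` is raised against whichever copy of `StreamBootstrapper` the JVM actually loaded. One plausible explanation, which I have not verified against this Kettle 8.0 install, is that an older Woodstox jar already on Kettle's classpath supplies `StreamBootstrapper`, while `SystemId` (as far as I know only present in Woodstox 5.x) now comes from the newly added woodstox-core-5.0.3.jar, so the two classes come from mismatched versions. The hypothetical helper `MethodProbe` below uses plain reflection to check whether the loaded class declares the exact parameter list named in the error message; as with any standalone check, Kettle's plugin class loaders may resolve classes differently.

```java
/** Hypothetical helper: does the class the JVM loads declare a given method signature? */
public class MethodProbe {

    /**
     * Returns true if className declares methodName with exactly these parameter types.
     * Parameter types are fully qualified class names (primitives are not supported here).
     * Note: getDeclaredMethod matches name and parameter types only, not the return type.
     */
    public static boolean hasMethod(String className, String methodName, String... paramTypeNames) {
        try {
            ClassLoader loader = MethodProbe.class.getClassLoader();
            Class<?> cls = Class.forName(className, false, loader);
            Class<?>[] params = new Class<?>[paramTypeNames.length];
            for (int i = 0; i < params.length; i++) {
                params[i] = Class.forName(paramTypeNames[i], false, loader);
            }
            cls.getDeclaredMethod(methodName, params);
            return true;
        } catch (ClassNotFoundException | NoSuchMethodException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Signature taken from the NoSuchMethodError message above
        boolean found = hasMethod("com.ctc.wstx.io.StreamBootstrapper", "getInstance",
                "java.lang.String", "com.ctc.wstx.io.SystemId", "java.io.InputStream");
        System.out.println("StreamBootstrapper.getInstance(String, SystemId, InputStream) present: " + found);
    }
}
```

If this prints `false` on the same classpath where the decompiled jar clearly has the method, the class is almost certainly being loaded from a different jar.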

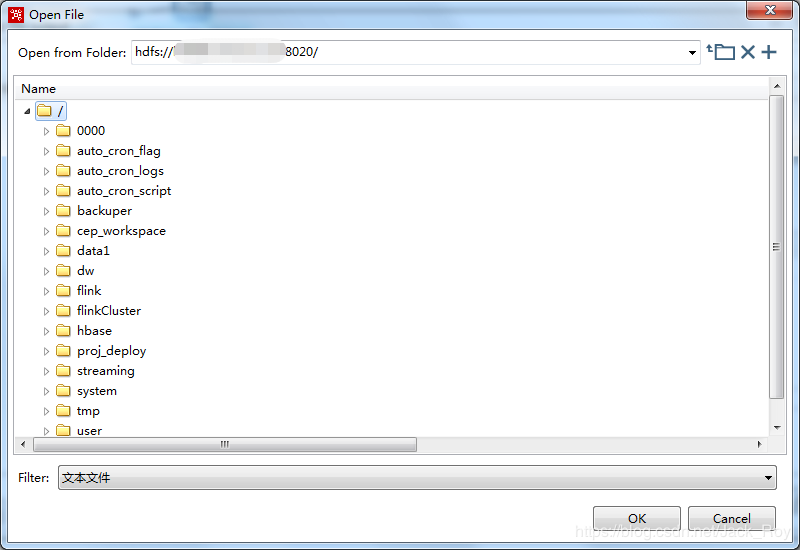

With this new line of thought and some more digging, I finally solved this thorny problem by switching to a different Kettle version. The result:

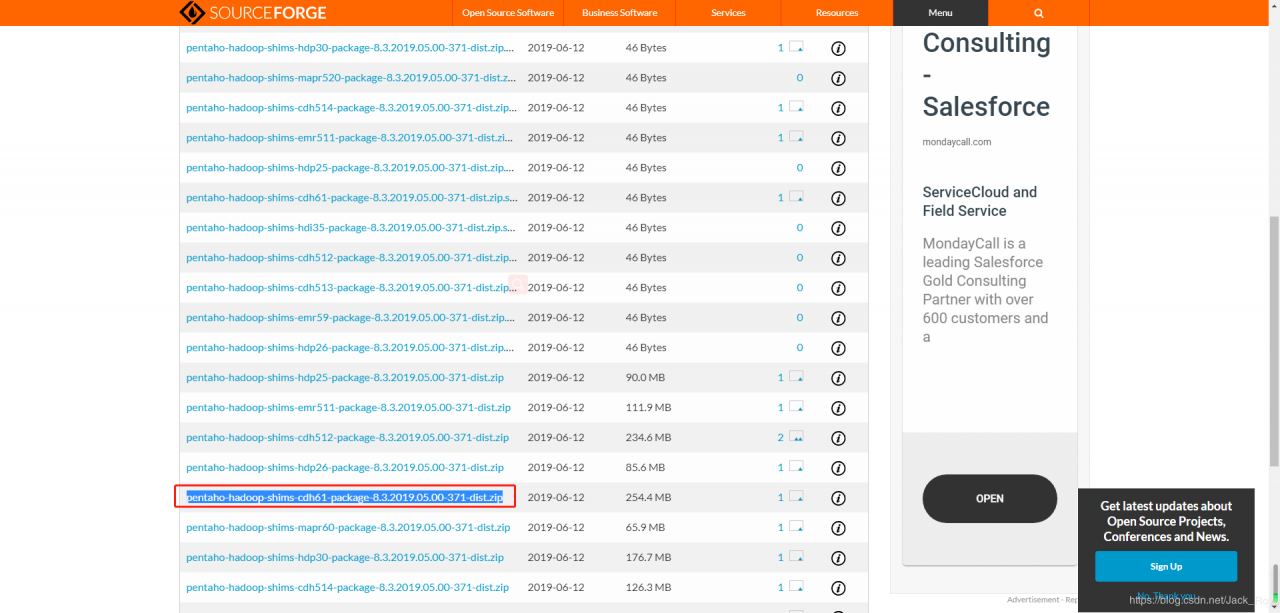

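Whether you use Kettle 7.x or 8.0, the `\data-integration\plugins\pentaho-big-data-plugin\hadoop-configurations` directory only contains support for `hadoop2.*` and `cdh5.*`. To support CDH 6.1 you therefore have to either track down a plugin yourself or move to a newer Kettle release. Here is the Kettle download link: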
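To check which shims an existing install actually ships, it is enough to list the sub-directories of that folder. The sketch below does exactly that; the class name `ShimLister`, the default relative path (which assumes you run it from the directory containing `data-integration`) and the assumption that CDH shim folders start with `cdh` are mine, not anything Kettle defines.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: lists the Hadoop shim folders bundled with a Kettle (PDI) install. */
public class ShimLister {

    /** Returns the sorted sub-directory names, or an empty list if the path is not a directory. */
    public static List<String> listShims(File hadoopConfigurationsDir) {
        List<String> names = new ArrayList<>();
        File[] children = hadoopConfigurationsDir.listFiles(File::isDirectory);
        if (children == null) {
            return names; // path does not exist or is not a directory
        }
        for (File child : children) {
            names.add(child.getName());
        }
        names.sort(null); // natural (alphabetical) order
        return names;
    }

    public static void main(String[] args) {
        // Assumed default location; pass the real path as the first argument to override it
        String path = args.length > 0 ? args[0]
                : "data-integration/plugins/pentaho-big-data-plugin/hadoop-configurations";
        List<String> shims = listShims(new File(path));
        System.out.println("Shims found: " + shims);
        // Assumes CDH shim folder names start with "cdh" followed by the version digits
        boolean hasCdh6 = shims.stream().anyMatch(name -> name.startsWith("cdh6"));
        System.out.println("CDH 6.x shim present: " + hasCdh6);
    }
}
```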

https://sourceforge.net/projects/pentaho/files/Pentaho%208.3/client-tools/

Scroll to the bottom of that page and download pdi-ce-8.3.0.0-371.zip.

Download link for the CDH 6.1 / Hadoop 3.0 shim:

https://sourceforge.net/projects/pentaho/files/Pentaho%208.3/shims/

Scroll down, find pentaho-hadoop-shims-cdh61-package-8.3.2019.05.00-371-dist.zip and click it to download.

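As this example shows, picking the wrong versions leads to endless trouble. When choosing components, make sure they are actually compatible with one another!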

Author: Jack_Roy
