Hyperledger Fabric Development in Practice: Kafka Cluster Deployment

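As earlier chapters explained, the first step in setting up a Fabric network is to generate certificates and related files, and that generation is driven by the configtx.yaml and crypto-config.yaml configuration files.

In a Fabric network that uses Kafka as its orderer type, configtx.yaml and crypto-config.yaml remain just as important, but the sample configuration differs somewhat from what was shown earlier.

This chapter deploys Fabric on a Kafka cluster. The key concepts covered here summarize the previous three chapters and lay the groundwork for this chapter and for the later chapters on smart contracts and CouchDB.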

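5.1 The Fabric Ledger

The ledger is the ordered, tamper-resistant record of all state changes. State changes are the result of chaincode (smart contract) invocations, called transactions, submitted by participating parties. Each transaction produces a set of asset key-value pairs that are committed to the ledger as creates, updates, or deletes.

The ledger consists of a blockchain, which stores the ordered, immutable records in blocks, and a state database, which holds the current state. There is one ledger per channel, and each peer maintains a local copy of the ledger for every channel it is a member of.

Chain

The chain is a transaction log, structured as hash-linked blocks, where each block contains a sequence of N transactions.

The block header contains a hash of the block's transactions and a hash of the previous block's header. In this way all transactions on the ledger are sequenced and cryptographically linked together, so ledger data cannot be tampered with without breaking the hash links. The hash of the latest block represents every transaction that came before it, which makes it possible to ensure that all peers are in a consistent and trusted state.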
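The hash linking described above can be illustrated with a small sketch. It is not Fabric's actual block format (Fabric serializes blocks with protobuf); the JSON encoding, the field names `data_hash` and `previous_hash`, and the helper functions are assumptions made for illustration only.

```python
import hashlib
import json


def block_hash(header: dict) -> str:
    """Hash a block header deterministically (sorted keys, UTF-8 JSON)."""
    encoded = json.dumps(header, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def data_hash(transactions: list) -> str:
    """Hash the ordered list of transactions carried by a block."""
    return hashlib.sha256(json.dumps(transactions).encode("utf-8")).hexdigest()


def make_block(transactions: list, previous_hash: str) -> dict:
    """Build a block whose header commits to its transactions and its predecessor."""
    header = {"data_hash": data_hash(transactions), "previous_hash": previous_hash}
    return {"header": header, "transactions": transactions}


def verify_chain(chain: list) -> bool:
    """Check each block's transaction hash and its link to the previous header."""
    for index, block in enumerate(chain):
        if block["header"]["data_hash"] != data_hash(block["transactions"]):
            return False
        if index > 0:
            expected = block_hash(chain[index - 1]["header"])
            if block["header"]["previous_hash"] != expected:
                return False
    return True


# Hypothetical two-block chain for the radish market used later in this chapter.
genesis = make_block(["create radish=10"], previous_hash="")
second = make_block(["update radish=9"], previous_hash=block_hash(genesis["header"]))
chain = [genesis, second]
```

Changing any transaction in an earlier block changes its data hash, and recomputing that hash changes the header hash that the next block points to, so `verify_chain` fails either way.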

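The chain is stored on the peer's file system (local or attached storage), which efficiently supports the append-only nature of the blockchain workload.

State Database

The ledger's current state data represents the latest values of all keys ever included in the chain's transaction log.

Because the current state represents all the latest key values known to the channel, it is sometimes referred to as the "world state".

Chaincode invocations execute transactions against the current state data. To make these chaincode interactions efficient, the latest values of all keys are stored in a state database. The state database is simply an indexed view into the chain's transaction log, so it can be regenerated from the chain at any time. It is recovered automatically, or generated if needed, before transactions are accepted.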
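Because the state database is only a view of the transaction log, it can be rebuilt by replaying the log. The following is a minimal sketch of that idea; the transaction shape (a `valid` flag and a `writes` mapping in which `None` means delete) is an assumption for illustration, not Fabric's data model.

```python
def rebuild_world_state(chain):
    """Replay every valid transaction in block order to recover the latest key values."""
    state = {}
    for block in chain:
        for tx in block:
            if not tx["valid"]:
                continue  # invalid transactions stay in the log but do not change state
            for key, value in tx["writes"].items():
                if value is None:
                    state.pop(key, None)  # a None value models a delete
                else:
                    state[key] = value
    return state


# Hypothetical log: three blocks, one of which contains an invalid transaction.
log = [
    [{"valid": True, "writes": {"radish": 10}}],
    [
        {"valid": False, "writes": {"radish": 99}},
        {"valid": True, "writes": {"radish": 9, "price": 3}},
    ],
    [{"valid": True, "writes": {"price": None}}],
]
world_state = rebuild_world_state(log)
```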

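Two state databases are available: LevelDB and CouchDB. LevelDB is the default state database, embedded in the peer process, and stores chaincode data as key-value pairs. CouchDB is an optional external state database; when chaincode data is modeled as JSON, it provides additional query support, allowing rich queries against the JSON content.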

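Transaction Flow

At a high level, the transaction flow begins with an application client sending a transaction proposal to the designated endorsing peers.

An endorsing peer verifies the client's signature and executes a chaincode function to simulate the transaction. The output is the chaincode result: the set of key/value versions read by the chaincode (the read set) and the set of keys/values written by the chaincode (the write set). This simulated read-write set is returned to the client together with an endorsement signature.

The client assembles the endorsements into a transaction payload and broadcasts it to the ordering service, which orders transactions for all peers on the channel and produces blocks.

Before the transactions in a block delivered by the ordering service are committed, each peer validates them.

First, the peer checks the endorsement policy to ensure that the correct allotment of the specified peers has signed the results, and it authenticates the signatures against the transaction payload.

Second, the peer performs a version check against the transaction's read set to ensure data integrity and to guard against problems such as double-spending.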
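The version check in the second step can be sketched as follows. This is a simplified illustration, not Fabric's implementation: versions are modeled here as plain integer counters, and the transaction fields `read_set` and `write_set` are assumed names.

```python
def validate_and_commit(state, block):
    """Validate each transaction's read set against current versions, then apply its writes.

    state maps key -> (value, version). Returns one boolean per transaction.
    """
    results = []
    for tx in block:
        # A transaction is valid only if every key it read is still at the version it saw.
        fresh = all(
            state.get(key, (None, 0))[1] == version
            for key, version in tx["read_set"].items()
        )
        if fresh:
            for key, value in tx["write_set"].items():
                _, version = state.get(key, (None, 0))
                state[key] = (value, version + 1)
        results.append(fresh)
    return results


# Two transactions were both simulated against version 1 of the same key.
state = {"radish_owner": ("A", 1)}
block = [
    {"read_set": {"radish_owner": 1}, "write_set": {"radish_owner": "B"}},
    {"read_set": {"radish_owner": 1}, "write_set": {"radish_owner": "C"}},
]
results = validate_and_commit(state, block)
```

The first transaction commits and bumps the version; the second one read a version that is now stale, so it is rejected. This is how the same asset is kept from being spent twice.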

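5.2 Transaction Processing Flow

This section describes the transaction mechanics of a standard asset exchange. The scenario involves two clients, A and B, who are buying and selling radishes (the product). Each of them has a peer on the network, through which they send their transactions and interact with the ledger, as shown in the figure below:

The flow assumes that a channel is already set up and running. The application client has registered with its organization's certificate authority (CA) and received the cryptographic material it needs to authenticate to the network.

The chaincode (containing a set of key-value pairs that represent the initial state of the radish market) is installed on the peers and instantiated on the channel. The chaincode contains logic defining a set of transaction instructions, as well as the price of a radish. The chaincode also sets an endorsement policy: both peerA and peerB must endorse any transaction.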
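The "both peerA and peerB must endorse" policy can be sketched as a simple check. Signature verification is deliberately left out; the `endorsements` mapping (endorser name to a digest of its simulated read-write set) and the function name are assumptions for illustration only.

```python
REQUIRED_ENDORSERS = frozenset({"peerA", "peerB"})


def satisfies_policy(endorsements, required=REQUIRED_ENDORSERS):
    """Return True if every required peer endorsed and all of them agree on the result.

    endorsements maps endorser name -> digest of the read-write set it simulated.
    """
    if not required.issubset(endorsements):
        return False  # at least one required endorsement is missing
    digests = {endorsements[name] for name in required}
    return len(digests) == 1  # endorsers must have produced the same result
```

For example, `satisfies_policy({"peerA": "abc", "peerB": "abc"})` holds, while a proposal endorsed by peerA alone, or one where the two peers simulated different results, does not.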

The complete processing flow is as follows:

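5.3 Kafka Cluster Configuration

The minimum set of components for a Kafka-based cluster is:

- a ZooKeeper ensemble of 3 nodes
- a Kafka cluster of 4 nodes
- 3 Orderer (ordering service) nodes
- other Peer nodes

The cluster above needs at least 10 service nodes to provide the clustered ordering service; the remaining nodes are used for endorsement, validation, commit, and data synchronization.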

Preparation:

| Name | IP | Hostname | Organization |
|---|---|---|---|
| Zk1 | 172.31.159.137 | zookeeper1 | |
| Zk2 | 172.31.159.135 | zookeeper2 | |
| Zk3 | 172.31.159.136 | zookeeper3 | |
| Kafka1 | 172.31.159.133 | kafka1 | |
| Kafka2 | 172.31.159.132 | kafka2 | |
| Kafka3 | 172.31.159.134 | kafka3 | |
| Kafka4 | 172.31.159.131 | kafka4 | |
| Orderer0 | 172.31.159.130 | orderer0.example.com | |
| Orderer1 | 172.31.143.22 | orderer1.example.com | |
| Orderer2 | 172.31.143.23 | orderer2.example.com | |
| peer0 | 172.31.159.129 | peer0.org1.example.com | Org1 |
| peer1 | 172.31.143.21 | peer1.org2.example.com | Org2 |

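If high availability is a concern, consider managing the Docker containers with Kubernetes (K8s).

Docker and Docker Compose are installed on every one of these servers; the Orderer and Peer servers additionally have Go and the Fabric environment installed.

The basic environment setup is the same as in earlier chapters, so some of those resources can be reused directly.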

5.3.1 crypto-config.yaml Configuration

    OrdererOrgs:
        - Name: Orderer
          Domain: example.com
          Specs:
              - Hostname: orderer0
              - Hostname: orderer1
              - Hostname: orderer2

    PeerOrgs:
        - Name: Org1
          Domain: org1.example.com
          Template:
              Count: 2
          Users:
              Count: 1
        - Name: Org2
          Domain: org2.example.com
          Template:
              Count: 2
          Users:
              Count: 1
          Specs:
              - Hostname: foo
                CommonName: foo27.org2.example.com
              - Hostname: bar
              - Hostname: baz
        - Name: Org3
          Domain: org3.example.com
          Template:
              Count: 2
          Users:
              Count: 1
        - Name: Org4
          Domain: org4.example.com
          Template:
              Count: 2
          Users:
              Count: 1
        - Name: Org5
          Domain: org5.example.com
          Template:
              Count: 2
          Users:
              Count: 1

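Upload this configuration file to the aberic directory on the Orderer0 server, then run the following command to generate the configuration files the nodes need:

    ./bin/cryptogen generate --config=./crypto-config.yaml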

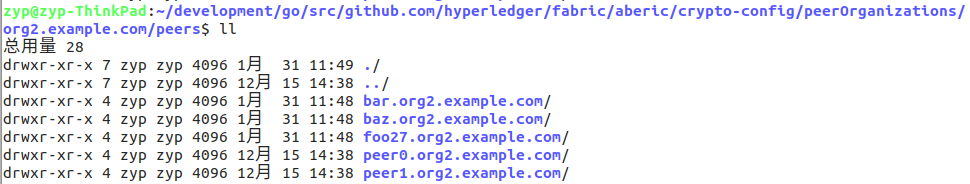

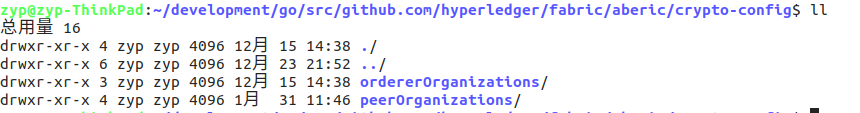

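Once the command completes, the directory information for the custom nodes can be viewed in the following directory.

5.3.2 configtx.yaml Configuration

Because this deployment uses a Kafka cluster, the orderer type (OrdererType) in this configuration file should be "kafka". The Addresses list must also be completed with the addresses of the available ordering service nodes, that is, the servers of the ordering cluster. The Kafka Brokers list may contain only a subset of the IPs or hostnames of the servers in the Kafka cluster.

The full configtx.yaml is as follows: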

    Profiles:
        TwoOrgsOrdererGenesis:
            Orderer:
                <<: *OrdererDefaults
                Organizations:
                    - *OrdererOrg
            Consortiums:
                SampleConsortium:
                    Organizations:
                        - *Org1
                        - *Org2
                        - *Org3
                        - *Org4
                        - *Org5
        TwoOrgsChannel:
            Consortium: SampleConsortium
            Application:
                <<: *ApplicationDefaults
                Organizations:
                    - *Org1
                    - *Org2
                    - *Org3
                    - *Org4
                    - *Org5

    Organizations:
        - &OrdererOrg
            Name: OrdererMSP
            ID: OrdererMSP
            MSPDir: crypto-config/ordererOrganizations/example.com/msp
        - &Org1
            Name: Org1MSP
            ID: Org1MSP
            MSPDir: crypto-config/peerOrganizations/org1.example.com/msp
            AnchorPeers:
                - Host: peer0.org1.example.com
                  Port: 7051
        - &Org2
            Name: Org2MSP
            ID: Org2MSP
            MSPDir: crypto-config/peerOrganizations/org2.example.com/msp
            AnchorPeers:
                - Host: peer0.org2.example.com
                  Port: 7051
        - &Org3
            Name: Org3MSP
            ID: Org3MSP
            MSPDir: crypto-config/peerOrganizations/org3.example.com/msp
            AnchorPeers:
                - Host: peer0.org3.example.com
                  Port: 7051
        - &Org4
            Name: Org4MSP
            ID: Org4MSP
            MSPDir: crypto-config/peerOrganizations/org4.example.com/msp
            AnchorPeers:
                - Host: peer0.org4.example.com
                  Port: 7051
        - &Org5
            Name: Org5MSP
            ID: Org5MSP
            MSPDir: crypto-config/peerOrganizations/org5.example.com/msp
            AnchorPeers:
                - Host: peer0.org5.example.com
                  Port: 7051

    Orderer: &OrdererDefaults
        OrdererType: kafka
        Addresses:
            - orderer0.example.com:7050
            - orderer1.example.com:7050
            - orderer2.example.com:7050
        BatchTimeout: 2s
        BatchSize:
            MaxMessageCount: 10
            AbsoluteMaxBytes: 98 MB
            PreferredMaxBytes: 512 KB
        Kafka:
            Brokers:
                - 172.31.159.131:9092
                - 172.31.159.132:9092
                - 172.31.159.133:9092
                - 172.31.159.134:9092
        Organizations:

    Application: &ApplicationDefaults
        Organizations:

    Capabilities:
        Global: &ChannelCapabilities
            V1_1: true
        Orderer: &OrdererCapabilities
            V1_1: true
        Application: &ApplicationCapabilities
            V1_1: true

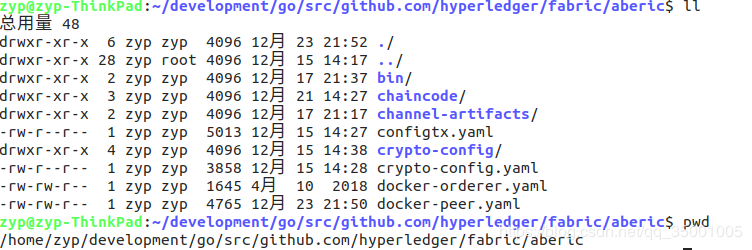

将该文件上传到Orderer0服务器 aberic 目录下,并执行如下命令:

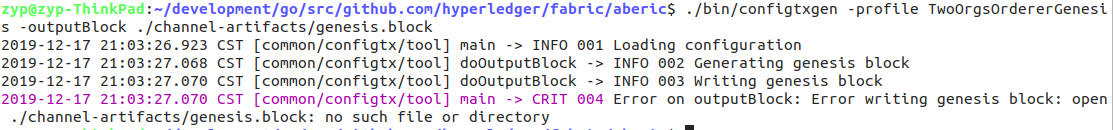

./bin/configtxgen -profile TwoOrgsOrdererGensis -outputBlock ./channel-artifacts/genesis.block

创世区块genesis.block是为了Orderer排序服务启动时用到的,Peer节点在启动后需要创建的Channel的配置文件在这里也一并生成,执行如下命令:

./bin/configtxgen -profile TwoOrgChannel -outputCreateChannelTx ./channel-artifacts/mychannel.tx -channelID mychannel

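5.3.3 ZooKeeper Configuration

ZooKeeper's basic operating elements are:

- leader election
- data synchronization
- many leader election algorithms exist, but they must all meet the same election criteria
- the leader must have the highest execution ID, similar to root privileges
- a majority of the machines in the cluster must respond to and follow the elected leader

The contents of the configuration files always satisfy these five elements.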

The contents of docker-zookeeper1.yaml are as follows:

    version: '2'

    services:
      zookeeper1:
        container_name: zookeeper1
        hostname: zookeeper1
        image: hyperledger/fabric-zookeeper
        restart: always
        environment:
          # The ID must be unique within the ensemble and should have a value
          # between 1 and 255.
          - ZOO_MY_ID=1
          #
          # The list of servers that make up the ZK ensemble. The list used by
          # clients must match the list of ZooKeeper servers that each ZK server has.
          # There are two port numbers: the first is used by followers to connect
          # to the leader, the second is used for leader election.
          - ZOO_SERVERS=server.1=zookeeper1:2888:3888 server.2=zookeeper2:2888:3888 server.3=zookeeper3:2888:3888
        ports:
          - "2181:2181"
          - "2888:2888"
          - "3888:3888"
        extra_hosts:
          - "zookeeper1:172.31.159.137"
          - "zookeeper2:172.31.159.135"
          - "zookeeper3:172.31.159.136"
          - "kafka1:172.31.159.133"
          - "kafka2:172.31.159.132"
          - "kafka3:172.31.159.134"
          - "kafka4:172.31.159.131"
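To make the ZOO_SERVERS format concrete, the sketch below splits the value used above into its parts. The function name and the dictionary keys are hypothetical and exist only for illustration; the parsing simply follows the `server.<id>=<host>:<follower port>:<election port>` layout described in the comments.

```python
def parse_zoo_servers(value):
    """Split a ZOO_SERVERS string into one record per ensemble member."""
    servers = []
    for entry in value.split():
        name, address = entry.split("=", 1)          # "server.1", "zookeeper1:2888:3888"
        host, follower_port, election_port = address.split(":")
        servers.append(
            {
                "id": int(name.split(".", 1)[1]),    # must match that node's ZOO_MY_ID
                "host": host,
                "follower_port": int(follower_port), # followers connect to the leader here
                "election_port": int(election_port), # used for leader election
            }
        )
    return servers


zoo_servers = parse_zoo_servers(
    "server.1=zookeeper1:2888:3888 "
    "server.2=zookeeper2:2888:3888 "
    "server.3=zookeeper3:2888:3888"
)
```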

The contents of docker-zookeeper2.yaml are as follows:

    version: '2'

    services:
      zookeeper2:
        container_name: zookeeper2
        hostname: zookeeper2
        image: hyperledger/fabric-zookeeper
        restart: always
        environment:
          - ZOO_MY_ID=2
          - ZOO_SERVERS=server.1=zookeeper1:2888:3888 server.2=zookeeper2:2888:3888 server.3=zookeeper3:2888:3888
        ports:
          - "2181:2181"
          - "2888:2888"
          - "3888:3888"
        extra_hosts:
          - "zookeeper1:172.31.159.137"
          - "zookeeper2:172.31.159.135"
          - "zookeeper3:172.31.159.136"
          - "kafka1:172.31.159.133"
          - "kafka2:172.31.159.132"
          - "kafka3:172.31.159.134"
          - "kafka4:172.31.159.131"

The contents of docker-zookeeper3.yaml are as follows:

    version: '2'

    services:
      zookeeper3:
        container_name: zookeeper3
        hostname: zookeeper3
        image: hyperledger/fabric-zookeeper
        restart: always
        environment:
          - ZOO_MY_ID=3
          - ZOO_SERVERS=server.1=zookeeper1:2888:3888 server.2=zookeeper2:2888:3888 server.3=zookeeper3:2888:3888
        ports:
          - "2181:2181"
          - "2888:2888"
          - "3888:3888"
        extra_hosts:
          - "zookeeper1:172.31.159.137"
          - "zookeeper2:172.31.159.135"
          - "zookeeper3:172.31.159.136"
          - "kafka1:172.31.159.133"
          - "kafka2:172.31.159.132"
          - "kafka3:172.31.159.134"
          - "kafka4:172.31.159.131"

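Note: a ZooKeeper ensemble can have 3, 5, or 7 nodes. The number should be odd to avoid split-brain scenarios, and greater than 1 to avoid a single point of failure. An ensemble of more than 7 ZooKeeper servers is considered overkill, that is, more than is needed.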
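The preference for odd ensemble sizes follows from majority-quorum arithmetic, which can be sketched in a few lines. The function names are illustrative only.

```python
def quorum_size(ensemble_size):
    """Smallest number of servers that forms a strict majority."""
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size):
    """How many servers can fail while a majority is still reachable."""
    return ensemble_size - quorum_size(ensemble_size)


for size in (3, 4, 5, 6, 7):
    print(size, quorum_size(size), tolerated_failures(size))
```

An ensemble of 4 tolerates one failure, the same as an ensemble of 3, and 6 tolerates two, the same as 5, so an even-sized ensemble adds a server without adding fault tolerance.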

5.3.4 Kafka Configuration

Kafka needs four startup configuration files: docker-kafka1.yaml, docker-kafka2.yaml, docker-kafka3.yaml, and docker-kafka4.yaml.

The contents of docker-kafka1.yaml, with explanations, are as follows:

    # Copyright IBM Corp. All Rights Reserved.
    #
    # SPDX-License-Identifier: Apache-2.0
    #
    # K and Z denote the number of nodes in the Kafka cluster and in the
    # ZooKeeper ensemble, respectively.
    #
    # 1) K should be at least 4, the minimum needed for crash fault tolerance.
    #    With 4 brokers, one broker can go down: channels can still be read
    #    from and written to, and new channels can still be created.
    # 2) Z can be 3, 5, or 7. It must be odd to avoid split-brain scenarios and
    #    greater than 1 to avoid a single point of failure. More than 7
    #    ZooKeeper servers is considered overkill.
    #
    version: '2'

    services:
      kafka1:
        container_name: kafka1
        hostname: kafka1
        image: hyperledger/fabric-kafka
        restart: always
        environment:
          # ========================================================================
          #     Reference: https://kafka.apache.org/documentation/#configuration
          # ========================================================================
          #
          # broker.id
          - KAFKA_BROKER_ID=1
          #
          # min.insync.replicas
          # Let the value of this setting be M, with 1 < M < N (see
          # default.replication.factor below). Data is considered committed when
          # it is written to at least M replicas (which are then considered in-sync
          # and belong to the in-sync replica set, or ISR). In any other case, the
          # write operation returns an error. Then:
          # 1. If up to N-M replicas -- out of the N that the channel data is
          #    written to -- become unavailable, operations proceed normally.
          # 2. If more replicas become unavailable, Kafka cannot maintain an ISR set
          #    of M, so it stops accepting writes. Reads work without issues. The
          #    channel becomes writeable again when M replicas get in-sync.
          - KAFKA_MIN_INSYNC_REPLICAS=2
          #
          # default.replication.factor
          # Let the value of this setting be N, with N < K. A replication factor
          # of N means that each channel will have its data replicated to N
          # brokers. These are the candidates for the ISR set of a channel. As we
          # noted in the min.insync.replicas section above, not all of these
          # brokers have to be available all the time. In this sample
          # configuration we choose a default.replication.factor of K-1 (where K
          # is the total number of brokers in our Kafka cluster) so as to have the
          # largest possible candidate set for a channel's ISR. We explicitly
          # avoid setting N equal to K because channel creations cannot go forward
          # if less than N brokers are up. If N were set equal to K, a single
          # broker going down would mean that we would not be able to create new
          # channels, i.e. the crash fault tolerance of the ordering service would
          # be non-existent.
          - KAFKA_DEFAULT_REPLICATION_FACTOR=3
          #
          # zookeeper.connect
          # Point to the set of Zookeeper nodes comprising a ZK ensemble.
          - KAFKA_ZOOKEEPER_CONNECT=zookeeper1:2181,zookeeper2:2181,zookeeper3:2181
          #
          # zookeeper.connection.timeout.ms
          # The max time that the client waits to establish a connection to
          # Zookeeper. If not set, the value in zookeeper.session.timeout.ms (below)
          # is used.
          #- KAFKA_ZOOKEEPER_CONNECTION_TIMEOUT_MS = 6000
          #
          # zookeeper.session.timeout.ms
          #- KAFKA_ZOOKEEPER_SESSION_TIMEOUT_MS = 6000
          #
          # socket.request.max.bytes
          # The maximum number of bytes in a socket request. ATTN: If you set this
          # env var, make sure to update `brokerConfig.Producer.MaxMessageBytes` in
          # `newBrokerConfig()` in `fabric/orderer/kafka/config.go` accordingly.
          #- KAFKA_SOCKET_REQUEST_MAX_BYTES=104857600 # 100 * 1024 * 1024 B
          #
          # message.max.bytes
          # The maximum size of envelope that the broker can receive.
          #
          # configtx.yaml sets the maximum block size (see AbsoluteMaxBytes).
          # Each block holds at most Orderer.AbsoluteMaxBytes bytes (not
          # including headers); call this value A (98 MB in the configtx.yaml
          # above). message.max.bytes and replica.fetch.max.bytes should be set
          # to a value larger than A, leaving some buffer for headers; 1 MB is
          # more than enough. The settings must satisfy:
          #     Orderer.AbsoluteMaxBytes < replica.fetch.max.bytes <= message.max.bytes
          # (For completeness, message.max.bytes should also be strictly smaller
          # than socket.request.max.bytes, which defaults to 100 MB. To allow
          # blocks larger than 100 MB, edit the hard-coded value
          # brokerConfig.Producer.MaxMessageBytes in
          # fabric/orderer/kafka/config.go and rebuild the binary from source.
          # This is not recommended.)
          - KAFKA_MESSAGE_MAX_BYTES=103809024 # 99 * 1024 * 1024 B
          #
          # replica.fetch.max.bytes
          # The number of bytes of messages to attempt to fetch for each channel.
          # This is not an absolute maximum, if the fetched envelope is larger than
          # this value, the envelope will still be returned to ensure that progress
          # can be made. The maximum message size accepted by the broker is defined
          # via message.max.bytes above.
          - KAFKA_REPLICA_FETCH_MAX_BYTES=103809024 # 99 * 1024 * 1024 B
          #
          # unclean.leader.election.enable
          # Data consistency is key in a blockchain environment. We cannot have a
          # leader chosen outside of the in-sync replica set, or we run the risk of
          # overwriting the offsets that the previous leader produced, and --as a
          # result-- rewriting the blockchain that the orderers produce.
          - KAFKA_UNCLEAN_LEADER_ELECTION_ENABLE=false
          #
          # log.retention.ms
          # Until the ordering service in Fabric adds support for pruning of the
          # Kafka logs, time-based retention should be disabled so as to prevent
          # segments from expiring. (Size-based retention -- see
          # log.retention.bytes -- is disabled by default so there is no need to set
          # it explicitly.)
          - KAFKA_LOG_RETENTION_MS=-1
          - KAFKA_HEAP_OPTS=-Xmx256M -Xms128M
        ports:
          - "9092:9092"
        extra_hosts:
          - "zookeeper1:172.31.159.137"
          - "zookeeper2:172.31.159.135"
          - "zookeeper3:172.31.159.136"
          - "kafka1:172.31.159.133"
          - "kafka2:172.31.159.132"
          - "kafka3:172.31.159.134"
          - "kafka4:172.31.159.131"
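The size relationship stated in the message.max.bytes comments of docker-kafka1.yaml can be checked mechanically. The sketch below is only an illustration: the function name is hypothetical, and reading configtx.yaml's "98 MB" as 98 * 1024 * 1024 bytes is an assumption.

```python
MIB = 1024 * 1024


def check_size_limits(absolute_max_bytes, replica_fetch_max_bytes,
                      message_max_bytes, socket_request_max_bytes=100 * MIB):
    """Return a list of violated constraints; an empty list means the settings agree."""
    problems = []
    if not absolute_max_bytes < replica_fetch_max_bytes:
        problems.append("AbsoluteMaxBytes must be < replica.fetch.max.bytes")
    if not replica_fetch_max_bytes <= message_max_bytes:
        problems.append("replica.fetch.max.bytes must be <= message.max.bytes")
    if not message_max_bytes < socket_request_max_bytes:
        problems.append("message.max.bytes must be < socket.request.max.bytes")
    return problems


# Values from this chapter: AbsoluteMaxBytes 98 MB (assumed to mean 98 MiB),
# message.max.bytes and replica.fetch.max.bytes both 103809024 bytes.
problems = check_size_limits(98 * MIB, 103809024, 103809024)
```

With the values used in this chapter the list is empty; raising AbsoluteMaxBytes to the same 99 MiB as the Kafka limits would violate the first constraint.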

The contents of docker-kafka2.yaml are as follows. The settings are explained in docker-kafka1.yaml above; only the service name, container name, hostname, and broker.id differ:

    # Copyright IBM Corp. All Rights Reserved.
    #
    # SPDX-License-Identifier: Apache-2.0
    #
    version: '2'

    services:
      kafka2:
        container_name: kafka2
        hostname: kafka2
        image: hyperledger/fabric-kafka
        restart: always
        environment:
          # broker.id
          - KAFKA_BROKER_ID=2
          # min.insync.replicas
          - KAFKA_MIN_INSYNC_REPLICAS=2
          # default.replication.factor
          - KAFKA_DEFAULT_REPLICATION_FACTOR=3
          # zookeeper.connect
          - KAFKA_ZOOKEEPER_CONNECT=zookeeper1:2181,zookeeper2:2181,zookeeper3:2181
          # zookeeper.connection.timeout.ms
          #- KAFKA_ZOOKEEPER_CONNECTION_TIMEOUT_MS = 6000
          # zookeeper.session.timeout.ms
          #- KAFKA_ZOOKEEPER_SESSION_TIMEOUT_MS = 6000
          # socket.request.max.bytes
          #- KAFKA_SOCKET_REQUEST_MAX_BYTES=104857600 # 100 * 1024 * 1024 B
          # message.max.bytes
          - KAFKA_MESSAGE_MAX_BYTES=103809024 # 99 * 1024 * 1024 B
          # replica.fetch.max.bytes
          - KAFKA_REPLICA_FETCH_MAX_BYTES=103809024 # 99 * 1024 * 1024 B
          # unclean.leader.election.enable
          - KAFKA_UNCLEAN_LEADER_ELECTION_ENABLE=false
          # log.retention.ms
          - KAFKA_LOG_RETENTION_MS=-1
          - KAFKA_HEAP_OPTS=-Xmx256M -Xms128M
        ports:
          - "9092:9092"
        extra_hosts:
          - "zookeeper1:172.31.159.137"
          - "zookeeper2:172.31.159.135"
          - "zookeeper3:172.31.159.136"
          - "kafka1:172.31.159.133"
          - "kafka2:172.31.159.132"
          - "kafka3:172.31.159.134"
          - "kafka4:172.31.159.131"

The contents of docker-kafka3.yaml are as follows. The settings are explained in docker-kafka1.yaml above; only the service name, container name, hostname, and broker.id differ:

    # Copyright IBM Corp. All Rights Reserved.
    #
    # SPDX-License-Identifier: Apache-2.0
    #
    version: '2'

    services:
      kafka3:
        container_name: kafka3
        hostname: kafka3
        image: hyperledger/fabric-kafka
        restart: always
        environment:
          # broker.id
          - KAFKA_BROKER_ID=3
          # min.insync.replicas
          - KAFKA_MIN_INSYNC_REPLICAS=2
          # default.replication.factor
          - KAFKA_DEFAULT_REPLICATION_FACTOR=3
          # zookeeper.connect
          - KAFKA_ZOOKEEPER_CONNECT=zookeeper1:2181,zookeeper2:2181,zookeeper3:2181
          # zookeeper.connection.timeout.ms
          #- KAFKA_ZOOKEEPER_CONNECTION_TIMEOUT_MS = 6000
          # zookeeper.session.timeout.ms
          #- KAFKA_ZOOKEEPER_SESSION_TIMEOUT_MS = 6000
          # socket.request.max.bytes
          #- KAFKA_SOCKET_REQUEST_MAX_BYTES=104857600 # 100 * 1024 * 1024 B
          # message.max.bytes
          - KAFKA_MESSAGE_MAX_BYTES=103809024 # 99 * 1024 * 1024 B
          # replica.fetch.max.bytes
          - KAFKA_REPLICA_FETCH_MAX_BYTES=103809024 # 99 * 1024 * 1024 B
          # unclean.leader.election.enable
          - KAFKA_UNCLEAN_LEADER_ELECTION_ENABLE=false
          # log.retention.ms
          - KAFKA_LOG_RETENTION_MS=-1
          - KAFKA_HEAP_OPTS=-Xmx256M -Xms128M
        ports:
          - "9092:9092"
        extra_hosts:
          - "zookeeper1:172.31.159.137"
          - "zookeeper2:172.31.159.135"
          - "zookeeper3:172.31.159.136"
          - "kafka1:172.31.159.133"
          - "kafka2:172.31.159.132"
          - "kafka3:172.31.159.134"
          - "kafka4:172.31.159.131"

docker-kafka4.yaml 文件内容和解释如下:

# Copyright IBM Corp. All Rights Reserved.

#

# SPDX-License-Identifier: Apache-2.0

#

# 我们使用K和Z分别代表Kafka集群和ZooKeeper集群的节点个数

#

# 1)K的最小值应该被设置为4(我们将会在第4步中解释,这是为了满足crash容错的最小节点数。

# 如果有4个代理,那么可以容错一个代理崩溃,一个代理停止服务后,channel仍然可以继续读写,新的channel可以被创建)

# 2)Z可以为3,5或是7。它的值需要是一个奇数避免脑裂(split-brain)情况,同时选择大于1的值为了避免单点故障。

# 超过7个ZooKeeper servers会被认为overkill。

#

version: '2'

services:

kafka4:

container_name: kafka4

hostname: kafka4

image: hyperledger/fabric-kafka

restart: always

environment:

# ========================================================================

# Reference: https://kafka.apache.org/documentation/#configuration

# ========================================================================

#

# broker.id

- KAFKA_BROKER_ID=4

#

# min.insync.replicas

# Let the value of this setting be M. Data is considered committed when

# it is written to at least M replicas (which are then considered in-sync

# and belong to the in-sync replica set, or ISR). In any other case, the

# write operation returns an error. Then:

# 1. If up to M-N replicas -- out of the N (see default.replication.factor

# below) that the channel data is written to -- become unavailable,

# operations proceed normally.

# 2. If more replicas become unavailable, Kafka cannot maintain an ISR set

# of M, so it stops accepting writes. Reads work without issues. The

# channel becomes writeable again when M replicas get in-sync.

#

# min.insync.replicas = M---设置一个M值(例如1<M<N,查看下面的default.replication.factor)

# 数据提交时会写入至少M个副本(这些数据然后会被同步并且归属到in-sync 副本集合或ISR)。

# 其它情况,写入操作会返回一个错误。接下来:

# 1)如果channel写入的数据多达N-M个副本变的不可用,操作可以正常执行。

# 2)如果有更多的副本不可用,Kafka不可以维护一个有M数量的ISR集合,因此Kafka停止接收写操作。Channel只有当同步M个副本后才可以重新可以写。

- KAFKA_MIN_INSYNC_REPLICAS=2

#

# default.replication.factor

# Let the value of this setting be N. A replication factor of N means that

# each channel will have its data replicated to N brokers. These are the

# candidates for the ISR set of a channel. As we noted in the

# min.insync.replicas section above, not all of these brokers have to be

# available all the time. In this sample configuration we choose a

# default.replication.factor of K-1 (where K is the total number of brokers in

# our Kafka cluster) so as to have the largest possible candidate set for

# a channel's ISR. We explicitly avoid setting N equal to K because

# channel creations cannot go forward if less than N brokers are up. If N

# were set equal to K, a single broker going down would mean that we would

# not be able to create new channels, i.e. the crash fault tolerance of

# the ordering service would be non-existent.

#

# 设置一个值N,N<K。

# 设置replication factor参数为N代表着每个channel都保存N个副本的数据到Kafka的代理上。

# 这些都是一个channel的ISR集合的候选。

# 如同在上边min.insync.replicas section设置部分所描述的,不是所有的代理(orderer)在任何时候都是可用的。

# N的值必须小于K,如果少于N个代理的话,channel的创建是不能成功的。

# 因此,如果设置N的值为K,一个代理失效后,那么区块链网络将不能再创建新的channel---orderering service的crash容错也就不存在了。

- KAFKA_DEFAULT_REPLICATION_FACTOR=3

#

# zookeper.connect

# Point to the set of Zookeeper nodes comprising a ZK ensemble.

# 指向Zookeeper节点的集合,其中包含ZK的集合。

- KAFKA_ZOOKEEPER_CONNECT=zookeeper1:2181,zookeeper2:2181,zookeeper3:2181

#

# zookeeper.connection.timeout.ms

# The max time that the client waits to establish a connection to

# Zookeeper. If not set, the value in zookeeper.session.timeout.ms (below)

# is used.

#- KAFKA_ZOOKEEPER_CONNECTION_TIMEOUT_MS = 6000

#

# zookeeper.session.timeout.ms

#- KAFKA_ZOOKEEPER_SESSION_TIMEOUT_MS = 6000

#

# socket.request.max.bytes

# The maximum number of bytes in a socket request. ATTN: If you set this

# env var, make sure to update `brokerConfig.Producer.MaxMessageBytes` in

# `newBrokerConfig()` in `fabric/orderer/kafka/config.go` accordingly.

#- KAFKA_SOCKET_REQUEST_MAX_BYTES=104857600 # 100 * 1024 * 1024 B

#

# message.max.bytes

# The maximum size of envelope that the broker can receive.

#

# 在configtx.yaml中会设置最大的区块大小(参考configtx.yaml中AbsoluteMaxBytes参数)。

# 每个区块最大有Orderer.AbsoluteMaxBytes个字节(不包括头部),假定这里设置的值为A(目前99)。

# message.max.bytes和replica.fetch.max.bytes应该设置一个大于A。

# 为header增加一些缓冲区空间---1MB已经足够大。上述不同设置值之间满足如下关系:

# Orderer.AbsoluteMaxBytes < replica.fetch.max.bytes <= message.max.bytes

# (更完整的是,message.max.bytes应该严格小于socket.request.max.bytes的值,socket.request.max.bytes的值默认被设置为100MB。

# 如果想要区块的大小大于100MB,需要编辑fabric/orderer/kafka/config.go文件里硬编码的值brokerConfig.Producer.MaxMessageBytes,

# 修改后重新编译源码得到二进制文件,这种设置是不建议的。)

- KAFKA_MESSAGE_MAX_BYTES=103809024 # 99 * 1024 * 1024 B

#

# replica.fetch.max.bytes

# The number of bytes of messages to attempt to fetch for each channel.

# This is not an absolute maximum, if the fetched envelope is larger than

# this value, the envelope will still be returned to ensure that progress

# can be made. The maximum message size accepted by the broker is defined

# via message.max.bytes above.

- KAFKA_REPLICA_FETCH_MAX_BYTES=103809024 # 99 * 1024 * 1024 B

#

# unclean.leader.election.enable

# Data consistency is key in a blockchain environment. We cannot have a

# leader chosen outside of the in-sync replica set, or we run the risk of

# overwriting the offsets that the previous leader produced, and --as a

# result-- rewriting the blockchain that the orderers produce.

- KAFKA_UNCLEAN_LEADER_ELECTION_ENABLE=false

#

# log.retention.ms

# Until the ordering service in Fabric adds support for pruning of the

# Kafka logs, time-based retention should be disabled so as to prevent

# segments from expiring. (Size-based retention -- see

# log.retention.bytes -- is disabled by default so there is no need to set

# it explicitly.)

- KAFKA_LOG_RETENTION_MS=-1

- KAFKA_HEAP_OPTS=-Xmx256M -Xms128M

ports:

- "9092:9092"

extra_hosts:

- "zookeeper1:172.31.159.137"

- "zookeeper2:172.31.159.135"

- "zookeeper3:172.31.159.136"

- "kafka1:172.31.159.133"

- "kafka2:172.31.159.132"

- "kafka3:172.31.159.134"

- "kafka4:172.31.159.131"
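The size relations stated in the comments of the listing above can be checked mechanically before deployment. The following is a minimal Python sketch, not part of Fabric or Kafka: the function name `check_size_limits` and the 98 MiB example value for `Orderer.AbsoluteMaxBytes` are assumptions made purely for illustration.

```python
MIB = 1024 * 1024


def check_size_limits(absolute_max_bytes, replica_fetch_max_bytes,
                      message_max_bytes, socket_request_max_bytes=100 * MIB):
    """Return a list of violated constraints (empty when all of them hold).

    Constraints, as stated in the comments of the Kafka listing:
      Orderer.AbsoluteMaxBytes < replica.fetch.max.bytes <= message.max.bytes
      message.max.bytes < socket.request.max.bytes
    """
    problems = []
    if not absolute_max_bytes < replica_fetch_max_bytes:
        problems.append("AbsoluteMaxBytes must be < replica.fetch.max.bytes")
    if not replica_fetch_max_bytes <= message_max_bytes:
        problems.append("replica.fetch.max.bytes must be <= message.max.bytes")
    if not message_max_bytes < socket_request_max_bytes:
        problems.append("message.max.bytes must be < socket.request.max.bytes")
    return problems


# Broker values from the listing: 99 * 1024 * 1024 = 103809024 bytes each.
broker_limit = 99 * MIB

# Hypothetical AbsoluteMaxBytes of 98 MiB, leaving 1 MiB of room for headers.
print(check_size_limits(98 * MIB, broker_limit, broker_limit))  # []

# An AbsoluteMaxBytes equal to the broker limit violates the strict inequality.
print(check_size_limits(99 * MIB, broker_limit, broker_limit))
```

An empty list means the combination is consistent; any returned message names the relation that needs attention in configtx.yaml or in the broker environment.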

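Kafka's default port is 9092.

The Kafka cluster should have at least 4 brokers, which is the minimum number of nodes needed for crash fault tolerance. With 4 brokers, the cluster tolerates the crash of one broker: after one broker stops serving, existing channels can still be read and written, and new channels can still be created.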
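To make the arithmetic behind the four-broker minimum concrete, here is a small Python sketch. It assumes the relation 1 < M < N < K between min.insync.replicas (M), the replication factor (N), and the number of brokers (K). M = 2 is an assumed value, since only N = 3 and the four brokers appear in the listing above, and both function names are hypothetical.

```python
def can_create_channels(brokers_up, replication_factor):
    """Channel creation needs at least N brokers to be up."""
    return brokers_up >= replication_factor


def tolerated_broker_failures(k, n, m):
    """Broker crashes survivable while channels stay writable and creatable.

    k: total brokers, n: default.replication.factor, m: min.insync.replicas.
    Writes need m of a channel's n replicas in sync, so a channel survives
    n - m replica failures. Channel creation needs n brokers up, so it
    survives k - n broker failures. The cluster tolerates the smaller value.
    """
    if not 1 < m < n < k:
        raise ValueError("expected 1 < M < N < K")
    return min(n - m, k - n)


print(tolerated_broker_failures(k=4, n=3, m=2))  # 1
print(can_create_channels(brokers_up=3, replication_factor=3))  # True
# With N equal to K, one crashed broker already blocks channel creation:
print(can_create_channels(brokers_up=3, replication_factor=4))  # False
```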

The orderers use three configuration files: docker-orderer0.yaml, docker-orderer1.yaml, and docker-orderer2.yaml.

The contents of docker-orderer0.yaml are as follows:

# Copyright IBM Corp. All Rights Reserved.
#
# SPDX-License-Identifier: Apache-2.0
#

version: '2'

services:

  orderer0.example.com:
    container_name: orderer0.example.com
    image: hyperledger/fabric-orderer
    environment:
      - CORE_VM_DOCKER_HOSTCONFIG_NETWORKMODE=aberic_default
      - ORDERER_GENERAL_LOGLEVEL=debug
      # - ORDERER_GENERAL_LOGLEVEL=error
      - ORDERER_GENERAL_LISTENADDRESS=0.0.0.0
      - ORDERER_GENERAL_LISTENPORT=7050
      #- ORDERER_GENERAL_GENESISPROFILE=AntiMothOrdererGenesis
      - ORDERER_GENERAL_GENESISMETHOD=file
      - ORDERER_GENERAL_GENESISFILE=/var/hyperledger/orderer/orderer.genesis.block
      - ORDERER_GENERAL_LOCALMSPID=OrdererMSP
      - ORDERER_GENERAL_LOCALMSPDIR=/var/hyperledger/orderer/msp
      #- ORDERER_GENERAL_LEDGERTYPE=ram
      #- ORDERER_GENERAL_LEDGERTYPE=file
      # enabled TLS
      - ORDERER_GENERAL_TLS_ENABLED=false
      - ORDERER_GENERAL_TLS_PRIVATEKEY=/var/hyperledger/orderer/tls/server.key
      - ORDERER_GENERAL_TLS_CERTIFICATE=/var/hyperledger/orderer/tls/server.crt
      - ORDERER_GENERAL_TLS_ROOTCAS=[/var/hyperledger/orderer/tls/ca.crt]
      - ORDERER_KAFKA_RETRY_LONGINTERVAL=10s
      - ORDERER_KAFKA_RETRY_LONGTOTAL=100s
      - ORDERER_KAFKA_RETRY_SHORTINTERVAL=1s
      - ORDERER_KAFKA_RETRY_SHORTTOTAL=30s
      - ORDERER_KAFKA_VERBOSE=true
      - ORDERER_KAFKA_BROKERS=[172.31.159.131:9092,172.31.159.132:9092,172.31.159.133:9092,172.31.159.134:9092]
    working_dir: /opt/gopath/src/github.com/hyperledger/fabric
    command: orderer
    volumes:
      - ./channel-artifacts/genesis.block:/var/hyperledger/orderer/orderer.genesis.block
      - ./crypto-config/ordererOrganizations/example.com/orderers/orderer0.example.com/msp:/var/hyperledger/orderer/msp
      - ./crypto-config/ordererOrganizations/example.com/orderers/orderer0.example.com/tls/:/var/hyperledger/orderer/tls
    networks:
      default:
        aliases:
          - aberic
    ports:
      - 7050:7050
    extra_hosts:
      - "kafka1:172.31.159.133"
      - "kafka2:172.31.159.132"
      - "kafka3:172.31.159.134"
      - "kafka4:172.31.159.131"

The contents of docker-orderer1.yaml are as follows:

# Copyright IBM Corp. All Rights Reserved.
#
# SPDX-License-Identifier: Apache-2.0
#

version: '2'

services:

  orderer1.example.com:
    container_name: orderer1.example.com
    image: hyperledger/fabric-orderer
    environment:
      - CORE_VM_DOCKER_HOSTCONFIG_NETWORKMODE=aberic_default
      - ORDERER_GENERAL_LOGLEVEL=debug
      # - ORDERER_GENERAL_LOGLEVEL=error
      - ORDERER_GENERAL_LISTENADDRESS=0.0.0.0
      - ORDERER_GENERAL_LISTENPORT=7050
      #- ORDERER_GENERAL_GENESISPROFILE=AntiMothOrdererGenesis
      - ORDERER_GENERAL_GENESISMETHOD=file
      - ORDERER_GENERAL_GENESISFILE=/var/hyperledger/orderer/orderer.genesis.block
      - ORDERER_GENERAL_LOCALMSPID=OrdererMSP
      - ORDERER_GENERAL_LOCALMSPDIR=/var/hyperledger/orderer/msp
      #- ORDERER_GENERAL_LEDGERTYPE=ram
      #- ORDERER_GENERAL_LEDGERTYPE=file
      # enabled TLS
      - ORDERER_GENERAL_TLS_ENABLED=false
      - ORDERER_GENERAL_TLS_PRIVATEKEY=/var/hyperledger/orderer/tls/server.key
      - ORDERER_GENERAL_TLS_CERTIFICATE=/var/hyperledger/orderer/tls/server.crt
      - ORDERER_GENERAL_TLS_ROOTCAS=[/var/hyperledger/orderer/tls/ca.crt]
      - ORDERER_KAFKA_RETRY_LONGINTERVAL=10s
      - ORDERER_KAFKA_RETRY_LONGTOTAL=100s
      - ORDERER_KAFKA_RETRY_SHORTINTERVAL=1s
      - ORDERER_KAFKA_RETRY_SHORTTOTAL=30s
      - ORDERER_KAFKA_VERBOSE=true
      - ORDERER_KAFKA_BROKERS=[172.31.159.131:9092,172.31.159.132:9092,172.31.159.133:9092,172.31.159.134:9092]
    working_dir: /opt/gopath/src/github.com/hyperledger/fabric
    command: orderer
    volumes:
      - ./channel-artifacts/genesis.block:/var/hyperledger/orderer/orderer.genesis.block
      - ./crypto-config/ordererOrganizations/example.com/orderers/orderer1.example.com/msp:/var/hyperledger/orderer/msp
      - ./crypto-config/ordererOrganizations/example.com/orderers/orderer1.example.com/tls/:/var/hyperledger/orderer/tls
    networks:
      default:
        aliases:
          - aberic
    ports:
      - 7050:7050
    extra_hosts:
      - "kafka1:172.31.159.133"
      - "kafka2:172.31.159.132"
      - "kafka3:172.31.159.134"
      - "kafka4:172.31.159.131"

The contents of docker-orderer2.yaml are as follows:

# Copyright IBM Corp. All Rights Reserved.
#
# SPDX-License-Identifier: Apache-2.0
#

version: '2'

services:

  orderer2.example.com:
    container_name: orderer2.example.com
    image: hyperledger/fabric-orderer
    environment:
      - CORE_VM_DOCKER_HOSTCONFIG_NETWORKMODE=aberic_default
      - ORDERER_GENERAL_LOGLEVEL=debug
      # - ORDERER_GENERAL_LOGLEVEL=error
      - ORDERER_GENERAL_LISTENADDRESS=0.0.0.0
      - ORDERER_GENERAL_LISTENPORT=7050
      #- ORDERER_GENERAL_GENESISPROFILE=AntiMothOrdererGenesis
      - ORDERER_GENERAL_GENESISMETHOD=file
      - ORDERER_GENERAL_GENESISFILE=/var/hyperledger/orderer/orderer.genesis.block
      - ORDERER_GENERAL_LOCALMSPID=OrdererMSP
      - ORDERER_GENERAL_LOCALMSPDIR=/var/hyperledger/orderer/msp
      #- ORDERER_GENERAL_LEDGERTYPE=ram
      #- ORDERER_GENERAL_LEDGERTYPE=file
      # enabled TLS
      - ORDERER_GENERAL_TLS_ENABLED=false
      - ORDERER_GENERAL_TLS_PRIVATEKEY=/var/hyperledger/orderer/tls/server.key
      - ORDERER_GENERAL_TLS_CERTIFICATE=/var/hyperledger/orderer/tls/server.crt
      - ORDERER_GENERAL_TLS_ROOTCAS=[/var/hyperledger/orderer/tls/ca.crt]
      - ORDERER_KAFKA_RETRY_LONGINTERVAL=10s
      - ORDERER_KAFKA_RETRY_LONGTOTAL=100s
      - ORDERER_KAFKA_RETRY_SHORTINTERVAL=1s
      - ORDERER_KAFKA_RETRY_SHORTTOTAL=30s
      - ORDERER_KAFKA_VERBOSE=true
      - ORDERER_KAFKA_BROKERS=[172.31.159.131:9092,172.31.159.132:9092,172.31.159.133:9092,172.31.159.134:9092]
    working_dir: /opt/gopath/src/github.com/hyperledger/fabric
    command: orderer
    volumes:
      - ./channel-artifacts/genesis.block:/var/hyperledger/orderer/orderer.genesis.block
      - ./crypto-config/ordererOrganizations/example.com/orderers/orderer2.example.com/msp:/var/hyperledger/orderer/msp
      - ./crypto-config/ordererOrganizations/example.com/orderers/orderer2.example.com/tls/:/var/hyperledger/orderer/tls
    networks:
      default:
        aliases:
          - aberic
    ports:
      - 7050:7050
    extra_hosts:
      - "kafka1:172.31.159.133"
      - "kafka2:172.31.159.132"
      - "kafka3:172.31.159.134"
      - "kafka4:172.31.159.131"

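Parameter explanations:

CORE_VM_DOCKER_HOSTCONFIG_NETWORKMODE : the Docker network mode used when creating containers (here the aberic_default network).

ORDERER_GENERAL_LOGLEVEL : sets the log level of the process. It is currently debug to ease troubleshooting; in production it should be set to a more restrictive level such as error.

ORDERER_GENERAL_GENESISMETHOD : tells the orderer that the genesis block of this Fabric network is provided in a file.

ORDERER_GENERAL_GENESISFILE : the exact path of the genesis block.

ORDERER_GENERAL_LOCALMSPID : the ID of the orderer's MSP, as defined in configtx.yaml.

ORDERER_GENERAL_LOCALMSPDIR : the path of the directory holding the MSP material.

ORDERER_GENERAL_TLS_ENABLED : whether TLS is enabled.

ORDERER_GENERAL_TLS_PRIVATEKEY : the location of the private key file.

ORDERER_GENERAL_TLS_CERTIFICATE : the location of the certificate.

ORDERER_GENERAL_TLS_ROOTCAS : the location of the TLS root certificates.

ORDERER_KAFKA_RETRY_LONGINTERVAL : the interval between retries during the long retry phase.

ORDERER_KAFKA_RETRY_LONGTOTAL : the total duration of the long retry phase.

ORDERER_KAFKA_RETRY_SHORTINTERVAL : the interval between retries during the short retry phase, which runs first.

ORDERER_KAFKA_RETRY_SHORTTOTAL : the total duration of the short retry phase.

ORDERER_KAFKA_VERBOSE : enables logging of the interactions with Kafka.

ORDERER_KAFKA_BROKERS : the list of Kafka brokers that the orderer connects to.

working_dir : the working directory of the orderer service.

volumes : maps the host directories used by the configuration into the container. These hold the MSP and TLS material (including the root CA and certificate files) as well as the genesis block.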
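The four ORDERER_KAFKA_RETRY_* values describe a two-phase schedule. The Python sketch below lists the moments at which connection attempts would be made, under the assumption that the orderer retries every SHORTINTERVAL until SHORTTOTAL has elapsed and then every LONGINTERVAL until LONGTOTAL has elapsed. `retry_schedule` is a hypothetical helper written for illustration, not orderer code.

```python
def retry_schedule(short_interval, short_total, long_interval, long_total):
    """Return attempt times in seconds, measured from the first failure."""
    times = []
    t = 0
    # Short phase: frequent retries right after the failure.
    while t + short_interval <= short_total:
        t += short_interval
        times.append(t)
    # Long phase: sparser retries, starting where the short phase ended.
    long_end = t + long_total
    while t + long_interval <= long_end:
        t += long_interval
        times.append(t)
    return times


# Values from the orderer listings: 1s/30s short phase, 10s/100s long phase.
schedule = retry_schedule(1, 30, 10, 100)
print(len(schedule))  # 40
print(schedule[-1])   # 130
```

Under these assumptions the orderer would give up roughly 130 seconds after the first failed attempt, which is worth keeping in mind when the Kafka brokers are slow to come up.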

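The clusters should be started in dependency order, with the root of the dependency chain first: the ZooKeeper cluster, then the Kafka cluster, and finally the orderer cluster.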
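One way to enforce this order in a script is to wait until every node of one tier accepts TCP connections before starting the next tier. The following Python sketch uses only the standard library. The helper names are hypothetical, the host names are taken from the extra_hosts entries above, and a successful TCP connect only shows that the port is open, not that the service is fully ready.

```python
import socket
import time


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def wait_for_tier(endpoints, deadline_seconds=60.0, poll_seconds=1.0):
    """Block until all (host, port) endpoints are reachable or time runs out."""
    deadline = time.monotonic() + deadline_seconds
    pending = list(endpoints)
    while pending:
        # Keep only the endpoints that still refuse connections.
        pending = [ep for ep in pending if not port_open(*ep)]
        if not pending:
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_seconds)
    return True


# Start-up order from the text: ZooKeeper, then Kafka, then the orderers.
TIERS = [
    ("zookeeper", [("zookeeper1", 2181), ("zookeeper2", 2181), ("zookeeper3", 2181)]),
    ("kafka", [("kafka1", 9092), ("kafka2", 9092), ("kafka3", 9092), ("kafka4", 9092)]),
]
```

A deployment script could call `wait_for_tier(endpoints)` for each entry of `TIERS` in turn and start the orderers only after both calls return True.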

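5.4.1 Starting the ZooKeeper cluster

Upload docker-zookeeper1.yaml, docker-zookeeper2.yaml, and docker-zookeeper3.yaml to a location of your choice on the ZK1, ZK2, and ZK3 servers respectively.

Note:

The ZooKeeper servers do not need Go or a Fabric environment. For clarity and ease of operation, you can still create the directory /home/zyp/development/go/src/github.com/hyperledger/fabric/aberic on them, mirroring the file layout used in the Fabric network deployment.

After uploading, run the following commands on ZK1, ZK2, and ZK3 respectively:

docker-compose -f docker-zookeeper1.yaml up # on ZK1

docker-compose -f docker-zookeeper2.yaml up # on ZK2

docker-compose -f docker-zookeeper3.yaml up # on ZK3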

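5.4.2 Starting the Kafka cluster

As above, create the same directory (…/fabric/aberic) and upload the four configuration files docker-kafka1.yaml through docker-kafka4.yaml to the Kafka1, Kafka2, Kafka3, and Kafka4 servers respectively.

Run the corresponding start command on each server:

docker-compose -f docker-kafka1.yaml up # on the Kafka1 server

docker-compose -f docker-kafka2.yaml up # on the Kafka2 server

docker-compose -f docker-kafka3.yaml up # on the Kafka3 server

docker-compose -f docker-kafka4.yaml up # on the Kafka4 server

A problem you may encounter:

"OpenJDK 64-Bit Server VM warning: INFO: os::commit_memory"

Kafka's default heap setting (KAFKA_HEAP_OPTS) is 1 GB. If your test server has less than 1 GB of memory, the error above is reported.

Solution:

Add the following parameter to the environment variables in the Kafka configuration file:

- KAFKA_HEAP_OPTS=-Xmx256M -Xms128M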

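5.4.3 Starting the orderer cluster

As above, upload the configuration files docker-orderer0.yaml, docker-orderer1.yaml, and docker-orderer2.yaml to the aberic directory on the Orderer0, Orderer1, and Orderer2 servers respectively (create the directory yourself).

Upload the genesis.block file generated in Chapter 3, "Deploying a single-host multi-node network" (shown in the figure below), to the …/aberic/channel-artifacts directory on each orderer server (create the directory manually if it does not exist).

Also upload the crypto-config.yaml configuration file, and upload the entire ordererOrganizations folder from the crypto-config folder to the …/aberic/crypto-config directory on each orderer server (create this directory manually).

All of the required files were generated and configured in Chapter 3, "Deploying a single-host multi-node network", so they can simply be copied over.

Once the files are in place, run the start command on each server:

docker-compose -f docker-orderer0.yaml up -d # start command for Orderer0

docker-compose -f docker-orderer1.yaml up -d # start command for Orderer1

docker-compose -f docker-orderer2.yaml up -d # start command for Orderer2

When an orderer starts, it creates a system channel named testchainid. The ordering service has started successfully once the following log lines appear: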

2020-02-02 18:58:44.571 CST [orderer.consensus.kafka] startThread -> INFO 011 [channel: testchainid] Channel consumer set up successfully

2020-02-02 18:58:44.571 CST [orderer.consensus.kafka] startThread -> INFO 012 [channel: testchainid] Start phase completed successfully
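Checking for these lines can be automated. The Python sketch below scans log text for the "Start phase completed successfully" message and extracts the channel name. It assumes the log layout shown above, and `started_channels` is a hypothetical helper name. The log text could be obtained, for example, from the output of `docker logs orderer0.example.com`.

```python
import re

# Matches e.g. "[channel: testchainid] Start phase completed successfully"
START_OK = re.compile(
    r"\[channel: (?P<channel>[^\]]+)\] Start phase completed successfully"
)


def started_channels(log_text):
    """Return the set of channels whose Kafka start phase completed."""
    return {m.group("channel") for m in START_OK.finditer(log_text)}


sample = """\
2020-02-02 18:58:44.571 CST [orderer.consensus.kafka] startThread -> INFO 011 [channel: testchainid] Channel consumer set up successfully
2020-02-02 18:58:44.571 CST [orderer.consensus.kafka] startThread -> INFO 012 [channel: testchainid] Start phase completed successfully
"""
print(started_channels(sample))  # {'testchainid'}
```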

5.5 Testing the cluster environment

Prepare the docker-peer0org1.yaml configuration file:

# Copyright IBM Corp. All Rights Reserved.

#

# SPDX-License-Identifier: Apache-2.0

#

version: '2'

services:

couchdb:

container_name: couchdb

image: hyperledger/fabric-couchdb

# Comment/Uncomment the port mapping if you want to hide/expose the CouchDB service,

# for example map it to utilize Fauxton User Interface in dev environments.

ports:

- "5984:5984"

ca:

container_name: ca

image: hyperledger/fabric-ca

environment:

- FABRIC_CA_HOME=/etc/hyperledger/fabric-ca-server

- FABRIC_CA_SERVER_CA_NAME=ca

- FABRIC_CA_SERVER_TLS_ENABLED=false

- FABRIC_CA_SERVER_TLS_CERTFILE=/etc/hyperledger/fabric-ca-server-config/ca.org1.example.com-cert.pem

- FABRIC_CA_SERVER_TLS_KEYFILE=/etc/hyperledger/fabric-ca-server-config/dbb4538c1dacb57bdca5d39bdaf0066a98826bebb47b86a05d18972db5876d1e_sk

ports:

- "7054:7054"

command: sh -c 'fabric-ca-server start --ca.certfile /etc/hyperledger/fabric-ca-server-config/ca.org1.example.com-cert.pem --ca.keyfile /etc/hyperledger/fabric-ca-server-config/dbb4538c1dacb57bdca5d39bdaf0066a98826bebb47b86a05d18972db5876d1e_sk -b admin:adminpw -d'

volumes:

- ./crypto-config/peerOrganizations/org1.example.com/ca/:/etc/hyperledger/fabric-ca-server-config

peer0.org1.example.com:

container_name: peer0.org1.example.com

image: hyperledger/fabric-peer

environment:

- CORE_LEDGER_STATE_STATEDATABASE=CouchDB

- CORE_LEDGER_STATE_COUCHDBCONFIG_COUCHDBADDRESS=172.31.159.129:5984

- CORE_PEER_ID=peer0.org1.example.com

- CORE_PEER_NETWORKID=aberic

- CORE_PEER_ADDRESS=peer0.org1.example.com:7051

- CORE_PEER_CHAINCODEADDRESS=peer0.org1.example.com:7052

- CORE_PEER_CHAINCODELISTENADDRESS=peer0.org1.example.com:7052

- CORE_PEER_GOSSIP_EXTERNALENDPOINT=peer0.org1.example.com:7051

- CORE_PEER_LOCALMSPID=Org1MSP

- CORE_VM_ENDPOINT=unix:///host/var/run/docker.sock

# the following